Researchers at the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a robot system that a user can direct through complex tasks and movements using only hand gestures and brain signals.

Robot That Learns From Humans

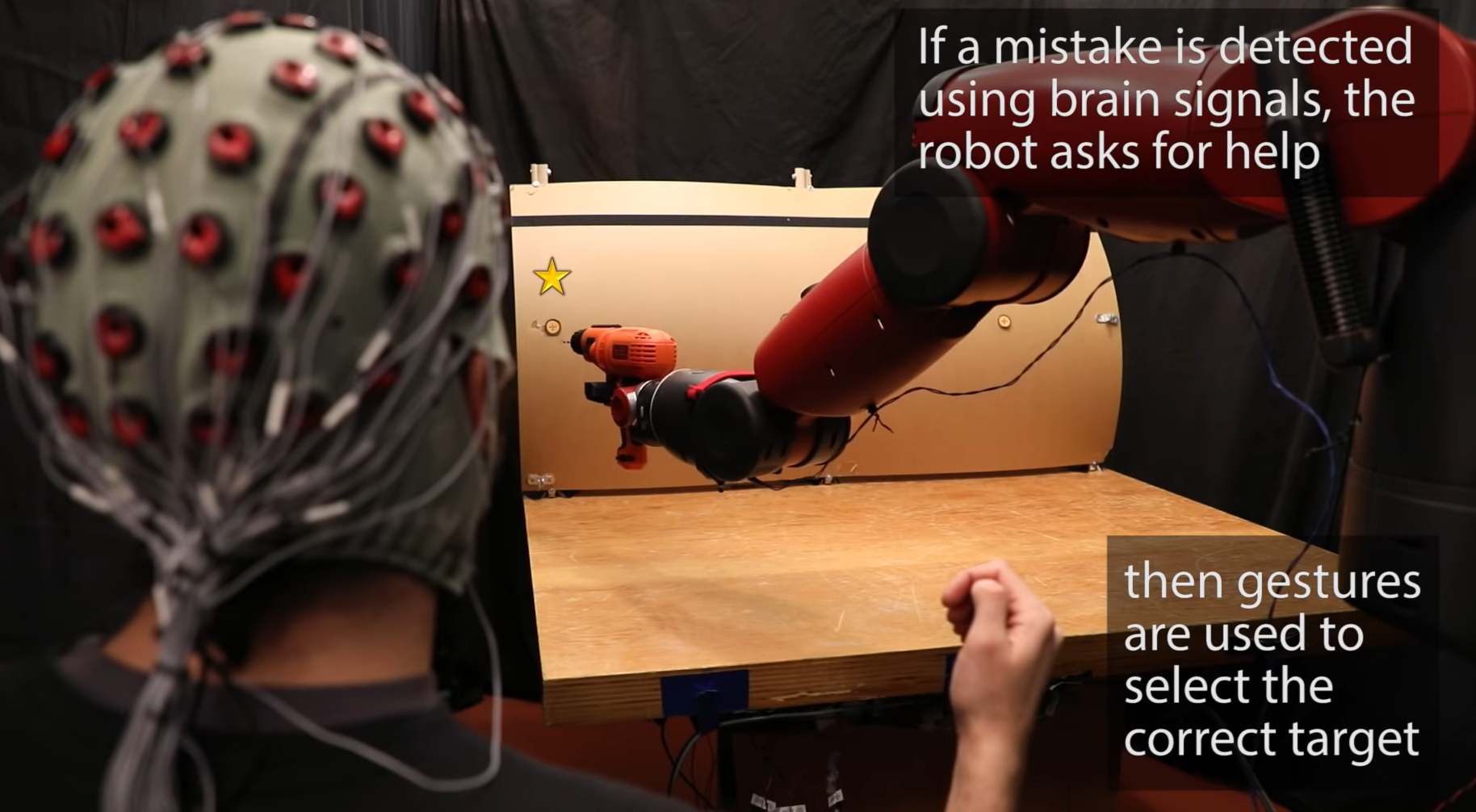

This week, MIT showcased a robot that can be controlled with brainwaves and hand gestures, without the user being in direct contact with the hardware. As seen in the video provided by MIT, the system, demonstrated on a Baxter humanoid robot from Rethink Robotics, lets a user supervise the robot as it carries out a task and instantly correct its mistakes with brain signals and finger movements.

The MIT researchers’ motivation is to let one person lead and supervise a team of robots performing tasks that are simple and undemanding but time-consuming for humans.

Through machine learning (ML) and artificial intelligence (AI), the robot can learn from its mistakes under the guidance of its operator and avoid repeating them. For instance, as the accompanying photograph demonstrates, a human can teach the robot to carry out a task differently; once the corrected movement is registered, the robot adopts it and reproduces the movement the human showed it.

Daniela Rus, director of MIT CSAIL and supervisor of the project, said that the new brain signal-driven system substantially improves natural human-robot interaction and communication. Because it combines machine learning with direct feedback from a human, the system can carry out commands with specificity and accuracy, which makes it applicable across a wide range of industries.

“This work combining EEG and EMG feedback enables natural human-robot interactions for a broader set of applications than we’ve been able to do before using only EEG feedback. By including muscle feedback, we can use gestures to command the robot spatially, with much more nuance and specificity,” Rus explained.

Joseph DelPreto, a PhD candidate at MIT and the lead researcher of the project, said that the merit of the system is its accessibility: users do not have to be trained to think in a certain way to direct the robot. They can think as they normally would, because the system monitors brain activity for “error-related potentials” (ErrPs), signals that the brain produces naturally when a person notices a mistake. When an ErrP is detected, the robot stops so that the user can correct it with a hand gesture.

“What’s great about this approach is that there’s no need to train users to think in a prescribed way. The machine adapts to you, and not the other way around. By looking at both muscle and brain signals, we can start to pick up on a person’s natural gestures along with their snap decisions about whether something is going wrong. This helps make communicating with a robot more like communicating with another person,” said DelPreto.
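The interplay of brain and muscle signals described above can be illustrated with a minimal Python sketch. It is not MIT's implementation: the `Robot` class, the threshold values, and the idea that an upstream classifier has already reduced the EEG and EMG recordings to simple scores are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
ERRP_THRESHOLD = 0.5      # EEG score above this counts as an error signal
GESTURE_THRESHOLD = 0.3   # minimum left/right EMG difference for a gesture


@dataclass
class Robot:
    """Toy robot that aims at one of several numbered targets."""
    target: int
    num_targets: int = 3
    halted: bool = False

    def halt(self) -> None:
        self.halted = True

    def shift_target(self, direction: int) -> None:
        # Move one target left (-1) or right (+1), clamped to the valid range.
        self.target = max(0, min(self.num_targets - 1, self.target + direction))
        self.halted = False


def errp_detected(eeg_score: float) -> bool:
    """Assume a classifier has already scored the EEG window for an ErrP."""
    return eeg_score > ERRP_THRESHOLD


def gesture_direction(emg_left: float, emg_right: float) -> int:
    """Return -1 (left), +1 (right), or 0 when no clear gesture is present."""
    diff = emg_right - emg_left
    if abs(diff) < GESTURE_THRESHOLD:
        return 0
    return 1 if diff > 0 else -1


def supervise(robot: Robot, eeg_score: float,
              emg_left: float, emg_right: float) -> Robot:
    """Halt on a detected ErrP, then let a gesture pick the new target."""
    if errp_detected(eeg_score):
        robot.halt()
        direction = gesture_direction(emg_left, emg_right)
        if direction != 0:
            robot.shift_target(direction)
    return robot
```

In this sketch the robot keeps working when no error signal is present, pauses when one appears, and resumes only after a clear gesture tells it where to go instead. That mirrors the division of labor Rus and DelPreto describe: the brain signal flags that something is wrong, and the muscle signal says how to fix it.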

Like Communicating with Another Person

As DelPreto explained, the system adapts to its human operator and not the other way around. That adaptability could make it usable in a variety of settings, from factories to intricate manufacturing processes such as watchmaking.
