I was reading the research paper https://arxiv.org/pdf/1703.08774.pdf and found something unexpected. I decided to test it myself and was genuinely surprised by the results.

https://arxiv.org/pdf/1703.08774.pdf Research paper

The paper, ‘Who Said What: Modeling Individual Labelers Improves Classification’, is by Melody Y. Guan, Varun Gulshan, Andrew M. Dai and the ‘Godfather of ML’, Geoffrey E. Hinton.

Even if the teacher is wrong, you can still top the exam! 😛

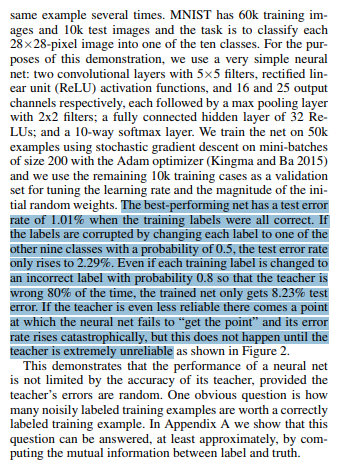

This paper reports some results that not everyone will readily accept without proof. Some of them are:

- Train MNIST with 50% wrong labels and still get 97% accuracy.

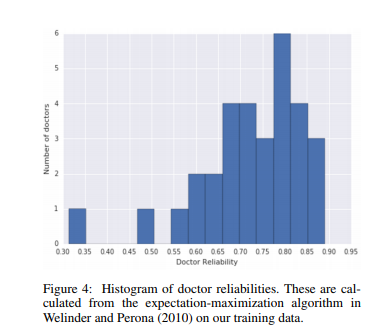

- More labelers do not necessarily mean more accurately labeled data.

Reliability of 3 doctors is highest compared to 6 doctors!

In this article we’ll verify that training on MNIST with 50% noisy labels does give 97%+ accuracy. We will use Deep Learning Studio by Deepcognition.ai to make the process a bit faster.

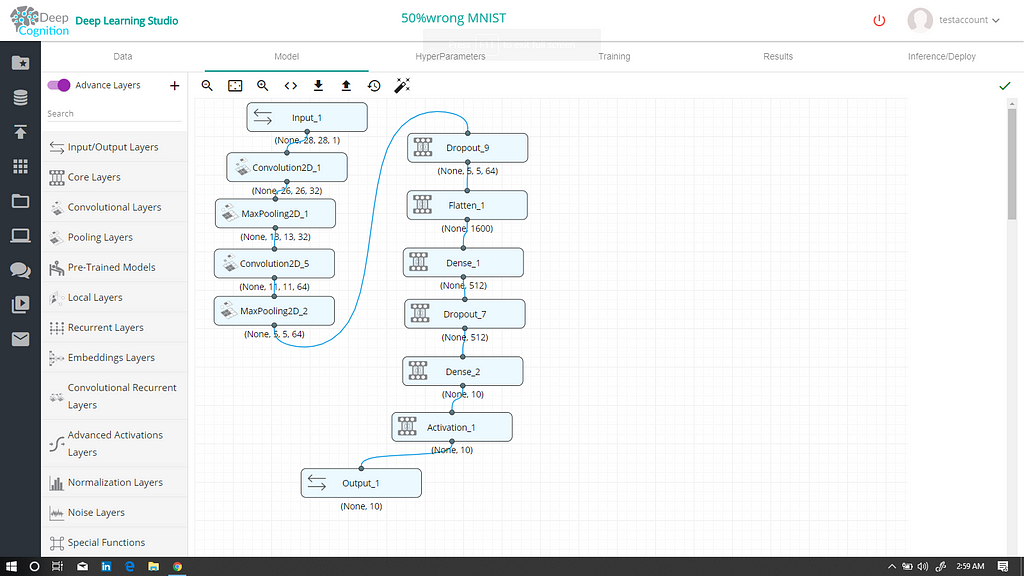

First, let me show you the architecture of our Deep Learning model for MNIST. We’ll use the same architecture twice: first trained with the true labels, then with noisy labels, so we can compare the accuracy.

Model architecture

If you are new to DeepCognition, see my article for a basic intuition of how to use Deep Learning Studio.

Iris genus classification|DeepCognition| Azure ML studio

Let’s dive in now!

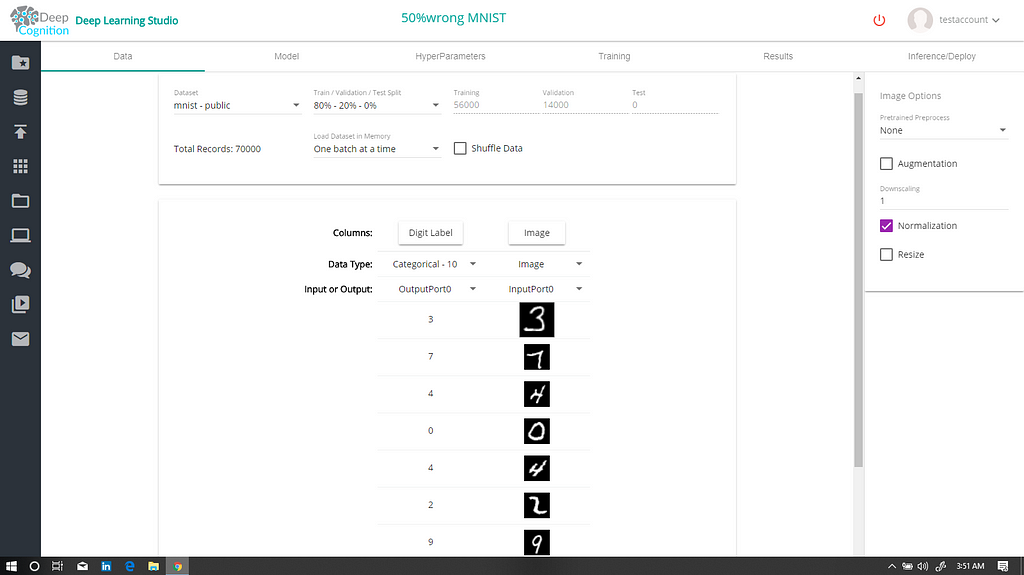

Classification of MNIST with true labels

Data

The correctly labeled data is publicly available in Deep Learning Studio. Just select mnist-public from the datasets as shown below.

You can see that all the labels are correct

Training results

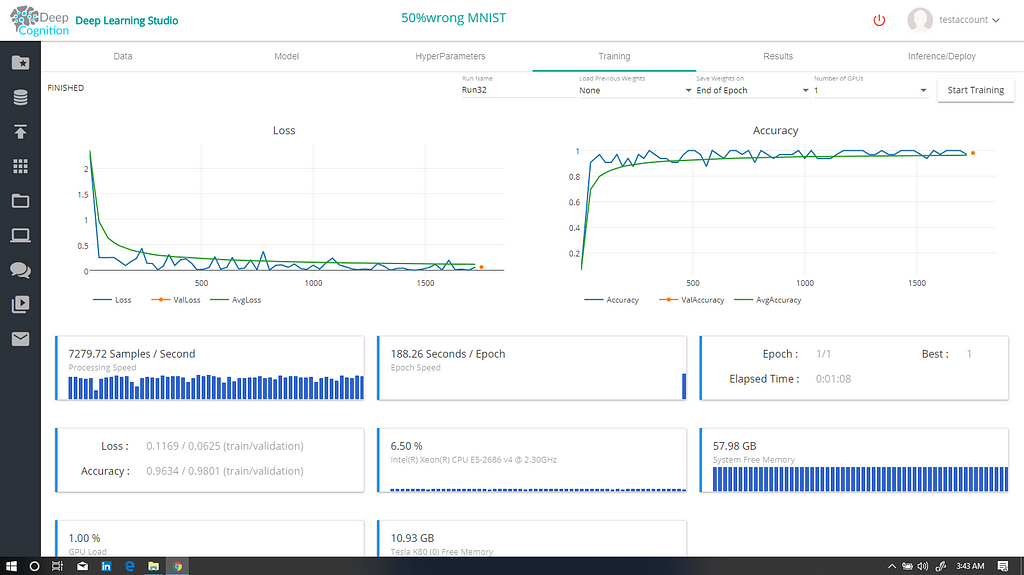

Our model achieved an accuracy of 98.01% on the validation set when trained with correct labels.

Training results with true labels

Let’s experiment with noisy labels

Data

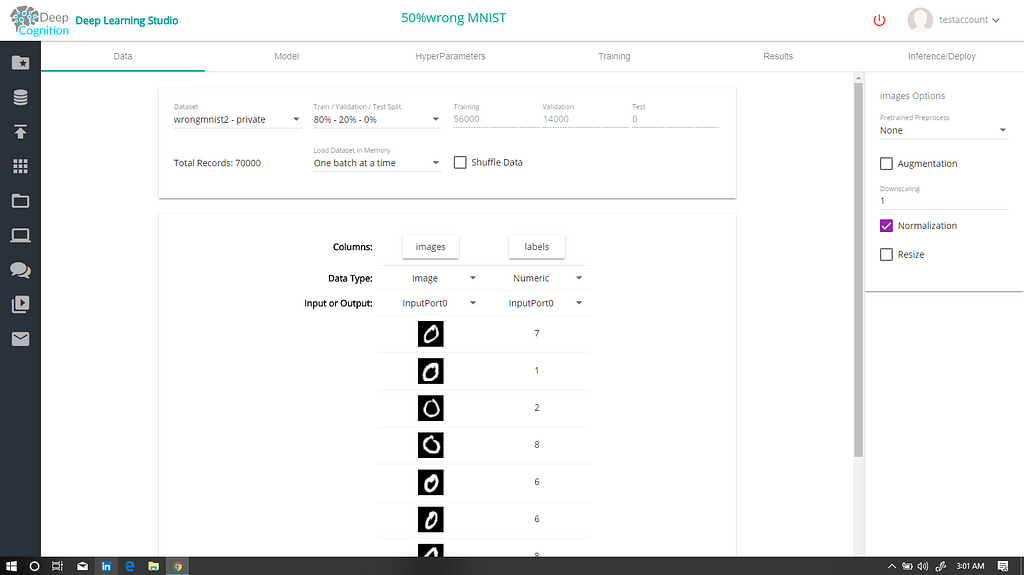

Now we need data in which 50% of the labels are wrong. You can download it from here. After downloading the data, upload it to your DeepCognition account and select it in the Data tab. The upload may take around 28 minutes, so be patient.
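If you would rather build such a dataset yourself, here is a minimal sketch of one way to do it with NumPy. It is not the script used to create the download above. The function name `corrupt_labels`, the random stand-in labels, and the choice to pick each wrong label uniformly from the other nine classes are all assumptions made for illustration. What it does take from this article is that 50% of the images in each class get a wrong label, and that only the first 80% of the rows (the training portion) are touched.

```python
import numpy as np

def corrupt_labels(labels, noise_fraction=0.5, num_classes=10, seed=0):
    """Return a copy of `labels` in which `noise_fraction` of every class
    is replaced by a different class (hypothetical helper, uniform noise)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    noisy = labels.copy()
    for c in range(num_classes):
        idx = np.flatnonzero(labels == c)            # rows whose true class is c
        n_flip = int(round(noise_fraction * len(idx)))
        flip_idx = rng.choice(idx, size=n_flip, replace=False)
        # An offset in 1..num_classes-1 guarantees the new label differs from c.
        offsets = rng.integers(1, num_classes, size=n_flip)
        noisy[flip_idx] = (c + offsets) % num_classes
    return noisy

# Stand-in labels; with real data you would load the MNIST label column here.
true_labels = np.random.default_rng(1).integers(0, 10, size=10_000)

# Corrupt only the first 80% (training rows); the last 20% stay correct.
n_train = int(0.8 * len(true_labels))
labels = true_labels.copy()
labels[:n_train] = corrupt_labels(true_labels[:n_train])

train_noise = (labels[:n_train] != true_labels[:n_train]).mean()
print(f"wrong labels in training portion: {train_noise:.1%}")
print("held-out labels untouched:", (labels[n_train:] == true_labels[n_train:]).all())
```

Because the corrupted rows come first and the clean rows last, the row order matters: the 80%-20% split only keeps the clean rows for validation if the data is not shuffled.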

You can see that labels are wrong

Note: I mislabeled 50% of the images in each class, but only in the training data. The testing data must keep its correct labels. I made a split of 80%-20%, so when you select this dataset:

- Choose only 80%-20%-0% or 80%-0%-20% for the train-validation-test split in the Data tab.
- Don’t shuffle the data, as that would mix the correctly labeled 20% into the noisy 80%.
- Don’t forget to choose ‘Normalization’ of images, otherwise the loss function won’t converge (even if all labels are correct).

Training

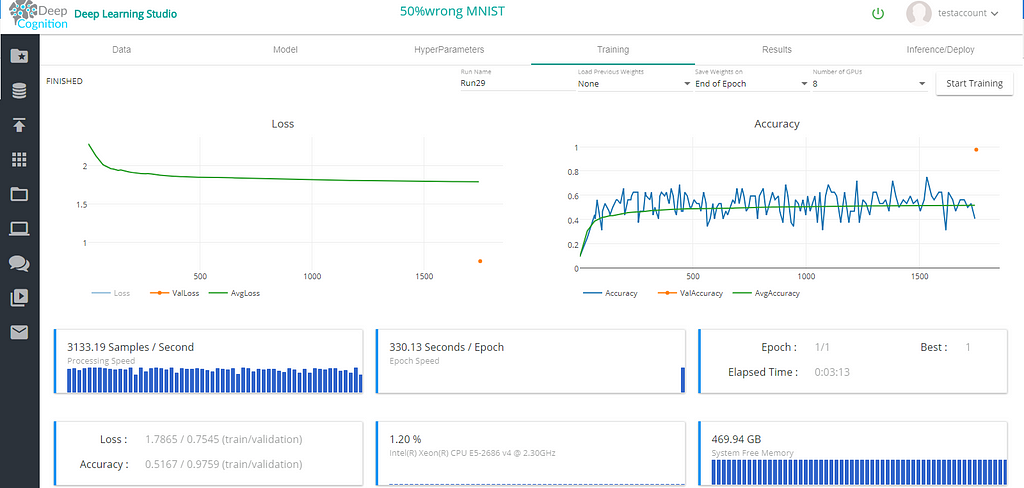

After training, our model achieved only 51.67% training accuracy, but 97.59% validation accuracy!

So yes, I confirmed that even with 50% wrong labels, we can still get high accuracy.

Training results with 50% noisy labels
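One way to make sense of these two numbers is a small simulation. This is a sketch under an assumption the article does not state: that the wrong labels are spread roughly evenly over the other nine classes. The sample size and variable names are arbitrary stand-ins. Under that assumption, the true class is still by far the most common label within each class (about 50% against about 5.6% for each wrong class), so a model can still learn it. A model that always predicts the true class then agrees with the noisy training labels only about half the time, which is in line with a training accuracy near 50% alongside a high validation accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes, n = 10, 50_000          # arbitrary stand-in sizes

# Simulated true labels, then flip each one with probability 0.5
# to a different class chosen uniformly (assumed noise model).
true_labels = rng.integers(0, num_classes, size=n)
flip = rng.random(n) < 0.5
offsets = rng.integers(1, num_classes, size=n)
noisy_labels = np.where(flip, (true_labels + offsets) % num_classes, true_labels)

# confusion[t, k] = number of samples with true class t and noisy label k
confusion = np.zeros((num_classes, num_classes), dtype=int)
np.add.at(confusion, (true_labels, noisy_labels), 1)

# Within every true class, is the most frequent noisy label still the true one?
most_common = confusion.argmax(axis=1)
plurality_holds = bool((most_common == np.arange(num_classes)).all())

# A classifier that always outputs the true class, scored against noisy labels.
agreement_with_noisy = float((true_labels == noisy_labels).mean())

print("true class is the most common label in every class:", plurality_holds)
print(f"accuracy of a perfect classifier on noisy labels: {agreement_with_noisy:.1%}")
```

If the noise were not spread out like this, for example if every wrong label in a class pointed to one single other class, the true label would no longer stand out and this argument would not apply.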

This was awesome! I encourage everyone to replicate these results themselves!

Thanks for reading!

Happy Deep Learning!

CNNs with Noisy Labels! using DeepCognition.|Research paper| was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
