Yesterday Apple kicked off its annual Worldwide Developers Conference (WWDC), and the keynote held some big surprises for its ARKit platform.

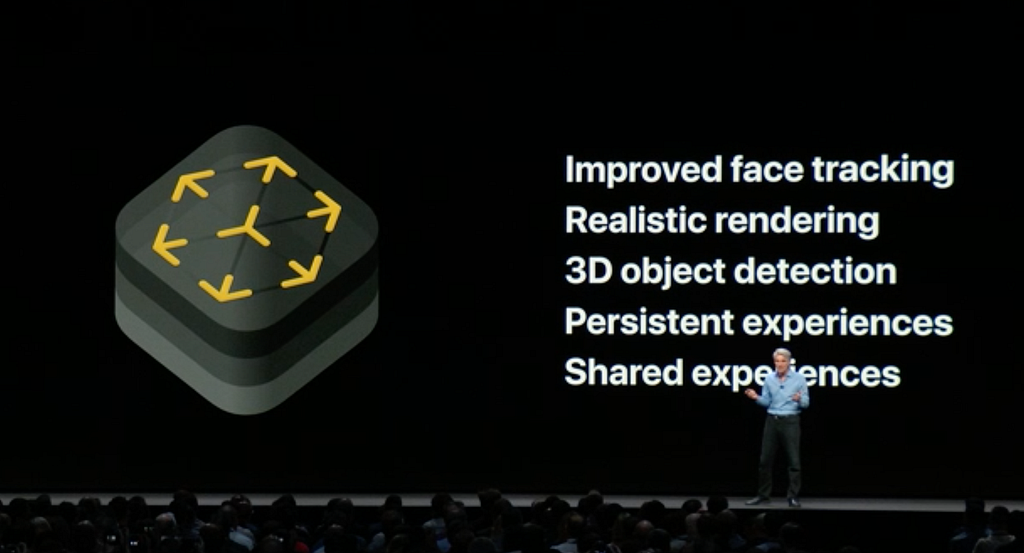

Among other improvements, ARKit 2 adds the following new functionality:

- 3D object detection

- Persistent experiences

- Shared experiences

We’ve all been hearing about the ARCloud lately, with companies like Google pushing their services at this year’s Google I/O, and smaller startups like 6D.ai and Placenote trying to wedge themselves into the space as the authoritative platform. Apple has spent the last few years working quietly (its normal modus operandi), first on a framework for detecting surfaces, and now on detecting objects and on serializing and sharing the recognized world. Let’s take a closer look at each of these new features.

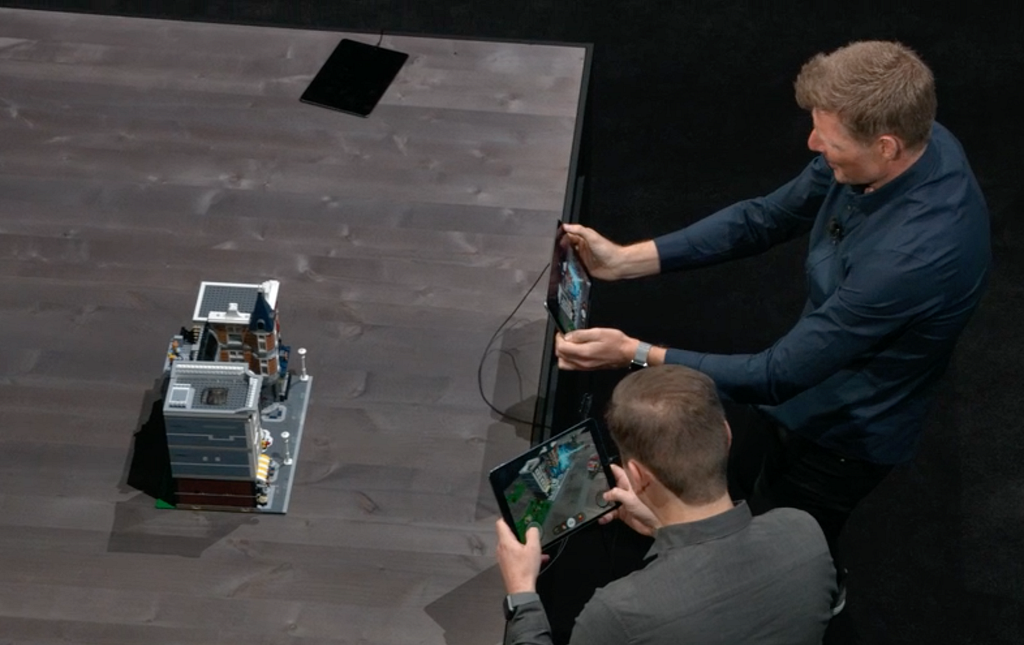

Detecting Objects

As Lego demonstrated in the keynote, ARKit is now able to detect physical objects. This works by first training a model (a machine learning model, not a 3D model), presumably using the Vision and Machine Learning facilities that Apple has developed under the hood. You scan the object to be detected by pointing your phone camera at it and moving around all of the areas that are to be recognized. This allows the framework to analyze and save the feature points that it finds on your object. The framework can then recognize the same feature points in a later session by comparing the two datasets.

To train the machine learning model, Apple is providing the source code to a full-featured app that they wrote to simplify the process of scanning the object. From there, the process is simple: load the model into your app, start an object detection session, and when the object is detected you’re given the transforms you need for laying out your world around the object.
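The detection flow described above can be sketched roughly as follows. This is a minimal sketch, not Apple's sample code: it assumes iOS 12 or later, that the scanned reference objects were added to an asset catalog resource group called "ScannedObjects" (a hypothetical name), and that a view controller owns the `ARSCNView`. The class name and the marker sphere are illustrative only.

```swift
import UIKit
import ARKit
import SceneKit

// Hypothetical view controller; "ScannedObjects" is an assumed asset catalog group name.
final class ObjectDetectionViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)

        let configuration = ARWorldTrackingConfiguration()
        // Load the previously scanned objects; this returns nil if the group is missing.
        if let objects = ARReferenceObject.referenceObjects(inGroupNamed: "ScannedObjects",
                                                            bundle: nil) {
            configuration.detectionObjects = objects
        }
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // ARKit adds an ARObjectAnchor when it recognizes one of the detection objects.
    // The node passed in is already positioned with the anchor's transform.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let objectAnchor = anchor as? ARObjectAnchor else { return }

        // Place a small marker sphere at the center of the detected object.
        let marker = SCNNode(geometry: SCNSphere(radius: 0.02))
        marker.simdPosition = objectAnchor.referenceObject.center
        node.addChildNode(marker)
    }
}
```

Any virtual content added as a child of the anchor's node follows the detected object's position and orientation, which is what lets you lay out a scene around the physical object.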

Virtual content overlaid on top of real object

Persistent Experiences

Along with the new detection capabilities, the other major feature is the ability to persist the recognized world. That is, once you detect enough feature points in the area that you’re scanning, you’re able to save the data that describes the world. You can then reload the world, not just on the same device but on any other device as well, allowing for a quick startup when looking at the same area at a later time.

Save a world map when your app becomes inactive, then restore it the next time your app launches in the same physical environment. -Apple ARKit docs

This is a really important feature. It allows for serializing the current scene, feature points, anchors and all, which makes restarting a session nearly instantaneous. It is also used by the shared experience functionality, whereby the same world can be scanned once and used by multiple users, as described in the next section.
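Saving and restoring a world could look something like the following. This is a hedged sketch assuming iOS 12 or later; the function names, the error type, and the file name `worldMap.arworldmap` are hypothetical, while `getCurrentWorldMap`, `ARWorldMap`, and `initialWorldMap` are the ARKit pieces doing the actual work.

```swift
import Foundation
import ARKit

// Hypothetical error type for this sketch.
enum WorldMapError: Error {
    case unreadable
}

// Assumed storage location; any writable file URL would do.
let worldMapURL = FileManager.default
    .urls(for: .documentDirectory, in: .userDomainMask)[0]
    .appendingPathComponent("worldMap.arworldmap")

// Ask the running session for its current world map and archive it to disk.
func saveWorldMap(from session: ARSession, to url: URL) {
    session.getCurrentWorldMap { worldMap, error in
        guard let worldMap = worldMap else {
            // ARKit may not have mapped enough of the environment yet.
            print("No world map available: \(error?.localizedDescription ?? "unknown error")")
            return
        }
        do {
            let data = try NSKeyedArchiver.archivedData(withRootObject: worldMap,
                                                        requiringSecureCoding: true)
            try data.write(to: url, options: .atomic)
        } catch {
            print("Could not save world map: \(error)")
        }
    }
}

// Unarchive a saved world map and restart the session from it.
func restoreSession(_ session: ARSession, from url: URL) throws {
    let data = try Data(contentsOf: url)
    guard let worldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                from: data) else {
        throw WorldMapError.unreadable
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.initialWorldMap = worldMap
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```

Following the docs quote above, a natural place to call `saveWorldMap` is when the app becomes inactive, with `restoreSession` called on the next launch in the same physical environment.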

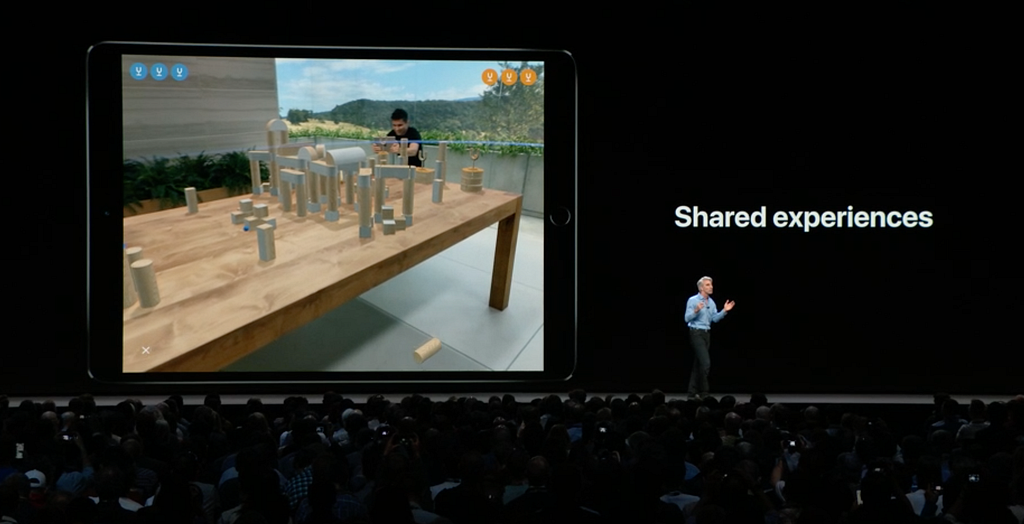

Shared Experiences

I’m currently writing this while watching a match between two conference-goers in a game that Apple created to showcase multiuser AR. SwiftShot is a take on a tower battle, where each player has to knock down the other’s towers using AR slingshots that they are controlling with iPads. The players are on each side of a large wooden table, which is holding the virtual content. The match is simulcast via a third iPad that a staff member is holding, acting as the camera person. There is a large television showing the match, which includes the players and the virtual content. The play area is rock solid on the table, showing the advanced tracking capabilities of ARKit.

Craig Federighi on shared AR experiences

This is a great example of a virtual world being shared by multiple participants. They are both seeing the same world, watching their actions affect the scene objects in real time. This opens up amazing new possibilities for games and experiences, including being able to collaborate and work on the same subject together.
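Under the hood, sharing builds on the same world map used for persistence: one device captures its map and sends it to the others. The sketch below is one possible way to do that, assuming iOS 12 or later and an already connected MultipeerConnectivity `MCSession`; the function names are hypothetical, and this is not how SwiftShot itself is necessarily implemented.

```swift
import Foundation
import ARKit
import MultipeerConnectivity

// Capture the current world map and send it to every connected peer.
func sendWorldMap(from arSession: ARSession, over peerSession: MCSession) {
    arSession.getCurrentWorldMap { worldMap, error in
        guard let worldMap = worldMap else {
            print("No world map available: \(error?.localizedDescription ?? "unknown error")")
            return
        }
        guard !peerSession.connectedPeers.isEmpty else { return }
        do {
            let data = try NSKeyedArchiver.archivedData(withRootObject: worldMap,
                                                        requiringSecureCoding: true)
            try peerSession.send(data, toPeers: peerSession.connectedPeers, with: .reliable)
        } catch {
            print("Could not send world map: \(error)")
        }
    }
}

// Call this with data received from a peer, for example from the
// MCSessionDelegate session(_:didReceive:fromPeer:) callback.
func receiveWorldMap(_ data: Data, into arSession: ARSession) {
    do {
        guard let worldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                    from: data) else {
            return
        }
        // Restart tracking from the sender's map so both devices share one coordinate space.
        let configuration = ARWorldTrackingConfiguration()
        configuration.initialWorldMap = worldMap
        arSession.run(configuration, options: [.resetTracking, .removeExistingAnchors])
    } catch {
        print("Could not decode world map: \(error)")
    }
}
```

Once both devices are tracking the same map, anchors and game state still need to be exchanged separately, for instance by sending archived `ARAnchor` objects over the same peer session.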

With two devices tracking the same world map, you can build a networked experience where both users can see and interact with the same virtual content. -Apple ARKit docs

What all this means

Apple came out of nowhere with ARKit, and they are quickly iterating on the framework, even going as far as updating to ARKit 1.5 during an iOS point release, which included static image and vertical plane detection.

“Simply put, we believe augmented reality is going to change the way we use technology forever,” — Apple CEO, Tim Cook

With ARKit 2.0 they are raising the bar and moving quicker than some startups, releasing technology that may render many of them obsolete even before they launch. This train seems unstoppable, with Apple making cohesive frameworks that open up new possibilities for developers to create amazing experiences.

Daniel Wyszynski is a developer who’s worked on more platforms and languages than he cares to admit to. He believes in building products that create memorable user experiences. To Dan, the users are first, the platforms are second. Follow Dan on Medium or Twitter to hear more from him. Also check out the s23NYC: Engineering blog, where a lot of great content by Nike’s Digital Innovation team gets posted.

Author’s Note: Views are my own and do not necessarily represent those of my employer

The Apple AR Train was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
