
As the Duke of Albany put it, “Striving to better, oft we mar what’s well”. While tokens are perfectly suited to improving operations and multiplying the valuation of your existing business, most entrepreneurs prefer to push their luck by inventing some vague future product on which to deploy a token architecture. There’s nothing wrong with dreaming big, but, as you will see below, in the case of crypto tokens the trip to the future should be undertaken in large alliances of projects.

Articles on token taxonomy usually classify tokens by the kind of investment they imply, from the investor’s perspective. There’s little to be found on classifying the token features that are meant to make your business more efficient without questionable claims of ever-growing resale value. Let’s fix this.

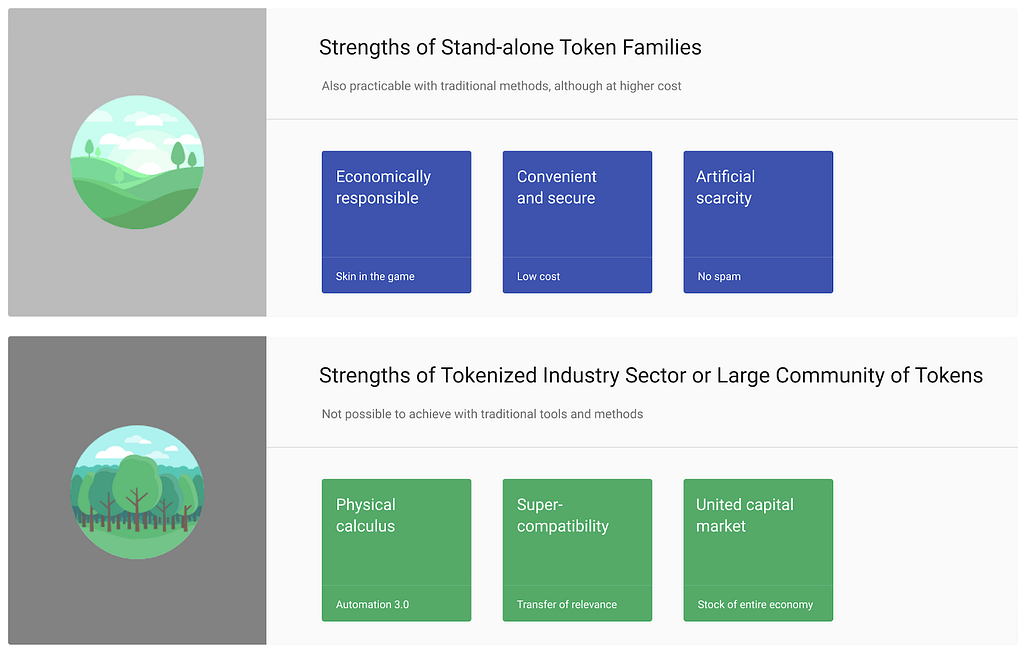

One separate family of tokens can align incentives and make holders act in economically responsible ways (curation markets, for example). A stand-alone token issuance is a safe, convenient, and inexpensive way to transfer value, tags, and operating signals (think of supply chain optimization as the most general use case). Anyone can emit a token in a matter of minutes and create scarcity (collectibles). There’s probably nothing new here for the reader.

However, the tremendous, unforeseen power that larger communities of tokens can acquire rarely makes it onto the marquee.

Notably, while the stand-alone qualities of crypto tokens can be replicated with incumbent instruments (albeit at a much greater cost), none of the united-token phenomena described below can be observed until the world is substantially tokenized. No experimental setup can help us envision this future clearly, so please take our reasoning with a grain of salt. The imprecision of the picture is well compensated by its grandeur, though.

We suggest exploring the following three powers of the near-future tokenized world.

- Physical Calculus. Just as grains of sand in an hourglass represent moments of time, crypto tokens can create an “analog”, robust, self-sufficient, and often psychologically preferable form of calculus and value transfer.

- Super-compatibility. Seamless token swaps on soon-to-be-ubiquitous decentralized exchanges are only a small part of the anticipated tectonic shift; token compatibility will create a direct flow of relevance, something people have never experienced before. It will probably feel as miraculous as reading minds.

- United Capital Markets. Each traditional company issues and sells its own equity. The only things that meaningfully unite different stocks are indexes and the mutual funds that track them. By contrast, investment tokens can belong to a united pool of projects. The illustration may not be very accurate, but try to imagine a world where a company registered in the European Union has the right to emit euros directly into circulation whenever it creates auditable value.

Physical Calculus

When Alice passes a token to Bob, they perform one elementary act of physical calculus. This event is “fundamentally local”: it takes only Alice, Bob, the token, and the transaction rules that Alice, Bob, and the token carry within themselves. No one and nothing else needs to be involved (of course, we treat distributed token-carrying substrates, and the bridges between them, as free-access, self-maintaining, ownerless entities).

The great complexity of the physical phenomena around us is the result of endless iterations of similar “local acts”: circles on the water spread without any central dispatcher. We know that, when it comes to human-made tools, many people tend to picture repeated iterations as Rube Goldberg machines, contraptions deliberately designed to perform a simple task in an indirect and overly complicated way. This is simply not true; that picture is just a product of meme culture.

Junctions in tokenized interactions may be very smart. Each time Alice passes a token to Bob, she may undertake a complex analysis that only humans are capable of. Moreover, token transactions open the door to previously impossible kinds of automation. For example, Alice sends a payment token to Bob, but it bounces back because Bob doesn’t hold a single “good man” token (issued by George).
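The “good man” rule can be pictured as a conditional transfer. The sketch below is a minimal, in-memory illustration under our own assumptions: `Wallet`, `transfer`, the `required` parameter, and the `PAY` token are hypothetical names, and a real token system would enforce such a rule in the token’s own transaction logic rather than in a Python script.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

TokenId = Tuple[str, str]  # (token name, issuer)


@dataclass
class Wallet:
    """A holder's token balances, keyed by (token name, issuer)."""
    owner: str
    balances: Dict[TokenId, int] = field(default_factory=dict)

    def balance(self, token: TokenId) -> int:
        return self.balances.get(token, 0)


def transfer(sender: Wallet, recipient: Wallet, token: TokenId, amount: int,
             required: Optional[TokenId] = None) -> bool:
    """Move `amount` of `token` from sender to recipient (a purely local act).

    If `required` is given, the payment bounces (nothing moves and False is
    returned) unless the recipient already holds at least one `required` token.
    """
    if amount <= 0 or sender.balance(token) < amount:
        return False  # invalid amount or insufficient balance
    if required is not None and recipient.balance(required) < 1:
        return False  # condition not met: the payment "returns" to the sender
    sender.balances[token] = sender.balance(token) - amount
    recipient.balances[token] = recipient.balance(token) + amount
    return True


PAY = ("PAY", "Bank")              # hypothetical payment token
GOOD_MAN = ("good man", "George")  # the reputation token from the example

alice = Wallet("Alice", {PAY: 100})
bob = Wallet("Bob")

first = transfer(alice, bob, PAY, 10, required=GOOD_MAN)   # Bob has no "good man"
bob.balances[GOOD_MAN] = 1                                 # George vouches for Bob
second = transfer(alice, bob, PAY, 10, required=GOOD_MAN)
print(first, second, alice.balance(PAY), bob.balance(PAY))  # prints: False True 90 10
```

Only Alice’s wallet, Bob’s wallet, and the rule travelling with the payment are consulted; no third party is asked, which is the “fundamentally local” property described above.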

Why hasn’t such calculus emerged before, you may wonder? This is one of those cases where quality appears only as a result of quantity. If many water particles in that pond didn’t give a damn about the laws of physics and failed to interact properly, we’d probably never see those circles on the water, no matter how heavy a rock we dropped.

Where does such calculus lead? What are the advantages? Within token-enabled supply chains, many things become less expensive, and every participant seamlessly contributes to a high-quality, automated flow of data and events. Tokenization generally reduces overhead, enhances trust, and speeds things up.

How important is that? Can we give a numerical estimate of the possible gains from applying the technology? Chances are, we are talking about improving the quality of almost everything fivefold. This may sound like old men’s “water used to be wetter” talk, but try to find a pair of original, seventies-quality jeans. You will find them, but only after an arduous search for a firm dedicated to bringing that quality back. Nothing in your local mall will qualify.

The reasons are many and beyond the scope of this post, but modern supply chains are believed to utilise only about ¾ of their entire capacity. We don’t exactly suffer from quality loss, because overall technological advancement is so great that even work done half-heartedly by generalists lacking professional depth still produces an okay result. However, if the utilisation rate returned to the 95% it once was, unused capacity would shrink from 25% to 5%, which is the x5 improvement: (100 - 75)/(100 - 95) = 25/5 = 5.

Super-compatibility

Information theory, from Shannon onward, doesn’t deal with the quality of information, so Internet protocols made transporting data cheap and shifted the problem of relevance onto recipients, since senders can afford not to care.

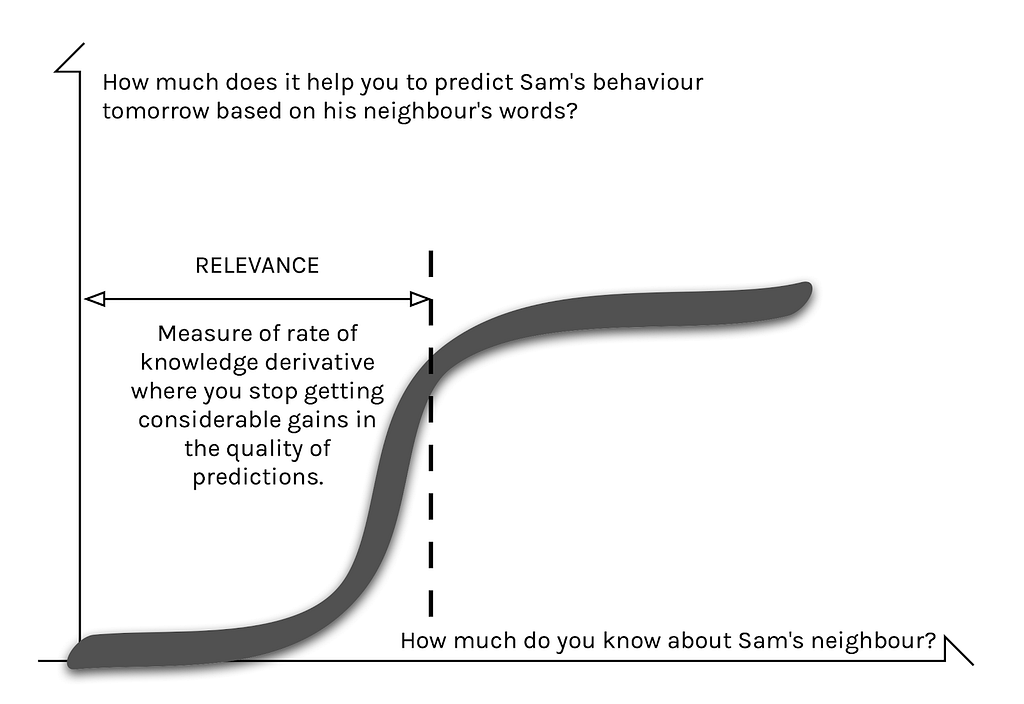

While there’s no mathematically formalised universal quality benchmark, relevance can be measured. John tried to call my neighbour Sam to ask whether he is going fishing tomorrow, but Sam never answered the phone, so John called me instead. John believes in my non-zero ability to predict Sam’s behaviour tomorrow: I am relevant to the task.

What does John need to know about me to estimate my relevance? That I am Sam’s neighbour and can just walk up to his door and knock if really needed. This is one thing he knows. But if John knew that I’m also into fishing and had been hearing from Sam all week about tomorrow’s trip, he’d assign even more relevance to me. For his task of evaluating the relevance of information, John probably shouldn’t bother learning much more about me, unless I am a pathological liar or a prankster.

Modern AI-supported relevance engines, such as Instagram’s, do a great job. However, if you are a blogger, you might have noticed that the number of upvotes often seems inversely proportional to the effort you invest in a post. That really raises doubts about whether you should read “hot” pages at all. Whatever your interests and your favorite app, your feed has probably surprised you with posts of grotesque stupidity receiving tons of attention, and equally stupid comments getting even more.

Quality is low, relevance is high. But we still can’t measure quality. So what do we do? The answer is: “We split relevance into pieces and allow those pieces to be transferred directly”. That will eventually improve quality.

Of course, centralised networks will never disclose the “relevance formula”, or whatever part of it they still understand themselves. In today’s world, you start by accumulating aggregate relevance on, say, fishing. You know the number of your followers and some other audience-reach stats, but you don’t know what they are based on. To gain relevance on a different topic, say hunting, you convert the relevance you have into money (the only universal transport so far), then buy some ads to promote your hunting blog. Along the way you face friction, costs, and sources of error.

In the tokenized world, every little component of your relevance would exist as a specific token balance. The portfolio of relevance tokens, its origins, and its history would all remain in their original form, available for study and further manipulation. Not only would transferring an account from network to network become possible; so would partial migrations of fractions of your “personality”.
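To make “relevance as token balances” more concrete, here is a toy model. It is only a sketch under our own assumptions: `RelevancePortfolio`, `credit`, `migrate`, and the network names are hypothetical, relevance is reduced to one number per topic, and every change is logged so the portfolio’s origins and history stay available for study.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class RelevancePortfolio:
    """Hypothetical per-network record of topic-specific relevance tokens."""
    network: str
    balances: Dict[str, float] = field(default_factory=dict)
    # Every change is kept as (topic, signed amount, origin), so the history
    # of the portfolio is never collapsed into an opaque aggregate.
    history: List[Tuple[str, float, str]] = field(default_factory=list)

    def credit(self, topic: str, amount: float, origin: str) -> None:
        self.balances[topic] = self.balances.get(topic, 0.0) + amount
        self.history.append((topic, amount, origin))

    def migrate(self, topic: str, fraction: float,
                target: "RelevancePortfolio") -> float:
        """Move a fraction of one topic's balance to another network."""
        if not 0.0 < fraction <= 1.0:
            raise ValueError("fraction must be in (0, 1]")
        amount = self.balances.get(topic, 0.0) * fraction
        self.balances[topic] = self.balances.get(topic, 0.0) - amount
        self.history.append((topic, -amount, f"migrated to {target.network}"))
        target.credit(topic, amount, f"migrated from {self.network}")
        return amount


home = RelevancePortfolio("network-a")
home.credit("fishing", 80.0, "upvotes on a lake-trout post")
home.credit("hunting", 20.0, "guest post")
elsewhere = RelevancePortfolio("network-b")

moved = home.migrate("fishing", 0.25, elsewhere)  # only part of the "fishing" self moves
print(moved, home.balances, elsewhere.balances)
# prints: 20.0 {'fishing': 60.0, 'hunting': 20.0} {'fishing': 20.0}
```

Unlike converting relevance into money and back into ads, the migrated fraction keeps its topic and its provenance on both sides.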

We would like to continue with examples; however, it would be unfair to expect any particular vision from us at this point. Try to rewind your memory to the dawn of social networks and consider how likely it was that anyone would predict the set of tools we use today. Still, a few general things can be pointed out, because the basic approach to computing relevance described above is unlikely to change.

- A no-spam environment is one key factor. Whoever effectively solves the relevance problem can expect enormous economic returns. But to keep an economic advantage in the tokenized world, players will have to learn to deal with a non-negligible cost of distributing information. With a fixed amount of attention, recipients will be inclined to evaluate only information (think tokens) that can be reused in some way. This increases the chances that circulation streams will emerge.

- P2P is likely to lose its dominance in data exchange. The cost of evaluating information will push recipients to look for third-party evaluators, so many transactions are likely to change from “A sends information to B” to something like “B, C, and D evaluate A’s information and split the value involved”.

- Flat consensus protocols may be replaced with multi-dimensional ones. As more and more value starts to circulate in relevance-enabled apps, context-independent distributed systems such as “money” and assets of all sorts will give way to systems whose consensus layers include relevance measurement.

- From a single point to an extended segment on the timeline. Current relevance data is accounted for in likes, mentions, links, upvotes/downvotes, etc. These can only reflect the present point in time. Tokenized pieces of information can carry a financial component, so they, by definition, become extended in time. Through net present values and rates on prediction markets, we will start seeing expectations as well. This is especially important for future profit redistribution: today money follows clicks and eyeballs, so those who surprise the most earn the most. Not so in a more future-aware world.

- A closer, more direct connection to bundled physical assets. Today, most online monetisation involves advertising in one way or another. Advertising, in turn, involves advertised goods or services, i.e. objects from the physical world. Since tokens will directly, legally, and officially represent many real-world assets, the “distance” between information about a thing and the thing itself will shrink, often to zero.

- Greater audience reach. Today you can become relevant to everybody by becoming rich, and relevant to fishermen by becoming a prominent fishing blogger. Your available space is a cone whose base radius reflects your wealth and whose height reflects your knowledge of fishing. Because of tokens’ hybrid money-data nature, your relevance in a tokenized world becomes a roomier truncated cone.
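The shift from “A sends information to B” to “B, C, and D evaluate A’s information and split the value involved” can be pictured as a proportional split. This is a sketch under assumptions of our own: `split_value` is a hypothetical helper, and we simply assume each evaluator’s share is proportional to a weight, for instance their relevance balance on the topic; real systems could use very different rules.

```python
from typing import Dict


def split_value(value: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Split `value` among evaluators in proportion to non-negative weights."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return {name: value * w / total for name, w in weights.items()}


# A's information carries 12 units of value; B, C and D evaluate it.
# The weights are hypothetical, e.g. each evaluator's relevance on the topic.
shares = split_value(12.0, {"B": 3.0, "C": 2.0, "D": 1.0})
print(shares)  # prints: {'B': 6.0, 'C': 4.0, 'D': 2.0}
```

Proportional weighting is just the simplest choice; it rewards evaluators who have already accumulated relevance on the topic.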

United Capital Markets

Many interested, decent entrepreneurs are circling around crypto but are reluctant to enter because it feels like a bizarre innovation. Numerous ICOs have given the crypto space the image of homeopathy: an unfortunate and cheap cargo cult. Scammers sell fake stocks of fake companies on the same shelf where real scientists present the results of years of honest, dedicated work.

Everybody sells their own token. This is wrong for many reasons, but one of them is especially fatal. Confused by the unique-by-nature example of Bitcoin, crowds of projects try to push tokens that serve both operational and investment purposes, which are not easy to align. If you force it, the operational side suffers most. If you face a one-time-only, limited-duration sales event, you can’t really avoid putting the interests of speculators first, right?

ICO-oriented token models produce poor end-user experiences, unclear routes to value capture, technical lock-in, and regulatory uncertainty, and they foster speculator-driven rather than builder-driven communities. The cat will eventually be out of the bag. When tokens sold through ICOs inevitably destroy billions of dollars of their investors’ value, angry mobs will be looking for someone to blame. Even if you manage to use the funds you raised correctly and develop the product, you may get caught up in the aftermath, and your reputation (if not worse) will be buried under the combined pressure of endless trials, negative press, and public hatred.

To be safe, one should separate the two: let operational tokens improve your business and investment tokens serve your fundraising needs. One approach is the “stake token” model; it’s straightforward and powerful. Tokens “locked” into the system grant privileges in using the system in its intended commercial way. For example, one of the simplest privileges could be a discount on the fees the system charges for its services. Or it could be priority in any queues the system maintains for business or organisational purposes. Or it could be the right to stay in the system (use its services) longer when some resource is in short supply.

Stake tokens are not voting tokens. Stake tokens are not internal currency tokens: they cannot be used to pay for the system’s services. Stake tokens do not represent ownership rights to the system’s assets and liabilities. Stake tokens are not artificially “scarce”; they are emitted continuously (naturally) as the system grows.

Most importantly, one stake token can serve many projects within one supply chain or other alliance.
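A stake shared by an alliance might look like the sketch below. Everything here is our own illustrative assumption: `StakeRegistry`, `Project`, the discount tiers, and the fee amounts are hypothetical. Locked tokens are never spent; each member project only reads the shared balance to grant a fee discount and queue priority.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical tiers: (minimum locked stake, fee discount), checked top-down.
DISCOUNT_TIERS: List[Tuple[int, float]] = [(1_000, 0.20), (100, 0.10), (0, 0.0)]


@dataclass
class StakeRegistry:
    """Alliance-wide ledger of locked stake tokens (never spent on fees)."""
    locked: Dict[str, int] = field(default_factory=dict)

    def lock(self, holder: str, amount: int) -> None:
        self.locked[holder] = self.locked.get(holder, 0) + amount

    def stake_of(self, holder: str) -> int:
        return self.locked.get(holder, 0)


@dataclass
class Project:
    """One alliance member; it reads privileges from the shared registry."""
    name: str
    base_fee: float
    registry: StakeRegistry

    def fee_for(self, holder: str) -> float:
        # Fees are paid in ordinary money; the stake only lowers them.
        stake = self.registry.stake_of(holder)
        for minimum, discount in DISCOUNT_TIERS:
            if stake >= minimum:
                return self.base_fee * (1 - discount)
        return self.base_fee

    def serve_order(self, waiting: List[str]) -> List[str]:
        """Queue priority: larger stakes first; sorted() keeps ties in arrival order."""
        return sorted(waiting, key=lambda h: -self.registry.stake_of(h))


registry = StakeRegistry()
registry.lock("carol", 150)
registry.lock("dave", 1_200)

# Two projects in one supply chain honour the same stake.
shipping = Project("shipping", 10.0, registry)
storage = Project("storage", 4.0, registry)

print(shipping.fee_for("carol"), storage.fee_for("dave"), storage.fee_for("erin"))
print(shipping.serve_order(["erin", "carol", "dave"]))
```

Keeping the registry outside any single project is what lets one stake token serve the whole alliance: a new member only needs read access to the shared balances.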

The emission rights for stake tokens can be estimated as the net present value (NPV) of the expected monetised privileges they provide. For example, if tokens grant discounts, their fair value can be estimated by adding up the NPVs of the costs avoided over the entire investment horizon.
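For the discount case, the estimate is the standard discounted sum NPV = Σ saving_t / (1 + r)^t over periods t = 1…T. The sketch below computes it; the function name and all figures (a holder paying 1,000 a year in fees, a 10% stake discount, a three-year horizon, a 10% discount rate) are hypothetical, and savings are assumed to arrive at the end of each period.

```python
from typing import Sequence


def npv_of_avoided_costs(savings: Sequence[float], rate: float) -> float:
    """Discounted sum of savings; savings[t] arrives at the end of period t + 1."""
    if rate <= -1:
        raise ValueError("rate must be greater than -100%")
    return sum(s / (1 + rate) ** (t + 1) for t, s in enumerate(savings))


# Hypothetical holder: 1,000 a year in fees, a 10% stake discount,
# a 3-year investment horizon and a 10% annual discount rate.
yearly_saving = 1_000 * 0.10
value = npv_of_avoided_costs([yearly_saving] * 3, rate=0.10)
print(round(value, 2))
```

With these numbers the privilege is worth about 248.69, noticeably less than the 300 of undiscounted savings; a longer horizon or a lower discount rate raises the estimate.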

Stake tokens generate more value for holders who actually use the system than for passive “investors”. Thus, the tokens have value even outside any speculation narrative. In general, this model does not encourage speculation. If the system is considered a separate economy, the velocity of its tokens (how often they change hands) is quite low, which bodes well for long-term price growth.

Tokenized Union is Strength was originally published in Hacker Noon on Medium.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk; this is especially true for cryptocurrencies, given their volatility. We strongly advise our readers to conduct their own research when making a decision.