Photo by Aziz Acharki on Unsplash

Table of Contents

- Part I: Getting started with the Fn project

- Part II: Fn Load Balancer (this post)

If you’re looking to get up and running with the Fn project, check out the first post in this series. In this post I am going to talk about the Fn load balancer: what it is, how to run it locally, and how to deploy it to a Kubernetes cluster.

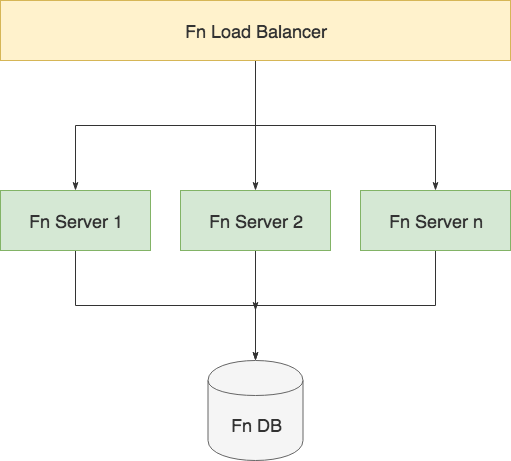

The purpose of the Fn load balancer is to intelligently route traffic to clusters of Fn servers. This is not simple round-robin routing: the load balancer decides which node should receive each request based on the requests that are coming in. For example, if your function is “hot” on node2, the load balancer routes calls to that node instead of a node where the function is not loaded. That way you get much better performance.
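To make the idea of “sticky” routing a bit more concrete, here is a small sketch that maps a function's route to a node by hashing the route path. This is purely an illustration under my own assumptions: pick_node is a hypothetical helper, and plain hash-modulo is a stand-in technique, not the algorithm the Fn load balancer actually implements.

```shell
#!/bin/sh
# Illustration only: map a route to a node by hashing the route path.
# pick_node is a hypothetical helper and NOT part of Fn or fnlb.
pick_node() {
  route=$1
  shift                        # the remaining arguments are the candidate nodes
  [ "$#" -gt 0 ] || return 1   # nothing to pick from
  # cksum prints "<crc> <byte count>"; keep only the CRC.
  crc=$(printf '%s' "$route" | cksum | cut -d' ' -f1)
  idx=$(( crc % $# ))          # 0-based index of the chosen node
  i=0
  for node in "$@"; do
    if [ "$i" -eq "$idx" ]; then
      echo "$node"
      return 0
    fi
    i=$((i + 1))
  done
}

# The same route resolves to the same node on every call.
pick_node /r/testapp1/testapp1 fnserver1:8080 fnserver2:8080
pick_node /r/testapp1/testapp1 fnserver1:8080 fnserver2:8080
```

A real load balancer also has to account for node health and load, which a bare modulo ignores; the sketch only shows why repeated calls to one function can keep landing on the node where it is already loaded.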

The simple diagram shows where the load balancer fits in with the Fn server(s) and the Fn database.

The load balancer also gathers information about the entire cluster and exposes the list of nodes and their states. You can use that information to scale out your cluster by adding more Fn servers, or to scale in by decreasing the number of servers.

Running locally

The quickest way to get multiple Fn servers up and running behind the load balancer and to try things out is to run everything locally on your machine using Docker.

The image you want to pull and run is called fnproject/fnlb. At the time of writing, the latest version/tag is 0.0.268.

Let’s try running a couple of Fn servers and a load balancer. We will run both the Fn servers and the load balancer in containers, and link the load balancer to the Fn server instances so it can discover them.

If you don’t want to manually copy/paste the commands in this section, you can get the script below — the script will automatically start N Fn server containers and a single Fn load balancer.
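A minimal sketch of what such a script could look like is shown here. It is not the author's original script: the function names are hypothetical, and it simply wraps the same docker commands used in this section, run in detached mode with -d.

```shell
#!/bin/sh
# Sketch: start N Fn servers plus one load balancer in front of them.
# Not the original script; nodes_arg, link_args and start_cluster are
# hypothetical names. Example: sh start-fn.sh 3

# Build the value for --nodes, e.g. "fnserver1:8080,fnserver2:8080".
nodes_arg() {
  count=$1
  i=1
  out=""
  while [ "$i" -le "$count" ]; do
    out="${out:+$out,}fnserver$i:8080"
    i=$((i + 1))
  done
  echo "$out"
}

# Build the repeated link flags, e.g. "--link fnserver1 --link fnserver2".
link_args() {
  count=$1
  i=1
  out=""
  while [ "$i" -le "$count" ]; do
    out="${out:+$out }--link fnserver$i"
    i=$((i + 1))
  done
  echo "$out"
}

start_cluster() {
  count=$1
  i=1
  while [ "$i" -le "$count" ]; do
    docker run -d --rm --name "fnserver$i" \
      -v "$PWD/data/fn.db:/app/data/fn.db" \
      -v /var/run/docker.sock:/var/run/docker.sock \
      fnproject/fnserver:latest
    i=$((i + 1))
  done
  # link_args output is left unquoted on purpose so each flag is its own word.
  docker run -d --rm --name fnlb $(link_args "$count") -p 8080:8081 \
    fnproject/fnlb:latest --nodes "$(nodes_arg "$count")"
}

# Containers are only started when a server count is passed on the command line.
if [ "$#" -gt 0 ]; then
  start_cluster "$1"
fi
```

Because every container is started with -d, you would follow the logs with docker logs -f fnlb (or the name of any Fn server container) instead of watching separate terminal windows.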

Note: Make sure to run fn start once in the same folder you’re planning to run the script in, so that Fn creates the database file (data/fn.db) for us. If you don’t do this, you’ll get errors starting the Fn server containers.

Start by running a couple of Fn server containers. We don’t need to expose any ports to the host, as we are going to be accessing the Fn server through the load balancer:

docker run -it --rm --name fnserver1 -v $PWD/data/fn.db:/app/data/fn.db -v /var/run/docker.sock:/var/run/docker.sock fnproject/fnserver:latest

Similarly, run the second Fn server from a new terminal window. Make sure you run it from the same folder, because both servers need to share the same database:

docker run -it --rm --name fnserver2 -v $PWD/data/fn.db:/app/data/fn.db -v /var/run/docker.sock:/var/run/docker.sock fnproject/fnserver:latest

Note: if you don’t feel like opening multiple new terminal windows, you can add -d to each Docker command to run the containers in detached mode. I prefer seeing logs from all containers when trying things out.

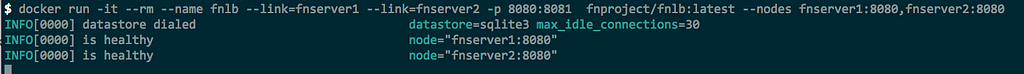

Finally, let’s run the load balancer and link it to the Fn servers we have running:

docker run -it --rm --name fnlb --link fnserver1 --link fnserver2 -p 8080:8081 fnproject/fnlb:latest --nodes fnserver1:8080,fnserver2:8080

We are using --link to link the containers together. This makes the Fn server containers reachable from the Fn load balancer container by name (fnserver1 and fnserver2). We are also publishing port 8080 on the host and mapping it to port 8081 inside the container, which is the port the load balancer listens on.

If all is good, you should see the following output from the load balancer container telling you that both Fn servers are running, healthy and accessible from the load balancer:

Fn load balancer with 2 Fn servers

Let’s try this out! Since the load balancer is exposed on port 8080, we can simply run the fn CLI commands to deploy the apps. (We could have used a different port for the load balancer and then set the FN_API_URL environment variable to point to the actual host and port.)

At this point you can create and deploy a couple of new functions:

fn init --runtime go testapp1
fn init --runtime go testapp2
fn init --runtime go testapp3

cd testapp1
fn deploy --local --app testapp1
cd ..

cd testapp2
fn deploy --local --app testapp2
cd ..

cd testapp3
fn deploy --local --app testapp3
cd ..

And then try calling them to see that the calls are being routed to both Fn server instances. I used this command to continuously call the endpoints from multiple terminal windows:

while true; do sleep 1; curl http://localhost:8080/r/testapp3/testapp3; echo $(date);done

Make sure you replace the app and function name (testappX). Eventually you should see log output from both Fn server instances.

Management endpoints

The Fn load balancer also exposes a management endpoint at the path /1/lb/nodes that supports the PUT, DELETE and GET methods. PUT dynamically adds a node to the list of nodes the load balancer maintains, DELETE removes the specified node, and GET returns the list of nodes and their states. You can imagine implementing some sort of watcher that reacts to certain events by adding nodes to or removing nodes from the load balancer.
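As a rough sketch, the three methods could be wrapped in small shell helpers like the ones here. The helper names are hypothetical, LB_URL assumes the local setup from earlier (load balancer published on host port 8080), and the {"node":"host:port"} request body is my assumption about the payload shape, so verify it against the fnlb documentation before relying on it.

```shell
#!/bin/sh
# Sketch: thin wrappers around the load balancer's /1/lb/nodes endpoint.
# All helper names are hypothetical. LB_URL defaults to the local setup.
LB_URL="${LB_URL:-http://localhost:8080}"

# Full URL of the management endpoint.
nodes_url() {
  echo "$LB_URL/1/lb/nodes"
}

# ASSUMPTION: the endpoint accepts a JSON body of the form {"node":"host:port"}.
node_payload() {
  printf '{"node":"%s"}' "$1"
}

# GET: list the nodes and their states.
lb_list_nodes() {
  curl -sS "$(nodes_url)"
}

# PUT: add a node, e.g. lb_add_node fnserver3:8080
lb_add_node() {
  curl -sS -X PUT -d "$(node_payload "$1")" "$(nodes_url)"
}

# DELETE: remove a node, e.g. lb_remove_node fnserver3:8080
lb_remove_node() {
  curl -sS -X DELETE -d "$(node_payload "$1")" "$(nodes_url)"
}
```

A watcher could call lb_add_node when a new Fn server comes up and lb_remove_node when one goes away, using lb_list_nodes to check the resulting state.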

Running on Kubernetes

How would we replicate a similar setup on Kubernetes instead of running it locally?

The Fn load balancer supports a Kubernetes mode of operation that can be activated by passing in the -db=k8s flag. In this mode the --nodes flag is ignored, and you use labels and label selectors to pick out the pods that are running Fn servers. You could manually create the files necessary to deploy all this on Kubernetes, but the good news is that the Fn team already did this for you!

There’s an Fn Helm chart available and you can use that to install Fn on your Kubernetes cluster.

The Helm chart also installs an instance of MySQL and Redis on your cluster, as well as the UI and Fn Flow, so you get the full deal with one Helm command.

Instructions on how to install it are here, but in short: once you have Helm installed, run the following commands to initialize Helm, clone the repo, install the chart dependencies and finally install the Fn chart:

helm init
git clone git@github.com:fnproject/fn-helm.git && cd fn-helm
helm dep build fn
helm install --name myfn fn

Once installed, you will also see instructions on how to set the FN_API_URL environment variable so that it points to the load balancer. If you’re using Docker for Mac, you can access the load balancer at http://localhost:80.

If you’re watching the logs from the Fn load balancer (the pod that’s created as part of the fn-fnlb-depl deployment), you should see a bunch of activity indicating that a new pod was detected, and there should be a line that looks like this:

time="2018-05-21T22:31:49Z" level=info msg="is healthy" node="10.1.0.23:80"

This line indicates that the Fn load balancer was able to connect to the Fn server.

If you’re using the Kubernetes that ships with Docker for Mac, the great thing is that the Fn load balancer service is accessible via http://localhost:80:

$ curl localhost:80/version
{"version":"0.3.455"}

Note: if you’re deploying this to a “real” cluster, you can look up the external IP of the fn-fn-api service by running kubectl get svc and checking the EXTERNAL-IP column.

Hooking up the Fn CLI

Assuming you don’t have any Fn servers running locally anymore, you will get an error if you try to run an Fn CLI command. For example:

# Try to get the list of apps
$ fn apps l
ERROR: Get http://localhost:8080/v1/apps: dial tcp [::1]:8080: connect: connection refused

Since we have the Fn load balancer exposed on localhost port 80, we can hook it up with the CLI by setting the FN_API_URL environment variable or by running the CLI with the variable prepended:

FN_API_URL=http://localhost:80 fn apps l

Because we haven’t deployed anything yet, the above command will return “no apps found”. Set FN_REGISTRY to your Docker Hub repository and then deploy testapp1 from before:

FN_API_URL=http://localhost:80 fn deploy --app testapp1

Note: we had to set FN_REGISTRY because we are no longer deploying the apps to a “local” Fn server like we did before. This time the image is actually built and pushed to Docker Hub, so that the Fn server running in the Kubernetes cluster can pull it and run it.

The last thing to do is to try running the deployed function. You can either list the routes of the app we deployed to get the full endpoint (e.g. http://localhost:80/r/testapp1/testapp1) or use the Fn CLI to invoke the function like this:

$ FN_API_URL=http://localhost:80 fn call testapp1 testapp1
{"message1":"Hello World"}

Conclusion

In this article I talked about the Fn load balancer: how to set it up locally for development or just to try things out, and how to deploy it to a Kubernetes cluster using a Helm chart.

With the knowledge from the previous post, you could create your own custom Fn server image (including your custom extensions), then deploy and run it inside the Kubernetes cluster. You could perhaps even expose that functionality to end users and let them use your platform to deploy their services.

Thanks for Reading!

Any feedback on this article is more than welcome! You can also follow me on Twitter and GitHub. If you liked this and want to get notified when I write more stuff, you should subscribe to my newsletter!

Part II: Fn Load Balancer was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
