
The first thing a seasoned tester thinks when hearing about a record/playback IDE is: “Been there, done that, it doesn’t work. I’ll stick with Selenium, thank you very much.” We built Screenster around test recording, so I’m here to argue why Screenster will succeed where countless Selenium alternatives have failed.

The problem with classic Record/Playback IDEs

Every vendor has one. Most testers have tried one. Yet, 99% of the progressive companies I know code UI tests using Selenium or Protractor or some other framework. Allow me to opine on the major flaws in the approach of record/playback IDEs.

The idea behind these IDEs is that they let a tester create a test quickly, even a tester who doesn’t quite understand JavaScript, HTML, CSS, and the underlying technology of the application under test.

The problem is that classic IDEs like UFT and TestComplete produce a script as the output of the recording. The script is typically lines of auto-generated code in some programming language that the tester then has to understand and maintain. In the worst case, it’s also a custom language that the tester has to learn.

So recording is easy, but then you are stuck with junk code that you don’t quite understand and that is extra hard to maintain. Values are hardcoded, there is not much reuse, selectors are simplistic, and there is very little organization around the overall test architecture. You saved yourself 30 minutes of typing the code but you ended up with a mess that you are supposed to maintain for years. Painful.

True, some of these IDEs have improved over the years, and some tools offer solutions that don’t require dealing with auto-generated code. But most of these tools are still a pain to learn and manage. While packed with good features, these beasts require gigabytes to install, the manuals run to hundreds of pages, and in the end, you are neither a good developer nor a good automation tester. Everything still takes too long, and it certainly is not fun.

How Screenster is different

We’ve created Screenster with several goals in mind. There should be nothing to install. It should not require reading a manual. Simple things should be easy, and complex things should be possible. Automating a single test case shouldn’t take more than 10 minutes. Maintenance should be low. No ugly auto-generated code. It should do as good a job as a human tester. Oh yes, and it should be a joy to use :-)

Let’s look at how we succeeded at each one of these admittedly lofty goals where others have failed.

Nothing to install

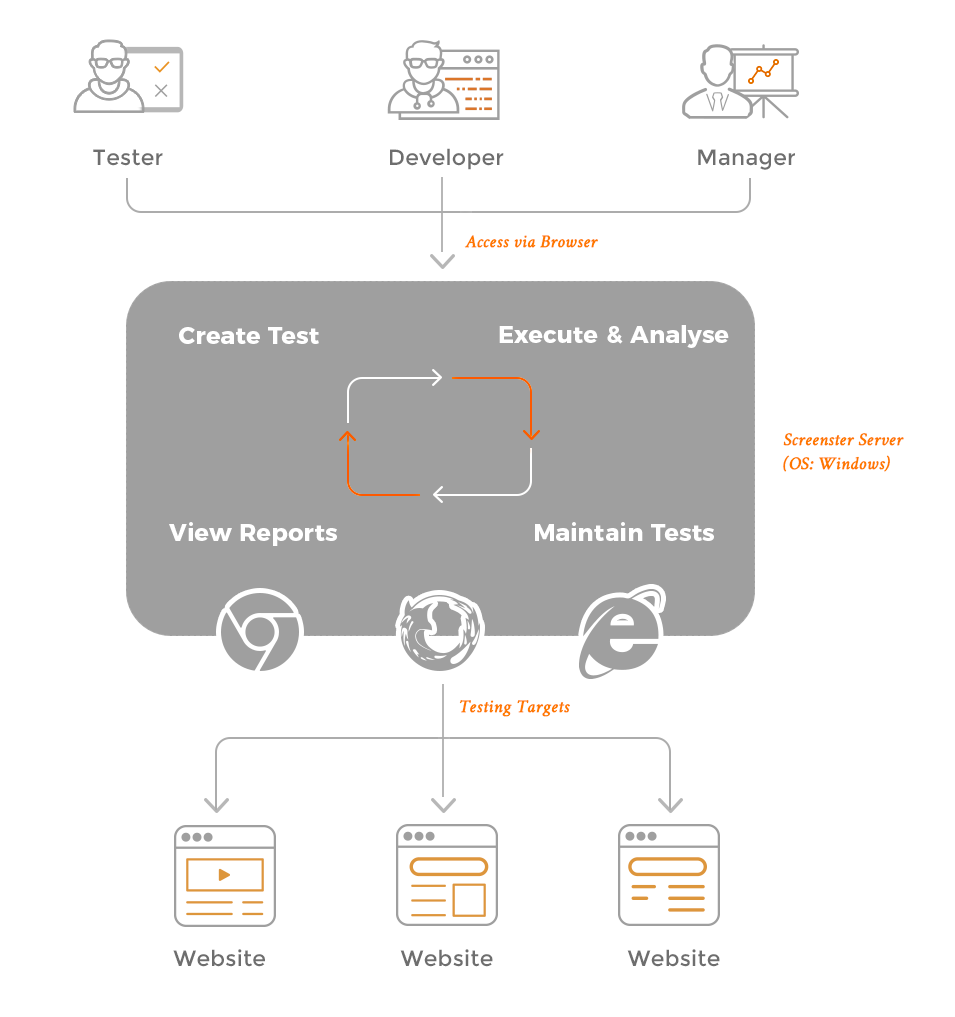

Screenster is 100% web-based, with no plugin cheating. It runs in the cloud or on a locally installed server, even in offline mode.

No manuals to read

Nuff said. Just start using it and you’ll figure it out along the way. We guide you through each step, and there is a panel with helpful tips that facilitate product discovery. For more advanced stuff, we have very short tutorials that you can cover in less than 10 minutes.

Simple things should be easy

We’ve designed the UX around the common flows so there is no guessing. We’ve sprinkled magic at each step of the way, such as automatic parameter extraction, smart self-healing selectors, and intelligent timeout handling. Everything is visual and discoverable with a simple mouse click. It’s easy because 90% of what you would normally have to think about and implement just works out of the box.
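Screenster’s timeout logic isn’t described here, but the basic idea behind smarter waiting can be sketched. Below is a minimal, hypothetical polling wait (the name `wait_until` and its defaults are our own, not a Screenster API), similar in spirit to Selenium’s explicit waits via `WebDriverWait`: instead of sleeping for a fixed time, it re-checks a condition until it holds or a deadline passes.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 10.0,
               poll_interval: float = 0.25) -> T:
    """Re-check `condition` until it returns something truthy or `timeout` seconds pass.

    Returns that truthy value; raises TimeoutError if the deadline is reached first.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Example: an element that only shows up on the third check.
checks = {"count": 0}


def submit_button_visible() -> Optional[str]:
    checks["count"] += 1
    return "button#submit" if checks["count"] >= 3 else None


print(wait_until(submit_button_visible, timeout=2.0, poll_interval=0.01))
```

Polling against a deadline keeps fast pages fast and still tolerates slow ones, whereas a fixed sleep has to be tuned for the slowest case.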

Complex things should be possible

That’s a big one. We don’t want to take away your toys :-) We recognize that no record/playback tool can cover 100% of the test logic. So we focused on making 95% of the logic work out of the box, and leaving the remaining 5% for the tester to code in Java using the Selenium API without leaving the browser.

There’s also a JavaScript command that allows writing JS code to run in the page. And all Screenster magic can be accessed through its API so you get the best of both worlds.

Test case automation in less than 10 minutes

Like most record/playback tools, Screenster shines at test development. By the time you are done clicking through a test case, you are practically done automating it. You need to run it again and review the results, but typically, it’s just a matter of a couple of clicks.

No auto-generated code

You can edit test flows and add, delete, and alter test steps using the IDE without touching the code. Screenster handles WebDriver under the hood, providing you with automation functionality that just works.
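To illustrate what “no auto-generated code” can mean, here is a sketch that treats a recorded test as data: a list of steps replayed by a small interpreter. The `Step` format, the `Driver` interface, and the logging stand-in driver are all hypothetical; in a real tool the driver would wrap WebDriver instead of writing a log.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


@dataclass
class Step:
    action: str       # "open", "type", or "click"
    target: str = ""  # URL for "open", element selector otherwise
    value: str = ""   # text to enter for "type"


class Driver(Protocol):
    """The few browser operations the player needs (a real one would wrap WebDriver)."""
    def open(self, url: str) -> None: ...
    def type_text(self, selector: str, text: str) -> None: ...
    def click(self, selector: str) -> None: ...


@dataclass
class LoggingDriver:
    """Stand-in driver for this sketch: records the calls instead of driving a browser."""
    log: List[str] = field(default_factory=list)

    def open(self, url: str) -> None:
        self.log.append(f"open {url}")

    def type_text(self, selector: str, text: str) -> None:
        self.log.append(f"type {selector} {text}")

    def click(self, selector: str) -> None:
        self.log.append(f"click {selector}")


def play(steps: List[Step], driver: Driver) -> None:
    """Replay recorded steps in order by dispatching each one to the driver."""
    for step in steps:
        if step.action == "open":
            driver.open(step.target)
        elif step.action == "type":
            driver.type_text(step.target, step.value)
        elif step.action == "click":
            driver.click(step.target)
        else:
            raise ValueError(f"unknown action: {step.action}")


# Editing the test is a data operation, not a code change.
test = [Step("open", "https://example.com/login"),
        Step("type", "#user", "alice"),
        Step("click", "#login")]
test.insert(2, Step("type", "#password", "secret"))  # add a step
driver = LoggingDriver()
play(test, driver)
print(driver.log)
```

Because the test is a plain list, adding, deleting, or altering a step never requires reading or regenerating code.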

Low Maintenance

We achieved low maintenance by reducing the number of times the tester has to edit the test case after it is recorded by up to 90%. Screenster automatically extracts and applies parameters to user input, so changing a value that was entered in 3 different places is a single parameter change.
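Here is a minimal sketch of parameter extraction, assuming each recorded step is an (action, selector, value) tuple and using a made-up `${paramN}` placeholder syntax. It shows how one parameter can stand in for the same value typed in three places; it is not Screenster’s actual implementation.

```python
from typing import Dict, List, Tuple

Step = Tuple[str, str, str]  # (action, selector, value typed by the user)


def extract_parameters(steps: List[Step]) -> Tuple[List[Step], Dict[str, str]]:
    """Replace every distinct non-empty input value with a ${paramN} reference.

    Identical values share one parameter, so editing it updates every step that uses it.
    """
    params: Dict[str, str] = {}  # parameter name -> value
    names: Dict[str, str] = {}   # value -> parameter name
    template: List[Step] = []
    for action, selector, value in steps:
        if value:
            if value not in names:
                names[value] = f"param{len(names) + 1}"
                params[names[value]] = value
            value = "${" + names[value] + "}"
        template.append((action, selector, value))
    return template, params


def resolve(template: List[Step], params: Dict[str, str]) -> List[Step]:
    """Substitute parameter values back into the steps before playback."""
    resolved: List[Step] = []
    for action, selector, value in template:
        if value.startswith("${") and value.endswith("}"):
            value = params[value[2:-1]]
        resolved.append((action, selector, value))
    return resolved


recorded = [("type", "#email", "ann@example.com"),
            ("type", "#confirm-email", "ann@example.com"),
            ("click", "#next", ""),
            ("type", "#billing-email", "ann@example.com")]
template, params = extract_parameters(recorded)
params["param1"] = "bob@example.com"  # one change...
print(resolve(template, params))      # ...updates all three steps
```

This naive version merges any identical values; a real tool would presumably need smarter rules, since two fields can coincidentally receive the same input.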

Screenster stores a fully qualified selector and later uses a smart matching algorithm to find moved and changed elements. During each run, it analyzes the page state and updates the test script and the baseline so you don’t have to.
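Screenster’s matching algorithm isn’t published, so here is one common way to build a self-healing lookup, offered only as an illustration. At record time, store several attributes of each element. When the exact selector no longer matches, score every candidate by how many of those attributes it still shares. The attribute weights and the 0.5 threshold are hypothetical.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]  # attributes captured at record time, e.g. {"tag": "button", "id": "save"}

# Hypothetical weights: how strongly each attribute identifies an element.
WEIGHTS = {"id": 3.0, "name": 2.0, "text": 2.0, "tag": 1.0, "class": 1.0}


def similarity(stored: Element, candidate: Element) -> float:
    """Weighted fraction of the stored attributes that the candidate still matches (0.0 to 1.0)."""
    total = matched = 0.0
    for attr, weight in WEIGHTS.items():
        if attr in stored:
            total += weight
            if candidate.get(attr) == stored[attr]:
                matched += weight
    return matched / total if total else 0.0


def find_element(stored: Element, page: List[Element], threshold: float = 0.5) -> Optional[Element]:
    """Pick the most similar element on the page, or None if nothing clears the threshold."""
    best = max(page, key=lambda el: similarity(stored, el), default=None)
    if best is None or similarity(stored, best) < threshold:
        return None
    return best


recorded = {"tag": "button", "id": "save", "text": "Save", "class": "btn primary"}
page_now = [
    {"tag": "button", "id": "cancel", "text": "Cancel", "class": "btn primary"},
    {"tag": "button", "id": "save-btn", "text": "Save", "class": "btn primary"},  # id was renamed
]
print(find_element(recorded, page_now))
```

The threshold keeps the lookup from silently latching onto an unrelated element. If nothing is similar enough, the step should fail rather than click the wrong thing.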

When a difference is detected, it is shown visually on the page’s screenshot, and with a single click it can be marked as a bug, approved as an expected change to the baseline, or excluded from future comparisons.
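The three review outcomes map naturally onto updates of a stored baseline. The sketch below assumes a baseline that records expected content per screenshot region (for example, an image hash). The `Baseline`, `Verdict`, and `review` names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Set, Tuple

Region = Tuple[int, int, int, int]  # (x, y, width, height) on the screenshot


class Verdict(Enum):
    BUG = "bug"
    APPROVE = "approve"
    IGNORE = "ignore"


@dataclass
class Baseline:
    expected: Dict[Region, str]  # region -> expected content, e.g. an image hash
    ignored: Set[Region] = field(default_factory=set)
    bugs: List[Region] = field(default_factory=list)


def differences(baseline: Baseline, actual: Dict[Region, str]) -> List[Region]:
    """Regions whose content changed, skipping regions the tester chose to ignore."""
    return [region for region, content in baseline.expected.items()
            if region not in baseline.ignored and actual.get(region) != content]


def review(baseline: Baseline, region: Region, actual: Dict[Region, str], verdict: Verdict) -> None:
    """Apply a single-click decision to one detected difference."""
    if verdict is Verdict.APPROVE:
        if region in actual:
            baseline.expected[region] = actual[region]  # the new look becomes the baseline
        else:
            del baseline.expected[region]              # the element was removed on purpose
    elif verdict is Verdict.IGNORE:
        baseline.ignored.add(region)                   # never compare this region again
    else:
        baseline.bugs.append(region)                   # keep the baseline, log a bug


header, clock, price = (0, 0, 800, 60), (700, 10, 90, 20), (100, 300, 80, 20)
baseline = Baseline({header: "h1", clock: "c1", price: "p1"})
run = {header: "h2", clock: "c2", price: "p2"}
review(baseline, header, run, Verdict.APPROVE)  # intended redesign
review(baseline, clock, run, Verdict.IGNORE)    # constantly changing area
review(baseline, price, run, Verdict.BUG)       # wrong price
print(differences(baseline, run))
```

A difference marked as a bug keeps failing until the application is fixed, while approved and ignored regions stop failing the test.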

Do as good a job as a human tester

Without intelligent visual verification, NO test can cover what a human will see. You can have a thousand passing Selenium tests and still have a broken UI because a stylesheet was changed, the wrong image was deployed, some text is wrong, or a button is not visible to the user. A human tester can easily see these issues, which is why the majority of testing is still done by manual testers.

Screenster’s visual testing is the best in the world. Really! There’s nothing like it. Here’s why. The vast majority of tools don’t even bother with visual testing, so the bar is very low. The few that advertise “screenshot comparison” rely on pixel-by-pixel comparison to a stored image, with a tolerance expressed as the percentage of pixels allowed not to match.

Ladies and gentlemen, while this is better than nothing, this approach is doomed by design, which is why nobody really uses it. A simple shift of all content down by a few lines due to an increased page margin will make virtually 100% of the pixels differ, failing every single test case. A moved panel on a page will break it too. Anti-aliasing will cause failures. Meanwhile, small changes, even meaningful ones like a missing label or number, will not be detected if they fall below the allowed threshold.
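To make the argument concrete, here is a small sketch of the pixel-by-pixel approach with a percentage tolerance. This is the technique being criticized here, not Screenster’s. The 20x20 “page” and the 5% tolerance are made up for illustration.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values


def mismatch_ratio(a: Image, b: Image) -> float:
    """Fraction of pixel positions whose values differ (both images the same size)."""
    total = sum(len(row) for row in a)
    diff = sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return diff / total


def naive_compare(a: Image, b: Image, tolerance: float = 0.05) -> bool:
    """Pixel-by-pixel check: pass if no more than `tolerance` of the pixels differ."""
    return mismatch_ratio(a, b) <= tolerance


# A 20x20 "page" of alternating dark and light rows, a crude stand-in for lines of text.
baseline = [[0 if y % 2 == 0 else 255] * 20 for y in range(20)]

# Failure mode 1: a bigger top margin pushes all content down by one row.
shifted = [[255] * 20] + baseline[:-1]

# Failure mode 2: a small but meaningful change, e.g. a 2x3-pixel label goes missing.
missing_label = [row[:] for row in baseline]
for y in range(2):
    for x in range(3):
        missing_label[y][x] = 128

print(mismatch_ratio(baseline, shifted), naive_compare(baseline, shifted))              # 1.0 False
print(mismatch_ratio(baseline, missing_label), naive_compare(baseline, missing_label))  # 0.015 True
```

A one-row shift fails the comparison with every pixel different. A missing label that touches only 1.5% of the pixels passes.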

Screenster is able to handle all cases above and more because of its sophisticated visual comparison algorithms and because it combines DOM with the screenshot. It understands that a group of pixels is text, and that a group of characters is a date, and then applies higher level verifications accordingly. So a “current date” that keeps changing every time you run the test does not cause visual verification failure.
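As an illustration of the “a group of characters is a date” idea, here is a sketch of a text comparison that treats any valid date as a dynamic value while still checking that it really is a date. It assumes the text has already been extracted (for example, from the DOM). The recognized formats are a hypothetical, deliberately short list.

```python
import re
from datetime import datetime

# Hypothetical date formats to recognize; a real tool would need many more (and locales).
DATE_PATTERNS = [
    (re.compile(r"\b\d{4}-\d{2}-\d{2}\b"), "%Y-%m-%d"),  # 2018-03-14
    (re.compile(r"\b\d{2}/\d{2}/\d{4}\b"), "%m/%d/%Y"),  # 03/14/2018
]


def mask_dates(text: str) -> str:
    """Replace every valid date with <DATE>; date-shaped strings that aren't real dates stay as-is."""
    for pattern, fmt in DATE_PATTERNS:
        def replace(match):
            try:
                datetime.strptime(match.group(0), fmt)
            except ValueError:
                return match.group(0)  # e.g. 2018-13-45: compare it literally
            return "<DATE>"
        text = pattern.sub(replace, text)
    return text


def text_matches(expected: str, actual: str) -> bool:
    """Compare text exactly, except that any valid date may differ from any other valid date."""
    return mask_dates(expected) == mask_dates(actual)


print(text_matches("Report generated 2018-03-14", "Report generated 2018-04-02"))  # True
print(text_matches("Total: 42 items", "Total: 41 items"))                          # False
print(text_matches("Due 2018-03-14", "Due 2018-13-45"))                            # False
```

The check stays strict everywhere else: a changed number still fails, and a garbled “date” is compared literally instead of being waved through.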

Screenster handles moved content, shifted elements, and dynamic areas that are constantly changing. This magic reduces false positives by 90% while doing a very strict verification, not missing changes that a tired human will overlook.
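One ingredient of handling moved content can be sketched as alignment before comparison. Try a few vertical offsets and compare at the one that fits best, so a shifted page is not reported as 100% different. This is a hypothetical, whole-page, vertical-only simplification, not Screenster’s algorithm, which, as described above, also draws on the DOM.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of grayscale pixel values


def mismatch_at_offset(a: Image, b: Image, dy: int) -> float:
    """Fraction of differing pixels when row y of `a` is compared with row y + dy of `b`."""
    pairs = [(a[y], b[y + dy]) for y in range(len(a)) if 0 <= y + dy < len(b)]
    total = sum(len(ra) for ra, _ in pairs)
    diff = sum(pa != pb for ra, rb in pairs for pa, pb in zip(ra, rb))
    return diff / total if total else 1.0


def best_alignment(a: Image, b: Image, max_shift: int = 5) -> Tuple[int, float]:
    """Search vertical shifts in [-max_shift, max_shift]; return (shift, mismatch) with the least mismatch.

    Ties go to the smaller absolute shift, so an unmoved page is reported as shift 0.
    """
    candidates = [(dy, mismatch_at_offset(a, b, dy)) for dy in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda c: (c[1], abs(c[0])))


page = [[y * 10] * 8 for y in range(20)]           # every row distinct
moved = [[255] * 8 for _ in range(2)] + page[:-2]  # content pushed down by 2 rows
print(best_alignment(page, moved))                 # (2, 0.0)
```

Once the pages are aligned, any remaining mismatch points at real changes rather than at the shift itself.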

Joy to use

Let’s face it, testing is not fun. Testing the same UI over and over again is a mind-numbing and degrading activity. Coding simple Selenium tests is not at all challenging for a good developer; it takes a long time and adds no business value.

We built a product that’s fast, simple, and awesome by design. It frees up humans to do the tasks they are supposed to be doing, and that freedom makes Screenster a joy to use. Check it out and try to tell us that Selenium is a better option. I dare you :)

The proof of the pudding is in the eating

There’s a lot that I can say about how awesome Screenster is. But the truth is, the only way to see if it will work for you is to try it out. And you can do it for free by going to our website and trying our online demo. I’m sure you’ll enjoy Screenster, and our team will greatly appreciate your feedback.

Screenster vs classic Record/Playback IDEs was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
