
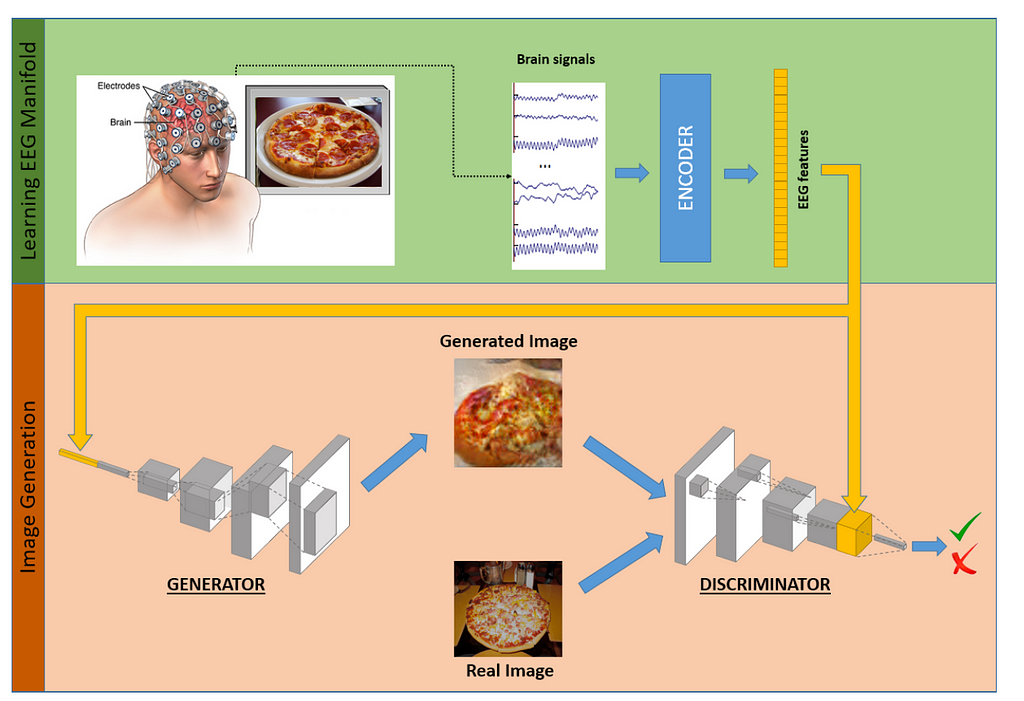

The method consists of an “EEG-in/image-out” processing pipeline, in which EEG signals given to a Generator are converted into realistic images.

Images generated using a Conditional GAN

Just an intro into GAN!


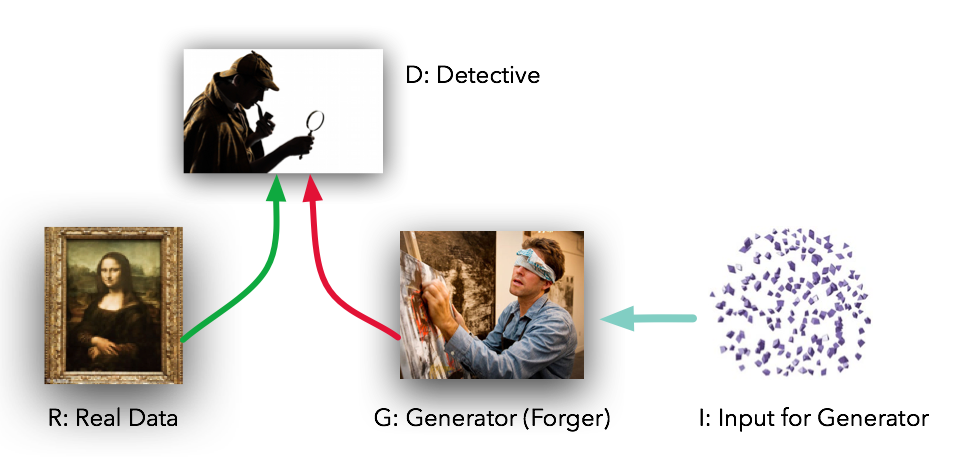

Generative Adversarial Networks (GANs) are used to generate new data that follows the same distribution as the training dataset, starting from random noise. In our case, that means creating images similar to those in our training dataset.

A GAN consists of two networks, a Generator and a Discriminator:

- the Generator tries to create images that look like training-set images, starting from random noise,

- while the Discriminator checks whether a given image is a real training-set image or one produced by the Generator.
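To make the two roles concrete, here is a minimal NumPy sketch of the binary cross-entropy losses commonly used to train each network. It assumes the Discriminator outputs a probability that its input is real; the function names are hypothetical, and the Generator loss uses the widely used "non-saturating" form rather than the literal minimax term.

```python
import numpy as np

EPS = 1e-8  # avoids log(0)

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """D should output ~1 for real training images and ~0 for generated ones."""
    return float(-np.mean(np.log(d_real + EPS)) - np.mean(np.log(1.0 - d_fake + EPS)))

def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating G loss: G is rewarded when D scores its samples as real."""
    return float(-np.mean(np.log(d_fake + EPS)))

# Discriminator probabilities for a small batch (made-up numbers for illustration).
d_real = np.array([0.9, 0.8, 0.95])  # D's scores on real images
d_fake = np.array([0.1, 0.3, 0.2])   # D's scores on generated images
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake))
```

The two losses pull in opposite directions on `d_fake`: the Discriminator wants it near 0, the Generator wants it near 1, which is the "adversarial" part of the name.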

Is that enough?

With an ordinary GAN, we can generate realistic images from noise, but no condition is provided as input to the Generator, so we cannot choose which kind of image it produces.

In our case, the Generator needs to generate a realistic image given its corresponding EEG signal (the condition).

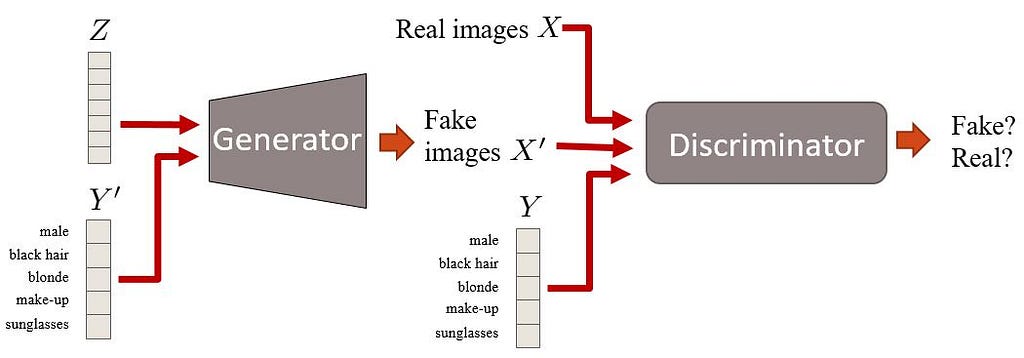

Example of a Conditional GAN

Conditional GAN!

The difference between a GAN and a Conditional GAN lies in the additional input y (the condition), which is passed to both the discriminator and the generator.
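Written out, the conditional GAN objective as it is commonly stated in the literature makes this explicit: both D and G receive y. Here x is an image, y its condition (in this article, the EEG feature vector), and z a noise vector drawn from a prior p_z; the symbols other than y are our notation, not the article's.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x \mid y)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z \mid y) \mid y)\big)\big]
```

Dropping y from every term recovers the objective of an ordinary, unconditional GAN.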

How it works with a Conditional GAN

Overview of the architecture design of the proposed EEG-driven image generation approach

Training the Generator

The generator network is trained in a conditional GAN framework to produce images from EEG features, so that each generated image matches its conditioning vector (the EEG features).
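A minimal sketch of what conditioning means for the generator: the noise vector z and the EEG feature vector are concatenated and mapped to an image. Everything here is an assumption for illustration: the sizes (100-dimensional z, 128-dimensional EEG features, 32×32 grayscale output), the single hidden layer, the untrained random weights, and the hypothetical function name `generate`. A real generator would be considerably deeper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes chosen for illustration only.
Z_DIM, EEG_DIM, HIDDEN, IMG_SIDE = 100, 128, 256, 32

# Randomly initialised weights of a toy two-layer generator (untrained).
W1 = rng.normal(0.0, 0.02, size=(Z_DIM + EEG_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(0.0, 0.02, size=(HIDDEN, IMG_SIDE * IMG_SIDE))
b2 = np.zeros(IMG_SIDE * IMG_SIDE)

def generate(z: np.ndarray, eeg_features: np.ndarray) -> np.ndarray:
    """Map a batch of (noise, EEG condition) pairs to images with pixels in [-1, 1]."""
    x = np.concatenate([z, eeg_features], axis=1)  # the condition enters as extra input
    h = np.maximum(0.0, x @ W1 + b1)               # ReLU hidden layer
    img = np.tanh(h @ W2 + b2)                     # tanh keeps pixels in [-1, 1]
    return img.reshape(-1, IMG_SIDE, IMG_SIDE)

batch = 4
z = rng.normal(size=(batch, Z_DIM))
eeg = rng.normal(size=(batch, EEG_DIM))  # stand-in for the EEG encoder's output
images = generate(z, eeg)
print(images.shape)  # (4, 32, 32)
```

Because the EEG features are part of the input, the same z paired with two different EEG vectors yields two different images; training is what makes that difference correspond to the visual content of the brain signal.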

Conditioning Vector?

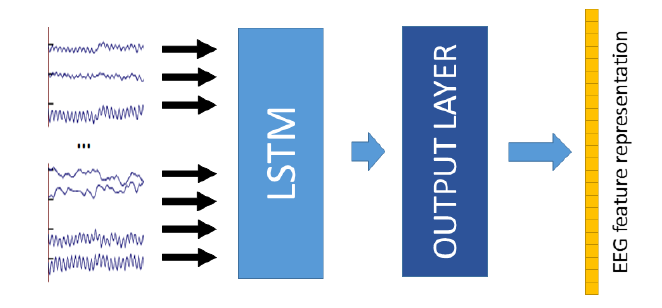

EEG feature encoder architecture.


Raw EEG signals are processed by an RNN-based encoder, which is trained to output a vector of what we call EEG features, containing visually-relevant and class-discriminative information extracted from the input signals.
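The encoder can be sketched as a recurrent network that reads the EEG recording one time step at a time and summarises it in its final hidden state, which a linear layer then turns into the EEG feature vector. This is a minimal, untrained NumPy sketch under assumed sizes (128 electrodes, a 64-unit hidden state, 128-dimensional features). A plain tanh RNN cell stands in for whatever recurrent cell (for example an LSTM) the original encoder uses, and the training that makes the features class-discriminative is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes for illustration.
N_CHANNELS, HIDDEN, FEAT_DIM = 128, 64, 128

W_xh = rng.normal(0.0, 0.1, size=(N_CHANNELS, HIDDEN))  # input -> hidden
W_hh = rng.normal(0.0, 0.1, size=(HIDDEN, HIDDEN))      # hidden -> hidden (recurrence)
b_h = np.zeros(HIDDEN)
W_out = rng.normal(0.0, 0.1, size=(HIDDEN, FEAT_DIM))   # final state -> EEG features
b_out = np.zeros(FEAT_DIM)

def encode_eeg(signal: np.ndarray) -> np.ndarray:
    """Encode one EEG recording of shape (time_steps, channels) into a feature vector."""
    h = np.zeros(HIDDEN)
    for x_t in signal:  # one time step = one sample from every electrode
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
    return h @ W_out + b_out  # linear projection of the sequence summary

recording = rng.normal(size=(200, N_CHANNELS))  # 200 time steps of fake EEG data
features = encode_eeg(recording)
print(features.shape)  # (128,)
```

Unlike averaging the signal over time, the recurrent state depends on the order of the samples, so temporal patterns in the EEG can shape the resulting features.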

Training the discriminator

A discriminative model then checks whether the generator's outputs are plausible images and whether they match the conditioning vector (the EEG features).

Instead of training the discriminator only with real images paired with correct conditions and fake images paired with arbitrary conditions (which would force it to learn, without any explicit supervision, to distinguish real images with correct conditions from real images with wrong conditions), we also provide a wrong sample: a real image paired with a wrong condition, namely the representative EEG feature vector of a randomly chosen different class.
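The three kinds of discriminator inputs can be sketched as follows. The wrong condition for each real image is the representative EEG feature vector of a randomly chosen different class. The loss follows the "matching-aware" discriminator scheme known from text-to-image GANs: only (real image, correct EEG) counts as real, while (real image, wrong EEG) and (fake image, correct EEG) count as fake and are averaged. How the original work weights these terms, the 40 classes, the per-class representative vectors (given here as a random array), and the function names are all assumptions.

```python
import numpy as np

EPS = 1e-8  # avoids log(0)

def sample_wrong_conditions(labels, class_features, rng):
    """For each sample, pick the representative EEG feature vector of a different class."""
    n_classes = class_features.shape[0]
    # An offset in [1, n_classes - 1] taken modulo n_classes never returns the true label.
    offsets = rng.integers(1, n_classes, size=len(labels))
    wrong_labels = (labels + offsets) % n_classes
    return class_features[wrong_labels], wrong_labels

def discriminator_loss(d_real_right, d_real_wrong, d_fake_right):
    """D's probabilities for the three pair types; only real/right should score high."""
    loss_real = -np.mean(np.log(d_real_right + EPS))
    loss_wrong = -np.mean(np.log(1.0 - d_real_wrong + EPS))
    loss_fake = -np.mean(np.log(1.0 - d_fake_right + EPS))
    return float(loss_real + 0.5 * (loss_wrong + loss_fake))

rng = np.random.default_rng(0)
class_features = rng.normal(size=(40, 128))  # hypothetical: one representative vector per class
labels = np.array([0, 5, 12, 39])
wrong_feats, wrong_labels = sample_wrong_conditions(labels, class_features, rng)
print(np.all(wrong_labels != labels))  # True
```

The modular offset guarantees a different class in a single draw, with no rejection loop, while keeping every other class equally likely.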

Reference

- Generative Adversarial Networks Conditioned by Brain Signals

Generating Images from Brain Signals was originally published in Hacker Noon on Medium.
