

CDN: Content Delivery Network

Let’s start with defining a CDN. A content delivery network (CDN) is a system of distributed servers that traditionally delivers web content to a user, based on the geographic locations of the user, the origin of the webpage and the content delivery server. I use the term traditionally because we’re entering an era where CDNs are doing more than just delivering web content.

An example would be Cloudflare Workers, which lets you use their CDN to run code at the edge, rather than just serve web pages / cached content. You are basically able to deploy and run JavaScript away from the origin server — allowing you to decouple code from a user’s device. According to Cloudflare, “these Workers also enable programmatic functionality for routing, filtering and responding to HTTP requests that would otherwise need to be run on a customer’s server at the origin.”

The main point is that CDNs and edge computing are continuously evolving — whereby the two are starting to meld together in an era where high scalability is paramount.

Melding Realtime Data Push with Realtime Data Pull

Many realtime applications (e.g., live sports scores, auctions, chat) need to work with data that is both pushed and pulled. Taken separately, data push and data pull are fairly straightforward: at initialization time, past content can be retrieved from a pull CDN, while new and future updates are pushed from a separate service.

But what if you could chain these mechanisms together?

Proxy Chaining with Fastly and Fanout

Fastly is an edge cloud platform that enables applications to process, serve, and secure data at the edge of a network. It is essentially highly scalable data pull and response, using a platform that can listen and respond to users’ needs in realtime. Like a traditional CDN, Fastly allows you to cache content, but it also lets you deliver application logic at the edge.

Fanout, on the other hand, is highly scalable data push: it serves as a reverse proxy that handles long-lived client connections and pushes data as it becomes available.

Both Fastly and Fanout work as reverse proxies, so it is possible to have Fanout proxy traffic through Fastly — rather than sending that traffic directly to your origin server. Together, this coupled system has some interesting benefits:

- High availability — If your origin server goes down, Fastly can serve cached data and instructions to Fanout. This means clients could connect to your API endpoint, receive historical data, and activate a streaming connection, all without needing access to the origin server.

- Cached initial data — Fanout lets you build API endpoints that serve both historical and future content, for example an HTTP streaming connection that returns some initial data before switching into push mode. Fastly can provide that initial data, reducing load on your origin server.

- Cached Fanout instructions — Fanout’s behavior (e.g. transport mode, channels to subscribe to, etc.) is determined by instructions provided in origin server responses (using a system of special headers called Grip). Fastly can subsequently cache these instructions and headers.

- High scalability — By caching Fanout instructions and headers, Fastly can further reduce the load on your origin server — bringing that processing logic closer to the edge.

Mapping the Network Flow

Using Fanout and Fastly, let’s map the network flow to see how these push and pull mechanisms could work together.

Let’s suppose there’s an API endpoint /stream that returns some initial data and then stays open until there is a new update to push. With Fanout, this can be implemented by having the origin server respond with instructions:

    HTTP/1.1 200 OK
    Content-Type: text/plain
    Content-Length: 29
    Grip-Hold: stream
    Grip-Channel: updates

    {"data": "current value"}

When Fanout receives this response from the origin server, it converts it into a streaming response to the client:

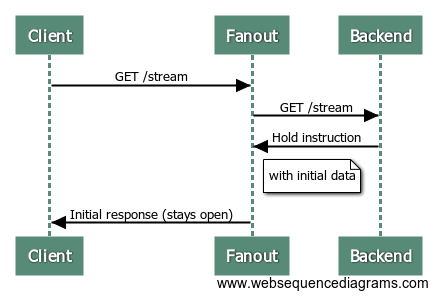

    HTTP/1.1 200 OK
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: Transfer-Encoding

    {"data": "current value"}

The request between Fanout and the origin server is now finished, but the request between the client and Fanout remains open. Here’s a sequence diagram of the process:

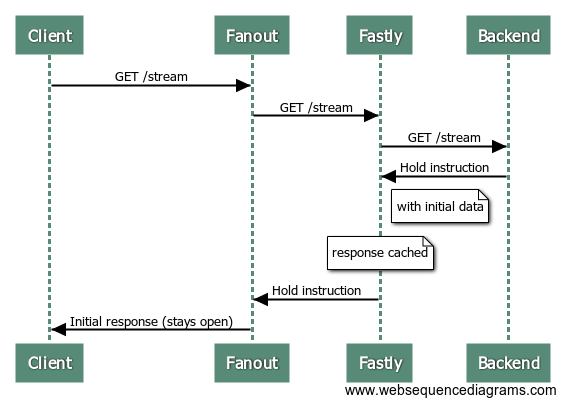
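The header exchange described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `build_origin_response` and `to_client_response` are hypothetical helper names, and the second function is a toy model of the kind of conversion the proxy performs, not Fanout's actual implementation.

```python
import json


def build_origin_response(value, channel="updates"):
    """Build the short-lived origin response that carries Grip instructions.

    Hypothetical helper: returns (status, headers, body) rather than
    writing to a real socket or web framework.
    """
    body = json.dumps({"data": value}) + "\n"
    headers = {
        "Content-Type": "text/plain",
        "Content-Length": str(len(body.encode("utf-8"))),
        "Grip-Hold": "stream",    # ask the proxy to hold the connection open
        "Grip-Channel": channel,  # channel the held connection subscribes to
    }
    return 200, headers, body


def to_client_response(status, headers, body):
    """Toy model of the proxy step: strip Grip-* headers, switch to chunked.

    Returns the client-facing response plus the channels to subscribe to.
    """
    client_headers = {
        name: value
        for name, value in headers.items()
        if not name.lower().startswith("grip-")
        and name.lower() != "content-length"
    }
    client_headers["Transfer-Encoding"] = "chunked"
    channels = [
        c.strip() for c in headers.get("Grip-Channel", "").split(",") if c.strip()
    ]
    return status, client_headers, body, channels
```

The point of the sketch is that the origin only ever produces an ordinary, finite response; everything long-lived happens between the proxy and the client.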

Since the request to the origin server is just a normal short-lived request/response interaction, it can alternatively be served through a caching server such as Fastly.

Here’s what the process looks like with Fastly in the mix:

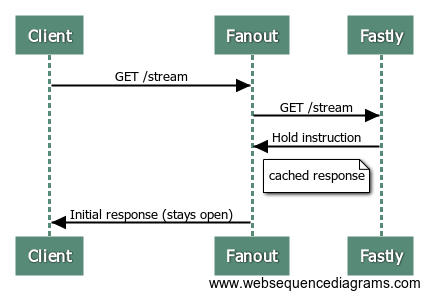

Now, when the next client makes a request to the /stream endpoint, the origin server isn’t involved at all:

In other words, Fastly serves the same response to Fanout, with those special HTTP headers and initial data, and Fanout sets up a streaming connection with the client.
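To make the cached flow concrete, here is a toy in-memory model. `Origin` and `EdgeCache` are hypothetical stand-ins for the origin server and for a caching layer such as Fastly; a real cache also deals with TTLs, cache keys, and headers that this sketch ignores.

```python
class Origin:
    """Stand-in origin server that counts how often it is asked."""

    def __init__(self, value="current value"):
        self.value = value
        self.hits = 0

    def handle(self, path):
        self.hits += 1
        return {
            "headers": {"Grip-Hold": "stream", "Grip-Channel": "updates"},
            "body": '{"data": "%s"}\n' % self.value,
        }


class EdgeCache:
    """Stand-in caching proxy: serve from memory, fall back to the origin."""

    def __init__(self, origin):
        self.origin = origin
        self.store = {}

    def get(self, path):
        if path not in self.store:
            self.store[path] = self.origin.handle(path)
        return self.store[path]

    def purge(self, path):
        # Forget the cached copy so the next request goes to the origin.
        self.store.pop(path, None)


origin = Origin()
cache = EdgeCache(origin)
first = cache.get("/stream")   # cache miss: the origin is contacted
second = cache.get("/stream")  # cache hit: same instructions, no origin call
```

After both requests, `origin.hits` is 1, and the second client still receives the Grip headers and the initial data.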

Of course, this is only the connection setup. To send updates to connected clients, the data must be published to Fanout.
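As a rough sketch of what a publish might carry, the function below builds a JSON payload in the item format used by the GRIP publish protocol as I understand it (an `items` list, each item naming a channel and an `http-stream` format). The function name is hypothetical, and the publish endpoint and authentication are deliberately left out, so check the Fanout documentation before relying on this shape.

```python
import json


def build_publish_payload(channel, value):
    """Build a publish payload for HTTP streaming subscribers.

    Assumption: the payload shape follows the GRIP publish item format;
    no network request is made here.
    """
    content = json.dumps({"data": value}) + "\n"
    return {
        "items": [
            {
                "channel": channel,
                "formats": {"http-stream": {"content": content}},
            }
        ]
    }


payload = build_publish_payload("updates", "new value")
encoded = json.dumps(payload)  # body of the HTTP POST to the publish endpoint
```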

Purging the Fastly Cache

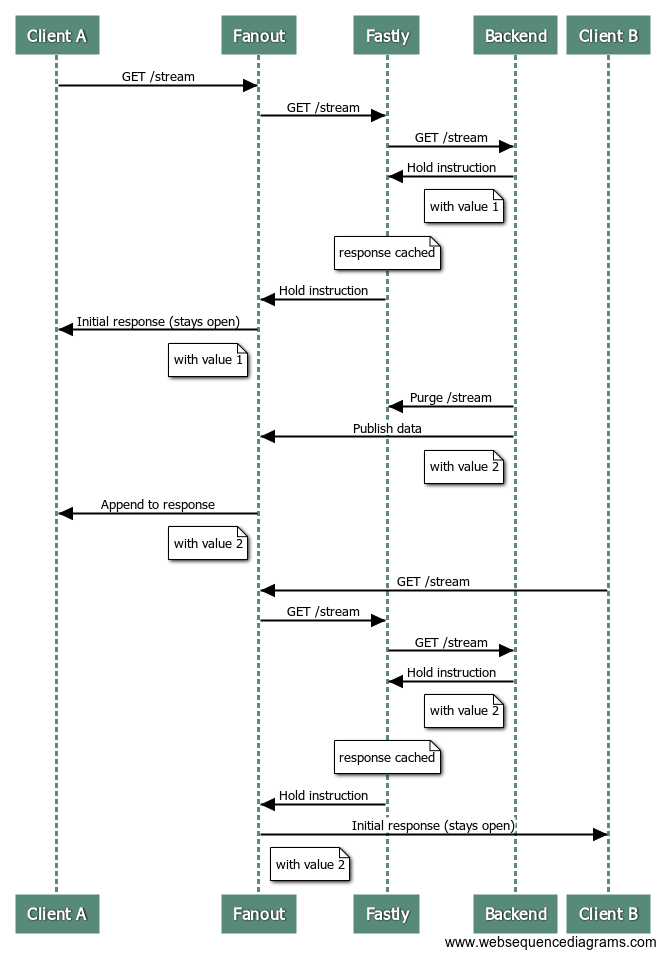

If an event that triggers a publish causes the origin server response to change, then we may also need to purge the Fastly cache.

For example, suppose the “value” that the /stream endpoint serves has been changed. The new value could be published to all current connections, but we’d also want any new connections that arrive afterwards to receive this latest value as well, rather than the older cached value. This can be solved by purging from Fastly and publishing to Fanout at the same time.
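One way to sequence the purge and the publish is sketched below. The `purge_cache` and `publish` callables are hypothetical placeholders for whatever Fastly and Fanout client code you use. The ordering is my own assumption rather than something the services require: updating the origin first and purging before publishing means a client that connects in between fetches the new value instead of a stale cached one.

```python
def apply_update(state, new_value, purge_cache, publish,
                 path="/stream", channel="updates"):
    """Update the origin value, then purge the cache, then publish.

    `state` is a plain dict standing in for the origin's data store.
    """
    state["value"] = new_value   # 1. the origin now serves the new value
    purge_cache(path)            # 2. new connections stop seeing the old copy
    publish(channel, new_value)  # 3. existing connections receive the update
```

With this order, the worst case for a client connecting mid-update is receiving the new value twice (once as initial data, once as a push), which is usually easier to tolerate than missing it.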

This sequence diagram illustrates a client connecting, receiving an update, and then another client connecting:

Effectively Handling Rate-Limiting

If your publishing data rate is relatively high, then this can negate the caching benefit of using Fastly.

The ideal data for harnessing Fastly’s cache effectively is data that is:

- Accessed frequently: many new visitors per second

- Changed frequently: updates every few seconds or minutes

- Delivered instantly: in milliseconds

An example of this would be a live blog, whereby most requests can be served and handled from cache.

However, if your data changes multiple times per second (or has the potential to change that fast during peak moments), and you expect frequent access, you really don’t want to be purging your cache multiple times per second.

The workaround is to rate-limit your purges. For example, during periods of high throughput, you might purge and publish at a maximum rate of once per second or so. This way, the majority of new visitors can be served from cache, and the data will be updated shortly after.
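A minimal sketch of such a rate limiter follows. It coalesces rapid updates and forwards only the latest value, at most once per interval. `RateLimitedUpdater` and its `flush` callback are hypothetical names; in practice `flush` would perform the purge and publish, and `tick()` would be called from a timer so the last pending value is never stranded.

```python
import time


class RateLimitedUpdater:
    """Forward the most recent value at most once per `min_interval` seconds."""

    def __init__(self, flush, min_interval=1.0, clock=time.monotonic):
        self._flush = flush
        self._min_interval = min_interval
        self._clock = clock
        self._last_flush = None
        self._pending = None
        self._has_pending = False

    def update(self, value):
        """Record a new value and flush it right away if the limit allows."""
        self._pending = value
        self._has_pending = True
        return self.tick()

    def tick(self):
        """Flush the pending value if enough time has passed; return True if so."""
        if not self._has_pending:
            return False
        now = self._clock()
        if (self._last_flush is not None
                and now - self._last_flush < self._min_interval):
            return False
        value = self._pending
        self._has_pending = False
        self._last_flush = now
        self._flush(value)
        return True
```

Intermediate values are dropped on purpose: for something like a counter, only the latest state matters, so new visitors are served from cache and catch up within roughly one interval.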

Demo

You can reference the GitHub source code for the Fastly/Fanout high-scale Live Counter demo. Requests first go to Fanout, then to Fastly, then to a Django backend server that manages the counter API logic. Whenever a counter is incremented, the Fastly cache is purged and the data is published through Fanout. The purge and publish process is also rate-limited to maximize the caching benefit.

Final Thoughts: The Emergence of a Messaging CDN?

Broadly speaking, we could define a messaging content delivery network as a geographically distributed group of servers which work together to provide near realtime delivery of dynamic data and web content.

This new genre of CDN could allow data processing to take place at the edge, away from an app’s origin — thereby ushering in a new era of realtime computing that is both affordable and scalable.

Powering Your App With A Realtime Messaging CDN was originally published in Hacker Noon on Medium.
