How I programmed my way into making an acceptable painting.

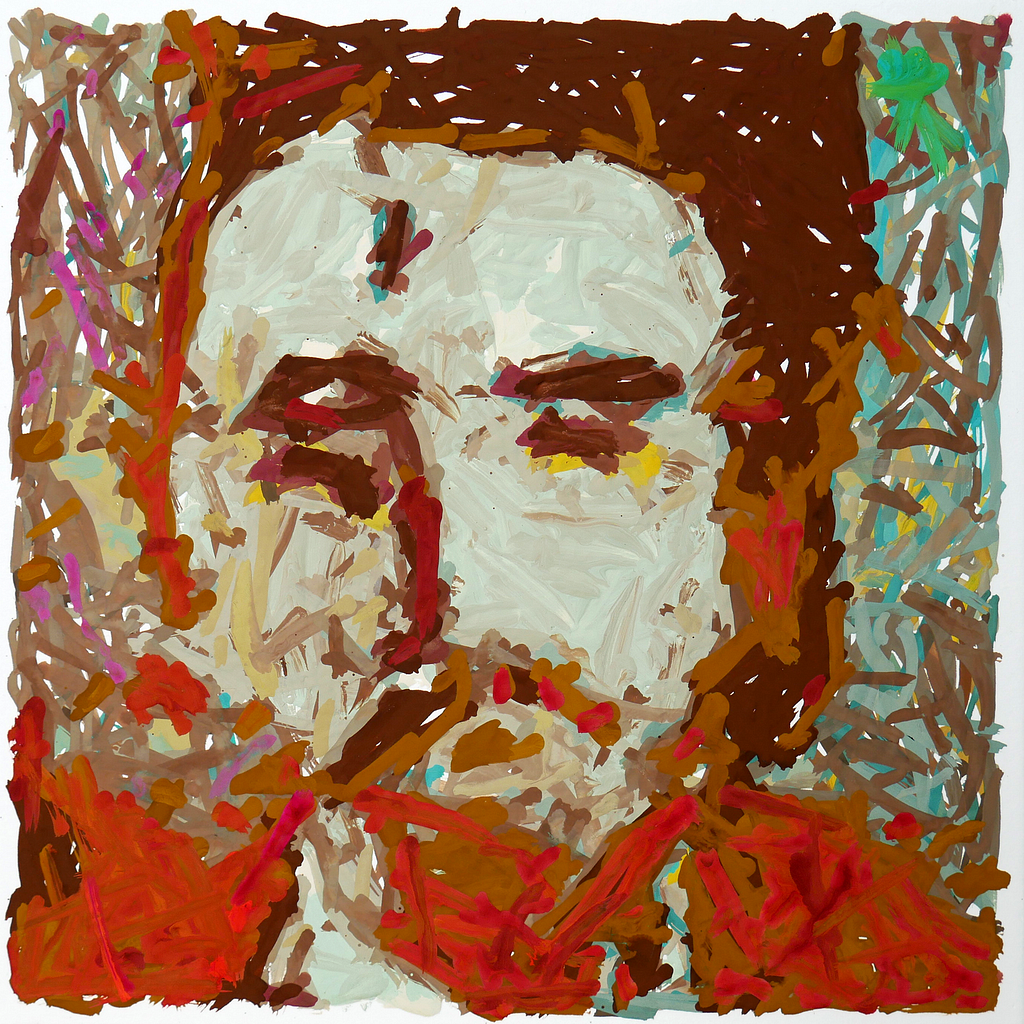

“028749_0001_08”, my submission to Robotart 2018. 20cm x 20cm, gouache on watercolor paper.

The image above is a physical painting I submitted to the Robotart 2018 competition. It was generated by a set of computer programs and AIs that I created and/or trained, and painted by a robotic arm. I’m happy with the result, and would greatly appreciate it if you would vote in the competition.

I first heard of Robotart.org a couple years ago. At the time, I was dabbling with the idea of using a robot or some form of software to assist me in painting. After almost 40 years of being completely non-artistic, I had taken an interest in art, and had been following some online courses to teach myself how to draw and paint. The results were better than I expected to achieve, but staggeringly short of what I wanted to produce. Being a lifelong programmer, I thought: if there’s a way to improve my results with software, I want to try that.

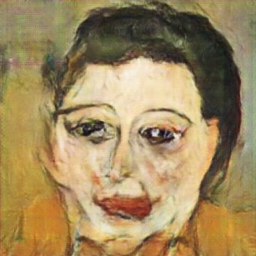

A hand-made painting I did in 2015. Let’s just say… I’m not very good.

About nine months ago, I got access to a robot lab here in Melbourne (Australia), and jumped at the opportunity with the goal of submitting to Robotart 2018.

My goals for this project were to answer two questions: can I use a robot to create work I would be proud to display? And can a robot help achieve my creative goals? The answer to both of these questions, based on my first result, is a definite yes.

Contents

The Source: AI-Generated images

The Destination: Robot Instructions

The Journey: Physical Reality

The Reality: The Final Painting

The Source: AI-Generated images

“028749_0001_08”, the image that became the painting.

Until recently, my plan was to enter a portrait based on a photograph in the “Re-interpreted Artwork” category. But after playing with some of the amazing AI image generation algorithms that have been created in the past few years, I decided to try my hand in the “Original Artwork” category by creating an image from scratch using AI.

The source image to be painted was created by a deep convolutional generative adversarial network (DCGAN) whose architecture is based on the 2015 paper “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks” by Alec Radford, Luke Metz, and Soumith Chintala. I used the TensorLayer implementation from GitHub. “028749_0001_08” is the auto-generated name my network gave to the image. Apologies for the tiny image, but the network only creates 64x64 pixel images!

I trained the network to generate portraits in a style I was looking to produce. I did this by training it on several thousand painted male and female portraits that I like. I selected, filtered, hand-edited, and augmented them as I improved the training process. The final training set was about 100,000 training images after augmentation. After weeks of preparation and trial and error, final training and image generation took approximately seven days on a machine learning cloud server (an AWS p2.xlarge instance).
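The text does not say which augmentations were used to grow several thousand portraits into roughly 100,000 training images. As a rough illustration only, the sketch below assumes two common ones, horizontal mirroring and shifted crops, applied to an image stored as a nested list of pixel values. The function names `mirror`, `crops`, and `augment` are made up for this example.

```python
from typing import List

Image = List[List[int]]  # rows of pixel values; a stand-in for real image data


def mirror(image: Image) -> Image:
    """Flip the image left to right."""
    return [list(reversed(row)) for row in image]


def crops(image: Image, size: int) -> List[Image]:
    """Return every size x size window of the image, scanning row by row."""
    rows, cols = len(image), len(image[0])
    return [
        [row[c:c + size] for row in image[r:r + size]]
        for r in range(rows - size + 1)
        for c in range(cols - size + 1)
    ]


def augment(image: Image, size: int) -> List[Image]:
    """Combine mirroring and cropping: one source image becomes many variants."""
    variants: List[Image] = []
    for source in (image, mirror(image)):
        variants.extend(crops(source, size))
    return variants


tiny = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
variants = augment(tiny, size=2)  # 4 crops of the original + 4 of the mirror
```

Even on this 3x3 toy image, one source becomes eight training samples, which shows how a modest hand-picked set can be multiplied many times over.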

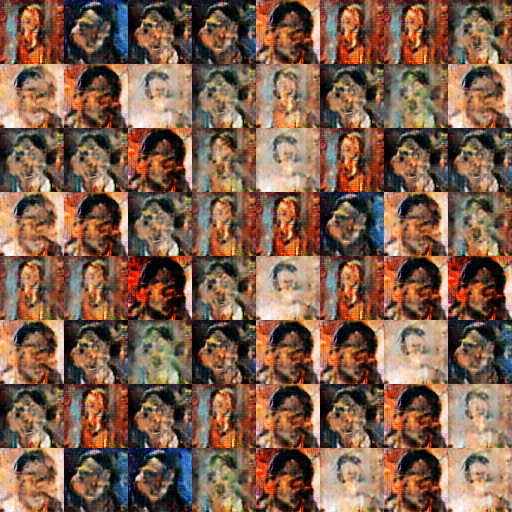

Playing with the DCGAN was fun, but also frustrating at times. It’s interesting to see how changes in the training set influence the output, but it’s also difficult to understand why a particular trained network outperforms another. Because of my tiny training set, I encountered many instances of so-called “GAN mode collapse”, in which the generator side of the network “figures out” how to generate just a few archetypal images that pass the discriminator’s test. The two sides can then get into a positive feedback loop that leads to weird results:

This set of images was from a state of GAN collapse. Scary stuff.

And cases of direct forgery, where the generator infers so much from the discriminator that it learns how to create forgeries:

DCGAN-generated image in mode collapse.

“The Twins” by Boris Grigoriev (1922), part of the training set.

After a LOT of trial and error, I got a few OK results, but they were winnowed down from a mountainload of junk. I’m sure that a much larger training set, and better fine tuning of the network parameters, would produce better results.

After seeing it produce “forgeries” like the one above, I built a program to detect whether any of the images getting through resembled images in the training set. I wanted to make sure I wasn’t just seeing a collapsed GAN regurgitating items that it inferred from the discriminator’s feedback. 028749_0001_08 had a low forgery score, and I double-checked by manually scanning back through every image in the training set. The training images with the highest forgery match score, which also seemed the most visually similar, were:

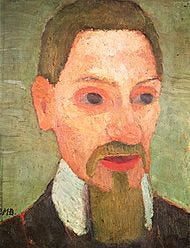

“Rainer Maria Rilke” by Paula Modersohn-Becker (1906)

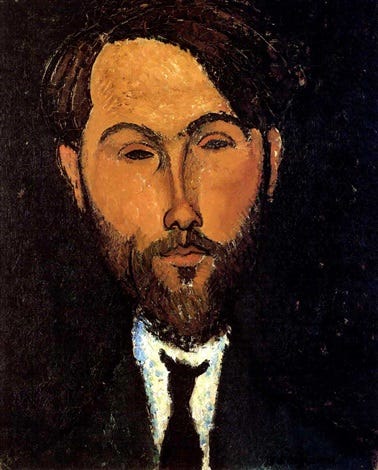

“Portrait De Leopold Zborowski” by Amedeo Modigliani (1917)

There are similarities — facial hair, collar, Zborowski’s hairline, painting texture. I certainly consider Modigliani’s Leopold Zborowski portraits to be a significant “influence” on this work — I love those portraits and had several of them in the training set — but the produced work appears to be quite independent of any of the images in the training set.
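The forgery check described above is not shown in detail, so here is a minimal sketch of one way such a score could work. It assumes each image is a flattened list of grayscale values in [0, 1] and uses mean absolute pixel difference as the similarity measure. Both the measure and the names `similarity` and `forgery_score` are assumptions for illustration, not the actual program.

```python
from typing import List, Sequence, Tuple

Pixels = Sequence[float]  # flattened grayscale image, values in [0, 1]


def similarity(a: Pixels, b: Pixels) -> float:
    """Return 1.0 for identical images and 0.0 for maximally different ones."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    mean_abs_diff = sum(abs(x - y) for x, y in zip(a, b)) / len(a)
    return 1.0 - mean_abs_diff


def forgery_score(candidate: Pixels, training_set: List[Pixels]) -> Tuple[float, int]:
    """Return (highest similarity, index of the closest training image)."""
    scores = [similarity(candidate, image) for image in training_set]
    best = max(range(len(scores)), key=scores.__getitem__)
    return scores[best], best


# Toy 4-pixel "images"; real inputs would be the 64x64 generator outputs.
training = [
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.5, 0.5, 0.5, 0.5],
]
candidate = [1.0, 0.9, 0.0, 0.1]
score, index = forgery_score(candidate, training)  # closest match is index 1
```

A high score flags a generated image for manual comparison against its closest training image. A raw pixel difference is crude, and a check on real paintings would probably compare learned features instead, but the thresholding idea is the same.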

I also created several dozen additional discriminator neural networks, trained to recognize paintings by subject and format as well as to recognize paintings that I may like. These networks were trained on about 20,000 paintings that I manually classified over a period of six months (it was my TV time activity). The discriminator networks were built in Keras on top of Karen Simonyan and Andrew Zisserman’s VGG network, and the top layers of each were trained for about two days.

The DCGAN network produced hundreds of thousands of images over a period of a few days. To speed the filtering process to select an image for this project, and to delegate as much decision-making to the AI as possible, I used the discriminator networks to filter the output of the DCGAN to only show me paintings it recognized both as portraits and in styles that I may like. Of these, a handful of images met my ideal parameters for this project.
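The filtering step above boils down to keeping only the images that every relevant classifier scores highly. A minimal sketch, assuming each classifier has already produced a probability per image: the labels, thresholds, sample names, and scores below are made up for illustration.

```python
from typing import Dict, List, NamedTuple


class Scored(NamedTuple):
    name: str
    scores: Dict[str, float]  # classifier label -> probability in [0, 1]


def passes(image: Scored, thresholds: Dict[str, float]) -> bool:
    """An image passes only if it meets the minimum score for every label."""
    return all(
        image.scores.get(label, 0.0) >= minimum
        for label, minimum in thresholds.items()
    )


def shortlist(images: List[Scored], thresholds: Dict[str, float]) -> List[str]:
    """Names of the images worth a human look."""
    return [image.name for image in images if passes(image, thresholds)]


# Hypothetical classifier outputs for three generated images.
generated = [
    Scored("sample_a", {"portrait": 0.95, "liked": 0.80}),
    Scored("sample_b", {"portrait": 0.99, "liked": 0.20}),
    Scored("sample_c", {"portrait": 0.40, "liked": 0.90}),
]
keep = shortlist(generated, {"portrait": 0.9, "liked": 0.7})
```

Requiring every threshold to be met keeps the shortlist small. Loosening a single threshold is an easy way to widen it if too few images survive.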

I personally selected 028749_0001_08 due to its uniqueness, framing, dissimilarity to any of the training set paintings, and color scheme. The AI-based filtering step turned out to be largely moot for this project, since I ended up viewing almost all of the produced images and really babysitting the process. But the idea of having auxiliary AIs that help with classification is one that I will continue to use when dealing with large output sets.

The output of this GAN is just a 64x64 pixel image. That’s it. It’s more of a thumbnail than a painting, but I was intrigued by this particular result:

“028749_0001_08”, the image the GAN generated that was the subject of the painting.

There was an intended next step: I trained another series of neural networks (based on the 2017 paper “Image-to-Image Translation with Conditional Adversarial Networks” by Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros) to add detail to painting-style images, so that they could grow in size while gaining “imagined details” filled in by the network. I ended up abandoning this step for this project because the network wasn’t performing well on portraits of my chosen style, but I intend to produce much larger-scale physical work in the future using this approach.

That network produced some interesting results on landscapes that I was working with, but not so much on the portraits I got out of the GAN:

A source landscape generated by another DCGAN instance I trained on landscapes.

“ENHANCE.” A trained AI upscale of the above image, filling in imagined details.

An AI upscale of 028749_0001_08. Better than most it produced in this particular run, but not really what I was going for and certainly not true to the source.

One of the rejected DCGAN portraits, upscaled below.

Above portrait, AI upscaled. I like this image, but it is objectively frightening.

I think the detail upscale approach has promise, and I’m very interested in making large scale paintings, so I’m keeping that in the toolbox. But it didn’t work out for this project. I’ll give it another go in the future with more training data and more time in training.

BEGAN / StackGAN

At the 11th hour, I did spend some time with more recent GANs, specifically BEGAN and StackGAN. They both render interesting results with the right training sets, but given my tiny training set and time constraints, I ran into a problem with each.

BEGAN gave nice smooth results as designed, but resulted in GAN collapse almost immediately given my training set and learning parameters:

BEGAN results after 73,000 steps

And StackGAN was incredibly slow to train, with this type of output after a full day of training:

StackGAN didn’t get off the ground, due to time constraints.

Maybe I did something wrong? I experimented with both of them with less than two weeks left before the competition deadline, and abandoned them prematurely. I think they both look really promising given the right training set and training time, but I had to get down to painting. I’ll revisit them in the future.

DeepDream / Neural Style

I played around with DeepDream and Neural Style, and immediately rejected them for this project. They are good for producing computer-based images that look like paintings, but I wanted that step to occur by using actual paint.

The Destination: Robot Instructions

My first several months of working with the robot arm, starting in July 2017, were about getting familiar with its capabilities, and validating that I could get it to produce somewhat human-looking brush strokes. I didn’t want to start messing with paint yet, so I experimented using Winsor & Newton BrushMarkers, which were really fun to work with. They are artist markers with a brush-style tip, which were ideal for testing brush strokes without worrying about paint mixing.

I didn’t produce anything terrific initially, but I felt pretty confident I could get usable results with a paint brush.

Final product of the above test run. Some good ideas, some bad ideas in here.

I got really busy with work, the submission deadline crept up on me, and by March 2018 I hadn’t even started using real brushes yet. I wanted a shortcut. I planned to sidestep the issue of paint mixing by using Aqua Brushes, paintbrushes with a built-in paint reservoir. I decided to settle on just 8 pre-mixed colors (the competition restriction) and paint with those.

That didn’t work well. AT ALL. My tests came out so poorly that I threw them out before even leaving the lab. Aqua brushes require way too much water for what I was going for, and the results were not good. I’m sure I could have gotten better results with a different medium, but based on my manual tests and the designs I had been building, I was committed to gouache by this point.

So I bit the bullet just a couple weeks before the deadline and decided I had to do it the old-fashioned way, with a traditional brush and paints. I had almost no time to test this in the lab, so most of the work was theoretical and done at home.

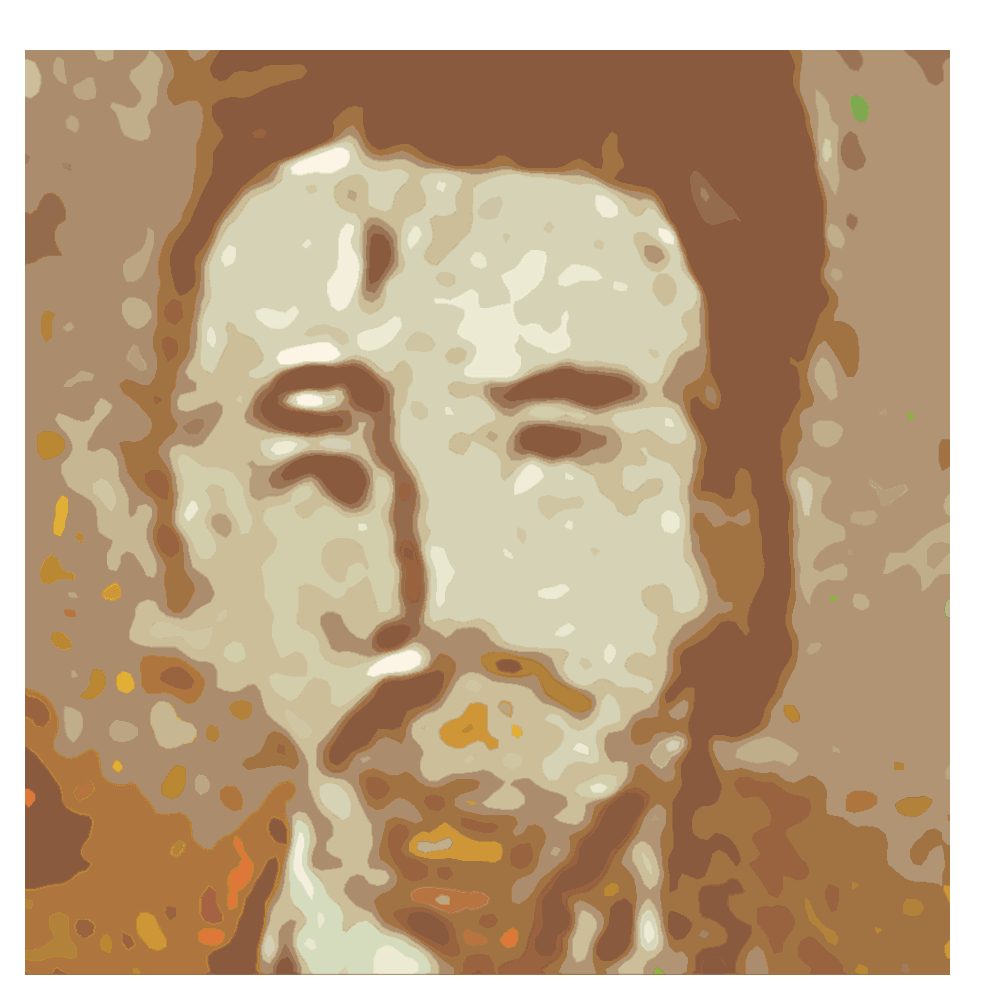

For the final step before attempting to paint 028749_0001_08, the image was translated into a final full series of ABB robot instructions using an algorithm I have been working on. It translates the input image into a series of brush stroke instructions based on a color palette. The color palette for this image was selected for colors that could be mixed by the robot in a small number of steps while preserving a consistent color scheme.

The target image for the image-to-brush-stroke algorithm after upscaling, smoothing, and color reduction.

The image-to-brush-stroke program is my only “from-scratch” technical contribution on this project; all of the other AI-based steps were largely based on pre-existing papers and code from the past few years. Writing and testing the image-to-stroke process has consumed hundreds of hours of my time over the past two years and is still very much a work in progress.

The only edits made to the source image during this entire project were at this step and were all purely mechanical: upscaling the DCGAN-generated image, color reduction to match the palette approximation, and mechanical smoothing of the image to improve the smoothness of the resulting brush strokes.
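Two of the mechanical edits listed above, upscaling and color reduction, are simple enough to sketch (smoothing is left out here). The example assumes nearest-neighbor upscaling and a nearest-color match by squared RGB distance. Neither choice is confirmed by the text, and the palette values are invented.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255
Image = List[List[Color]]


def upscale_nearest(image: Image, factor: int) -> Image:
    """Repeat every pixel `factor` times horizontally and vertically."""
    return [
        [pixel for pixel in row for _repeat_x in range(factor)]
        for row in image
        for _repeat_y in range(factor)
    ]


def nearest_palette_color(pixel: Color, palette: List[Color]) -> Color:
    """Pick the palette entry with the smallest squared RGB distance."""
    return min(
        palette,
        key=lambda color: sum((p - q) ** 2 for p, q in zip(pixel, color)),
    )


def reduce_colors(image: Image, palette: List[Color]) -> Image:
    """Replace every pixel with its closest palette color."""
    return [[nearest_palette_color(pixel, palette) for pixel in row] for row in image]


# Invented three-color palette: black, white, and a brown.
palette = [(0, 0, 0), (255, 255, 255), (120, 70, 30)]
source = [
    [(10, 10, 10), (250, 240, 230)],
    [(130, 80, 40), (100, 60, 20)],
]
reduced = reduce_colors(source, palette)
enlarged = upscale_nearest(reduced, factor=2)  # 2x2 image becomes 4x4
```

Plain RGB distance does not match how people perceive color, so a perceptual color space would likely give better matches when the palette is limited to what the robot can mix.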

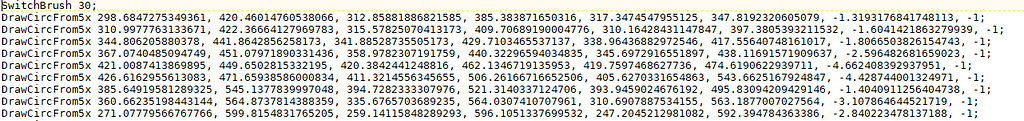

My process relied on a round-trip translation from ABB RAPID instructions (the programming language of the robot arm), to images, to an execution plan, and back to RAPID.

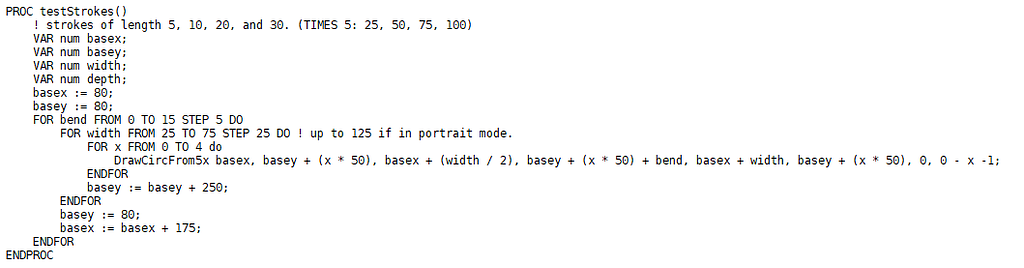

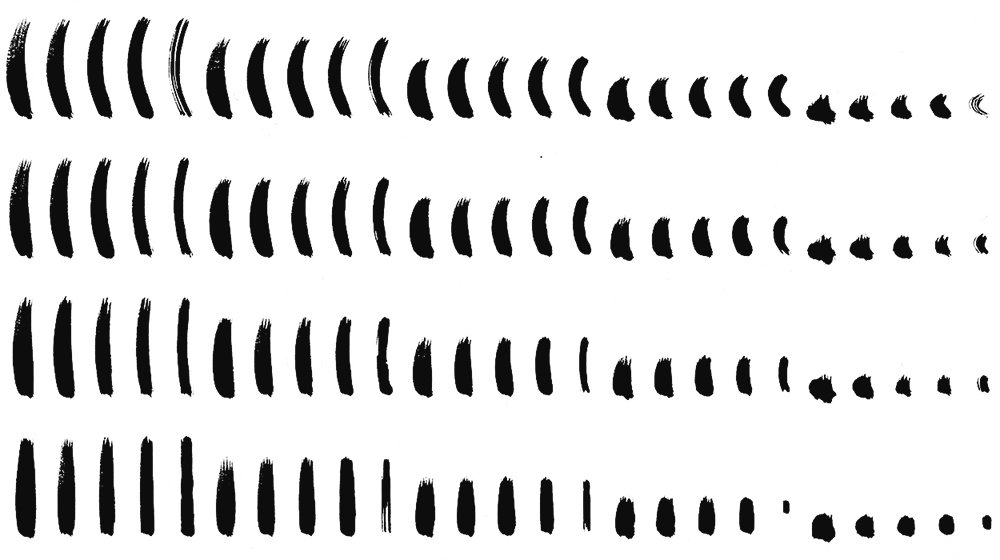

I started by capturing black gouache brush strokes based on a set of RAPID instructions to the ABB arm:

The RAPID code that created the test strokes.

The resulting captured strokes. A few failures in here that I had to drop from the training set.

I chopped these in Photoshop to create a training set for the stroke generator. These, along with paint-mixing instructions, were the inputs into the image-to-painting algorithm; it essentially used versions of the above strokes to compose the painting. My original plan was to use a much larger set of potential strokes, but again time got the better of me.

My original plan (formulated about 12 days before the deadline) was to start with a cream color, gradually mix it to a dark brown, and then use palette mixing for the remaining colors. It was an OK idea, but hopelessly uninformed by real-world robot tests due to time constraints.

I made a big mistake at this step: because of the way I set up the mixing instructions, the proportions I used in my manual mixing were way off what I would eventually get from the robot. As you can see in the final timelapse, I had to make several very last-minute adjustments to fix that.

Some paint mixing notes. This test was done manually, not with the robot arm, which caused a lot of problems in production.

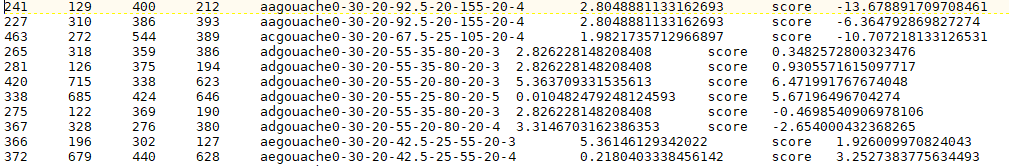

My planning program takes an input image, and a set of mixable colors with an execution order, and attempts to paint the image using robot arm instructions. It uses an organic process of trial-and-error, considering many potential brush strokes and selecting each stroke based on how it fits with the target image.
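The trial-and-error selection described above can be illustrated with a greedy loop: score every candidate stroke by how much it reduces the error against the target, apply the best one, and repeat until nothing helps. This is a toy sketch on a small grayscale grid with straight two-cell strokes, not the actual planner. The stroke shapes, the squared-error measure, and all names are assumptions.

```python
from typing import List, NamedTuple, Tuple

Grid = List[List[float]]  # grayscale canvas, 0.0 = dark, 1.0 = blank paper
Cell = Tuple[int, int]


class Stroke(NamedTuple):
    cells: Tuple[Cell, ...]  # canvas cells the stroke covers
    value: float             # paint value laid down


def candidate_strokes(rows: int, cols: int, length: int, palette: List[float]) -> List[Stroke]:
    """Every horizontal and vertical run of `length` cells, in every palette value."""
    shapes = []
    for r in range(rows):
        for c in range(cols - length + 1):
            shapes.append(tuple((r, c + i) for i in range(length)))
    for r in range(rows - length + 1):
        for c in range(cols):
            shapes.append(tuple((r + i, c) for i in range(length)))
    return [Stroke(shape, value) for shape in shapes for value in palette]


def gain(canvas: Grid, target: Grid, stroke: Stroke) -> float:
    """Reduction in squared error if the stroke were painted now."""
    return sum(
        (canvas[r][c] - target[r][c]) ** 2 - (stroke.value - target[r][c]) ** 2
        for r, c in stroke.cells
    )


def plan(target: Grid, palette: List[float], length: int = 2,
         max_strokes: int = 100, blank: float = 1.0):
    """Greedily pick the best-fitting stroke until no stroke improves the canvas."""
    rows, cols = len(target), len(target[0])
    canvas = [[blank] * cols for _ in range(rows)]
    candidates = candidate_strokes(rows, cols, length, palette)
    chosen: List[Stroke] = []
    for _ in range(max_strokes):
        best = max(candidates, key=lambda s: gain(canvas, target, s))
        if gain(canvas, target, best) <= 0:
            break
        for r, c in best.cells:
            canvas[r][c] = best.value
        chosen.append(best)
    return chosen, canvas


target = [
    [0.0, 0.0, 1.0],
    [1.0, 0.5, 1.0],
    [1.0, 0.5, 1.0],
]
strokes, canvas = plan(target, palette=[0.0, 0.5])
```

On this toy target the loop stops after two strokes because the canvas already matches. A real planner would sample candidates from captured stroke shapes rather than enumerate them, and would have to respect the order in which colors are mixed.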

The code attempts to do some of the on-canvas mixing and blending a human would do, but I didn’t take this idea as far as I wanted given the time constraints.

The final output of the AI painting algorithm was a set of brush strokes along with palette mixing instructions for the robotic arm: approximately 6,000 strokes for the first attempt and 4,000 for the final painting. Rendering the instruction set using my image-to-brush-stroke code took 8 hours on my home PC.

Initial output of the image-to-brush-stroke code.

Translation of the initial output to RAPID instructions that were fed to the ABB arm.
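To give a feel for what translating a stroke into RAPID might look like, here is a hedged sketch that emits `MoveL` instructions for a single stroke: hover above the start, lower to the paper, follow the path, then lift. The coordinates, heights, tool orientation quaternion, and the use of the predefined `v100`, `z1`, and `tool0` data are all assumptions for illustration. The generated text has not been checked on a controller and is not the code used for the painting.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # (x, y) position on the paper, in millimetres

ORIENTATION = "[0,1,0,0]"  # assumed quaternion: tool pointing straight down
CONFIG = "[0,0,0,0]"       # assumed robot axis configuration
EXTERNAL_AXES = "[9E9,9E9,9E9,9E9,9E9,9E9]"  # no external axes in use


def move_l(x: float, y: float, z: float, speed: str = "v100", zone: str = "z1") -> str:
    """Format one linear move with an inline robtarget literal."""
    target = f"[[{x:.1f},{y:.1f},{z:.1f}],{ORIENTATION},{CONFIG},{EXTERNAL_AXES}]"
    return f"MoveL {target}, {speed}, {zone}, tool0;"


def stroke_to_rapid(points: Iterable[Point], paint_z: float = 0.0,
                    travel_z: float = 20.0) -> List[str]:
    """Hover over the start, paint through every point, then lift off."""
    path = list(points)
    if not path:
        return []
    first, last = path[0], path[-1]
    lines = [move_l(first[0], first[1], travel_z)]        # approach above the paper
    lines += [move_l(x, y, paint_z) for x, y in path]     # brush on the paper
    lines.append(move_l(last[0], last[1], travel_z))      # retract before travelling
    return lines


program = stroke_to_rapid([(10.0, 20.0), (30.0, 20.0)])
```

Keeping an explicit travel height between strokes matters in practice: a brush that moves even slightly too low on its way to the next position will leave a mark on the page.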

The Journey: Physical Reality

I did my first production run in two nights: Thursday, April 5, and Monday, April 9. I intended these to produce the final result, because I had no guarantee of lab time after April 9.

April 5 went OK. I realized almost immediately that my color mixing instructions were way too heavy on the cyan, and way too light on the burnt umber. I also realized the robot was going way too slow and that I had used too many strokes: only 1,800 of the initial 6,000 strokes were executed in three hours of painting. At that rate of 600 strokes per hour, the full set would have taken about ten hours. I was in panic mode.

When I got back in the lab on April 9, I learned that the ABB has a production mode that allows it to move much more quickly than I had been running it for months, so I tried that. The speed was great, and the strokes looked more “human”, but I made a lot of other mistakes during that first run: I had watered down the gouache so much that it was bleeding on the page, I had instructed the arm to rely far too heavily on burnt umber for color mixing, and there were too many layers involved.

It was effectively my first ever test run with actual paint, but I intended it to be my last. I would have submitted that painting, but a happy accident occurred. I had a one-line bug in my transition from pot-mixing to palette-mixing which caused the brush to move too low across the surface. I had actually tested this in a dry run, but hadn’t noticed the tip of the brush touching the surface of the page. In production, I ended up with a long brown stroke across the surface of the painting. A disaster!

So much wrong with the first attempt: terrible color mixing, overwatered paint, and that software bug that resulted in a long brown line coming out of 028749_0001_08’s mouth.

The Reality: The Final Painting

Luckily, I was able to get some last minute lab time on Tuesday, April 10, three days before the submission deadline. I tweaked the image-to-stroke generator to get more efficient brush strokes, which brought the stroke count down to 4,000 from 6,000 in the first run. I also re-designed the way paints were mixed to hopefully produce more interesting colors.

Visualization of the final execution plan for the robotic arm.

I kicked off the final stroke rendering that morning before work, and it finished a few minutes before I got to the lab.

Final production occurred in that one evening. It took 2.5 hours of setup & testing and just over 3 hours of painting. No breaks were intended during the painting process, but I had to stop a few times to make some last-minute changes to the paint mixing instructions.

My last-minute paint mixing approach resulted in lots of deviations from the source, but also a lot more variety in color. Some of this was intentional, some was not. Many elements of the final product are direct results of running out of time as the deadline approached, and unexpected consequences of my code, but I’m treating them as happy accidents.

Animated visualization of the robot execution plan, with the final product.

I like “organic inaccuracy” for the unexpected results it can produce. What’s the point of doing a physical painting with a robot if you just want it to behave like a printer?

The final run went much more smoothly than the previous two sessions:

Here’s the entire final production process in timelapse, with commentary:

By the end of the night, I was exhausted but glad that I produced a painting that was in line with what I wanted to create. I carried it home in two protective hands like it was the holy grail.

Here’s a closeup of the final product, showing the textures that the robot produced. After I submitted the painting, I manually finished the surface with wax to give the gouache some sheen and depth. I submitted the unwaxed version to the competition since the finish was not applied by robot.

The paints I used were Winsor & Newton Designers Gouache, using the unmixed colors Magenta, Spectrum Yellow, Cobalt Turquoise Light, and Burnt Umber, each mixed with a small amount of water. In addition to these primary colors, I manually pre-mixed a single base cream color using Zinc White, Spectrum Yellow, and Burnt Umber, which was placed in the first of the five source paint pots in the video. All other colors were mixed by the robot.

The painting was done on 300gsm hot press Fabriano Studio Watercolor paper on canvas stretcher boards. I used a single brush — a size 1 Winsor & Newton long-handled round Galeria brush.

This was a labor of love, and wow was it a labor! I’ve spent about 500 hours in total on development and testing to get to my first real robot painting. I’d love to be able to have a pet robot that produces a new painting for me every day, but I fear that day may be farther in the future than I hoped when I started this project.

I am happy with this painting and it met all of my goals for my first robot painting project. Unfortunately, access to robot systems in Melbourne is extremely limited so my ability to work on projects like this is very time-constrained. If you happen to know of anybody in Melbourne who can provide regular night-and-weekend access to an ABB-style robot arm, I would love an introduction!

I’d like to continue to work with AI-generated paintings, but my next plan is to train an AI to take more explicit instructions and concepts from me and translate them into physical paintings while we develop a style over time.

As to the question “is this art?”, I think the term “art” is almost too overloaded to use in such a question. For this project specifically, while it definitely achieved some creative goals I had, I approached it more as a technical proof-of-concept than as a personal expression. But I am going to display this painting in my home with a sense of pride and achievement, and that works for me.

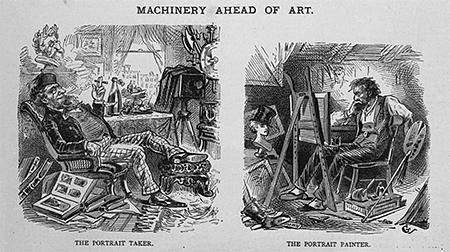

A cartoon from around 100 years ago, in the photography vs painting debate. (Source: Library of Congress collection)

Certainly the debate as to whether new pieces of disruptive technology can be used in art is nothing new. Reading about the debates that took place well over a hundred years ago around photography in the art world, for instance, gives me hope that one day soon the general public will fully accept robots and AI as just another tool that can be used for human expression — I think it’s inevitable.

If you read this far, thank you, and please vote in the competition! The competition is still very much under the radar — last year only about 3,000 voters registered — so your vote really counts!

I would like to give a very heartfelt thanks to Loren Adams, Tom Frauenfelder, Ryan Pennings, Chelle, Hans, and the entire team at the Melbourne School of Design Fablab, without whose support and encouragement this project simply would not have happened. Thank you to Michelangelo, the tireless ABB IRB 120 whose hand ultimately created this work. I would also like to thank Paul Peng, Djordje Dikic, and Rocky Liang of Palette, whose dedication to color was an inspiration during this project. Thanks to Cameron Leeson, Li Xia, Ryan Begley, Joseph Purdam, Rayyan Roslan, Steven Kounnas, Trent Clews de-Castella, Alex Handley, Maxine Lee, Abena Ofori, Heath Evans, and my brother Jon, who all helped me out greatly with resources of various kinds. Thanks to the great staff at Eckersley’s Art and Craft and Melbourne Artists’ Supplies for answering my many questions. And finally, thank you to my loving partner Mannie and my entire family for their support and putting up with me ranting about robot art for quite some time. This was fun, and I’m glad I did it. Thanks to Robotart.org for putting on this competition, which I hope will become part of a lively debate between humans and non-humans in years to come!

I Can’t Paint, But Meet My Robot was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
