Poe knows a fair bit about automating

There is a plethora of products that promise to help us run our application in the cloud. Unfortunately, none of them covers the entirety of what we need. Sometimes we need to bring our own server. Or they take care of hosting but we can’t access the underlying infrastructure.

So not only do we pay for them and bet on closed-source software, they also handle only a fraction of what we need.

I’m sharing a full Terraform and Docker configuration that lets you provision AWS and deploy Laravel / Nginx / MySQL / Redis / ElasticSearch from scratch, in a few commands. We’ll do this using infrastructure-as-code and configuration-as-code, so that the process is repeatable, shareable and documented.

A word about automating: automation is the only way we can grow our technical teams efficiently. The deeper we automate, the more standard our processes become. We replace our stray scripts with universally understood technologies like Docker, Terraform, Kubernetes, Consul, etc., and their design patterns. We can build them and hand them over to a new developer quickly. We can forget about them and come back months later with no brain freeze.

The full source code we need to deploy Laravel on AWS is publicly available on GitHub here:

Ready? Let’s start!

This is a procedure I use to deploy my clients’ Laravel applications on AWS. I hope this can be helpful to deploy yours. If your use case is more complex, I provide on-going support packages ranging from mentoring your developers up to hands-on building your application on AWS. Ping me at hi@getlionel.com

1. Clone the deployment scripts

We start by downloading the Terraform and Docker scripts we need to deploy Laravel. From our Laravel root directory:

git clone git@github.com:li0nel/laravel-terraform deploy

This will create a deploy directory at the root of our Laravel installation. For now, we’re going to use the Terraform scripts from that directory to create our infrastructure.

deploy
├── terraform                  # our Terraform scripts
│   ├── master.tf              # entrypoint for Terraform (TF)
│   ├── terraform.tfvars       # TF input variables
│   └── modules
│       ├── vpc                # VPC configuration
│       │   ├── vpc.tf
│       │   └── outputs.tf
│       ├── ec2                # EC2 configuration
│       │   ├── ec2.tf
│       │   └── outputs.tf
│       ├── aurora             # Aurora configuration
│       │   ├── aurora.tf
│       │   └── outputs.tf
│       └── s3                 # S3 configuration
│           ├── s3.tf
│           └── outputs.tf
├── cron                       # cron configuration for Docker
│   └── artisan-schedule-run
├── nginx                      # Nginx configuration
│   ├── default.conf
│   ├── nginx.conf
│   └── ssl                    # Nginx SSL certificates
│       ├── ssl.cert
│       └── ssl.key
├── php-fpm                    # PHP-FPM config
├── Dockerfile                 # Dockerfile for Laravel containers
├── Dockerfile-nginx           # Dockerfile for Nginx container
└── docker-compose.yml         # Docker Compose configuration

2. Configure our AWS command line

We start by authenticating our command line: we create a new user in the IAM section of our AWS console and download its API key and secret. This user needs permissions to create resources for all the services we will use below. Follow the prompts from:

aws configure

3. Update Terraform input variables

Terraform automatically loads input variables from any terraform.tfvars or *.auto.tfvars present in the current directory.
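As a minimal sketch of how those auto-loaded files can be used, the function below writes personal overrides into a separate `local.auto.tfvars` file instead of editing `terraform.tfvars`. The file name, the variable names `project_name` and `aws_region`, and their values are assumptions for illustration only; they have to match the variables actually declared in master.tf.

```shell
# Write personal overrides to local.auto.tfvars in the given directory.
# Values in *.auto.tfvars take precedence over those in terraform.tfvars.
write_local_tfvars() {
  target="${1:-.}/local.auto.tfvars"
  # Never clobber a file that is already there.
  if [ -e "$target" ]; then
    echo "refusing to overwrite $target" >&2
    return 1
  fi
  cat > "$target" <<'EOF'
# Hypothetical variable names: align them with the ones declared in master.tf.
project_name = "laravelaws"
aws_region   = "us-east-1"
EOF
}
```

Calling `write_local_tfvars deploy/terraform` would then leave the committed terraform.tfvars untouched while still changing what `terraform plan` sees.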

4. Build our infrastructure with Terraform

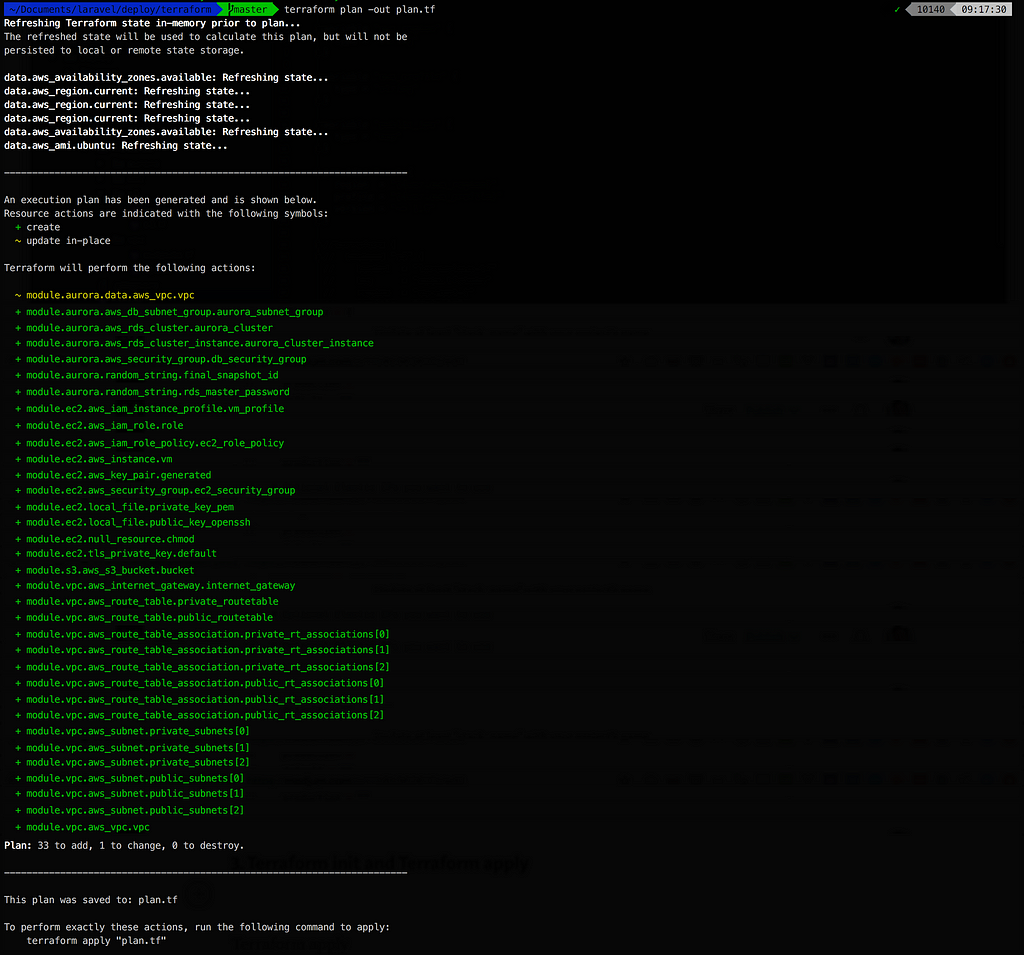

Let’s check what Terraform is about to build for us in our AWS account with terraform plan:

These are the 33 infrastructure parts needed to deploy Laravel on AWS. It would easily be ~60 of them once we include monitoring alerts, database replicas and an auto-scaling group.

Can you see how risky and painful it would be to set that up manually in the AWS console for every new project?

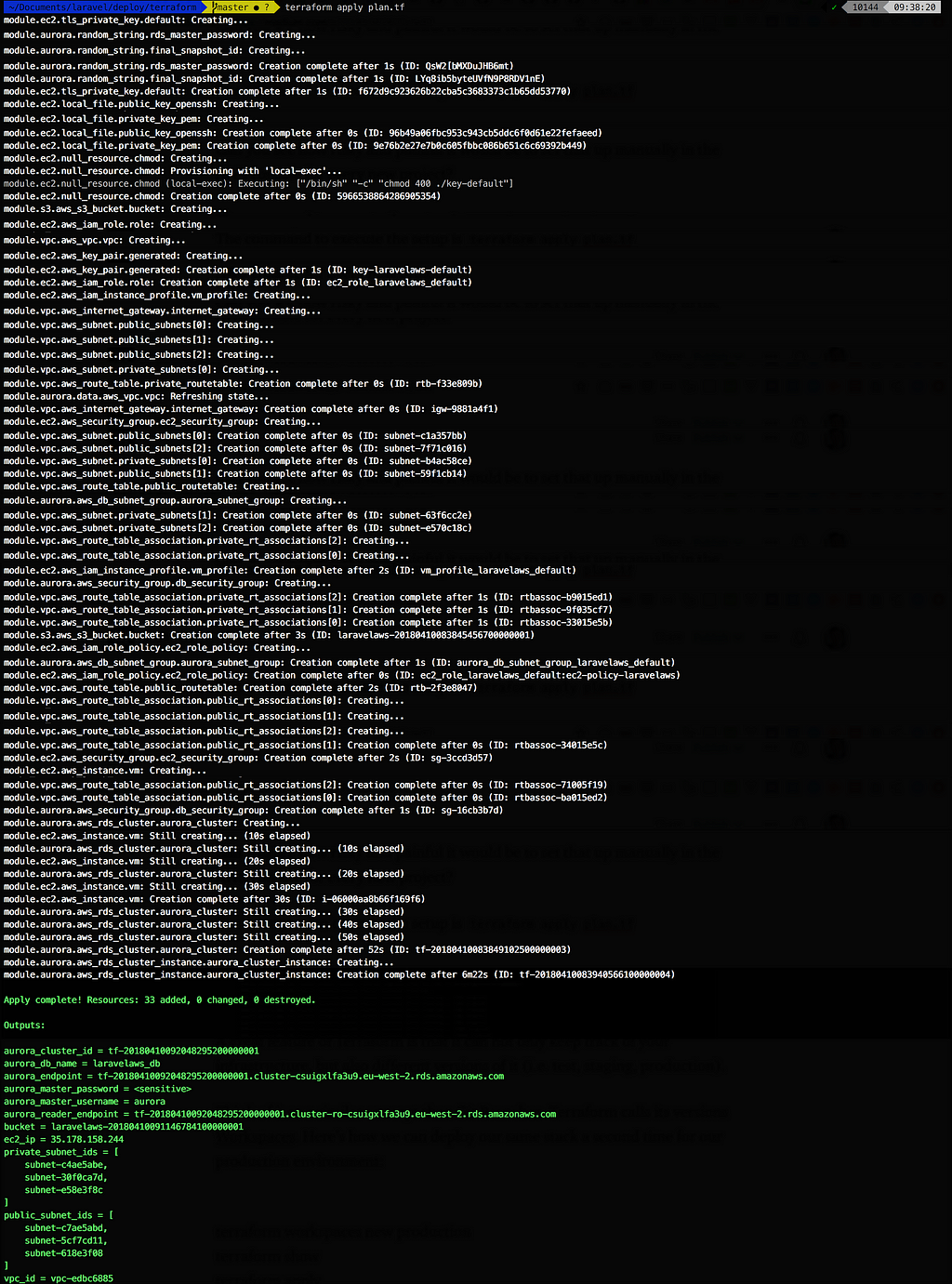

The command to execute the setup is terraform apply plan.tf
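The plan-then-apply cycle can be sketched as two small shell functions. This is an illustration under assumptions rather than the exact commands used in this guide: the function names and the default file name `laravelaws.tfplan` are made up here. Terraform reads every .tf file in the working directory as configuration, so the sketch gives the saved plan a different extension.

```shell
# Save the plan to a file so that what we review is exactly what gets applied.
save_plan() {
  plan_file="${1:-laravelaws.tfplan}"   # hypothetical file name
  terraform init &&
  terraform plan -out="$plan_file" &&
  terraform show "$plan_file"           # human-readable view of the saved plan
}

# Apply a previously saved plan. Terraform does not ask for confirmation
# when it is given a plan file, so review the plan before calling this.
apply_plan() {
  terraform apply "${1:-laravelaws.tfplan}"
}
```

Running `save_plan`, reading the output, then running `apply_plan` keeps the review step and the execution step separate.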

Terraform created our infrastructure in less than 8 minutes

Not only does Terraform make us extremely efficient, it is also a real pleasure to write infrastructure with. Look at an example of its syntax:

Two things to remember when using Terraform:
- We tag every resource we can with our project name, to identify them in the AWS console.
- We will set up Terraform to store our state file on S3 (more info in step 7 below) and make sure bucket versioning is enabled! If we don’t, and accidentally overwrite our state file, Terraform will lose track of our stack and we will need to go and manually delete everything in the console.

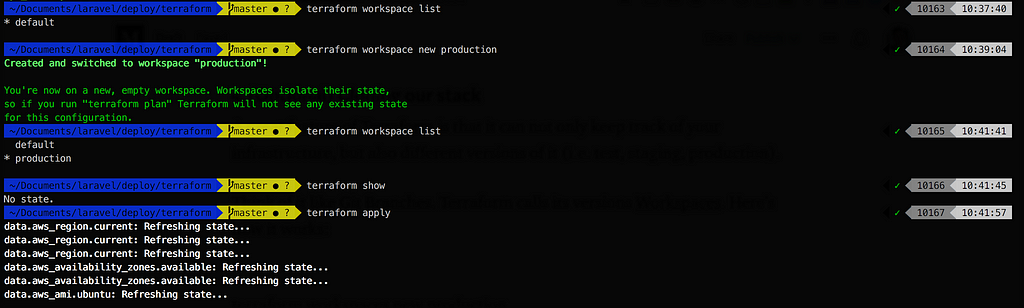

4. (Optional) Cloning our stack

A great feature of Terraform is that it can keep track not only of our infrastructure, but also of different versions of it (e.g. test, staging, production).

Think of it like Git Branches. Terraform calls its versions Workspaces. Here’s how it works:
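A rough sketch of that workflow with the `terraform workspace` subcommands follows. The function names are made up for this example, and `production` is just one possible workspace name.

```shell
# Create a new workspace (Terraform switches to it immediately),
# then list all workspaces; the active one is marked with an asterisk.
clone_stack() {
  workspace="${1:-production}"
  terraform workspace new "$workspace" &&
  terraform workspace list
}

# Switch back to an existing workspace, "default" unless told otherwise.
switch_stack() {
  terraform workspace select "${1:-default}"
}
```

Each workspace has its own state, so after `clone_stack production` a plan and apply builds a second, independent copy of the stack, and `switch_stack` returns us to the initial one.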

Creating the exact same stack, in production

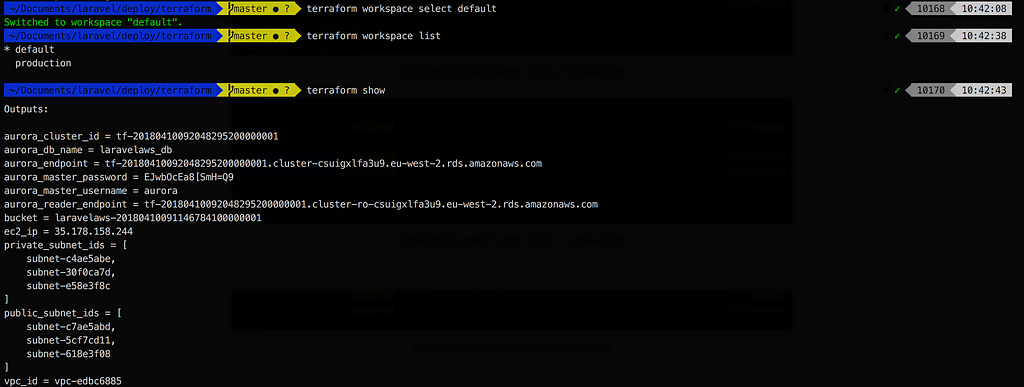

Switching back to our initial workspace

5. Install Docker on our EC2 instance using Docker Machine

Docker Machine is the single easiest way to provision a new Docker host or machine.

- it’s blazing fast
- the command line is dead simple
- it manages all of the SSH keys and TLS certificates, even if we have dozens of servers
- it makes our servers immediately ready for Docker deployments

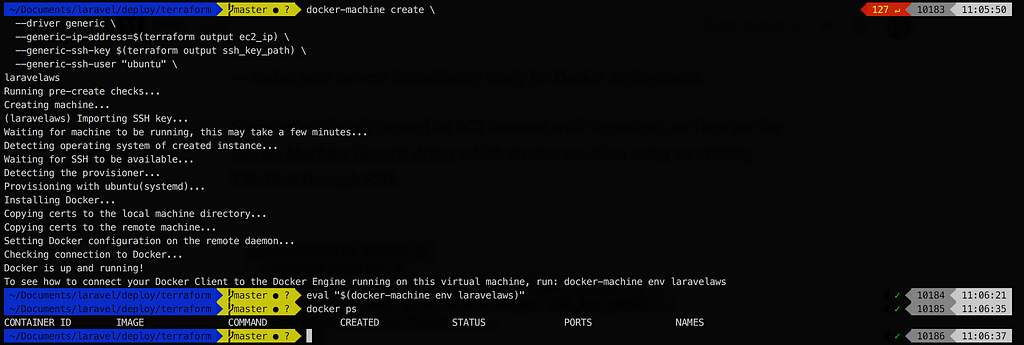

Since we’ve already created an EC2 instance with Terraform, we then use the Docker Machine Generic driver which creates machines using an existing VM/Host through SSH:

docker-machine create \
  --driver generic \
  --generic-ip-address=$(terraform output ec2_ip) \
  --generic-ssh-key $(terraform output ssh_key_path) \
  --generic-ssh-user "ubuntu" \
  laravelaws

Docker Machine will connect to the remote server through SSH, install the Docker daemon and generate TLS certificates to secure the Docker daemon port. We can then connect at any point to our machine using eval "$(docker-machine env your_machine_name)", from which we can list running containers.
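Put together, pointing the local Docker client at the remote machine and listing its containers might look like the sketch below. The function name is an assumption; `laravelaws` is the machine name used in the create command above.

```shell
# Export the DOCKER_* variables for the given machine into the current shell,
# then list the containers running on that remote Docker daemon.
use_docker_machine() {
  machine="${1:-laravelaws}"
  eval "$(docker-machine env "$machine")" &&
  docker ps
}
```

Because the function uses `eval` in the current shell, every later `docker` or `docker-compose` command in that session talks to the EC2 instance rather than the local daemon.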

Provisioning our existing EC2 instance with the Docker daemon using Docker Machine

6. Build and deploy the Docker images with Docker Compose

Great, we’re half-way through! We have a fully provisioned infrastructure we can now deploy our Laravel stack on. We’ll be deploying the following Docker containers:

- our Laravel code in a PHP-FPM container
- Nginx as a reverse proxy to PHP-FPM
- (optional) Redis as a cache and queue engine for Laravel
- (optional) ElasticSearch for our application search engine
- our Laravel code in a container running cron, for artisan scheduled commands
- our Laravel code in a worker container that will listen for Laravel Jobs from the Redis queue

Just like we don’t want to manage all our infrastructure’s moving parts manually, there is NO WAY we will manage all of this configuration and orchestration manually.

Docker Compose to the rescue!

The configuration for all the above containers is committed into the Dockerfiles:

deploy
├── Dockerfile                 # Dockerfile for Laravel containers
├── Dockerfile-nginx           # Dockerfile for Nginx container
└── docker-compose.yml         # Docker Compose configuration

… and the script to orchestrate them all is the docker-compose.yml file:

Move these files to the root of your project:

mv Dockerfile Dockerfile-nginx docker-compose.yml .dockerignore ..

Then create a docker-compose.env file for our remote environment variables:

cp .env docker-compose.env

Update the docker-compose.env file with the appropriate environment variables:
- DB_HOST with the output of terraform output aurora_endpoint
- DB_DATABASE with the output of terraform output aurora_db_name
- DB_USERNAME with the output of terraform output aurora_master_username
- DB_PASSWORD with the output of terraform output aurora_master_password
- CACHE_DRIVER, SESSION_DRIVER, QUEUE_DRIVER with redis
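Copying those values by hand is error-prone, so here is a hedged sketch that fills them in from the Terraform outputs. The helper names `set_env_var` and `fill_compose_env` are invented for this example. It assumes the output names listed above exist and that `terraform output NAME` prints the bare value; recent Terraform versions wrap string outputs in quotes and offer `terraform output -raw NAME` for the bare value.

```shell
# Set KEY=VALUE in an env file: drop any existing line for KEY, then append.
set_env_var() {
  file="$1"; key="$2"; value="$3"
  tmp="$(mktemp)"
  grep -v "^${key}=" "$file" > "$tmp" || true   # grep exits 1 when nothing is left
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  mv "$tmp" "$file"
}

# Fill the database settings from Terraform outputs and point
# cache, session and queue at Redis.
fill_compose_env() {
  env_file="${1:-docker-compose.env}"
  set_env_var "$env_file" DB_HOST     "$(terraform output aurora_endpoint)" &&
  set_env_var "$env_file" DB_DATABASE "$(terraform output aurora_db_name)" &&
  set_env_var "$env_file" DB_USERNAME "$(terraform output aurora_master_username)" &&
  set_env_var "$env_file" DB_PASSWORD "$(terraform output aurora_master_password)" &&
  for key in CACHE_DRIVER SESSION_DRIVER QUEUE_DRIVER; do
    set_env_var "$env_file" "$key" redis
  done
}
```

The function has to run from the directory that holds the Terraform state, with the path to docker-compose.env passed as its argument. All other lines of the env file are left as they were.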

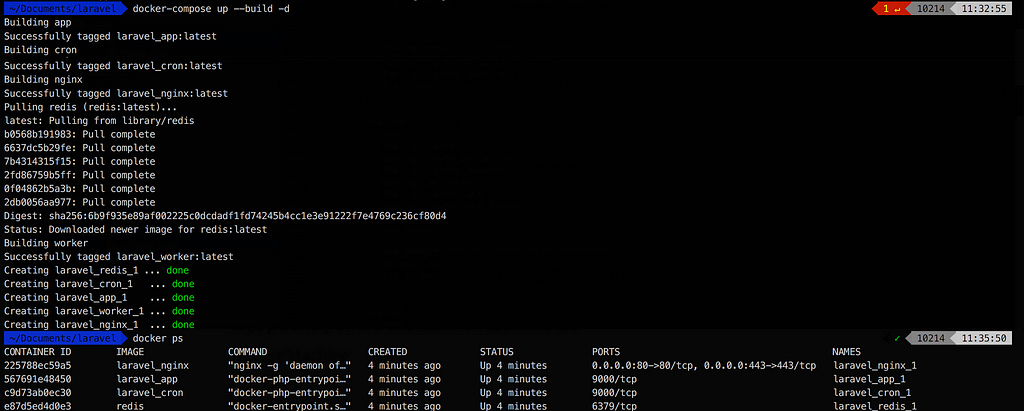

We’re now ready to build our images and run our containers:

docker-compose up --build -d

Building and running all containers at once

Let’s confirm that our Laravel code is correctly configured with the right database connection details, by running the default database migrations:

Our Laravel project successfully connecting to Aurora

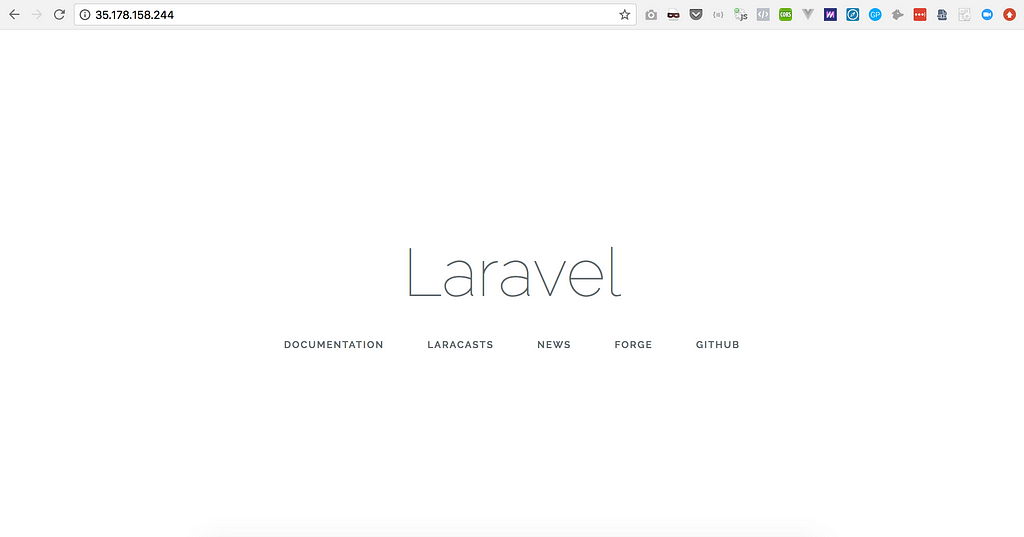

…and that our web project is accessible on port 80:
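The two checks above, running the migrations and hitting port 80, can be sketched as one function. The Compose service name `app` is purely hypothetical (use whatever the PHP-FPM service is called in docker-compose.yml), and the sketch assumes the shell is already pointed at the remote Docker daemon through docker-machine env.

```shell
# Run the Laravel migrations inside the application container, then request
# the response headers from the web server on port 80 of the EC2 instance.
smoke_check() {
  machine="${1:-laravelaws}"
  service="${2:-app}"   # hypothetical Compose service name
  docker-compose exec "$service" php artisan migrate &&
  curl --silent --show-error --head "http://$(docker-machine ip "$machine")/"
}
```

If the migration fails, the `&&` stops the function before the HTTP check, so a database misconfiguration shows up first.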

Laravel running in its Docker container on our Terraform-provisioned EC2

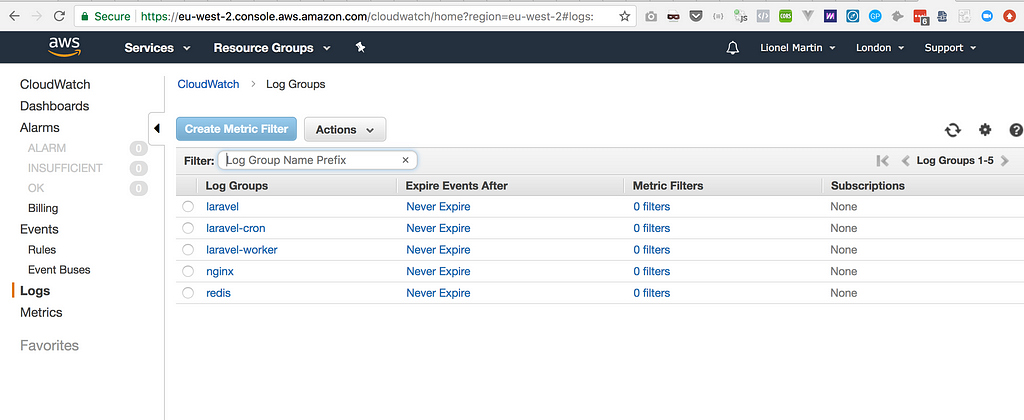

Each container’s log stream is centralised in our CloudWatch console

7. (Optional) Share access with teammates

Amazing! We can now re-deploy our Laravel code at will and modify our infrastructure and configuration, while keeping track of all changes in our code.

Teammates can access all the Docker configuration and the Terraform files by pulling our code from our source code repository. However, at this stage, they cannot:
- modify the infrastructure or access Terraform outputs, because they can’t access Terraform’s state file that is saved locally on our machine
- connect their Docker client to our EC2 instance, because they haven’t set up a Docker Machine pointing to that EC2 instance like we have

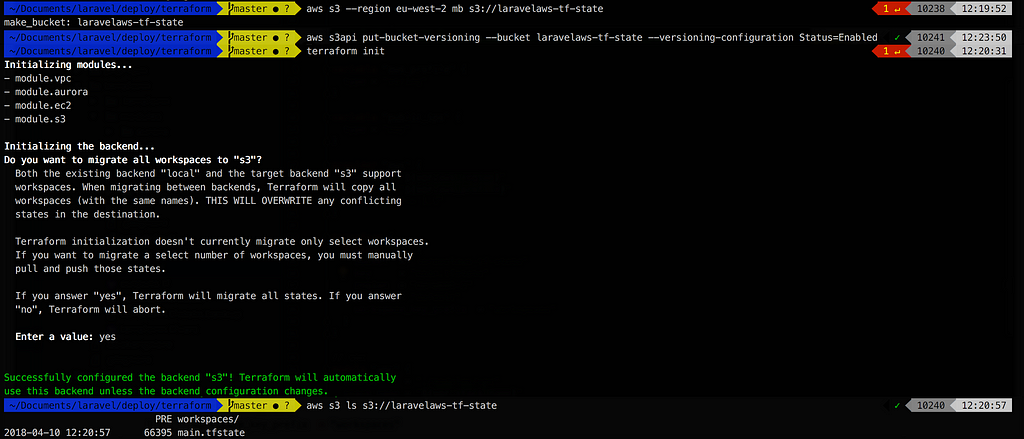

The solution to the first point is to get Terraform to use the S3 remote backend, where the Terraform state is saved remotely on an S3 bucket for sharing. This is done by un-commenting the following lines in master.tf and running terraform init to migrate the state to the new backend:

It is strongly recommended to activate versioning on your S3 bucket to be able to retrieve your Terraform state should you overwrite it accidentally!
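Versioning can be switched on from the command line with the AWS CLI. In this sketch the function name is made up and the bucket name is whatever bucket the backend configuration in master.tf points to; it is passed in as an argument because it is not shown here.

```shell
# Enable versioning on the bucket that stores the Terraform state,
# then print the bucket's versioning status to confirm the change.
enable_state_versioning() {
  bucket="$1"   # name of the S3 bucket holding the Terraform state
  aws s3api put-bucket-versioning \
    --bucket "$bucket" \
    --versioning-configuration Status=Enabled &&
  aws s3api get-bucket-versioning --bucket "$bucket"
}
```

With versioning enabled, an overwritten state file can be recovered by restoring an earlier version of the object from the S3 console or CLI.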

Migrating Terraform’s state to S3 for security and collaboration

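Now, provided our colleagues use AWS credentials allowing access to our S3 bucket, they will connect to the same Terraform backend as we do, and we all work against the same shared state. If several people may run Terraform at the same moment, the S3 backend also supports state locking through a DynamoDB table.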

8. (Optional) Destroy and re-create your stack at will

Congrats on making it this far in this guide! Once you’re done, clean up your infrastructure using terraform destroy so you don’t get a billing surprise at the end of the month.

Lionel is a senior developer turned DevOps, helping tech companies architect their web platform in the cloud and build automation into their operations and marketing to set them up for success. He geeks out on Laravel, AWS and email marketing automation. You can reach out to him on https://getlionel.com

Stop manually provisioning AWS for Laravel — use Terraform instead was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
