Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

Photo by Johannes Plenio on Unsplash

Photo by Johannes Plenio on Unsplash

At the start of the career as a software developer, most people think that a program’s performance boils down to the asymptotic complexity of their operations. But once you apply best algorithms out there and your code still doesn’t give you that desired performance, what should you do?

Here are some basic techniques that may help you get that extra juice out of a CPU core.

Loop unrolling

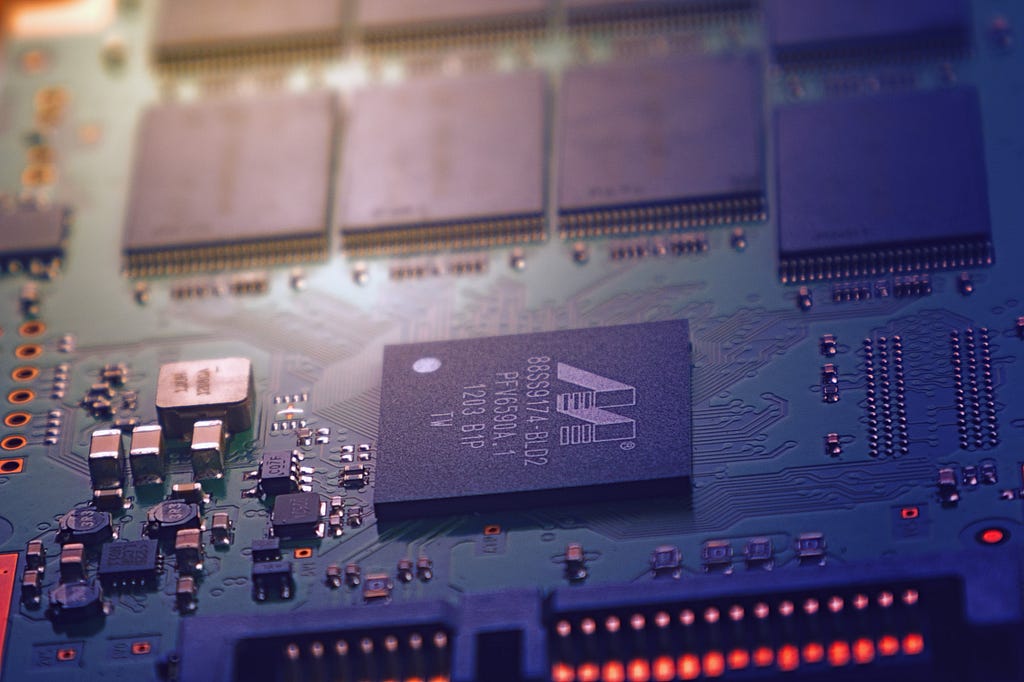

A modern CPU core has multiple functional units such as ALU , Load/Store , branch in it’s execution unit.

Kaby Lake - Microarchitectures - Intel - WikiChip

Programs should always try to exploit this to achieve parallelism.

One of the techniques is to use Loop unrolling. Loop unrolling allows you to break the dependency chain on a argument. An out of order CPU can execute multiple instructions in parallel and will make your program run faster.

func F1() int {var acc int = 1;for j := 0; j < length; j++ { acc = acc + inputList1[j] }return acc}func F2() int {var acc1 int = 1;var acc2 int = 1;for j := 0; j + 1 < length; j += 2 { acc1 = acc1 + inputList1[j] acc2 = acc2 + inputList1[j + 1] }return acc1 + acc2}In F1, the program typically has to wait for acc to update before executing next loop. However, in F2, your program will take advantage of the multiple integer addition units in the core and will proceed in parallel. If you run the benchmarks, you’ll notice the F2 is almost twice as fast as F1.

Vector instructions

Modern CPUs can operate on vectors (array of primitive types) as a unit rather than individual elements. Vector instructions involve loading multiple values at once operating on them in a single instruction and then storing them. These instructions are known as SIMD (Single Instruction, Multiple Data). The popular extensions of SIMD are SSE, AVX and there extensions.

SIMD instruction can usually operate on 8 32-bit or 4 64-bit values at once and thus can make a program really faster. Typically compilers, take care of this.

Caching

Caches are usually taken for granted by the programmers. The code you write typically has a great impact on whether there will be effective caching or not.

Taking an example from github

func ColumnTraverse() int {var ctr intfor col := 0; col < cols; col++ {for row := 0; row < rows; row++ {if matrix[row][col] == 0xFF { ctr++ } } }return ctr}func RowTraverse() int {var ctr intfor row := 0; row < rows; row++ {for col := 0; col < cols; col++ {if matrix[row][col] == 0xFF { ctr++ } } }return ctr}Both of these functions are doing the same thing and have the same asymptotic complexity. If you run the benchmarks, you’ll find out that RowTraverse is usually 4 times faster than ColumnTraverse.

This is because row traverse utilises the value already in cache but ColumnTraverse has to fetch a value from memory on each comparison.

You can read more on caching in my article

Why you should care about a KB?

Branch Prediction

Modern CPUs do not stop when they see a branch (i.e. a if else type of condition). Instead they speculatively execute the next instruction assuming the branch is taken or not. The result is only committed when it’s determined if the prediction was correct or not. In case of a mis-prediction, the CPU discards the results and starts executing the instructions from the first statement after branch. This miss generally causes a penalty of 5–10 ns apart from the additional overhead of executing more instructions.

If your program toggles a lot between if-else then the branch mis prediction penalty will take a toll on your performance. Best way to avoid this is to use as few branches as possible and if absolutely necessary, make them predictable (e.g. sorting the values before comparison).

Concurrency != Parallelism

You increased the number of threads from 10 to 100 and your program’s performance got worse. Why would that happen?

The problem is the CPU. It only has a limited number of cores to support parallel operations. When seems to happen in parallel in application is generally the processor rapidly switching between the threads. However, that switch is costly because it involves copying PC, SP and all the registers in the memory/cache back and forth.

If you have a large number of threads, CPU will spend most of the time just juggling between these threads rather than executing the actual instructions. Modern languages try to tackle this by multiplexing large number of abstractions (such as goroutines) over a few OS threads.

Mutex

If you achieve optimal concurrency, but you are using locks to synchronise data structures, you still won’t notice as much performance gain as it should be.

It’s because a simple mutex lock/unlock takes about 25ns. This doesn’t seem like a lot but once you are running your program for 10 million RPM, this 25ns becomes 250ms which is a lot.

One of the easiest way to avoid mutex is to have thread local data structures. If you need to share data between threads, use lockless communication techniques (e.g. channels in goroutines) rather than shared memory DS.

Memory references

Memory references shouldn’t be used unless they are absolutely needed. Compilers usually avoid doing optimisations on memory references as it can have unprecedented implications.

func AddPointers(x *int, y *int){ *x += *y *x += *y}A simple optimisation compiler should do is to make it *x = 2* (*x) + *y thus reducing the number of instructions.

However, if x and y point to the same variable, the AddPointers will give the output 4(*x) while the optimisation will give 3(*x) which is incorrect. Compilers play safely when they encounter such scenarios and will avoid any optimisation.

There are many more techniques that can be applied such as kernel bypass, avoiding inter-core communications etc. But those don’t satisfy the criteria of being simple. I’ll talk about those in detail in another article.

Some of the resources to dive into these topics are:

- Computer Systems A Programmer’s Perspective

- http://highscalability.com/all-time-favorites/

- Scott Meyers: Cpu Caches and Why You Care

- Latency Numbers Every Programmer Should Know

Connect with me on LinkedIn or Facebook or drop a mail to kharekartik@gmail.com to share the feedback.

Here are some simple optimisations to squeeze performance out of CPU was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.