
Blocks of rocks vanishing at the distance

If you have trained your deep learning model for a while and its accuracy is still quite low, you might want to check whether it is suffering from vanishing or exploding gradients.

Intro to Vanishing/Exploding gradients

Vanishing gradients

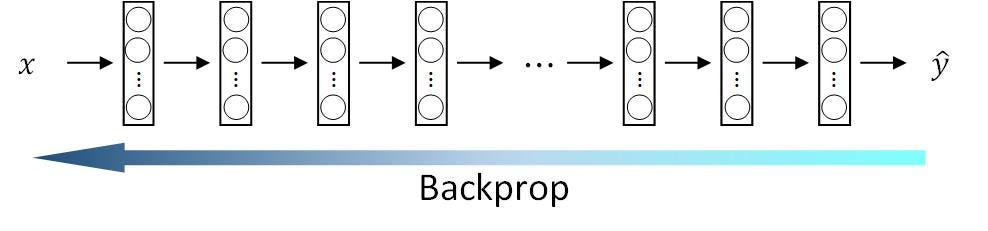

Backprop has difficulty changing the weights of earlier layers in a very deep neural network. During gradient descent, as backprop moves from the final layer back to the first layer, the gradient is multiplied by a weight matrix at each step, so it can shrink exponentially quickly toward zero. As a result, the earlier layers receive almost no update and the network cannot learn their parameters effectively.
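To make the repeated multiplication concrete, here is a small NumPy sketch. It is a toy setup, not a real network: every layer is assumed to share the same hypothetical weight matrix `scale * I`, and activations are ignored, so each backward step scales the gradient norm by exactly `scale`.

```python
import numpy as np

def backprop_norms(scale, depth=50, width=4):
    """Return the gradient norm after each of `depth` backward steps.

    Toy assumption: every layer uses the weight matrix `scale * I`,
    so each step multiplies the gradient norm by `scale`.
    """
    weights = scale * np.eye(width)
    grad = np.ones(width)            # gradient arriving at the final layer
    norms = []
    for _ in range(depth):
        grad = weights.T @ grad      # one backward step through a linear layer
        norms.append(np.linalg.norm(grad))
    return norms

vanishing = backprop_norms(scale=0.5)   # weights smaller than 1
exploding = backprop_norms(scale=1.5)   # weights larger than 1
print(f"scale 0.5, after 50 layers: {vanishing[-1]:.3e}")
print(f"scale 1.5, after 50 layers: {exploding[-1]:.3e}")
```

With a scale below 1 the norm collapses toward zero after a few dozen layers, and with a scale above 1 it blows up instead.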

A very deep network can represent very complex functions. It can learn features at many different levels of abstraction, from edges (in the lower layers) to very complex features (in the deeper layers). For example, earlier ImageNet models like VGG16 and VGG19 strove for higher image classification accuracy by adding more layers. But the deeper the network becomes, the harder it is to update the earlier layers’ parameters.

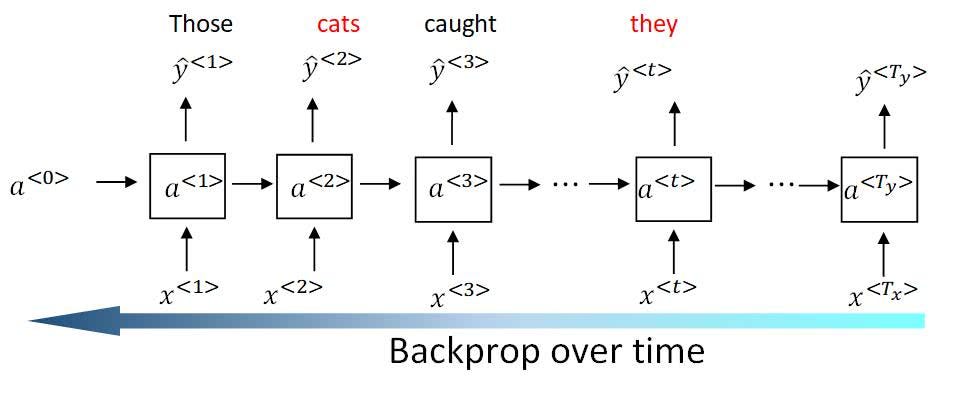

Vanishing gradients also appear in sequential models built on recurrent neural networks, making them ineffective at capturing long-range dependencies.

Take this sentence as an example. The model is trained to generate a sentence.

“Those cats caught a fish,….., they were very happy.”

The RNN needs to remember that the word ‘cats’ is plural in order to generate the word ‘they’ later in the sentence.

Here is an unrolled recurrent network showing the idea.

RNN backprop over time

Exploding gradients

Compared to vanishing gradients, exploding gradients are easier to spot. As the name ‘exploding’ suggests, during training they cause the model’s parameters to grow so large that even a very small change in the input can cause a huge change in later layers’ outputs. We can spot the issue by simply observing the values of the layer weights. Sometimes they overflow and become NaN.

In Keras you can view a layer’s weights as a list of NumPy arrays.
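As a minimal sketch, assuming the Keras bundled with TensorFlow and a small hypothetical two-layer model, the weights can be inspected with `get_weights()` and checked for very large values or NaN:

```python
import numpy as np
from tensorflow import keras

# Hypothetical toy model, used only to have some layers to inspect.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1),
])

# For a Dense layer, get_weights() returns [kernel, bias] as NumPy arrays.
first_dense_weights = model.layers[0].get_weights()

for layer in model.layers:
    for array in layer.get_weights():
        print(layer.name, array.shape,
              "max |w| =", float(np.abs(array).max()),
              "has NaN =", bool(np.isnan(array).any()))
```

Running a check like this every few epochs is a cheap way to notice weights drifting toward very large magnitudes before they turn into NaN.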

Solutions

Now that we understand how vanishing/exploding gradients might happen, here are some simple solutions you can apply in the Keras framework.

Use LSTM/GRU in the sequential model

The vanilla recurrent neural network doesn’t have a sophisticated mechanism to ‘trap’ long-term dependencies. In contrast, modern RNNs like LSTM/GRU introduce the concept of “gates” to deliberately retain those long-term memories.

To put it simply, a GRU (Gated Recurrent Unit) has two “gates”.

The first gate, called the update gate, decides whether to update the current memory cell with the candidate value. The candidate value is computed from the previous memory cell output and the current input. In a vanilla RNN, by comparison, this candidate value directly replaces the memory cell value.

The second gate, the relevance gate, tells how relevant the previous memory cell output is for computing the current candidate value.
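The two gates can be sketched in a few lines of NumPy. This is a simplified illustration of one common GRU formulation, not the Keras implementation; the parameter names (`Wu`, `Wr`, `Wc` and their biases) and the sizes are assumptions made for the example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(c_prev, x, params):
    """One simplified GRU step; `params` holds hypothetical weights and biases."""
    concat = np.concatenate([c_prev, x])
    update = sigmoid(params["Wu"] @ concat + params["bu"])       # update gate
    relevance = sigmoid(params["Wr"] @ concat + params["br"])    # relevance gate
    gated = np.concatenate([relevance * c_prev, x])
    candidate = np.tanh(params["Wc"] @ gated + params["bc"])     # candidate value
    # update near 0 keeps the old memory, update near 1 takes the candidate
    return update * candidate + (1.0 - update) * c_prev

rng = np.random.default_rng(0)
hidden, inputs = 3, 2
params = {name: rng.normal(scale=0.1, size=(hidden, hidden + inputs))
          for name in ("Wu", "Wr", "Wc")}
params.update({name: np.zeros(hidden) for name in ("br", "bc")})
params["bu"] = np.full(hidden, -10.0)   # force the update gate almost closed

c_prev = np.array([0.5, -0.2, 0.9])
c_next = gru_step(c_prev, rng.normal(size=inputs), params)
print(c_prev, c_next)
```

With the update gate pushed toward zero, the memory cell passes through nearly unchanged, which is how a GRU can carry information such as “the subject was plural” across many time steps.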

In Keras, it is trivial to add an LSTM/GRU layer to your network.

Here is a minimal model containing an LSTM layer that can be applied to sentiment analysis.
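A minimal sketch of such a model, assuming the Keras bundled with TensorFlow, might look like the following. The vocabulary size, embedding width and number of LSTM units are hypothetical values chosen for illustration.

```python
import numpy as np
from tensorflow import keras

vocab_size = 10000   # assumed vocabulary size

model = keras.Sequential([
    keras.layers.Embedding(input_dim=vocab_size, output_dim=32),
    keras.layers.LSTM(32),                        # swap in keras.layers.GRU(32) to compare
    keras.layers.Dense(1, activation="sigmoid"),  # probability of positive sentiment
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Two dummy "reviews" of 20 token ids each, just to check the output shape.
sample = np.random.randint(0, vocab_size, size=(2, 20))
predictions = model.predict(sample, verbose=0)
print(predictions.shape)
```

Replacing the `LSTM` layer with `GRU` is a one-line change, which makes it easy to try both.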

If you are struggling to choose between LSTM and GRU: LSTM is more powerful at capturing long-range relations but computationally more expensive than GRU. In most cases, GRU should be enough for sequential processing. For example, if you just want to quickly train a model as a proof of concept, GRU is the right choice. If you want to improve an existing model’s accuracy, you can then replace the existing RNN with LSTM and train for a longer time.

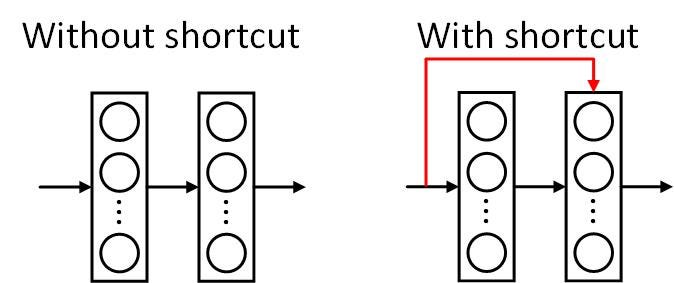

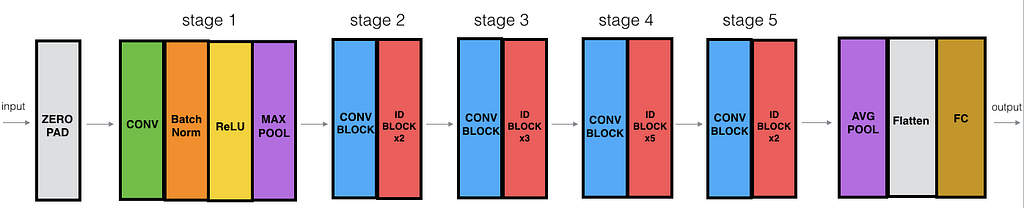

Use Residual network

The idea of the residual network is to allow direct backprop to earlier layers through a “shortcut” or “skip connection”.

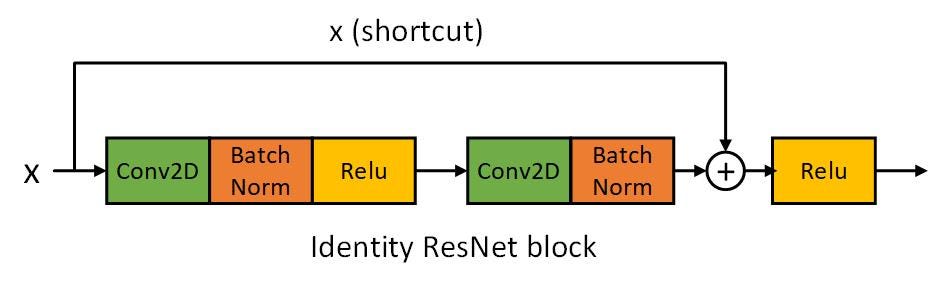

The detailed implementation of a ResNet block is beyond the scope of this article, but I am going to show you how easy it is to implement an “identity block” in Keras. “Identity” means the block’s input activation has the same dimension as its output activation.

And here is the Keras code for this identity block.
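A simplified sketch of an identity block, assuming the Keras bundled with TensorFlow, is shown below. It uses two convolutions rather than the exact layout of the published ResNet blocks, and the helper name `identity_block` and the input shape are hypothetical.

```python
from tensorflow import keras
from tensorflow.keras import layers

def identity_block(x, filters, kernel_size=3):
    """Simplified residual block whose output shape equals its input shape.

    `filters` must equal the channel count of `x` so the shortcut can be added.
    """
    shortcut = x
    x = layers.Conv2D(filters, kernel_size, padding="same")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Activation("relu")(x)
    x = layers.Conv2D(filters, kernel_size, padding="same")(x)
    x = layers.BatchNormalization()(x)
    x = layers.Add()([x, shortcut])      # the skip connection
    return layers.Activation("relu")(x)

inputs = keras.Input(shape=(32, 32, 16))
outputs = identity_block(inputs, filters=16)
model = keras.Model(inputs, outputs)
print(model.output_shape)
```

Because the shortcut is added before the final activation, the gradient has a direct path back to the block input that skips both convolutions.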

There is another ResNet block called the convolution block, which you use when the input and output dimensions don’t match up.

With the necessary ResNet blocks ready, we can stack them together to form a deep ResNet model like ResNet50, which you can easily load with Keras.
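As a sketch, assuming the Keras bundled with TensorFlow, the built-in ResNet50 can be created as follows. Passing `weights=None` builds the architecture with random weights and avoids a download; `weights="imagenet"` would fetch the pretrained weights instead.

```python
from tensorflow import keras

# Build the ResNet50 architecture without downloading pretrained weights.
model = keras.applications.ResNet50(weights=None)

print(model.input_shape)    # default ImageNet-sized input
print(model.output_shape)   # 1000 ImageNet classes by default
print(len(model.layers), "layers")
```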

ResNet50

Use ReLu activation instead of Sigmoid/Tanh

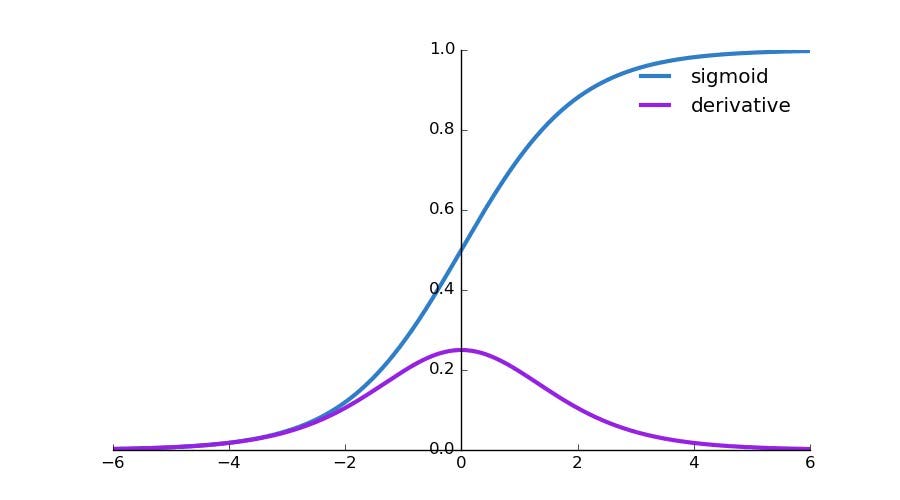

The Sigmoid function squeezes the activation value into the range 0 to 1, and the Tanh function squeezes it into the range -1 to 1.

As you can see, as the absolute value of the pre-activation (x-axis) gets large, the output activation barely changes. It saturates near 0 or 1 for Sigmoid, and near -1 or 1 for Tanh. In that flat region the gradient is close to zero, so if the layer gets stuck in that state, its weights barely update.
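The saturation can be checked numerically. The sketch below uses plain NumPy and the standard derivative formulas, sigmoid'(x) = sigmoid(x)(1 - sigmoid(x)) and tanh'(x) = 1 - tanh(x)^2; the sample points are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2

# The derivative shrinks rapidly as the pre-activation moves away from zero.
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.2e}  tanh'={tanh_grad(x):.2e}")
```

The Sigmoid derivative never exceeds 0.25, so even in the best case each Sigmoid layer scales the backpropagated gradient down by at least a factor of four.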

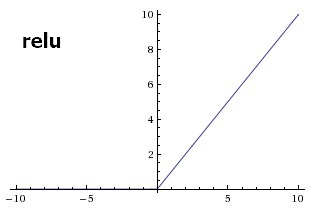

On the other hand, here is the ReLu activation function.

For a randomly initialized network, only about 50% of hidden units are activated (having a non-zero output). This is known as sparse activation.

You might worry about the zero section of ReLu, since it could shut down a neuron entirely. However, experimental results tend to contradict that hypothesis, suggesting that hard zeros can actually help supervised training. The hypothesis is that the hard non-linearities do not hurt so long as the gradient can propagate along some paths.

Another benefit of ReLu is that it is easy to implement: only comparison, addition and multiplication are needed. So it is more computationally efficient.

Applying ReLu in Keras is also very easy.
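A short sketch, assuming the Keras bundled with TensorFlow and a hypothetical small classifier, showing the two usual ways to attach ReLu to a layer:

```python
from tensorflow import keras
from tensorflow.keras import layers

model = keras.Sequential([
    keras.Input(shape=(16,)),
    layers.Dense(32, activation="relu"),    # ReLu passed as a layer argument
    layers.Dense(32),
    layers.Activation("relu"),              # or applied as a separate layer
    layers.Dense(1, activation="sigmoid"),  # Sigmoid kept only for the output
])
model.summary()
```

Keeping Sigmoid on the output layer is still appropriate for binary classification; the advice applies to the hidden layers.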

Weight Initialization

- The weights should be initialized randomly to break symmetry.

- It is, however, okay to initialize the biases to zeros. Symmetry is still broken so long as weights are initialized randomly.

- Don’t initialize to values that are too large.

The default Keras weight initializer is glorot_uniform, also known as the Xavier uniform initializer. The default bias initializer is “zeros”. So we should be good to go by default.
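The defaults can also be written out explicitly. In this sketch, which assumes the Keras bundled with TensorFlow, the layer sizes are hypothetical; the bound checked at the end is the Glorot uniform limit sqrt(6 / (fan_in + fan_out)).

```python
import numpy as np
from tensorflow import keras

fan_in, fan_out = 128, 64   # hypothetical layer sizes

layer = keras.layers.Dense(
    fan_out,
    kernel_initializer="glorot_uniform",  # same as the Dense default
    bias_initializer="zeros",             # same as the Dense default
)
layer.build((None, fan_in))               # create the weights without training
kernel, bias = layer.get_weights()

limit = np.sqrt(6.0 / (fan_in + fan_out))
print("largest |weight|:", float(np.abs(kernel).max()), "limit:", float(limit))
print("all biases zero:", not bias.any())
```

The weights are random, which breaks symmetry, and they stay within a small range tied to the layer size, which satisfies the "not too large" rule above.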

Gradient clipping for Exploding gradients

As the name suggests, gradient clipping clips parameters’ gradients during backprop to a maximum value or maximum norm.

Both ways are supported by Keras.
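Both options are exposed as optimizer arguments. A minimal sketch, assuming the Keras bundled with TensorFlow, with hypothetical thresholds and a toy model:

```python
from tensorflow import keras

# Rescale each gradient so that its L2 norm is at most 1.0.
clip_by_norm = keras.optimizers.SGD(learning_rate=0.01, clipnorm=1.0)

# Clip every gradient element into the range [-0.5, 0.5].
clip_by_value = keras.optimizers.SGD(learning_rate=0.01, clipvalue=0.5)

model = keras.Sequential([
    keras.Input(shape=(8,)),
    keras.layers.Dense(1),
])
model.compile(optimizer=clip_by_norm, loss="mse")
```

The same `clipnorm` and `clipvalue` arguments are accepted by the other built-in optimizers such as Adam and RMSprop.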

Apply Regularization like L2 norm for Exploding gradients

Regularization applies penalties on layer parameters (weights, biases) during optimization.

L2 regularization adds a “weight decay” term to the cost function of the network. Its effect is controlled by the parameter λ: as λ gets bigger, the weights of many neurons become very small, effectively reducing their influence and making the model more linear.

Take the Tanh activation function as an example: when the pre-activation value is small, the activation is almost linear.

In Keras, using regularizers is straightforward.
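A minimal sketch, assuming the Keras bundled with TensorFlow; the value 0.01 plays the role of λ and is a hypothetical choice, as is the toy model.

```python
from tensorflow import keras
from tensorflow.keras import layers, regularizers

model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(
        64,
        activation="relu",
        kernel_regularizer=regularizers.l2(0.01),  # adds 0.01 * sum(w ** 2) to the loss
    ),
    layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The penalty is tracked as an extra loss term on the model.
print(len(model.losses), "regularization loss term")
```

`bias_regularizer` and `activity_regularizer` accept the same regularizer objects when the penalty should apply to biases or layer outputs instead.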

Summary and Further Reading

In this article, we started by understanding what vanishing/exploding gradients are, followed by solutions for handling the two issues with Keras API code snippets.

Further reading

Keras usage of regularizers https://keras.io/regularizers/

LSTM/GRU in Keras https://keras.io/layers/recurrent/

Other Keras weight initializers to take a look at https://keras.io/initializers/

Originally published at my website www.dlology.com.


How to deal with Vanishing/Exploding gradients in Keras was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
