
The Internet is the digital information superhighway we use so ubiquitously today, and the term “online” has become synonymous with it. We are almost always online, sometimes without even being aware of it. This is thanks to the seamless service that Internet Service Providers (ISPs) and cellular phone providers give us: our Internet plans and smartphones provide data access to the Internet 24/7/365. We don’t just use the Internet for online shopping and general research. We also use it to pay bills, RSVP to invitations, post photos from our daily lives and even order groceries. It has become such a necessity of modern living that it seems we cannot live without it. If the Internet were to suddenly shut down, it would cause anxiety among people whose lives have come to depend on it. Bloggers and vloggers, social media influencers and online gamers form a large percentage of the online community, which shows how big a part of daily life the Internet has become. This is due to its commercialization and to how all aspects of modern life now revolve around it, and that is what has led to the centralization of the Internet. Today the Internet is controlled by the big players who provide it as a service, yet the original Internet was not like this.

To understand why, consider that the original Internet was never meant to be centralized. It began as a project of the US DoD (Department of Defense) to establish a computer data communications network that could withstand unforeseen events and disasters like war. It therefore had to be decentralized, so that if one part of the system failed the rest could still function. It also had to communicate through peer-to-peer interconnections without relying on a single computer. Another important consideration was that the computers had to be interoperable among dissimilar systems, so that more devices could join the network. It all started with ARPANET on October 29, 1969, when the first successful message was sent from a computer at UCLA to another computer (called a node) at the Stanford Research Institute (SRI). Each site connected to the network through a packet-switching computer called an Interface Message Processor (IMP).
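To make the “if one part fails, the rest still functions” goal concrete, here is a toy sketch in Python. It is not a model of ARPANET’s actual topology or routing; it assumes two hypothetical four-site networks, a centralized “star” where every site connects only through one hub and a decentralized ring-style mesh where every site has two links, and uses a breadth-first search to check whether the surviving sites can still reach each other after any single site fails. The function names and site names are illustrative only.

```python
from collections import deque


def reachable(adjacency, start, failed):
    """Breadth-first search: every node reachable from `start` without passing through a failed node."""
    if start in failed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in failed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def survives_failure(adjacency, failed_node):
    """True if all remaining nodes can still reach each other after `failed_node` goes down."""
    remaining = [node for node in adjacency if node != failed_node]
    if not remaining:
        return True
    return reachable(adjacency, remaining[0], {failed_node}) == set(remaining)


# Hypothetical networks; the site names are illustrative only.
# Centralized "star": every site talks only through a single hub.
star = {
    "hub": ["A", "B", "C"],
    "A": ["hub"],
    "B": ["hub"],
    "C": ["hub"],
}

# Decentralized ring-style mesh: every site has two independent links.
mesh = {
    "A": ["B", "D"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "A"],
}

for name, network in (("star", star), ("mesh", mesh)):
    # True only if the network stays connected no matter which single site fails.
    tolerant = all(survives_failure(network, node) for node in network)
    print(name, tolerant)  # prints "star False", then "mesh True"
```

Losing the hub splits the star into isolated sites, which is exactly the single point of failure the original design tried to avoid, while the mesh stays connected whichever single site goes down.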

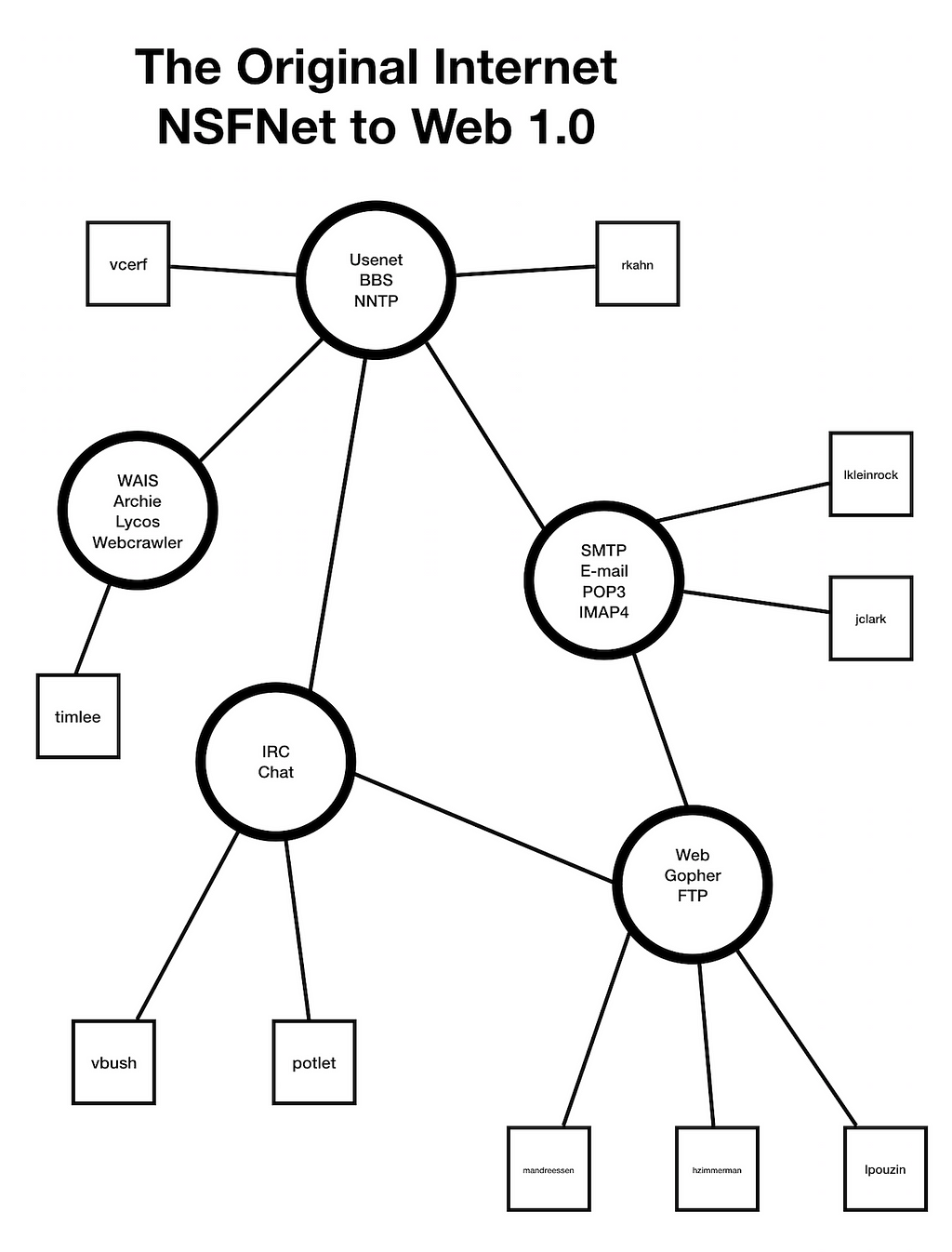

In the beginning, ARPANET benefited not just the military but also research institutes, so although it was a military project, it had its origins in the academic community. The system evolved slowly and was not immediately adopted for commercial use. Instead, in the 1980s it was adopted by universities and research institutes through an initiative of the NSF (National Science Foundation). Called the NSFNET Project, its aim was to promote research and education, and the best way to do this was an interconnected network of computers that let researchers collaborate and share information. This provided a backbone that included the Computer Science Network (CSNET), which linked computer science researchers in academia. Eventually ARPANET and NSFNET were decommissioned, paving the way for the commercialization of the Internet. The name “Internet” comes from “internetwork”, a network of interconnected networks, and it has been called the Internet ever since. Its development involved standards maintained by the IETF (Internet Engineering Task Force), with contributions from many organizations, among them the IEEE (Institute of Electrical and Electronics Engineers), the IESG (Internet Engineering Steering Group) and the ISO (International Organization for Standardization).

In my opinion, the Internet would not have achieved mass adoption without the success of five important developments:

- TCP/IP: Transmission Control Protocol/Internet Protocol is the standard suite of data communications protocols used on the Internet. It was developed under DARPA (the DoD’s Defense Advanced Research Projects Agency) by Robert Kahn and Vint Cerf. It is now the de facto standard for the Internet and is maintained by the IETF. These protocols underpin e-mail, file transfer, newsgroups, web pages, instant messaging and voice over IP, to name a few. TCP/IP acts like a common language that computers use to communicate with one another on the network.

- World Wide Web and HTML: This is credited to Tim Berners-Lee, who developed a system that allowed documents to link to documents stored on other nodes. This brought hypertext, links to information stored on other computers in the network, to the Internet. Through HTML (Hypertext Markup Language), users no longer needed to know the actual location or computer name of a resource to access it. A website could present these links, and users simply clicked them with the mouse. This whole linked system became known as the World Wide Web, and websites were conventionally addressed with “www” followed by the domain name, as in “www.servername.com”.

- Browser: The World Wide Web would be useless without a software program called a browser. Early web browser development took off with Mosaic in 1993. Before graphical browsers caught on, there was Gopher, a menu-based system for finding and retrieving documents, but it was tedious and not user-friendly. Browsers with more robust features followed, such as Netscape Navigator and, later, Mozilla. It was Microsoft’s introduction of Internet Explorer (IE) in 1995, however, that led to wider adoption of the World Wide Web and use of the Internet.

- Search Engines: Getting information and content from the Internet required search engine software. Searching in the early days began with Gopher, which became less popular once browser-based search engines emerged. Web-based services such as Lycos, Yahoo and WebCrawler followed. Then Google appeared in the late 1990s and became the most popular search engine, because it was simple and fast and offered users the best way to find information on the Internet. The verb “google” is now synonymous with searching the Internet, and Google remains the best-known search engine.

- Internet Service Providers: The early days of the Internet required a dial-up modem connected to a telephone line, with data speeds of 14.4–28.8 kbps. That was sufficient for the data demands of the late 1980s and early 1990s. As the Internet grew more popular and businesses began to adopt it, richer content required faster data speeds. This gave rise to Internet Service Providers (ISPs) such as AOL, which mailed out free software CDs to encourage users to sign up. The draw was an e-mail address and free hours of Internet use. ISPs continued to improve service by offering faster DSL and ADSL as alternatives to dial-up; DSL bumped speeds up to 128 kbps. Cable companies then provided even faster Internet access using cable modems, which became known as broadband service. Telecommunications companies and cable TV giants built the infrastructure for even faster speeds, allowing users to stream video, chat, browse active web content, video conference and download data faster. Cable modems based on DOCSIS (Data Over Cable Service Interface Specification) offer speeds from 20 to 100 Mbps or more, depending on how many users share the subscriber circuit.

When the Internet was just starting out in the early 1990s, its users were mostly college students and researchers. A user would connect a device called a modem to their computer’s serial port and use a dial-up service over a telephone line. To access the Internet, all users needed was the telephone number of a connected computer, from which they could establish connections to other computers. This computer was called a server, and it provided basic or specific services to users; this arrangement is known as a client/server architecture. A user could dial up their school’s e-mail server to check for messages, then, when they needed to do research, disconnect and dial up a Gopher server. The organization of the Internet was chaotic and highly decentralized. There was no central authority at all, and every computer was independent of the others. If one server was not working, users could always dial up another. Many early Internet users accessed Bulletin Board Systems (BBSes), servers that hosted an electronic version of a bulletin board. A BBS was a place to look for information on particular interests, meet other people and collaborate with them. As these social interactions grew, messaging programs like Internet Relay Chat (IRC) became popular for real-time communication. Eventually bigger services like USENET opened up, offering more information through discussion forums, or newsgroups. Users also relied on FTP (File Transfer Protocol) to upload and download files. This was important for researchers who needed to exchange data files too large to attach to an e-mail. This type of system had its disadvantages, though. If the server holding the information or a user’s e-mail failed, it could be a big problem. Most universities kept backups, but many other servers did not.
It was also inconvenient to dial up a different computer every time you needed a different service. This is where Internet Service Providers (ISPs) entered the picture, during the period of the Internet called Web 1.0, when the Internet consisted mainly of web pages and hyperlinked content. By providing the Internet as a service, ISPs made it more convenient to use. Thus began the commercialization of the Internet.

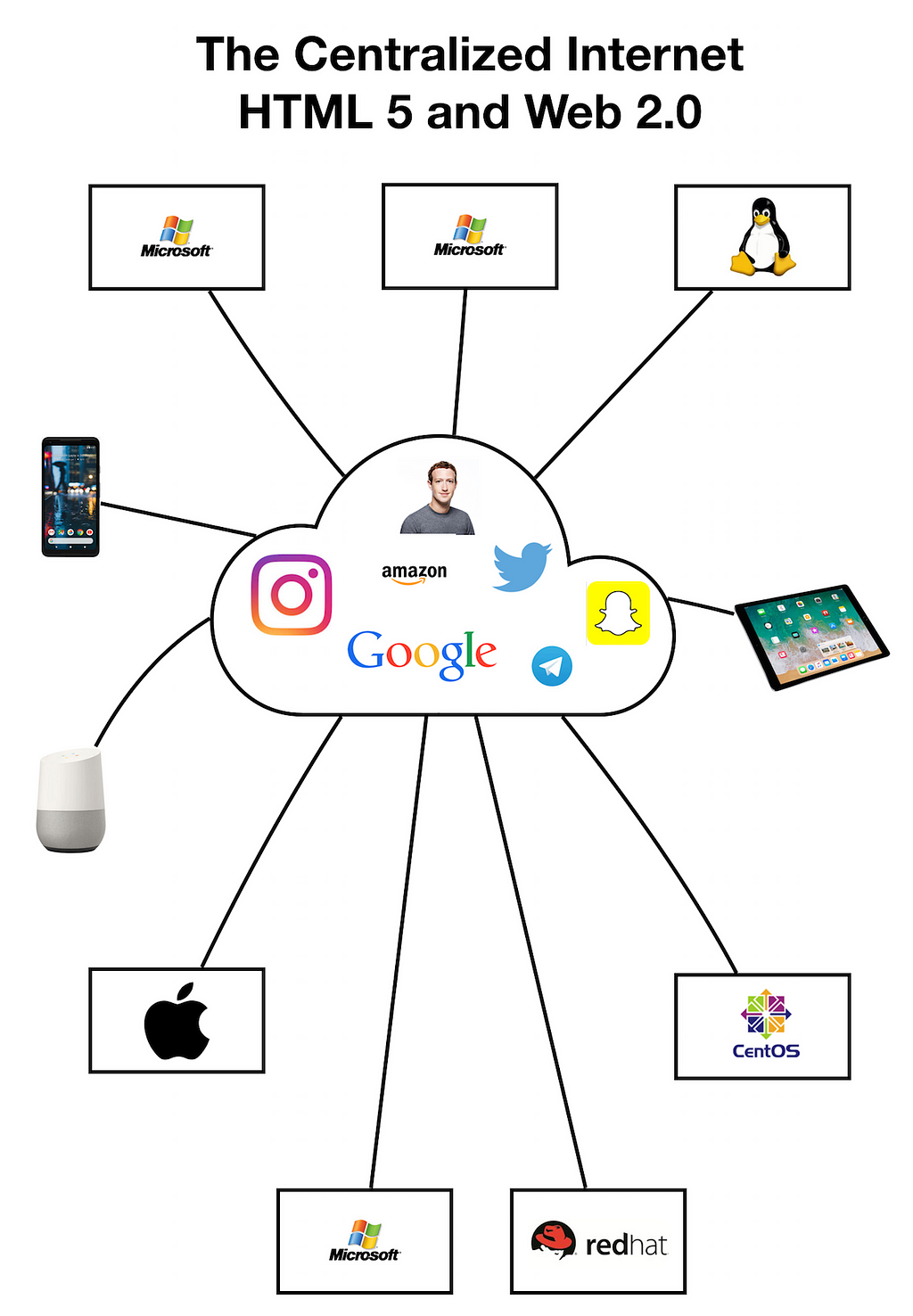

The centralization of the Internet began with its commercialization. Companies like AOL began this push as ISPs. Microsoft then bundled IE with the Windows OS, starting with Windows 95. By embracing and extending the Internet to Windows users, Microsoft effectively killed off the competition: Netscape closed shop, while other browsers like Mozilla were marginalized. Offering IE for free made it the starting point for most users, since the majority of them had a PC running Windows. With the software to access the Internet already in hand, all they needed next was an Internet subscription. Some critics predicted the Internet was a fad that would collapse along with the dotcom bubble of the late 1990s; instead it thrived, thanks in part to its commercialization. This was a network effect: each user who joined created more connections, allowing more information to be shared and accessed. Even the mainstream news giants took notice and established an online presence, as the Internet became a competitor to news media outlets as a source of information. Meanwhile, AOL faced stiffer competition in the 2000s, this time from broadband providers offering bundled services rather than Internet access alone. The big players offered Internet with telephone and cable service at attractive introductory rates to win more customers. This hurt smaller ISPs that could offer only Internet access, and many had to close shop. This convergence of services is what led to a more centralized Internet.

Gone are the days when you could access the Internet loosely by dialing into whichever server you needed; the majority of users now require an ISP. As commercial interests grew along with Internet use, many platforms emerged that became known as social media. This brought about Web 2.0, in which user-generated content and interoperable web pages became more dynamic and worked with newer devices. It also saw the emergence of cloud computing, in which the servers providing a service are abstracted away from users, who simply reach them over the Internet. The cloud was a virtual collection of servers that provided services to users. The giants in this game were Facebook, Google, Twitter and, more recently, Snapchat and Instagram. Facebook began to use its clout to buy up smaller companies and adopt their features. It attempted to buy Snapchat; when that failed, it started incorporating Snapchat’s features into Instagram, which it had acquired. With 2.2 billion+ users (as of this writing), Facebook is clearly far ahead. Facebook is not exactly an open system, since it controls what users can see, and this is already becoming an issue. Twitter can also censor certain users, either for violating its policies or for other reasons, and there have been complaints that Twitter is not fair when blocking users from the platform. YouTube lets users upload videos, and it moderates that content to weed out harmful material, which is good practice. The only issue is that sometimes the content is not really harmful, yet YouTube bans it for some violation of its policy. Policy is always something that social media platforms impose on users. These may be apps, but they are the reason many people use the Internet; without them, there would be fewer ways to share or convey information. Perhaps the biggest issue with social media platforms is that they collect user data and sell it to interested third parties.
This is all legal since the Terms of Service (TOS) agreement allows the platform to share your information and use it for their own purposes. According to Facebook’s own TOS:

“By using or accessing Facebook Services, you agree that we can collect and use such content and information in accordance with the Data Policy as amended from time to time.”

The problem arises if the data winds up in the wrong hands, where malicious actors can use it for more devious purposes. For the most part, though, data collected from social media is used by brands to target users. The third parties are most likely ad agencies, marketing firms and research groups. They run analytics on the raw data they get from social media to harvest useful information. The result is insights: information about the users of these platforms. These insights are then sold, as a product, to brands so they can target users with ads and services. Information is not really free; it has become a commodity with a price people are willing to pay. While a centralized platform controls all the data and information you feed it, a decentralized platform would not. Decentralized platforms can also offer more privacy, since no single operator monitors and collects your data and activity.

A topic related to the centralized Internet is Net Neutrality in the US. A deregulated Internet may be good for the ISPs involved, but perhaps not for users. The repeal of Net Neutrality has given ISPs more control to do whatever they like: they can throttle speeds, control access to media content and even raise rates without intervention from the Federal Communications Commission (FCC). Instead, another government agency, the Federal Trade Commission (FTC), will be tasked with monitoring ISPs. That still doesn’t assure users that ISPs will not abuse their power. On the business side, repeal does give ISPs more incentive to innovate and improve their networks and service. Once again, though, centralized power in the hands of ISPs leaves consumers with little choice. There are concepts for decentralized ISP projects. One such project is the Open Internet Socialization Project (OISP), whose goal is for members of a community to own the means of delivering Internet access to each other, with incentives for that delivery. The open question is how effectively these projects will be implemented.

There are pros and cons to a centralized Internet. On the plus side, it has allowed the Internet to expand thanks to the services provided by ISPs and the popularity of social media platforms. The downside is that, because it is centralized, users have little choice. A user’s access to the Internet is at the mercy of their provider and the platform. This is a single point of failure, something the original Internet was designed to avoid. Most places don’t have many options for Internet service either, since one ISP may have cornered the market in that area. Security is also a concern, since a centralized store of data makes an easy target for hackers. Users have experienced hacks in which their personal information was compromised because it was stored by a single company, and often that information ends up in the wrong hands. Centralized systems are also easy targets for disruptions such as DDoS attacks, service outages and malware infections. I won’t go into further detail on decentralized solutions for the Internet; I am just pointing out the cons of too much centralization. For this reason, some people are worried about the centralized Internet. The balance of power tilts toward the ISPs and platforms with big business interests, and as these giants have grown, they have stifled innovation, making it harder for others to compete in the same space. Decentralization offers its own solutions to these problems, so it is worth exploring for the future development of the Internet.

Suggested Reading:

Decentralized Internet on Blockchain: https://hackernoon.com/decentralized-internet-on-blockchain-6b78684358a

Why a Centralized Internet Sucks: https://bdtechtalks.com/2017/10/27/why-does-the-centralized-internet-suck/

The Evolution of the Internet, From Decentralized to Centralized was originally published in Hacker Noon on Medium.
