CEO of Apple Tim Cook with CEO of IBM Ginni Rometty

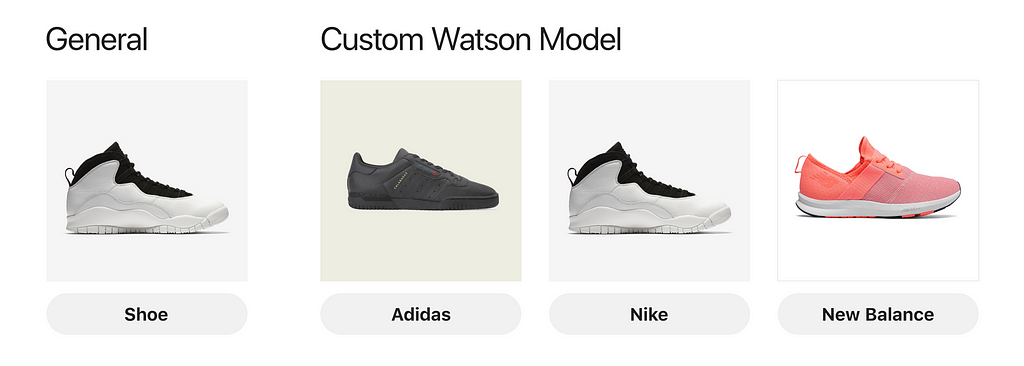

IBM Watson just announced the ability to run Visual Recognition models locally on iOS as Core ML models. I’m very excited.

Before now, it was fairly easy to integrate a visual recognition system into your iOS app by just downloading a model from Apple. However, those models are generic: each one is limited to a standard set of items it can recognize (cars, people, animals, fruit, etc.).

But what if you wanted to have a model that could recognize items for a use case specific to you?

Before now, if you wanted to do this and you weren’t familiar with the ins and outs of AI, this could be a fairly difficult task. You would need to familiarize yourself with a machine learning framework such as TensorFlow, Caffe, or Keras. However, with Watson we can train our own custom model without having to touch any code.

Before, if you trained a model with Watson:

- It was stuck in the cloud

- You needed an internet connection to access it

- You were charged to use it

Now:

- You can download your model as a Core ML model

- You can use it locally like any other Core ML model

- No strings attached

Collecting Data

To build a custom model we need to collect data to train it on. If we want to train our model on different brands of shoes we need to collect a bunch of pictures of each brand.

I went ahead and downloaded from Google:

- 100 pictures of Nike shoes

- 100 pictures of Adidas shoes

- 100 pictures of New Balance shoes

This is a very lazy approach to collecting data. It won’t produce great results unless our use case happens to be classifying Google image search results. A much better approach (given this is for an iOS app) is to take pictures of the different shoes with your iPhone camera. However, I only happen to own 2 pairs of shoes and they both fall into the category of “Nike”.

We do what we can 🤷‍♂️

Note: As expected the model didn’t work as well as I wanted, so I ended up just using 10 pictures of my Nikes, 10 pics of my dad’s New Balances and 10 pics of my friend’s Adidas shoes (instead of the Google photos).

After you collect your image data, zip up each category in its own .zip file.
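If you have many categories, zipping them by hand gets tedious. Here is a minimal sketch of how it could be scripted, assuming your pictures sit in one subfolder per brand (for example `shoes/nike`, `shoes/adidas`, `shoes/new_balance`). The folder names and the `zip_categories` helper are my own placeholders, not anything Watson requires.

```python
import zipfile
from pathlib import Path

# Assumption: only these file types count as training images.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def zip_categories(data_dir, out_dir):
    """Write one <category>.zip per subfolder of data_dir.

    Returns a dict mapping each category name to the number of images zipped.
    """
    data_dir, out_dir = Path(data_dir), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    # Every subfolder is treated as one category (skip the output folder itself).
    categories = sorted(
        p for p in data_dir.iterdir()
        if p.is_dir() and p.resolve() != out_dir.resolve()
    )

    counts = {}
    for category in categories:
        images = sorted(
            f for f in category.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
        zip_path = out_dir / f"{category.name}.zip"
        with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
            for image in images:
                # Store images at the top level of the archive.
                archive.write(image, arcname=image.name)
        counts[category.name] = len(images)
    return counts
```

Calling `zip_categories("shoes", "zipped")` would leave one archive per brand in `zipped/`, and the returned counts are a quick way to check that no category ended up empty before you upload anything.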

Getting Started with Watson

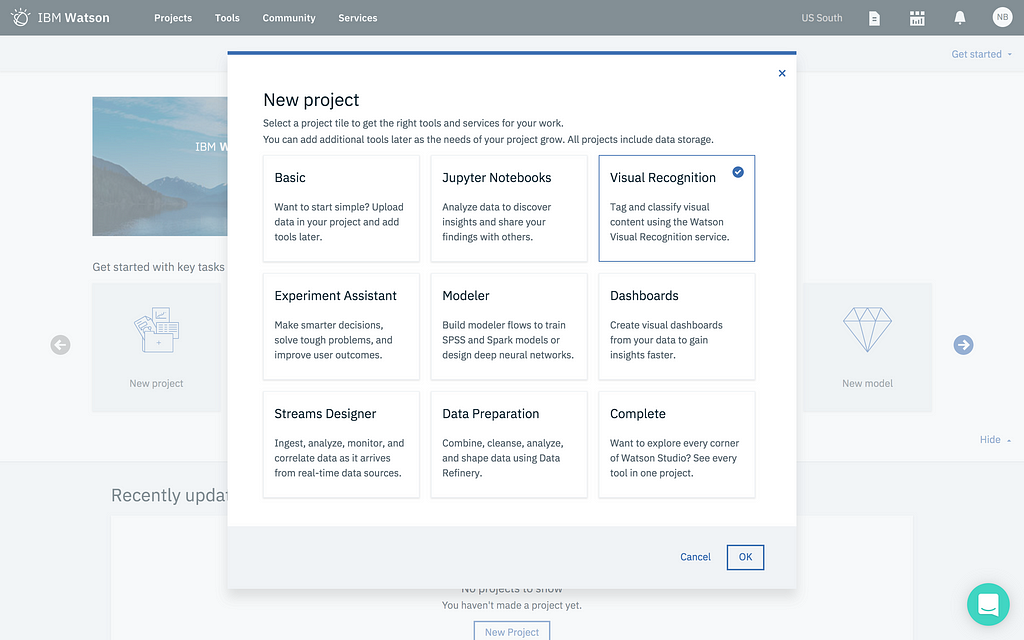

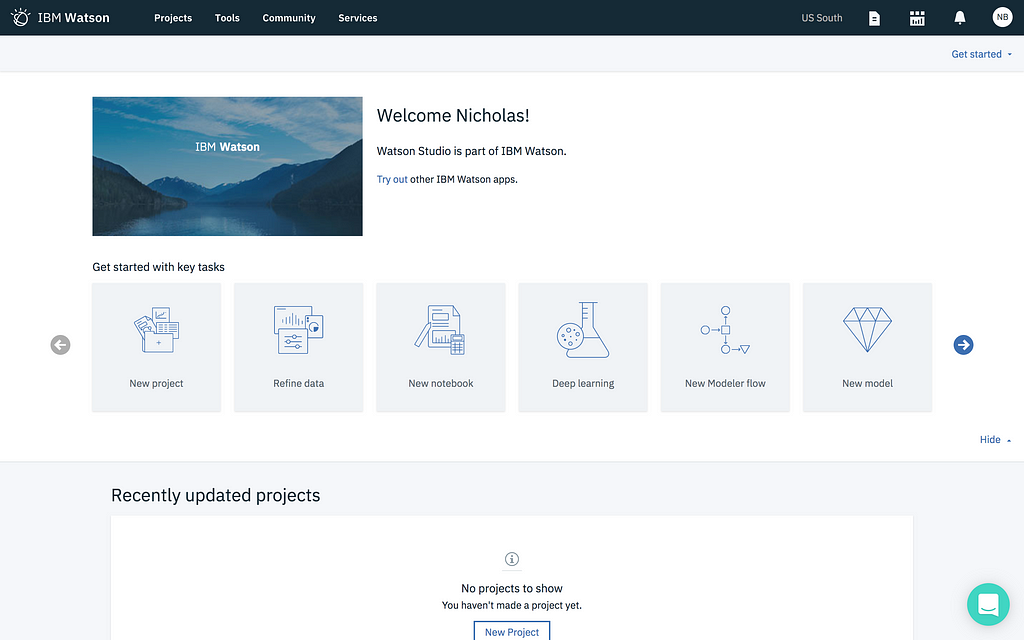

To use Watson we need to create an IBM Watson Studio account. Sign in or sign up and then make your way to the main page:

Note: If you don’t see New project, Refine data, New notebook, etc., click the Get started dropdown in the top right corner.

Click on New project and then select Visual Recognition:

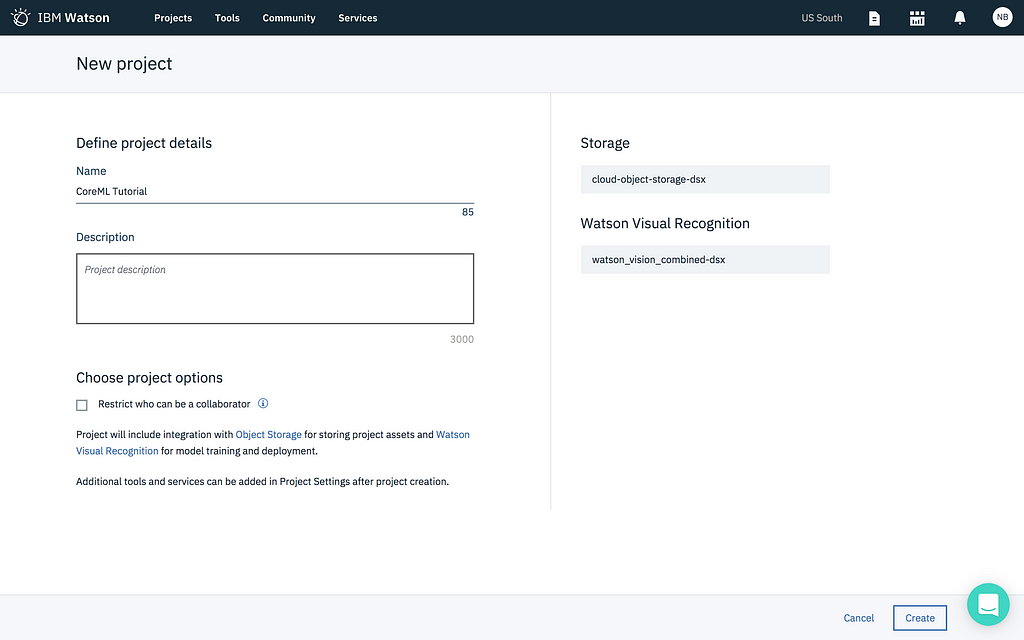

Give your project a name and continue:

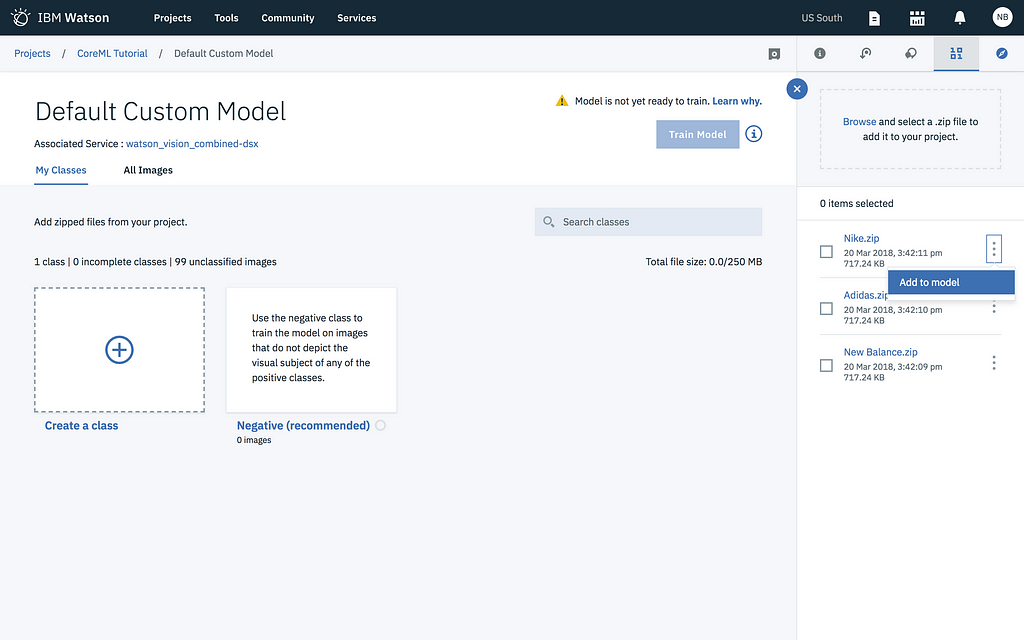

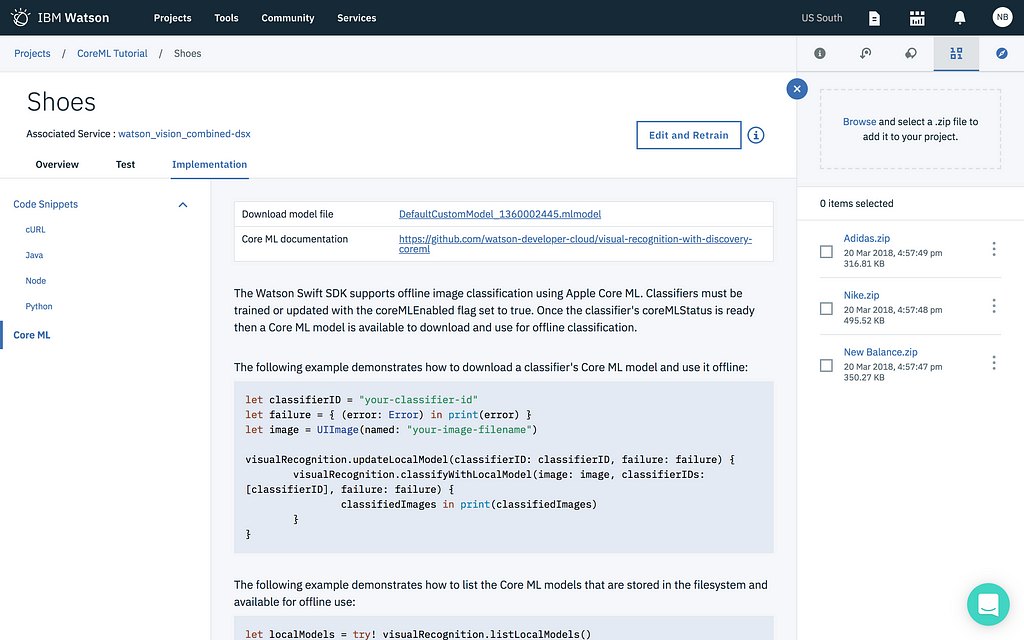

Browse for your .zip files that you created:

Then add them to the model by clicking the 3-dot menu and choosing Add to model:

Then click to train the model:

Downloading the Core ML Model

Once the model finishes training you will be greeted with a small notification. Click to view and test the model:

That will bring you to the page that will allow you to download the model:

Choose Implementation followed by Core ML. Then download the model file:

Important Note: Safari downloads the file with a .dms extension. Just rename it to have a .mlmodel extension.
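Renaming in Finder works fine, but if you re-download models often, the rename could be scripted. This is a rough sketch: the `fix_model_extension` helper is hypothetical, and since I'm not sure whether the download arrives as `Name.dms` or `Name.mlmodel.dms`, it handles both.

```python
from pathlib import Path


def fix_model_extension(path):
    """Rename a downloaded '.dms' file so it ends in '.mlmodel'.

    Returns the new path, or the original path if it was not a .dms file.
    """
    source = Path(path)
    if source.suffix.lower() != ".dms":
        return source  # nothing to rename

    if source.stem.lower().endswith(".mlmodel"):
        # 'Name.mlmodel.dms' -> 'Name.mlmodel'
        target = source.with_suffix("")
    else:
        # 'Name.dms' -> 'Name.mlmodel'
        target = source.with_suffix(".mlmodel")

    if target.exists():
        # Refuse to overwrite a model that is already there.
        raise FileExistsError(f"{target} already exists")

    source.rename(target)
    return target
```

For example, `fix_model_extension(Path.home() / "Downloads" / "Shoes.dms")` would leave `Shoes.mlmodel` in the same folder (the file name here is just a placeholder).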

Running the App

To keep this post simple, I’m going to assume that you have a working knowledge of Xcode 😬

I built a sample app that you can clone from my GitHub.

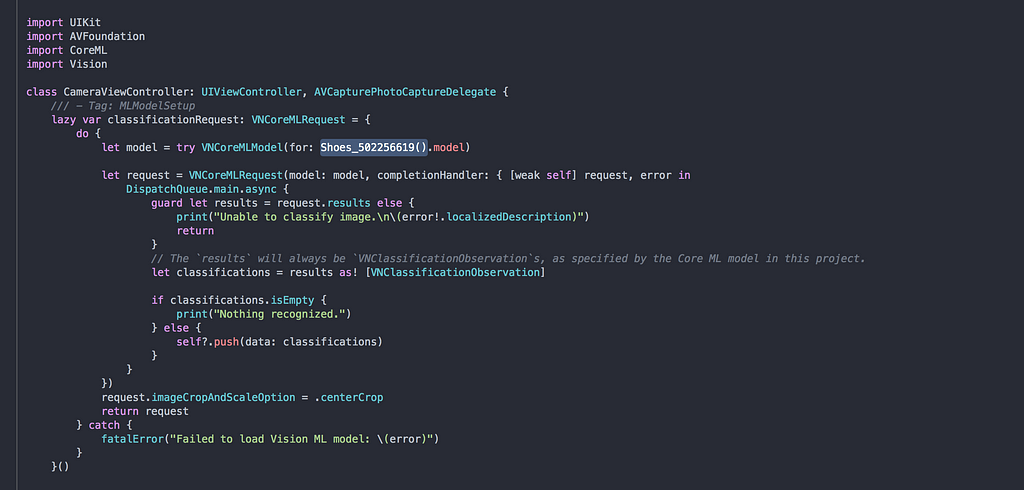

All you need to do is open up the project, import the Core ML model file that you downloaded, and then change the name of the model in the CameraViewController file to match yours:

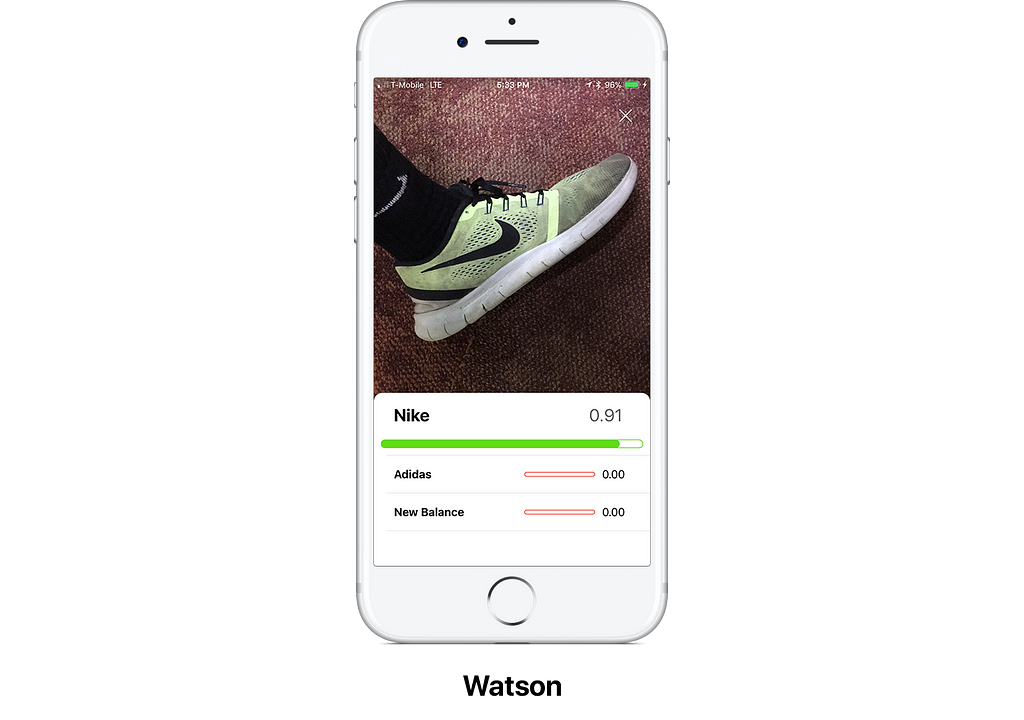

That’s all! You should be able to run the app, take pictures and get classifications back (please excuse my extremely dirty shoes):

Final Thoughts

I know I work for IBM, but hopefully I’m not the only one excited about this 😝 I’ve been waiting for the ability to run my custom Watson models locally for a while now. No more need for an internet connection and the models run fast no matter where you are.

Thanks for reading! If you have any questions, feel free to reach out at bourdakos1@gmail.com, connect with me on LinkedIn, or follow me on Medium and Twitter.

If you found this article helpful, it would mean a lot if you gave it some applause 👏 and shared it to help others find it! And feel free to leave a comment below.

Simplify Adding AI to Your Apps — Core ML Say Hello to Watson was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
