TL;DR

Django has always been one of the outliers in the modern era of real-time, asynchronous frameworks and libraries. If you want to build a chat application, for example, Django most probably wouldn’t be your first choice. However, for those of you who hate JavaScript, or if you’re a “perfectionist with a deadline”, Django Channels is a great option.

Django Channels is a library that brings the power of the asynchronous web to Django. If you aren’t familiar with Django Channels, I highly recommend familiarizing yourself with it before you read further. There are excellent articles explaining what Django Channels is and how it can transform the way you use Django; https://realpython.com/blog/python/getting-started-with-django-channels/ and https://blog.heroku.com/in_deep_with_django_channels_the_future_of_real_time_apps_in_django are two great examples. They also show you how to build a basic chat application using Channels, which is fairly straightforward to set up and will get you going in a few minutes!

The problems with hosting a Django Channels Application

In traditional Django, requests are handled by the Django application itself. It looks at the request and URL, determines the correct view function to execute, executes it, produces a response and sends the response back to the user. Fairly straightforward. Django Channels, however, introduces an interface server (Daphne) in between. This means that the interface server now communicates with the outside world. The interface server looks at the request and URL, determines the right “Channel”, turns the request into a “message” for a worker process to consume, and places the message in that Channel. A message broker, like Redis, holds these Channels and delivers the messages to worker processes. The worker process listens to the message queue, processes the message (much like a view function), produces the response and sends it back to the interface server, which then delivers it to the user. (Please feel free to take a minute to grasp this, it took me many hours :’))

This means that, instead of the single process you would start using:
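To make that flow concrete, here is a toy sketch in plain Python. It is not how Channels is implemented: standard-library queues stand in for the Redis channel layer, and the names `interface_server`, `worker` and `handle` are made up for this illustration.

```python
import queue
import threading

# One in-process queue per named channel stands in for the Redis channel layer.
channels = {"http.request": queue.Queue(), "reply": queue.Queue()}


def interface_server(path):
    """Plays the role of Daphne: wrap the request in a message, then wait for the reply."""
    channels["http.request"].put({"path": path, "reply_channel": "reply"})
    return channels["reply"].get(timeout=5)


def worker():
    """Plays the role of a worker process: consume one message and send a response back."""
    message = channels["http.request"].get(timeout=5)
    response = {"status": 200, "content": "Hello from " + message["path"]}
    channels[message["reply_channel"]].put(response)


def handle(path):
    """Run one worker in the background and push a single request through the pipeline."""
    worker_thread = threading.Thread(target=worker)
    worker_thread.start()
    response = interface_server(path)
    worker_thread.join()
    return response
```

Calling `handle("/chat/")` sends one message through the request channel and returns whatever the worker placed on the reply channel. The interface server never runs the view-like code itself; it only talks to the channels.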

python manage.py runserver

You would be running:

daphne -p 8000 your_app.asgi:channel_layer

and

python manage.py runworker

This enables Django Channels to support multiple types of requests (HTTP, WebSockets etc.). But clearly, it requires more resources than a standard Django application. For one, it requires a message broker. You can get away with an in-memory message broker, but it is not recommended for production purposes. In this example, we will set up Redis on an EC2 instance and use that as the message broker.

If you’re using Elastic Beanstalk, requests are forwarded to port 80 on the instance by default, which is where the standard Django application is served. But we want requests to go to Daphne instead, so we need to configure the load balancer to forward requests to the port where Daphne is listening.
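For local development only, the in-memory layer can be selected in your settings. This is a minimal sketch that assumes Channels 1.x with the in-memory backend shipped in asgiref; `<your_app>` is a placeholder for your own project name.

```python
# settings.py (development only): messages never leave the current process,
# so this is only suitable when the server and worker run together locally.
CHANNEL_LAYERS = {
    "default": {
        "BACKEND": "asgiref.inmemory.ChannelLayer",
        "ROUTING": "<your_app>.routing.channel_routing",
    },
}
```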

But before all that, we first need to host our Django Application itself. If you are not familiar with how to do that, please follow the steps here:

Deploying Django + Python 3 + PostgreSQL to AWS Elastic Beanstalk

The only change is that you should select the Application Load Balancer instead of the Classic Load Balancer, because WebSockets are natively supported only by the Application Load Balancer. This will get your application up and running on Elastic Beanstalk.

Next Steps:

- Provisioning a Redis Instance for message broker.

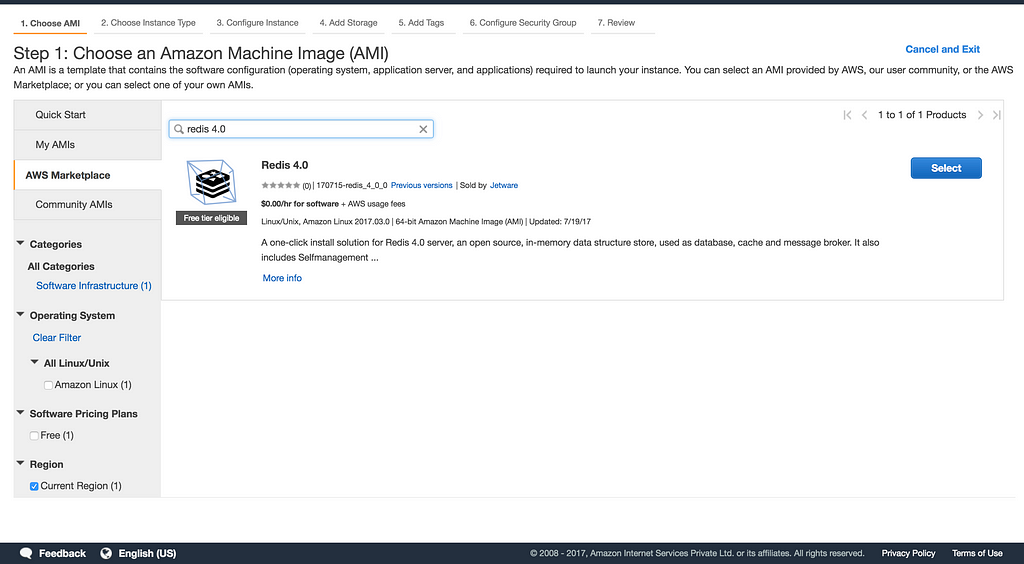

Sign in to your AWS console and go to EC2. Click “Launch Instance” on the top and select AWS Marketplace on the side menu.

Search for Redis 4.0

Selecting Redis from AWS Marketplace

After this, follow through the next steps. Please be sure to store the ssh-key (pem file) for the instance. After that, click “Review and Launch”. This will get your Redis instance up and running.

Now SSH into your Redis instance and open the redis.conf file.

sudo nano /jet/etc/redis/redis.conf

Change the bind address from 127.0.0.1 to 0.0.0.0 and the port from 1999 to 6379.

Save the file and restart Redis using:

sudo service redis restart

You can check everything is correctly configured by running netstat -antpl

This command should show Redis running at 0.0.0.0:6379.

After this, select the instance from the EC2 Dashboard and, in the menu below, select its security group (this should look something like Redis 4–0–170715-redis_4_0_0-AutogenByAWSMP). Add a new inbound rule with the following info:

Type: Custom TCP Rule, Protocol: TCP, Port Range: 6379, Source: 0.0.0.0/0

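If this rule already exists, you don’t need to add it; if a rule exists with a different port number, just modify it. Note that a source of 0.0.0.0/0 accepts connections from any address, so restricting the source to your application’s instances is safer. Now, grab the Public DNS of your Redis instance from the EC2 Dashboard and save it for reference.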
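Before touching the Django settings, it can help to confirm that the port is reachable from outside the Redis instance. The following sketch uses only the standard library and checks that a TCP connection succeeds; it does not speak the Redis protocol, and the helper name `is_port_open` is made up for this example.

```python
import socket


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures alike.
        return False


# Example call, with the placeholder replaced by your instance's Public DNS:
# is_port_open("<The Public DNS of the Redis instance>", 6379)
```

If this returns False from your own machine, the usual suspects are the bind address in redis.conf and the inbound rule on the security group.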

Now, in your settings.py file for production, change your Redis host and port to use the newly created Redis instance.

CHANNEL_LAYERS = {
    "default": {
        "BACKEND": "asgi_redis.RedisChannelLayer",
        "CONFIG": {
            "hosts": [("<The Public DNS of the Redis instance>", 6379)],
        },
        "ROUTING": "<your_app>.routing.channel_routing",
    },
}

Please change <your_app> to the name of your app. This will configure your Django application to use the Redis instance we created.

Now we need to run our Daphne server as a daemon and configure our Application Load Balancer to forward all requests to Daphne.
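If you would rather not hard-code the Redis address in settings.py, one option is to read it from environment variables. The sketch below is only a suggestion: the variable names `REDIS_HOST` and `REDIS_PORT` and the helper `build_channel_layers` are assumptions for this example, not something Channels or Elastic Beanstalk defines for you.

```python
import os


def build_channel_layers(routing, environ=os.environ):
    """Build a CHANNEL_LAYERS dict from REDIS_HOST / REDIS_PORT (hypothetical names)."""
    host = environ.get("REDIS_HOST", "localhost")
    port = int(environ.get("REDIS_PORT", "6379"))
    return {
        "default": {
            "BACKEND": "asgi_redis.RedisChannelLayer",
            # asgi_redis accepts (host, port) tuples in the hosts list.
            "CONFIG": {"hosts": [(host, port)]},
            "ROUTING": routing,
        },
    }
```

In settings.py this would be used as `CHANNEL_LAYERS = build_channel_layers("<your_app>.routing.channel_routing")`, with the two variables set as environment properties of your Elastic Beanstalk environment.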

Running Daphne Server and a worker process as daemon:

In your app directory, open the .ebextensions folder and create a new file called daemon.config with the following contents:

files:
  "/opt/elasticbeanstalk/hooks/appdeploy/post/run_supervised_daemon.sh":
    mode: "000755"
    owner: root
    group: root
    content: |
      #!/usr/bin/env bash

      # Get django environment variables
      djangoenv=`cat /opt/python/current/env | tr '\n' ',' | sed 's/%/%%/g' | sed 's/export //g' | sed 's/$PATH/%(ENV_PATH)s/g' | sed 's/$PYTHONPATH//g' | sed 's/$LD_LIBRARY_PATH//g'`
      djangoenv=${djangoenv%?}

      # Create daemon configuration script
      daemonconf="[program:daphne]
      ; Set full path to channels program if using virtualenv
      command=/opt/python/run/venv/bin/daphne -b 0.0.0.0 -p 5000 <your app>.asgi:channel_layer
      directory=/opt/python/current/app
      user=ec2-user
      numprocs=1
      stdout_logfile=/var/log/stdout_daphne.log
      stderr_logfile=/var/log/stderr_daphne.log
      autostart=true
      autorestart=true
      startsecs=10

      ; Need to wait for currently executing tasks to finish at shutdown.
      ; Increase this if you have very long running tasks.
      stopwaitsecs = 600

      ; When resorting to send SIGKILL to the program to terminate it
      ; send SIGKILL to its whole process group instead,
      ; taking care of its children as well.
      killasgroup=true

      ; if rabbitmq is supervised, set its priority higher
      ; so it starts first
      priority=998

      environment=$djangoenv

      [program:worker]
      ; Set full path to program if using virtualenv
      command=/opt/python/run/venv/bin/python manage.py runworker
      directory=/opt/python/current/app
      user=ec2-user
      numprocs=1
      stdout_logfile=/var/log/stdout_worker.log
      stderr_logfile=/var/log/stderr_worker.log
      autostart=true
      autorestart=true
      startsecs=10

      ; Need to wait for currently executing tasks to finish at shutdown.
      ; Increase this if you have very long running tasks.
      stopwaitsecs = 600

      ; When resorting to send SIGKILL to the program to terminate it
      ; send SIGKILL to its whole process group instead,
      ; taking care of its children as well.
      killasgroup=true

      ; if rabbitmq is supervised, set its priority higher
      ; so it starts first
      priority=998

      environment=$djangoenv"

      # Create the supervisord conf script
      echo "$daemonconf" | sudo tee /opt/python/etc/daemon.conf

      # Add configuration script to supervisord conf (if not there already)
      if ! grep -Fxq "[include]" /opt/python/etc/supervisord.conf
      then
        echo "[include]" | sudo tee -a /opt/python/etc/supervisord.conf
        echo "files: daemon.conf" | sudo tee -a /opt/python/etc/supervisord.conf
      fi

      # Reread the supervisord config
      sudo /usr/local/bin/supervisorctl -c /opt/python/etc/supervisord.conf reread

      # Update supervisord in cache without restarting all services
      sudo /usr/local/bin/supervisorctl -c /opt/python/etc/supervisord.conf update

      # Start/Restart processes through supervisord
      sudo /usr/local/bin/supervisorctl -c /opt/python/etc/supervisord.conf restart daphne
      sudo /usr/local/bin/supervisorctl -c /opt/python/etc/supervisord.conf restart worker

Please change <your app> to the name of your Django app. This creates a script and places it in /opt/elasticbeanstalk/hooks/appdeploy/post/ so that it executes after the application deploys. The script, in turn, writes a supervisord configuration file that defines the Daphne and worker daemons, and then tells supervisord to load it and (re)start both processes. (Again, please feel free to take a minute to grasp this :’) )
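The least obvious part of that script is its first step, which turns the environment file into a single value for supervisord’s environment= setting. As a rough illustration, the tr/sed pipeline can be mirrored in Python. This sketch assumes /opt/python/current/env contains one `export KEY="value"` line per variable and ends with a newline; the function name is made up for the example.

```python
def supervisord_environment(env_file_text):
    """Mirror the deploy script's tr/sed pipeline on the text of the env file."""
    joined = env_file_text.replace("\n", ",")          # tr '\n' ','
    joined = joined.replace("%", "%%")                 # escape % for supervisord
    joined = joined.replace("export ", "")             # drop the shell keyword
    joined = joined.replace("$PATH", "%(ENV_PATH)s")   # let supervisord expand PATH
    joined = joined.replace("$PYTHONPATH", "")         # remove unresolved references
    joined = joined.replace("$LD_LIBRARY_PATH", "")
    return joined[:-1]                                 # ${djangoenv%?}: drop the last character


sample = (
    'export DJANGO_SETTINGS_MODULE="app.settings"\n'
    'export PATH="/opt/python/run/venv/bin:$PATH"\n'
)
print(supervisord_environment(sample))
# DJANGO_SETTINGS_MODULE="app.settings",PATH="/opt/python/run/venv/bin:%(ENV_PATH)s"
```

The percent signs are doubled before `%(ENV_PATH)s` is inserted, so the only unescaped `%` left in the result is the one supervisord is meant to expand.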

The final step..

Now that we have Redis set up and the Daphne and worker processes running, all we need to do is configure our Application Load Balancer to forward requests to our Daphne server, which is listening on port 5000 (please check the config script of the daemon processes).

Create a new file in your .ebextensions folder called alb_listener.config and put the following code in it.

option_settings:
  aws:elbv2:listener:80:
    DefaultProcess: http
    ListenerEnabled: 'true'
    Protocol: HTTP
  aws:elasticbeanstalk:environment:process:http:
    Port: '5000'
    Protocol: HTTP

Please be careful of the spaces as this is in YAML syntax.

Redeploy your app and voilà! Your Django Channels app is up and running on AWS Elastic Beanstalk.
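A quick way to see whether WebSocket traffic actually reaches Daphne is to attempt the opening handshake by hand and look for a 101 status. The sketch below uses only the standard library and stops after reading the first part of the response; the function names are made up for this example, and the host and path you pass in depend on your own environment and routing.

```python
import base64
import os
import socket


def build_handshake_request(host, path="/"):
    """Build the HTTP upgrade request that opens a WebSocket connection."""
    key = base64.b64encode(os.urandom(16)).decode("ascii")
    lines = [
        "GET {} HTTP/1.1".format(path),
        "Host: {}".format(host),
        "Upgrade: websocket",
        "Connection: Upgrade",
        "Sec-WebSocket-Key: {}".format(key),
        "Sec-WebSocket-Version: 13",
        "",
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def is_upgrade_accepted(response):
    """Return True if the response status line carries code 101."""
    status_line = response.split(b"\r\n", 1)[0]
    parts = status_line.split()
    return len(parts) >= 2 and parts[1] == b"101"


def check_websocket(host, port=80, path="/", timeout=5.0):
    """Send the handshake to host:port and report whether the upgrade was accepted."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(build_handshake_request(host, path))
        return is_upgrade_accepted(conn.recv(1024))
```

Calling `check_websocket` with your environment’s URL and a path handled by your WebSocket routing should return True once the load balancer is forwarding to port 5000. A False result only tells you the upgrade was not accepted, not why.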

If you have any questions, please feel free to ask in the comments! Any recommendations for future blog posts are also welcome, if you like this one, that is. :’)

Thanks for reading!

Setting up Django Channels on AWS Elastic Beanstalk was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
