
Abstract

L1 chain infrastructure has been the center of the crypto narrative. Technically, L1 chains have been making breakthroughs in consensus mechanisms, programmability, and scalability. Even so, L1 chains today still have development bottlenecks, such as performance that is not yet comparable to Web2 infrastructure and insufficient demand for applications. In the first half of 2022, starting with the Cosmos network, a number of new L1 chains were in the spotlight with regard to heated topics surrounding “modularity” and “cross-chain”. In the second half of the year, the three new L1 chains derived from the “Diem” series, Aptos, Sui and Linera, prevailed. This article discusses the innovations and advantages of the new “Diem” L1 chains in terms of technology and ecosystem from the following five aspects.

● The three main features and advantages of the new Move language;

● Parallelized solutions;

● The innovation and difficulty of consensus mechanisms;

● Exploratory development of tokenomics;

● Possible trends of dedicated L1 chains or dApp chains.

Through the analysis, we can conclude that (1) the smart contract programming language is crucial to security, parallelization and developers; (2) parallelization is the right path for L1 chains in the future; (3) most consensus mechanisms are based on modifications of BFT, and there is hardly any room left for further improvement; (4) the token economic model has always been the booster of L1 chain development, and new L1 chains must address the problem of balancing the relationship between network, users, verifiers and developers; (5) dedicated L1 chains need to develop technology according to specific application scenarios and improve user experience. From the above conclusions, we obtain a glimpse of the next generation L1 chain paradigm, especially in the parallelization and virtual machine aspects. For the remaining issues that Diem’s derivatives cannot solve, we could keep an eye on new progress being made in terms of technical features, tokenomics and ecosystem development.

1. Overview of L1 Chain Technical Development

As the core infrastructure of the crypto industry, each development in the L1 chain landscape could have a profound influence on the whole industry. The technical development of L1 chains has been the main narrative of the first three bull markets; it is inevitably boosted by capital and demand. The breakthrough of L1 chains is mainly manifested in three aspects: 1. consensus mechanism; 2. programmability; and 3. scalability.

In terms of consensus mechanism, we have witnessed both the crowning moments of POW led by Bitcoin and the new era of POS after the Ethereum merge. For Bitcoin and Ethereum, although the POW mechanism ensures a certain level of decentralization, it brings problems such as wasted resources and low processing efficiency. POS was born in 2012; it addresses the excessive energy consumption of POW through staking and has been adopted by most new L1 chains. Apart from POS, other innovative consensus mechanisms have been created to improve the efficiency of block generation and maintain network security, such as Solana’s POH and the Avalanche consensus.

Programmability refers to building the application layer of the blockchain with smart contracts. The Bitcoin network’s scripting language is not Turing-complete, which prevents complex applications from being built, whereas Ethereum adopted a virtual machine and the Solidity programming language to deploy smart contracts. This was one of the fundamental reasons for the last bull market. However, due to Solidity’s lack of support for concurrency, low security and other shortcomings, many blockchains have started with Rust or constructed new programming languages, such as Move, to provide a more secure, friendly and scalable development language.

In terms of scalability, blockchains have been hampered by the impossibility trilemma; the key to easing it lies in modularity. Modularity allows a system to be divided into multiple individual sub-modules, which can be removed or reassembled at will. Modularity encompasses two concepts: modular frameworks and tools for L1 chains, and modularized L1 chains. The three-tier framework of a blockchain contains a settlement layer, an execution layer and a data availability layer. Based on the modular framework, some teams have already completed the development of modular tools and components, which are used extensively in the construction and development of existing L1 chains, including the Cosmos and Polkadot ecosystems. There are three main implementation paths for modular separation: (1) shared security; (2) Layer2/execution layer; (3) separation of the data availability layer. These are also the mainstream directions of L1 chains in scaling technology at present.

2. L1 Chain Development Bottleneck

Although L1 chains have been on the rise, their main problem is poor performance, which keeps them far behind Web2 infrastructure. Inadequate performance triggers two serious problems: 1. restricting the ceiling for the overall development of ecological applications; 2. bringing poor user experience, which is mainly reflected in the short-term congestion and expensive fees of some L1 chains. The poor performance in the execution layer is demonstrated by the fact that the EVM can only execute serially; concurrent execution of multiple transactions is not feasible, which caps on-chain capacity. The solution is parallel computing. Parallel computing is already a rather mature technology in the Web2 space, but it is just starting out in the blockchain field. In addition to parallel computing, Ethereum has transferred some of the execution layer functions to Layer2.

Performance in the consensus layer is mainly determined by the algorithm, which governs the efficiency, economy and security of the consensus-reaching process. Since POS consensus is adopted by most L1 chains today, efficiency here mainly refers to the number of communications between nodes and the time until final confirmation. Reaching consensus in a distributed system is a classic topic that far predates the birth of the blockchain, and the field has been productive: PBFT, HotStuff, DUMBO and Algorand can all achieve relatively high TPS in theory. Designing innovative consensus mechanisms and finality algorithms is quite challenging work when building L1 chains; new designs have yet to catch up with existing projects, let alone achieve exponential performance improvements merely through some modifications.

The data layer contains functions for data storage, account and transaction interactions, and overall security. The Bitcoin network is the most secure and decentralized blockchain network available, with each node being a full node storing all block data; the compromise is that only about 7 transactions can be processed per second with a block size of 1 MB. Ethereum additionally has a contract layer and an application layer, so its blocks require more space, which remains a tricky problem for Ethereum. Although Layer 2’s Rollup solution is capable of compressing data, the amount of data that needs to be uploaded increases as the size of the application increases. Two solutions are the most sought-after: 1. moving some functions from the data layer to other L1 chains, such as transferring the handling of data availability to Celestia; 2. on-chain scaling, i.e., sharding, although truly seamless sharding would be technically challenging to implement.

In addition, bound by the impossible triangle of blockchain, security will be compromised while performance is improved. The two main security issues in L1 chains are mostly reflected in: 1. nodes; 2. smart contracts. With an insufficient number of nodes and low decentralization, the network would be vulnerable to attack. When it comes down to the security of smart contracts, the underlying blockchain, specifically the programming language and virtual machine, are the major concerns.

In terms of ecological applications, most L1 chains are far from having niche applications or achieving monopoly. Users are also switching back and forth between different chains based on incentives; the only one that inherently retains users by applications per se is Ethereum. This phenomenon is particularly explicit in the bull-bear transition, where funds prefer to stay on Ethereum during a bear market. Other L1 chains are comparatively advantageous in terms of full scale of eco-applications and lower on-chain fees.

3. New L1 Chain Model from Diem

Innovations of new L1 chains almost always stem from consensus mechanisms, programmability and scalability for the purpose of performance improvement. The outstanding new L1 chains are undoubtedly the projects inherited from the discontinued project Diem, namely Aptos, Sui and Linera, which have progressed in terms of programming language, consensus algorithm, modular architecture and transaction parallelization, moving towards a higher level of performance and security. By studying the three new L1 chains, we may shed some light on the L1 chain paradigm of the future.

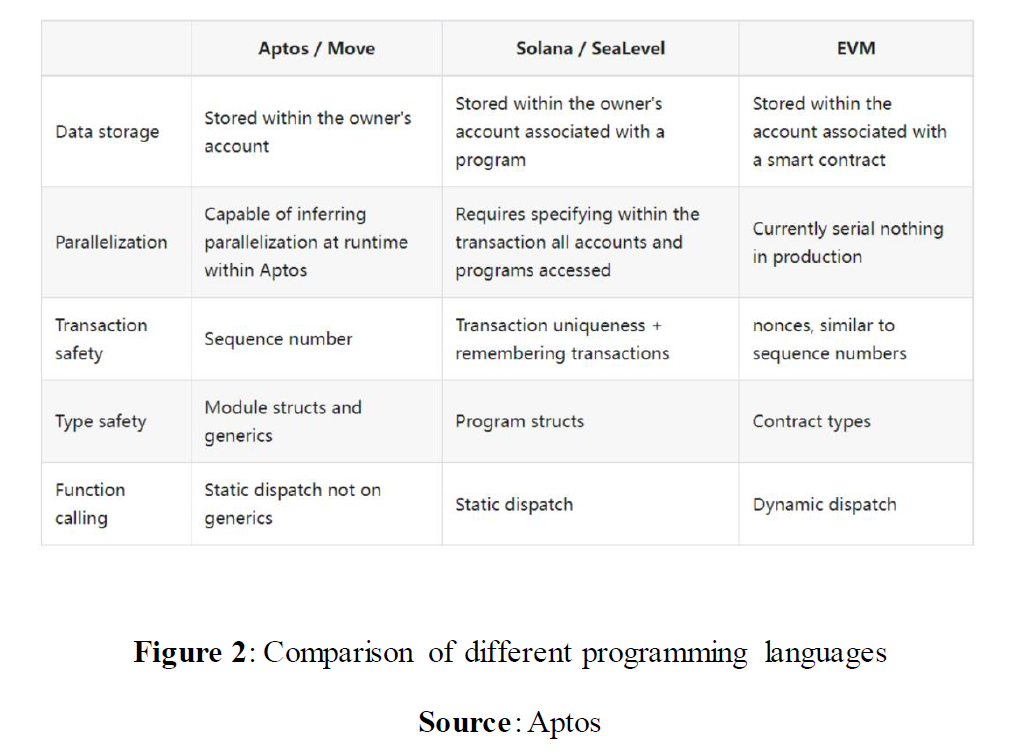

3.1 The Smart Contract Security Revolution

In the blockchain world, programming language, as the formal language that defines computer programs, is the basic vehicle to achieve all the functions and systematic goals. After Solidity and Rust, Move, a new generation programming language bred from Diem, is back in the spotlight due to the strong debut of the avant-garde L1 chain projects, Aptos and Sui; Move was once hailed as the most suitable language for blockchain. The reappearance of Move seems to be suggesting that programming languages are creating a new battlefield for L1 chain competition. The chart below briefly compares the characteristics of the three programming languages; innovations in security, parallelization, and development friendliness of the Move language are also detailed and analyzed in the following.

(1) Security

Move outperforms previous programming languages in terms of asset security, in large part because targeted improvements are made based on achievements from previous projects. We have discussed the security features in detail in our past report, Move: innovations and opportunities, and a summary of the report is as follows.

Move provides comprehensive security screens for smart contracts at several levels: language design, virtual machine, contract invocation and contract execution. Move specifically defines a new resource type for digital assets to distinguish them from other data, and combines the strong data abstraction feature of Module to ensure that assets will not be created out of thin air, copied arbitrarily or discarded implicitly, which greatly elevates the level of security. At the same time, Move is designed to support only static calls, and all contract execution paths can be determined at compile time, fully analyzed and verified, so that some security vulnerabilities could be revealed in advance, thus reducing the probability of system downtime. Moreover, it introduces formal verification tools, which greatly reduce the cost of manual code security audits.

(2) Parallel processing

Solidity does not support concurrent processing, whereas Move aims to change this from the ground up. Because Move creates a special definition for digital assets, it distinguishes them from ordinary values at the data-type level; by defining different properties for various resources, independent transactions can be identified with the assistance of those properties. Combined with a multi-threaded execution engine, transaction data can be operated on and processed simultaneously, improving the efficiency of system operation.

(3) Development friendliness

According to market feedback, Move is rather developer-friendly: its main purpose is to lower the security threshold for development so that developers of smart contracts can focus on business logic. Meanwhile, as Move evolved from Rust and Solidity, discarding unnecessary “dross” in the design of both, its complexity is relatively low. Therefore, the overall switching cost for developers is not high. According to Move practitioners, developers with experience in Rust and Solidity programming need only 1–2 days to become adept at Move; developers without experience in smart contract programming need only 1–2 weeks to learn Move from scratch.

Both Aptos and Sui incorporate the core features of Move. While retaining the security and flexibility of Move, each makes specific improvements, optimizing the storage and address mechanisms and thereby improving network performance and reducing transaction confirmation time. Details are as follows:

3.1.1 Icing on the cake: the introduction of adapter layer, an overarching foundation for parallelization

Take data ownership as an example: in Aptos, data is stored in the owner’s account, and the owner has full control over that account, where the basic storage function resides. This is a direct adoption of Move’s native ownership system, which well reflects the nature of user transactions as transfers of asset ownership.

In addition, Aptos has made some special designs based on its unique characteristics. It introduced an adapter layer to extend the functionality of the core MoveVM, including parallelism via Block-STM, which enables concurrent execution of transactions without any input from the user; large-scale storage in accounts; tables for storing keys; and fine-grained storage that decouples the amount of data stored in an account from the gas fee for transactions associated with that account.

3.1.2 One-of-a-kind: removing global storage to reduce transaction latency

Compared with Aptos’s inheritance of Move, Sui has made some changes to the Move core, especially in terms of global storage and key ability. The differences are elaborated in the following points.

(1) Remove global storage operator

In core Move, users can access and call modules or resources directly via global storage operators such as move_to, move_from, etc. When a new object is created, the data is usually stored directly into the corresponding on-chain address. However, considering the very limited storage space on chain, Sui removes the global storage operators and stores newly created assets or modules in a specific Sui storage. Therefore, when users need to access an object in the system, they cannot rely on Move’s original global storage operations directly. Instead, they must first pass the object through Sui and access it afterwards.

(2) Set an ID for the object

Objects in Move can be given an index (key) attribute so that they are stored in the data structure as key-value pairs. However, since Sui removes the global storage, minor adjustments are made for objects with the key attribute: an object that is to be indexed must carry an ID field. The ID consists of two components, an object ID and a sequence number. The object ID is derived with a collision-resistant hash function from the content of the current transaction and a counter recording the number of objects generated by that transaction, which ensures the uniqueness of assets.
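As a rough illustration of the ID scheme described above, the sketch below hashes a transaction digest together with a per-transaction creation counter. It is written in Python rather than Move, and the names `ObjectRef`, `derive_object_id` and `new_object`, the 8-byte counter encoding and the choice of SHA3-256 are all assumptions made for the example, not Sui’s actual implementation.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectRef:
    object_id: str  # hex digest identifying the object (hypothetical layout)
    sequence: int   # sequence number, starting at 0 for a new object


def derive_object_id(tx_digest: bytes, creation_index: int) -> str:
    """Hash the creating transaction's digest with a per-transaction counter."""
    payload = tx_digest + creation_index.to_bytes(8, "big")
    return hashlib.sha3_256(payload).hexdigest()


def new_object(tx_digest: bytes, creation_index: int) -> ObjectRef:
    """Create a reference for the n-th object produced by a transaction."""
    return ObjectRef(derive_object_id(tx_digest, creation_index), sequence=0)


# Two objects created by the same transaction receive different IDs
# because the counter differs.
tx = hashlib.sha3_256(b"example transaction").digest()
first = new_object(tx, 0)
second = new_object(tx, 1)
```

Because the ID depends only on the transaction digest and the counter, any node can recompute it independently, and two distinct creations collide only if the hash function does.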

(3) Construction of different object types

Sui provides 3 types of objects as foundation for parallelization and consensus mechanisms:

● Shared objects: Can be read, written, transferred, destroyed; such objects are involved in smart contracts

● Single owner objects: Can only read/write, similar to a simple transfer.

● Immutable objects: Read only

3.2 The inevitable path to parallelization

Most current mainstream blockchains engage EVM as the execution engine. When processing transactions, EVM executes one transaction at a time, keeping all other transactions on hold until the transaction is completed and updated to blockchain state before executing the next transaction in turn. This pattern of execution is one of the major bottlenecks in network throughput.

Besides having more advanced hardware to speed up the execution, the main solution to such a problem is parallel computing. A similar scenario once appeared during the development of modern computers. While most computers from 20 years ago had only 1 CPU core, CPUs with 4, 8 or even more cores are commonplace today. It is through more cores for parallel computing that the processing capacity of computers has been continuously improved over the years.

Parallel computing refers to the decomposition of an application into multiple pieces on a parallel machine, assigned to different processors, so that the execution of the subtasks can be processed in parallel by each processor in collaboration with each other, thus achieving the goal of speeding up the solution, or solving larger scale problems. In blockchain, parallel execution of transactions disrupts the paradigm of sequential execution of transactions by simplifying the connection of operations, sequencing, and dependencies of transactions to execute unrelated transactions simultaneously in order to enhance the system’s throughput. The parallel machine is the verifier/node in the blockchain.

On top of that, parallel computing can also reduce the cost of running a node and improve the decentralization of the system: reaching the same level of performance without parallel computing would require a more powerful single-core CPU, which is more costly and runs against the general direction in which computer hardware has developed. Aptos and Sui have both borrowed the idea of parallelized computing.

3.2.1 First shot of parallelization: transaction classification, multi-threaded synchronous execution

Aptos was designed with the idea of separating unrelated transactions and executing them simultaneously using an advanced execution engine and hardware. This idea is closer to how current blockchains operate, and it is demonstrated in the following three cases.

● The simplest case: simultaneous execution of transactions in different threads that do not overlap in terms of data and accounts. For example, Alice transfers 100 XYZ tokens to Bob and Charles transfers 50 UVW tokens to Edward at the same time; the two transactions can be processed simultaneously.

● Slightly more complex case: If there are multiple requests pointing to the same data or account, the transactions will first be processed in parallel; only then will behaviors that are potentially subject to conflict be handled in the right settlement order (referred to as delta writes), so that a result can be confirmed. For instance, if Alice transfers the same token to Bob and Charles consecutively, the 2 transfers will first be processed in parallel, and a check will be conducted as to whether the overall change in Alice’s account is reasonable. If the balance is insufficient, the process will roll back to the earlier transaction and re-execute.

● Most complex case: Transactions can be reordered across one or more blocks to optimize concurrency of execution. For example, if an address receives multiple receipts or transfers at the same time, these transfers can be distributed across multiple blocks. This may cause delayed confirmation of transactions for some users, but it allows for greater concurrency for other transactions at large and improves the overall performance of the network.
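The simplest case above can be sketched in a few lines of Python. This is a minimal illustration, assuming each transfer only touches the sender’s and receiver’s balance of one token; the `Transfer` class and `split_into_batches` function are hypothetical names for this example and do not reflect how Aptos actually schedules transactions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str
    receiver: str
    token: str
    amount: int

    def touched_keys(self):
        # Assumption: state is one balance entry per (account, token) pair.
        return {(self.sender, self.token), (self.receiver, self.token)}


def split_into_batches(transfers):
    """Group transfers into batches whose members share no state.

    A transfer goes into the batch right after the latest batch that
    touched any of its keys, so conflicting transfers keep their order.
    """
    last_batch = {}  # state key -> index of the last batch that touched it
    batches = []
    for tx in transfers:
        keys = tx.touched_keys()
        index = 1 + max((last_batch.get(k, -1) for k in keys), default=-1)
        if index == len(batches):
            batches.append([])
        batches[index].append(tx)
        for k in keys:
            last_batch[k] = index
    return batches


t1 = Transfer("Alice", "Bob", "XYZ", 100)
t2 = Transfer("Charles", "Edward", "UVW", 50)
t3 = Transfer("Alice", "Charles", "XYZ", 10)
batches = split_into_batches([t1, t2, t3])
```

Here `t1` and `t2` land in the same batch because they share no account or token, while `t3` must wait for `t1` because both touch Alice’s XYZ balance.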

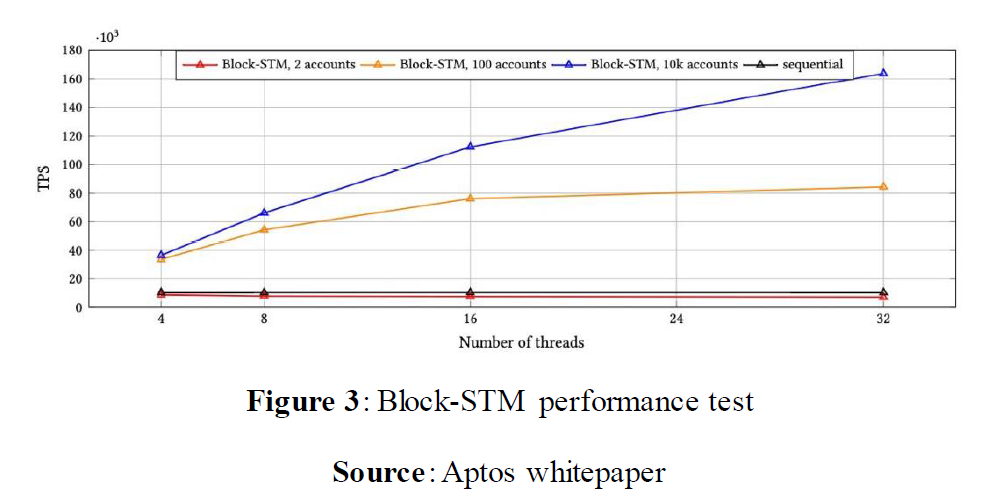

Aptos employs the Block-STM (Software Transactional Memory) parallel execution engine for transactions. Unrelated transactions are executed simultaneously on different cores on the same CPU, maximizing parallelism by multiple instruction streams and multiple data streams for transactions.
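The optimistic "execute first, validate afterwards" idea can be sketched as follows. This is a heavily simplified model and not the Block-STM algorithm itself, which relies on a multi-version data structure and a collaborative scheduler; the function names `execute` and `run_block` and the tuple format `(sender, receiver, amount)` are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def execute(tx, view):
    """Run one transfer against a read-only view; return (reads, writes)."""
    sender, receiver, amount = tx
    reads = {sender: view.get(sender, 0), receiver: view.get(receiver, 0)}
    if sender == receiver or reads[sender] < amount:
        return reads, {}  # self-transfer or insufficient balance: no effect
    writes = {sender: reads[sender] - amount, receiver: reads[receiver] + amount}
    return reads, writes


def run_block(state, txs, workers=4):
    """Speculatively execute all transactions, then validate in block order."""
    snapshot = dict(state)
    # Phase 1: every transaction runs in parallel against the same snapshot.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda tx: execute(tx, snapshot), txs))
    # Phase 2: commit in the preset order; re-execute if a read went stale.
    committed = dict(state)
    reexecuted = 0
    for tx, (reads, writes) in zip(txs, results):
        if any(committed.get(k, 0) != v for k, v in reads.items()):
            reads, writes = execute(tx, committed)
            reexecuted += 1
        committed.update(writes)
    return committed, reexecuted


state = {"alice": 100, "bob": 0, "charles": 0, "dave": 50, "erin": 0}
txs = [("alice", "bob", 80), ("alice", "charles", 80), ("dave", "erin", 50)]
final_state, reexecuted = run_block(state, txs)
```

In this run the second transfer read Alice’s balance before the first one committed, so it fails validation and is re-executed, at which point it is rejected for insufficient balance. The unrelated transfer from Dave to Erin commits without any re-execution, and the final state matches what sequential execution would produce.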

The Aptos whitepaper presents a performance test of Block-STM concurrency control: with 10,000 accounts, a TPS of 160,000 can be achieved with 32 threads, and the advantage of parallel execution remains significant when a large number of accounts are competing for execution.

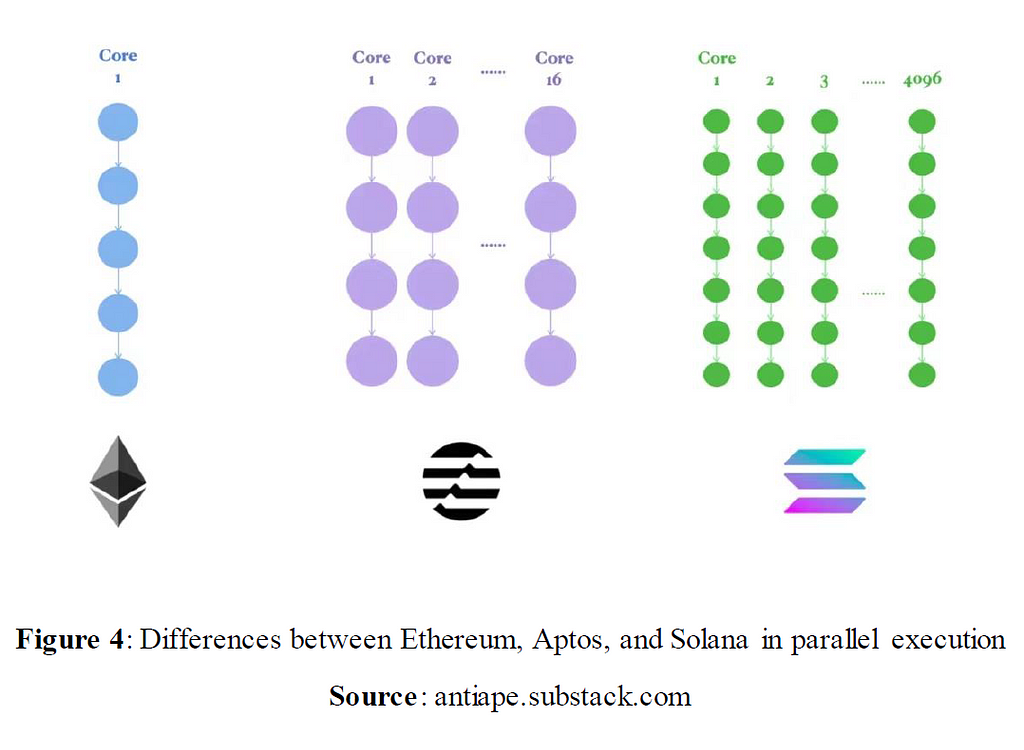

Let us now analyze the similarities and differences between Aptos, Ethereum, and Solana in terms of parallel execution. Some main opinions here have been cited from The Anti-Ape’s article, Ethereum -> Solana -> Aptos: the high-performance competition is on.

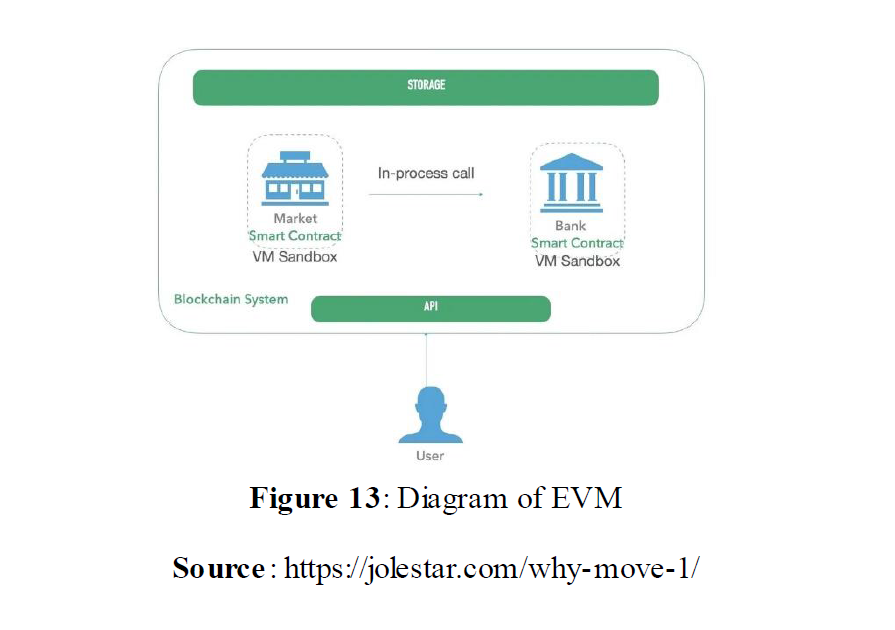

The Ethereum Virtual Machine (EVM) is single-threaded without parallelized processing; only 1 CPU core can be utilized, and transactions are processed in sequential order. It is critical not to confuse the CPU core used by the EVM with the Graphics Processing Unit (GPU) of an Ethereum miner: the CPU running the EVM handles user transactions, such as money transfers and smart contract calls, whereas the miner’s GPU handles ETHASH, the cryptographic computation that competes for the bookkeeping rights in POW mining.

Solana deploys 4096 GPU cores to process transactions in pursuit of faster performance. This design allows Solana to maintain high throughput when the transactions are weakly correlated. It has a pitfall, however, in that it loses the ability to execute in parallel for transactions that are logically sequential. For example, NFT minting transactions cannot be performed simultaneously on 4096 cores: an NFT collection has a quota, and each NFT has a unique serial number. When minting simultaneously, transactions must be processed in order to avoid duplication. Solana’s 4000+ GPU cores are hence unable to leverage parallel execution. In addition, individual GPU cores are weaker than CPU cores, which severely degrades network performance and even led to Solana’s network outages.

Aptos integrates the concepts of both and implements parallel computing with 8-core and 16-thread CPUs, which both enhances the concurrency of transactions through multiple threads and copes with sequentially executed transactions with higher performance CPUs.

Can Ethereum then parallelize processing by upgrading the CPU configuration, for example, by also using a 16-thread CPU? Unfortunately not: the EVM is designed to use only 1 CPU thread, and only by improving the EVM can the computational power of more CPU threads be brought into play. The ultimate goal of Ethereum is to make sharding + Layer2 a reality, which also promises high performance. Aptos also aspires toward sharding in the future, and if everything goes as smoothly as expected, it would not be impossible to achieve an extremely high throughput of 500,000 TPS, akin to Alipay during its peak transaction period on November 11.

3.2.2 All-in: Further segmentation of transactions, enhancing concurrency by DAG

Sui is similar to Aptos in the idea of parallel computing, but Sui has more detailed improvements to support parallelized processing of data. First, it abandons the traditional chain structure of blockchain and adopts the data structure of a DAG (directed acyclic graph), which inherently possesses the characteristic of high concurrency. Secondly, Sui subdivides resources into different objects on top of the resource properties of the Move language, and conducts parallelized processing at the object level. With the two combined, Sui is capable of achieving high concurrency and high performance.

In Sui’s improved version of the Move language, objects can be viewed as assets like banknotes. Each object has a list of properties, including the address of its owner (or shared ownership property), read/write properties, transferability properties, dApp/in-game functionality, etc. Most simple transfers only require changing the ownership property of a particular object, e.g., Alice transfers 100 USDT to Bob and the language only needs to change the owner of the 100 USDT from Alice to Bob. All unrelated transactions can be processed at the same time. The processing order is naturally presented on the data structure of the DAG, and there is a universal order planning for the whole landscape.
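One way to picture how object-level dependencies yield a DAG is the sketch below. It assumes each transaction simply lists the object IDs it touches; `build_dag`, `concurrent_layers` and the transaction and coin names are hypothetical, and the code illustrates the idea rather than Sui’s implementation.

```python
from collections import defaultdict


def build_dag(txs):
    """Link each transaction to the previous transaction touching the same object.

    txs maps a transaction name to the set of object IDs it touches,
    in submission order. Returns a mapping name -> set of parent names.
    """
    last_toucher = {}
    parents = {name: set() for name in txs}
    for name, objects in txs.items():
        for obj in objects:
            if obj in last_toucher:
                parents[name].add(last_toucher[obj])
            last_toucher[obj] = name
    return parents


def concurrent_layers(parents):
    """Group transactions by depth in the DAG; each layer can run concurrently."""
    depth = {}
    for name in parents:  # parents always appear earlier in insertion order
        depth[name] = 1 + max((depth[p] for p in parents[name]), default=-1)
    layers = defaultdict(list)
    for name, d in depth.items():
        layers[d].append(name)
    return [layers[d] for d in sorted(layers)]


txs = {
    "alice_pays_bob": {"coin_1"},
    "carol_pays_dave": {"coin_2"},
    "bob_pays_erin": {"coin_1"},
}
parents = build_dag(txs)
layers = concurrent_layers(parents)
```

The two payments that use different coins have no edge between them and fall into the same layer, while the second payment with `coin_1` depends on the first and is ordered after it.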

The DAG data structure reserves room for many branched chains in the network at the same time, rather than just a single chain. As long as each branch can be fully aware of the traffic of the corresponding object (validation), nodes only need to validate transactions without multiple rounds of communication to vote on a particular transaction. This leaves room for more concurrent transactions per unit of time and reduces the burden on the verifier side in the consensus process.

Sui displayed excellent performance during the test phase. In March 2022, the TPS of a Sui Authority node running on a MacBook Pro (8-core M1 chip) with unoptimized single-core operation reached 120,000; TPS is expected to grow roughly linearly as the number of CPU cores is increased.

3.2.3 Comparison of two parallelization solutions

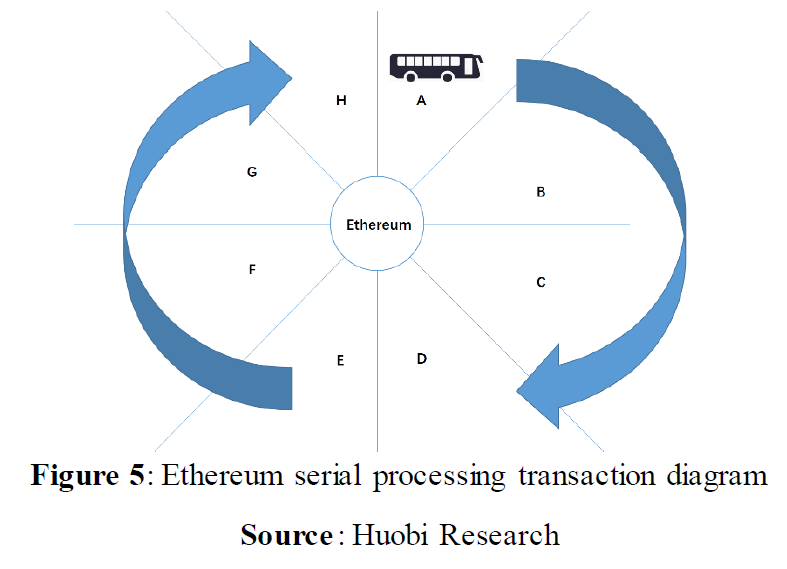

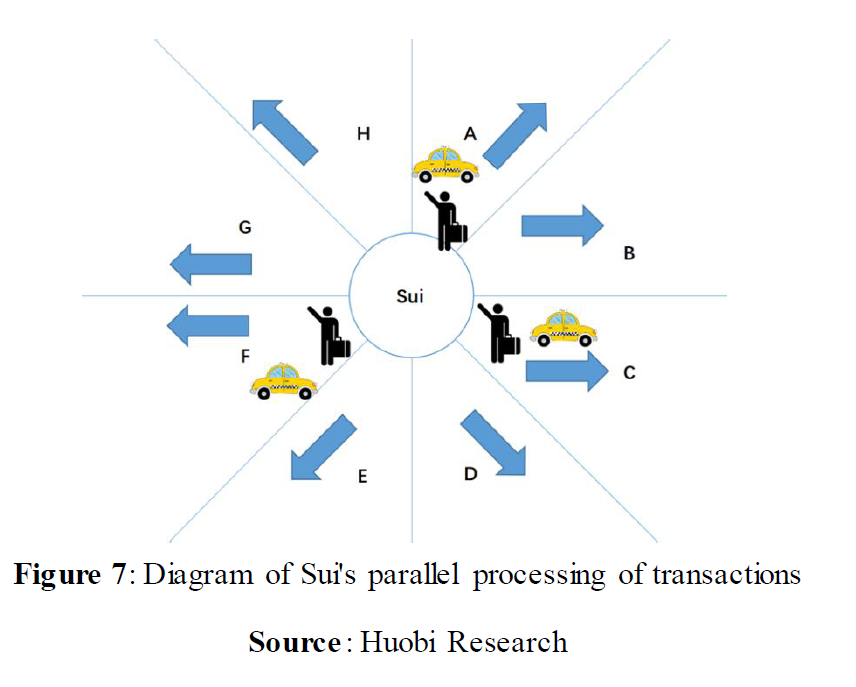

What are the differences between the design of parallelized processing in Aptos and Sui? The example below compares the similarities and differences in parallelization between Ethereum, Aptos and Sui. Assume each network is a city with 8 points of interest. A user submitting a transaction can be viewed as arriving in the city via a flight, and the transaction is deemed confirmed when the user arrives at the desired point of interest from the airport.

Ethereum’s practice is to load a bus with passengers until the vehicle is full. The bus will circle around the 8 points of interest and drop all passengers off one by one.

Aptos can complete the same task by starting off with eight buses, so passengers with different destinations can go straight to their desired destinations without any prior stops. Due to more advanced hardware, the Aptos buses are also faster than the Ethereum buses, which saves even more time.

Sui’s approach, meanwhile, can be viewed as taxi cab pickups, where passengers can catch a cab and depart immediately, eliminating the time needed to wait for the bus to fill up before departing. Although cabs are presumably faster, the drawbacks cannot be ignored. All transactions in Sui still require the signatures of a sufficient number of verifiers in order to be written onto the ledger. Sui’s leader verifier needs to collect signatures from other verifiers for each transaction, which denotes additional, incremental workload compared to Aptos, whose leader verifier only collects signatures for the proposed block. The additional workload in Sui may offset some of the advantages in throughput and latency.

Both Aptos and Sui contain feasible ideas, and their advantages and disadvantages are briefly compared in the table below:

It is still too early to come to a conclusion as to which solution is more promising. First, both remain in the test stage (unlikely to take a long time if the mainnet of Aptos is online leading up to the publication of this article), and it is still necessary to capture enough data along the running time for better evaluation. Second, the overall on-chain environment is currently barren, and their claimed processing power is perfectly adequate. They all outperform current high-performance L1 chains as long as regular downtime is not a concern.

One last thing to be noted is that parallelized computing cannot solve all problems simply by adding more cores and classifying transactions. Aptos, Sui and other future L1 chains are by no means just payment networks; they will also have to carry many dApps and smart contracts. Existing smart contracts, such as AMM and NFT minting, are only suitable for serial execution. The ultimate power of parallel computing can only be unleashed when the algorithms of smart contracts themselves are amenable to parallel computing. Developers need to modify existing smart contracts or write a new batch, a process that involves an overwhelming workload and, more critically, a transition in thought patterns. L1 chains with parallel computing require time to reveal their full capability; the industry could be dramatically shaken up once the moment comes, and the paradigm could be brand new from head to toe. This will become apparent over the course of time.

3.3 The Ceiling of Consensus Algorithms

Innovation in consensus algorithms is in fact more challenging than expected. The earliest BFT consensus, dating back to 1982, applies to reaching consensus in distributed systems, especially in the presence of possible node failures and misconduct. The main issue with BFT is that it requires multiple rounds of communication, and the communication overhead is proportional to the square of the number of nodes. The Bitcoin network operates under the Nakamoto consensus, which has two features: (1) limiting the number of proposals in the network by proof of work (hash time + cost) and (2) applying the longest chain principle to ensure eventual confirmation and probabilistic security (an economic game to constrain attackers). The Nakamoto consensus requires an extremely high level of cost, miner performance, and sustained online status of nodes to sustain the network.
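To make the quadratic growth concrete, the short sketch below counts the messages in one voting round under two simplified assumptions: every node sends its vote to every other node, or every node only exchanges messages with a single leader (the pattern used by HotStuff-style protocols, which are discussed later in this section). Both functions are illustrative models that ignore retransmissions and leader changes.

```python
def all_to_all_messages(n: int) -> int:
    """Each of the n nodes sends its vote to the other n - 1 nodes."""
    return n * (n - 1)


def leader_based_messages(n: int) -> int:
    """The leader sends a proposal to n - 1 nodes and collects n - 1 votes."""
    return 2 * (n - 1)


for n in (4, 100, 1000):
    print(n, all_to_all_messages(n), leader_based_messages(n))
```

With 100 nodes the all-to-all round needs 9,900 messages against 198 for the leader-based round, and the gap widens as the network grows.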

In terms of consensus mechanisms, there are three innovative projects worth mentioning. The first is Solana’s PoH (Proof of History) consensus, which establishes a world clock across the network. This allows transactions to be sorted by their local times to ensure the consistency of the ledger. The PoH consensus did result in a breakthrough in Solana’s performance, with TPS reaching 2000–3000. However, since hackers can predict and therefore attack the next block producer, PoH is somewhat vulnerable. The second is Avalanche’s Avalanche consensus, whose test network is capable of reaching 4500 TPS. The Avalanche consensus belongs to the BFT family, but it has no leader node, and all nodes are equal. Each node randomly samples surrounding nodes to form its own result, and the system eventually reaches consensus in a rather short time. The third is the Tendermint consensus, which can also be deemed an upgraded version of BFT, applicable to partially synchronous networks, with two-stage voting where each round of voting is subject to a timeout. The innovation of the Tendermint consensus is to simplify the leader switching process at the cost of time. It is no coincidence that most of the leading consensus algorithms evolved from BFT, such as HotStuff and Polkadot’s GRANDPA.

Three criteria can be adopted to measure the quality of a consensus protocol: safety, liveness, and responsiveness. These three metrics are explained here:

● Safety: All honest verifiers will confirm the proposal and execute it. The Bitcoin network, for example, uses algorithms to defend against attacks.

● Liveness: There will always be proposals in the network. This part includes how Leader nodes are switched, how expiration is defined, etc.

● Responsiveness: The confirmation time of the block is only related to the network latency, and the nodes will always respond to the consensus. The Tendermint consensus, for example, needs to wait for a fixed period to build a block. The responsiveness is poor even when the network is in a good condition.

Diem’s consensus is derived from HotStuff, a three-stage voting BFT consensus in which nodes do not need to communicate with each other in each round and only need to submit their votes to the leader node. The DiemBFT consensus has made considerable progress on top of this, including the introduction of a memory pool. The memory pool is equivalent to adding an actual storage layer, similar to Solana’s, which improves the performance of the system on a large scale. It was also inherited by Aptos and Sui. Sui developed Narwhal and Tusk from DiemBFT, which enable the system to reach consensus quickly in an asynchronous network environment. The following is an analysis of these two consensus designs.

3.3.1 Fundamental changes in block structure

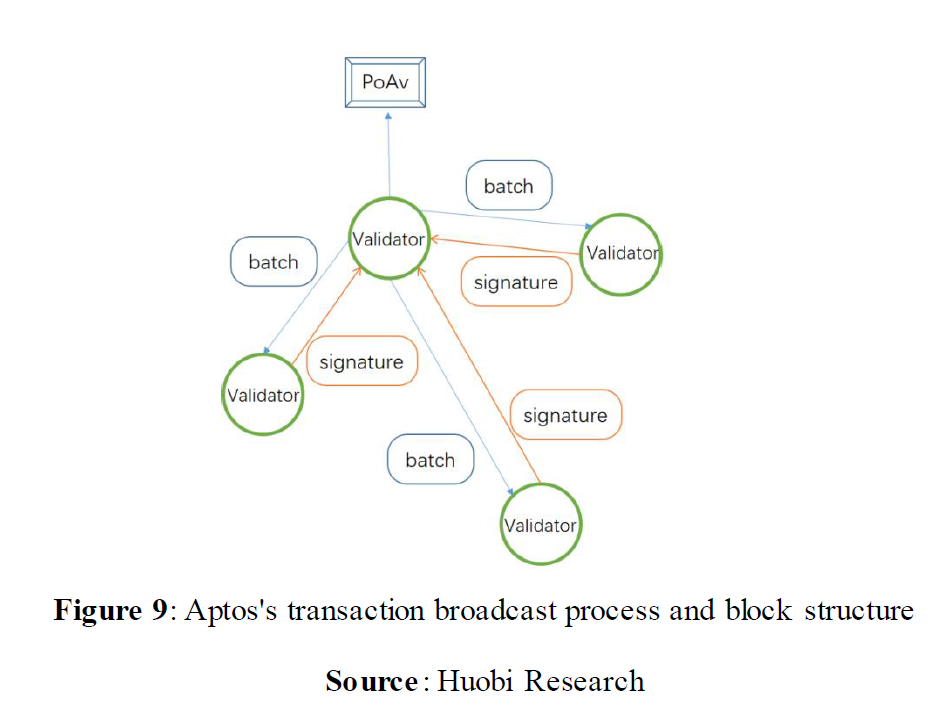

The Aptos consensus mechanism is a direct continuation of DiemBFT. DiemBFT has a reconfiguration mechanism for the voting power of consensus nodes: verifiers do not have equal voting power but are weighted by stake, in proportion to the number of tokens each has pledged. Verifiers share transaction information with one another through a shared memory pool protocol and then vote in rounds, similar to HotStuff.

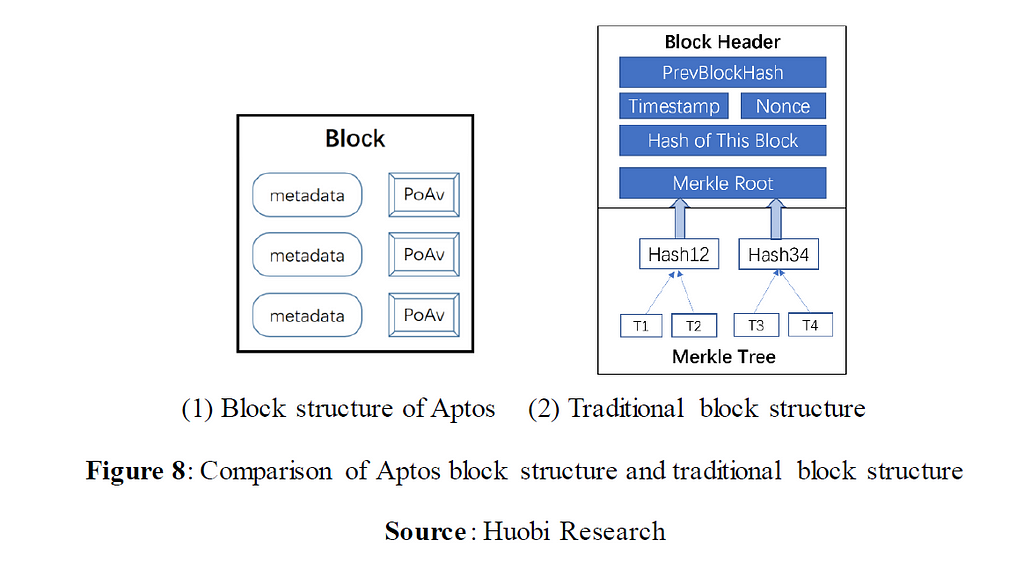

The process and content of Aptos’s consensus differ somewhat from those of a typical blockchain. Transaction dissemination and the consensus phase are separate, and verifiers only need to reach consensus on the metadata of the transactions. In other words, an Aptos block contains only transaction metadata instead of actual transaction data. This is completely different from the traditional block structure, as shown in the accompanying figure.

The following section explains how the consensus was reached.

After a verifier collects and verifies transactions, it packs a certain number of transactions, or the transactions received within a certain period of time, into a batch, which is then broadcast across the verifier network. The verifier cannot tamper with the batch after distributing it. Other verifiers accept and store the batch, sign its summary, and send the signature back to the originating verifier. When verifiers holding 2f+1 of the weight (f being the maximum total weight of faulty verifiers) have signed the batch summary, the system generates a proof of availability (PoAv). PoAv guarantees that honest verifiers holding at least f+1 of the weight have stored the batch, so all honest verifiers can retrieve it before execution. (Even if faulty verifiers with weight f submitted signatures in the previous step and later deleted the transactions, honest verifiers with a weight of at least f+1 still retain them.) As a result, a block can be generated without containing the actual transactions: the node proposing the block only needs to sort and pack a few batches of metadata along with their PoAv. After other verifiers validate the PoAv and the block metadata criteria (e.g., proposal timestamp, block expiration time), they can vote on whether the block is legitimate.
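The weighted 2f+1 threshold described above can be sketched in a few lines. This is a minimal illustration, not Aptos code: the `Batch` class, the function names, and the stake values are all hypothetical, and signature verification is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Batch:
    """Hypothetical batch record: a digest plus the verifiers that signed it."""
    digest: str
    signers: set = field(default_factory=set)


def quorum_weight(stakes: dict) -> int:
    """Smallest weight strictly above two thirds of total stake (the 2f+1 threshold)."""
    total = sum(stakes.values())
    return (2 * total) // 3 + 1


def has_poav(batch: Batch, stakes: dict) -> bool:
    """A proof of availability exists once the signers' stake reaches the quorum."""
    signed = sum(stakes[v] for v in batch.signers)
    return signed >= quorum_weight(stakes)


# Hypothetical stake weights; total is 100, so the quorum weight is 67.
stakes = {"v1": 40, "v2": 30, "v3": 20, "v4": 10}
batch = Batch(digest="abc123")

batch.signers.update({"v1", "v3"})      # 60 of 100 signed
before = has_poav(batch, stakes)        # quorum not yet reached

batch.signers.add("v4")                 # 70 of 100 signed
after = has_poav(batch, stakes)         # quorum reached
```

With a total weight of 100, at most 33 can be faulty, so any 67 of signed weight includes at least 34 held by honest verifiers, which is the f+1 retention guarantee the text relies on.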

Aptos spreads the bandwidth demand of the consensus phase evenly over a longer period of time. This allows consensus to run on minimal bandwidth (because only a small amount of data, the block metadata and PoAv, has to be transferred), resulting in high transaction throughput and minimal latency. Consensus in DiemBFTv4 is fast, typically requiring only two network round-trips, with a full round-trip time of less than 300 milliseconds. Since 2019, DiemBFTv4 has been extensively tested over multiple iterations by dozens of node operators and a multi-wallet ecosystem, and by three Aptos test networks. Its stability therefore appears well established.

3.3.2 Combination of DAG structure and BFT consensus

Sui’s consensus mechanism, the jewel in its crown, is a breakthrough in both innovation and performance. Although the consensus of both Aptos and Sui derives from HotStuff BFT, Sui modifies the transaction memory pool of DiemBFT and places transaction broadcasting directly in it. In addition, a global random coin algorithm named Tusk is used to achieve asynchronous consensus. This consensus design gives Sui faster transactions, low latency, and better scalability, and thanks to these innovations Sui can outperform Aptos.

(1) Advantage of DAG structure in concurrency

Sui is organized around transactions rather than blocks. Nodes vote on the “vertices” of transactions, which are similar to the root of a Merkle tree and contain references to previously associated transactions. Sui combines the advantages of its object type classification and the DAG to validate transactions faster. Nodes do not need to reach consensus on the DAG itself, but to prevent double-spending the network still needs nodes to verify and sign transactions, which is not feasible without some simple consensus mechanism.

● Creating conditions for parallelization: As a data structure, a DAG can solve the efficiency problem when combined with blockchain. A chain structure allows only one chain to exist in the whole network, which makes concurrent block production infeasible. With a DAG structure, multiple blocks can be packed in the network in parallel.

● Causal relationships speed up the consensus process: In Sui, the causal relationship between transactions is a critical feature. It is similar to the Merkle root in a block and forms the backbone of the DAG structure, serving as the reference for consensus voting.

(2) When DAG meets BFT

Sui runs a PoS mechanism, and its consensus is a hybrid DAG+BFT protocol built on the elements described above. Several projects have started implementing this type of consensus, including Aptos, Celo, and Sommelier, but the specific implementations differ. For single-owner objects, Sui uses consistent broadcast, which delegates transaction confirmation to the transaction itself and relieves verifiers of the duty of sorting and packing blocks. An ordinary DAG requires a total order; here, nodes can verify a transaction simply by following the path of the object in the memory pool, i.e., by tracing all previous transactions related to the object, including their signatures, timestamps, and hash values.

For shared objects, Sui uses a BFT variant, Narwhal+Tusk. Narwhal constructs the memory pool, and Tusk is a random coin algorithm that selects the leader node, similar to Algorand’s verifiable random function, so that the protocol can operate under asynchronous settings. With the help of the DAG, Sui orders transactions in the memory pool by causal relationship, laying the foundation for reliable communication. The nodes then submit the topmost “vertices” to the leader node and perform BFT consensus.
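Ordering by causal relationship amounts to a topological sort of the DAG: a vertex can only be placed after every vertex it references. The sketch below uses Python's standard `graphlib` module on a hypothetical four-transaction DAG. It illustrates the idea only and is not how Narwhal is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG: each vertex maps to the earlier vertices it references.
dag = {
    "tx_a": set(),
    "tx_b": {"tx_a"},
    "tx_c": {"tx_a"},
    "tx_d": {"tx_b", "tx_c"},
}

# static_order() yields every vertex after all of its predecessors.
order = list(TopologicalSorter(dag).static_order())

# tx_b and tx_c do not reference each other, so either may come first;
# vertices like these are the ones that can be processed in parallel.
position = {tx: i for i, tx in enumerate(order)}
causally_valid = all(
    position[parent] < position[tx] for tx, parents in dag.items() for parent in parents
)
```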

(3) Consensus process

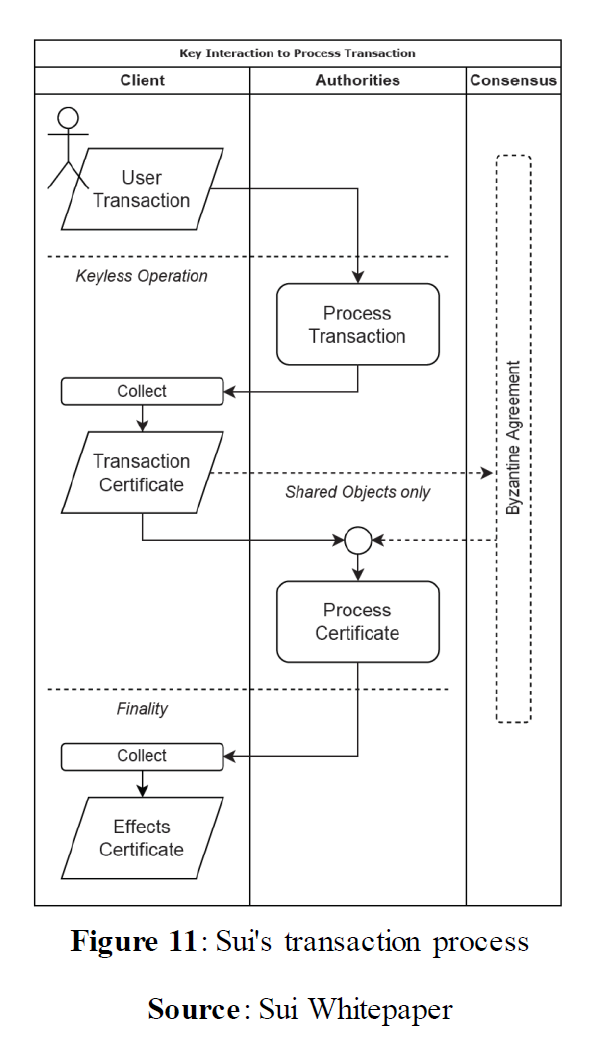

Two roles are assigned in Sui’s consensus mechanism: Clients and Authorities. Clients are full nodes that do not participate in consensus; they collect the responses to transactions and assemble transaction certificates and effects certificates from them. Authorities are the verifier nodes that participate in consensus; they verify and sign transactions. Signed transactions are returned to the client, which collects them and issues the transaction certificate (the DAG process). The client then sends the completed transaction certificate back to the Authorities, and if the transaction involves shared objects, BFT consensus is required. Single-owner object transactions do not require consensus, but they still require signatures from Authorities. Transaction processing for shared objects is slightly more complex: the node needs to order the objects and other associated objects, then execute the transaction and aggregate the signatures to form an execution certificate. Once a quorum of signatures (2/3) has been gathered, i.e., finality is reached, the client can collect the execution certificate and create the effects certificate.
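The two decisions in this flow, whether a transaction needs BFT ordering and whether enough Authorities have signed, can be sketched as follows. The function names and the object labels are hypothetical, and the sketch assumes equally weighted Authorities and a strict "more than two thirds" quorum, which is a simplification of a stake-weighted system.

```python
def needs_consensus(tx_objects: dict) -> bool:
    """A transaction goes through BFT ordering only if it touches a shared object.

    tx_objects maps a (hypothetical) object name to "owned" or "shared".
    """
    return any(kind == "shared" for kind in tx_objects.values())


def has_quorum(signatures: int, authorities: int) -> bool:
    """Assumed rule: strictly more than two thirds of equally weighted Authorities."""
    return 3 * signatures > 2 * authorities


# A simple payment touches only objects owned by the sender.
payment = {"sender_coin": "owned"}
# A swap touches a pool that many users can modify.
swap = {"sender_coin": "owned", "liquidity_pool": "shared"}

payment_path = "consensus" if needs_consensus(payment) else "fast path"
swap_path = "consensus" if needs_consensus(swap) else "fast path"
```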

(4) Memory pool constructed by Narwhal

DiemBFT makes several alterations to HotStuff, one of which is the creation of a memory pool, a feature absent in HotStuff. Narwhal’s memory pool differs from DiemBFT’s in that Diem’s memory pool is only used for sharing the transaction pool and sending it periodically. Narwhal, on the other hand, places the broadcast of transactions in the memory pool, which is equivalent to separating the network communication layer from the consensus logic. Nodes only sort and vote on proposal hashes. Two benefits stand out:

● The consensus layer can complete the work of consensus faster: the broadcast does not occupy the consensus communication channel, and the memory pool directly completes the broadcast of the block.

● Scale-out is achieved: nodes can add hardware to improve performance, and Narwhal can boost performance in a linear manner (similar to sharding). Each verifier can scale horizontally and increase transaction throughput by adding more computing power.
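The separation between broadcasting data and agreeing on hashes can be sketched as below. This is an assumption-laden illustration rather than Narwhal's actual data structures: the `broadcast` function, the in-memory `mempool` dictionary, and the JSON encoding are hypothetical stand-ins, and SHA-256 is chosen only for the example.

```python
import hashlib
import json

# Hypothetical mempool: digest -> full batch, filled by the broadcast layer.
mempool = {}


def batch_digest(transactions: list) -> str:
    """Deterministic SHA-256 digest of a batch (JSON encoding is an assumption)."""
    payload = json.dumps(transactions, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def broadcast(transactions: list) -> str:
    """Store the full batch in the mempool and return only its digest."""
    digest = batch_digest(transactions)
    mempool[digest] = transactions
    return digest


# The consensus layer sorts and votes on short digests, never on the payload.
proposal = [
    broadcast([{"from": "alice", "to": "bob", "amount": 5}]),
    broadcast([{"from": "carol", "to": "dave", "amount": 7}]),
]

# At execution time the full transactions are looked up by digest.
executed = [tx for digest in proposal for tx in mempool[digest]]
```

Each digest is 64 hexadecimal characters regardless of batch size, which is why the consensus channel stays light even as batches grow.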

From Aptos and Sui, the two new L1 chains derived from Diem, it is evident that current research on consensus mechanisms has been validated. Innovations build on the classical consensus base, and there are two directions for future development: 1. Replacing the current block structure and content format with a lightweight version that takes part in the consensus process; 2. Promoting parallelization in every possible way, especially in transaction sorting and broadcasting. In the future, the characteristics of HotStuff are likely to persist in the evolution of consensus mechanisms, since the current mechanism is already highly mature. As for the memory pool, a buffer zone for transactions waiting in line, its role will be modified and enriched.

3.4 Game theory veiled under economic models

Tokenomics is becoming increasingly pivotal in blockchain projects and can bolster projects that already have a technical advantage. Every parameter setting and modification of the token economy is critical to the project and the community. For an L1 chain, a token economy model can be measured from two aspects: (1) whether it can capture more economic value; (2) whether it can balance the relationship between the network, users, developers and validators (miners). Reaching this balance is difficult, which is probably why established projects are either slow to issue tokens or have been continuously improving their token economy.

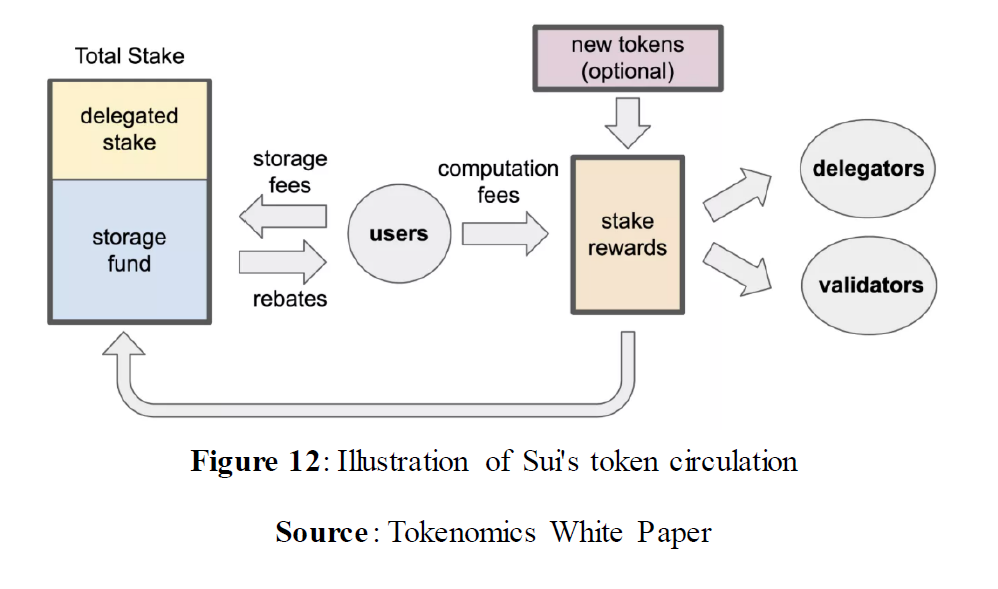

Among the new L1 chains from Diem, only Sui has a published token economy, which is actually more of an exploration of the economic model. Gas fees in Sui’s token economy include transaction fee and storage fee, premised on two aspects: (1) it preserves the advantages of the Ethereum economic model; (2) it proposes a new storage fee solution.

(1) Gas fee

Similar to Ethereum, users need to preset a gas limit, the maximum gas budget, before paying. Execution continues as long as budget remains and is aborted if the budget is exhausted. In addition, Sui follows EIP-1559: the protocol sets a base fee (in units of gas per $Sui) that is algorithmically adjusted at each epoch boundary, and the transaction sender can also include an optional tip (denominated in $Sui). At the beginning of each epoch, validators are asked to submit the lowest price at which they are willing to process a transaction, and the protocol takes the 2/3 percentile (by stake) of the submitted prices as the reference price for the epoch. Validators who submit a low price and who process transactions at a low price receive higher rewards, and vice versa.

Gas fee = (Gas Used * Base Fee) + Tip

Gas fees cover both computation and storage costs, and gas prices should be relatively low in the Sui network. Two rules shape verifiers’ offers when they submit gas prices: computational rules motivate verifiers to honor the offers they submitted during the gas quote, while allocation rules incentivize verifiers to submit low gas prices.
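As a worked example of the formula above, the sketch below computes a fee and models the budget check. The numbers are made up, and one behavior is an assumption not stated in the text: when execution aborts, the sketch charges for the gas consumed up to the budget, as Ethereum does for out-of-gas transactions.

```python
def gas_fee(gas_used: int, base_fee: int, tip: int = 0) -> int:
    """Gas fee = (Gas Used * Base Fee) + Tip."""
    return gas_used * base_fee + tip


def charge(gas_needed: int, gas_budget: int, base_fee: int, tip: int = 0):
    """Return (succeeded, fee) for a transaction with a preset gas budget.

    Assumption: an aborted transaction still pays for the budget it consumed.
    """
    if gas_needed > gas_budget:
        return False, gas_fee(gas_budget, base_fee, tip)
    return True, gas_fee(gas_needed, base_fee, tip)


# Hypothetical values: 1,000 gas used at a base fee of 2, plus a tip of 50.
fee = gas_fee(1000, 2, 50)            # 1000 * 2 + 50 = 2050

ok = charge(800, 1000, 2, 10)         # fits the budget: (True, 1610)
aborted = charge(1500, 1000, 2)       # exceeds the budget: (False, 2000)
```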

(2) Storage fee

Users also need to calculate and pay storage fees in advance. This approach is similar to the gas fee, and also intended to prevent network congestion and potential attacks from some invalid storage transactions. Users must pay for current execution and future storage. However, the fee model is difficult to calculate, the demand for storage is not estimable, and the cost therefore becomes unstable.

To solve this problem, Sui introduces the concept of a storage fund, which redistributes past transaction fees to future validators. When on-chain demand is high, the storage fund can be used to subsidize verifiers. The storage fund has four characteristics: 1. The fund is paid for by past transactions; 2. The storage fund is deposited into the treasury to generate revenue, which is awarded to verifiers. In effect, verifiers borrow the storage fund, which serves as the equivalent of principal; 3. The storage fund mechanism incentivizes users to delete stored data in order to be eligible for a discount on the storage fee; 4. The storage fund does not belong to the principal.

The economic model of storage fees is a new attempt after Filecoin, which requires users to find suitable miners to store data by themselves. Sui’s approach still has drawbacks: profit-driven node operators will try to delete old data, and there is no way to guarantee that nodes will stick to the rules of the protocol. Adjustments will be made on an ad-hoc basis.

While Sui’s token economy focuses on the construction of a gas fee model, verifier staking rewards, on-chain governance, and incentives for protocols and developers are no less critical. We look forward to further developments from Aptos and Sui. As on-chain storage on universal L1 chains is valuable to a certain extent, a reasonable fee structure for storage could: 1. Better capture explicit token value; 2. Relieve on-chain storage pressure. Similarly, future token economy development for L1 chains is likely to involve: 1. A sustainable business model for validators/miners; 2. Capture of protocol value and flexible participation through appropriate governance; 3. A more rigorous gas fee model; 4. More application scenarios for tokens, including pegs with on-chain algorithmic stablecoins.

3.5 Linera’s path to a dedicated L1 chain

As the multi-chain ecosystems of Cosmos and Avalanche further develop, more L1 chains will decide to become dedicated chains, and more applications with sufficient users will create a new L1 chain directly. Examples include dYdX, WAX, etc. Although dedicated L1 chains are less demanding in terms of performance, they are closer to application scenarios and user growth.

Among the L1 chains from Diem, Linera is more inclined to become a dedicated L1 chain. Linera’s main application scenario is payment processing, connecting the real world with the Web3 world, which may fulfil Facebook’s vision of Diem becoming a payment processing tool. To do so, Linera must solve the following technical challenges:

(1) Low latency

Mathieu, the founder of Linera, believes that there is a strong demand for low-latency blockchain in the market, and that more Dapps need low latency to respond instantly to user actions, such as retail payments, micropayments for gaming apps, self-hosted transactions, and connections between blockchains.

Due to the complex coordination between validators and the overhead of memory pools, confirmation on current blockchains typically takes several seconds. To meet the rigid demand for low latency, Linera takes a different approach from Aptos and Sui: it removes memory pools entirely to minimize interactions between verifiers, which can greatly speed up simple operations such as payments. The Linera blockchain expects most account-based operations to be confirmed within fractions of a second.

(2) Linear Scale

Linear scale is a technical idea from Web2. Dating back to around 2000, “linear scale” horizontal expansion emerged on the Internet: database resources could be provisioned according to real-time throughput, and more nodes (hardware) could be added dynamically, so that enterprises need not worry about website downtime under high concurrency or about resources lying idle most of the time.

Current blockchains prioritize a “sequential” execution model that allows user accounts and smart contracts to interact arbitrarily across a series of transactions, but sequential execution hinders linear scaling (vertical). Linera will develop and promote a new execution model that is compatible with linear scale so that operations on various accounts could be completed simultaneously in different execution threads. In this way, execution can always be extended by adding new processing units to each validator.
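The idea of completing operations on different accounts in separate execution threads can be sketched as follows. This is not Linera's execution model: the function names and balances are hypothetical, each operation is assumed to touch a single account, and Python threads are used only to show the structure (they do not give a real speed-up for CPU-bound work).

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def apply_account_ops(start_balance: int, deltas: list) -> int:
    """Apply one account's operations in order; no other account is touched."""
    balance = start_balance
    for delta in deltas:
        balance += delta
    return balance


def execute_parallel(balances: dict, operations: list, workers: int = 4) -> dict:
    """Group operations by account, then run each account's group on its own worker."""
    per_account = defaultdict(list)
    for account, delta in operations:
        per_account[account].append(delta)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {
            account: pool.submit(apply_account_ops, balances[account], deltas)
            for account, deltas in per_account.items()
        }
        updated = {account: future.result() for account, future in futures.items()}

    return {**balances, **updated}


# Hypothetical balances and single-account operations (account, balance change).
balances = {"alice": 100, "bob": 50, "carol": 0}
operations = [("alice", -30), ("bob", 20), ("alice", 5)]
result = execute_parallel(balances, operations)
```

Because the groups share no state, adding workers raises capacity without changing the result, which is the property a linearly scalable execution model needs. Operations that span several accounts would need extra coordination that this sketch leaves out.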

Linera, which builds on FastPay and the Zef protocol, will likely become a low-latency payment chain with fast transaction confirmation that is comparable to Web2 Internet payment systems, while inheriting the anonymous payment features of the Zef protocol. As for FastPay, it can be deployed in a variety of environments: as an independent settlement layer for native tokens and cryptocurrencies, as a sidechain for another cryptocurrency, or as a high-performance settlement layer alongside an established Real Time Gross Settlement System (RTGS) to settle fiat retail payments. On the whole, Linera can be used not only as a payment chain, but most likely also as a payment sidechain for other L1 chains, or embedded within other Web2 Internet products to provide decentralized instant payment services in the future.

Dedicated L1 chains can often provide a better interaction experience and a higher level of security than universal L1 chains. For example, dYdX announced its departure from StarkWare and chose to develop a new chain based on the Cosmos SDK. Rollups may well be sufficient in the near future to meet dYdX’s high requirements for smooth trading as a futures DEX, but even if rollup performance proves more than sufficient, an exclusive blockchain could still improve the trading experience compared with competing against hundreds of dApps for block space, and there are many ways to improve the chain’s security. In the future, Linera, as a dedicated L1 chain with fewer applications and with payments making up most transactions in a block, is likely to achieve lower transaction latency, faster transaction confirmation and a higher level of security than Aptos and Sui in a simple and segmented environment.

4. Exploration of New Next-gen L1 Chain Paradigm

From the above analysis of the new L1 chains from Diem, innovation in L1 chain technology appears to be the main narrative. According to our research, their ecosystem development strategies can be summarized as follows: 1. lowering the threshold for users, which is reflected in wallet applications where users can register directly with an email address; 2. attracting developers through the Move language, which makes it easier for developers to create tools and frameworks for applications. The L1 chains from Diem signal a new era for blockchain infrastructure: (1) greater competitive pressure among L1 chains, mainly in technology and performance. The time has passed when L1 chains could launch merely on endorsements from institutions or celebrities; technical teams and backgrounds now matter more; (2) technology and operation are equally significant, as seen in every aspect of Aptos, from the favor of capital to the rapid support of ecosystem applications. However, some issues remain thorny, such as keeping barriers to entry low and genuinely satisfying decentralized users.

Starting from the Diem family of L1 chains, the future of the next-gen L1 chains is unclear.

(1) Parallelization is the new paradigm of future L1 chains

Parallel computing has slightly stricter hardware requirements, but miners/validators can absorb this cost increase without seriously affecting the degree of decentralization. At the same time, parallelized computing will substantially improve the overall performance and stability of the blockchain, which expands the boundaries of the impossible triangle. Meanwhile, consensus-based innovation will be difficult. Within the next 4 years, Aptos and Sui are likely to be the performance benchmarks for L1 chains and will be hard to beat. In terms of ecosystem applications, the next bull market is unlikely to arrive without the parallelization of AMM and NFT minting. AMM is the foundation of DeFi, and NFTs may play a more significant role in more scenarios; a bull market will certainly be accompanied by an increase in trading density and in the variety of applications, and performance should not be the barrier to progress.

(2) Evolution of virtual machine

The EVM is an isolated, deterministic sandbox environment created by a chain node for smart contracts, where multiple contract programs run in different virtual machine sandboxes within the same process. In this case, calls between Ethereum contracts are in fact calls between different smart contract VMs within the same process, and security relies on the isolation between smart contract VMs.

The Move virtual machine, on the other hand, supports parallel execution. Calls between contracts take place inside a single sandbox, and in this architecture contract security rests mainly on the built-in security of the programming language rather than solely on VM isolation: each Move-based asset is natively scarce and unique, and the corresponding access control properties are enforced.

(3) Attempts on dedicated L1 chains

Currently, some applications with a considerable user base still intend to form new chains, for example dYdX in the financial sector. Some dedicated L1 chains may solve technical problems; for example, Celestia provides a data availability layer for Layer 2. Most of these new paradigms start from a technical point of view, but technology per se is meaningless apart from the overall development of infrastructure. Besides, the overall development of L1 chains relies heavily on whether the macro economy is healthy enough for capital to keep flowing in. The strictness of crypto regulation in various countries may also pose a threat to developers, nodes and private L1 chains, and it is another prominent factor in determining the future of L1 chains.

About Huobi Research Institute

Huobi Blockchain Application Research Institute (referred to as “Huobi Research Institute”) was established in April 2016. Since March 2018, it has been committed to comprehensively expanding research and exploration in various fields of blockchain. With blockchain as its research object, its goal is to accelerate the research and development of blockchain technology, promote the application of the blockchain industry, and promote the ecological optimization of the blockchain industry. Its main research areas include industry trends, technology paths, application innovations, and model exploration in the blockchain field. Based on the principles of public welfare, rigor and innovation, Huobi Research Institute will carry out extensive and in-depth cooperation with governments, enterprises, universities and other institutions through various forms to build a research platform covering the complete industrial chain of the blockchain, and to provide industry professionals with a solid theoretical basis and trend judgments that promote the healthy and sustainable development of the entire blockchain industry.

Contact us:

Website:http://research.huobi.com

Email:research@huobi.com

Twitter:https://twitter.com/Huobi_Research

Telegram:https://t.me/HuobiResearchOfficial

Medium:https://medium.com/huobi-research

Disclaimer

1. The author of this report and his organization do not have any relationship that affects the objectivity, independence, and fairness of the report with other third parties involved in this report.

2. The information and data cited in this report are from compliance channels. The Sources of the information and data are considered reliable by the author, and necessary verifications have been made for their authenticity, accuracy and completeness, but the author makes no guarantee for their authenticity, accuracy or completeness.

3. The content of the report is for reference only, and the facts and opinions in the report do not constitute business, investment and other related recommendations. The author does not assume any responsibility for the losses caused by the use of the contents of this report, unless clearly stipulated by laws and regulations. Readers should not make business and investment decisions based solely on this report, nor should they give up their ability to make independent judgments because of it.

4. The information, opinions and inferences contained in this report only reflect the judgments of the researchers on the date of finalizing this report. In the future, based on industry changes and data and information updates, there is the possibility of updates of opinions and judgments.

5. The copyright of this report is only owned by Huobi Blockchain Research Institute. If you need to quote the content of this report, please indicate the Source. If you need a large amount of references, please inform in advance (see “About Huobi Blockchain Research Institute” for contact information) and use it within the allowed scope. Under no circumstances shall this report be quoted, deleted or modified contrary to the original intent.

Post-Diem: An Outlook for Next-gen L1 Chains was originally published in Huobi Research on Medium.
