Photo by Dhaval Parmar on Unsplash

Last year was a very exciting year for devs like us.

A whole lot of promising up-and-coming technologies have found their place in the spotlight.

Maybe so many that it’s hard to keep up.

To stay sane, I guess we all have our favorites to focus on.

My team and I are big fans of everything related to the JAMstack, headless CMSs & static site generators.

So let’s keep moving this way and pushing new boundaries with some of the great tools we’ve explored in the last few months.

In this post I’ll show you how to:

- Set up Grav CMS as a headless CMS.

- Install React-powered static site generator Gatsby.

- Create a source plugin to query the API with GraphQL.

The result? A small demo shop powered by Snipcart.

Sounds heavy?

Bear with me, it’s gonna be fun.

Let’s start by quickly introducing these tools.

What is Grav CMS?

Simply put, Grav is a modern open-source flat-file CMS. It actually won the CMS Critic award for “Best open source CMS” in 2016.

In this case, I’ll use it solely for content management, decoupling the backend from the frontend. In other words, I’ll use it as a headless CMS.

Why bother doing so, you may ask?

Mainly because it’s a great way to mix the backend capacities of the CMS with some of the amazing frontend JS frameworks out there (React in our case).

For more info, we’ve already covered all the benefits of working with headless CMS in another blog post.

I wanted to try Grav this time because of its nice and extensible admin panel which makes it a great choice to manage a simple website.

It’s also a very easy-to-install, easy-to-learn flat-file CMS that is a great alternative to heavier systems like WordPress or Drupal.

React static site with Gatsby... and GraphQL

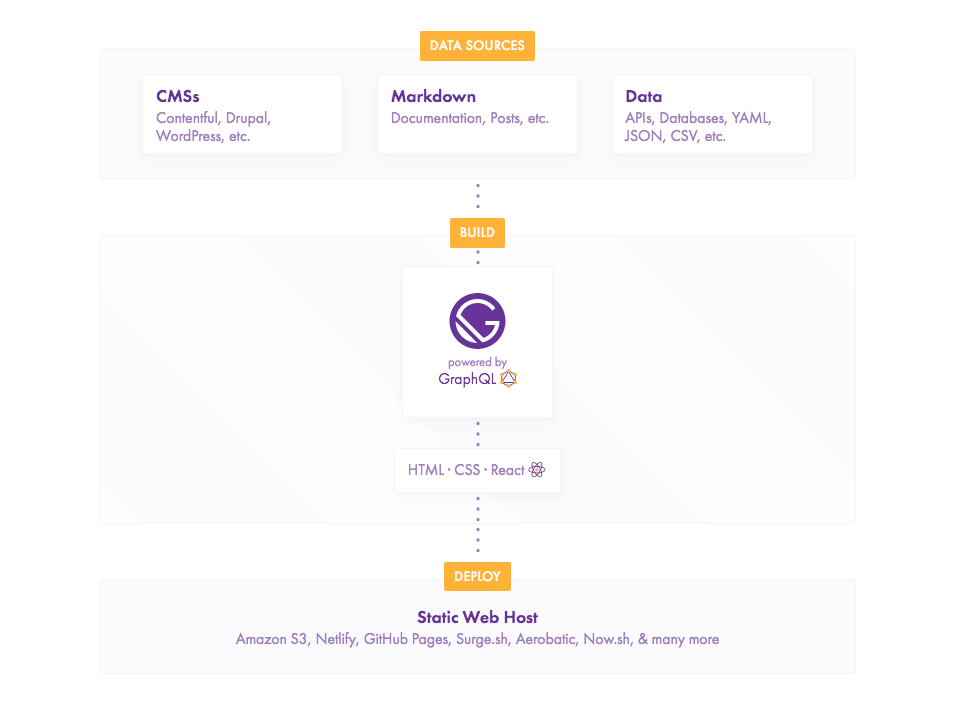

Once I’ve got my decoupled CMS running, I’ll strap the static site generator Gatsby on top of it.

Gatsby is the most popular static site generator powered by React. I’ve already played with it a little bit, but this time I’ll try to push it even further.

Mostly because I think it’s awesome and deserving of the exploration.

Here I want to use one of Gatsby’s great features which allows us to bring our own data from databases, SaaS services, APIs and, as in our current case, headless CMSs.

This data is pushed directly into our pages using GraphQL.

In our first blog post on this new technology we stated that GraphQL is probably more than just a trendy tool and that it’s here to stay.

It really seems to be the case as it’s probably going to get even bigger in 2018.

It’s nice to see it getting implemented with great tools such as Gatsby and I’m really pumped to give it another try today.

Okay, this is gonna be a long one, so without further ado, let’s get to work!

Grav as headless CMS tutorial

Grav installation

Grav is very easy to install. I used Composer to do so. Simply open a terminal and type this command:

composer create-project getgrav/grav snipcart-grav-headless

Then, we want to start a web server. I chose to go with the built-in PHP development web server. Note that I am on Windows, hence the backslashes in the paths.

cd snipcart-grav-headless
php -S localhost:8000 .\system\router.php

This will start a web server on your machine with Grav running. You can open http://localhost:8000 in a browser and you should see it in action.

Grav CMS as headless setup

We now have a working Grav instance. The first thing we’ll do is to add their awesome admin panel.

One thing I really like about Grav is that everything is a plugin, even their admin panel. ;)

We’ll install it via their own package manager.

php .\bin\gpm install admin

Once the installation is complete, open your browser to http://localhost:8000/admin. You’ll have a small form to fill out to create your initial user, then you’ll be logged in.

We’ll also add another plugin, which will be responsible for serving the content we add in Grav as JSON. We’ll then be able to use this data in our Gatsby application later in the tutorial.

To be honest, I don’t think Grav CMS is widely used this way yet. I had a hard time finding a plugin that does the job and has a bit of documentation.

I started by looking at the feed plugin from the Grav team, but it wasn’t exporting all the data I needed. That plugin is really intended to generate a feed file for RSS readers and things like that.

The one I took is page-as-data. Although it does not seem to be maintained anymore, it does the trick. It generates a JSON file containing everything I need to use Grav as a headless CMS.

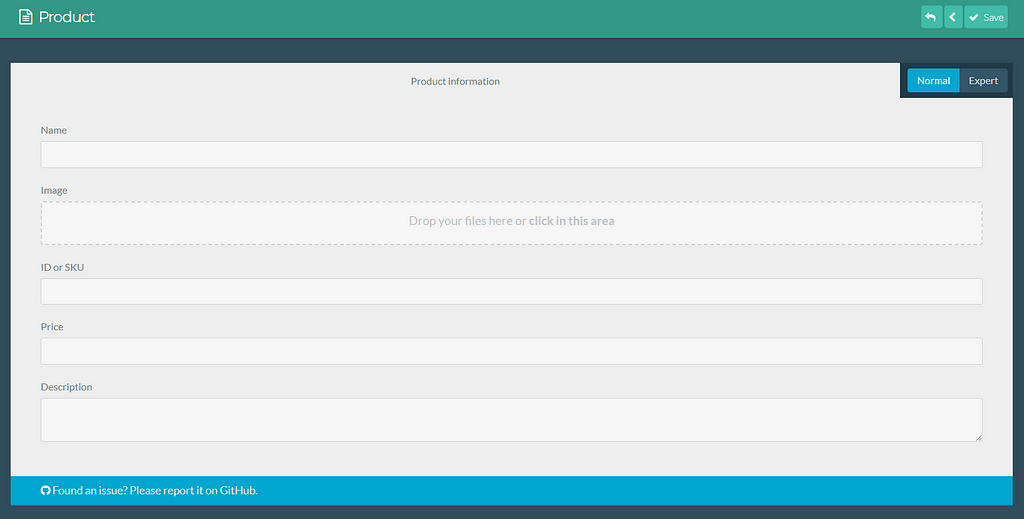

Defining products in Grav

The last step will be to define our product blueprint. A blueprint in Grav allows you to define the structure of the content. In our case, we’ll create one that defines what a product is, with the options Snipcart requires.

For this step, I prefer to open the folder in VS Code (or any other editor) and edit it manually. You’ll need to go to your themes folder. In my case, I kept the default one as I won’t really use Grav to display anything.

So I had to create the file in /user/themes/antimatter/blueprints.

This new blueprint will give us a new “content type” in Grav CMS, with some default fields that will keep product information within Grav.

Adding content in Grav

We now have everything needed to make Grav work as we expect. We’ll add some content.

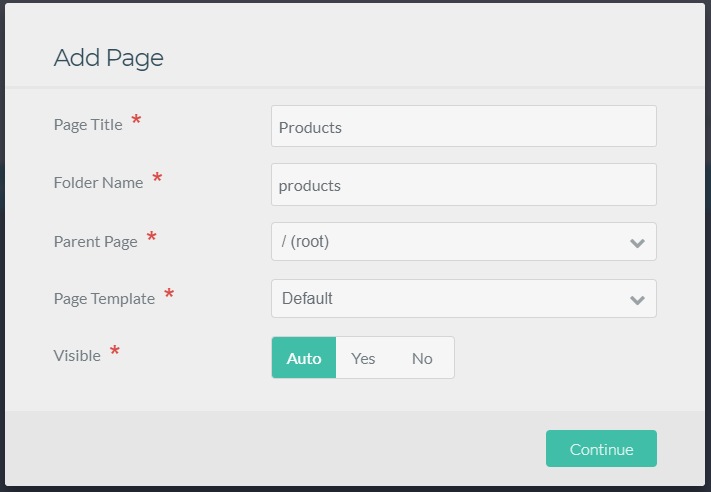

We’ll start by creating a new page which is going to be the parent of each product we’ll add.

Log into the Grav admin panel. The URL should be http://localhost:8000/admin.

Go to the Pages section, click on the Add button in the top right corner, and create a new page:

We can keep the default Page template. As we won’t use Grav to render the content it doesn’t really matter. However, we’ll need to instruct the page about its content.

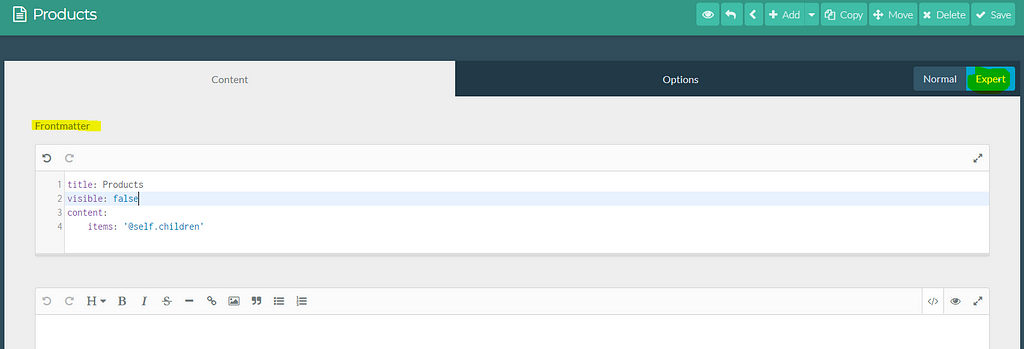

Open the page in Expert mode to get access to the frontmatter and paste this:

Once the page is created and configured, we’ll define the products.

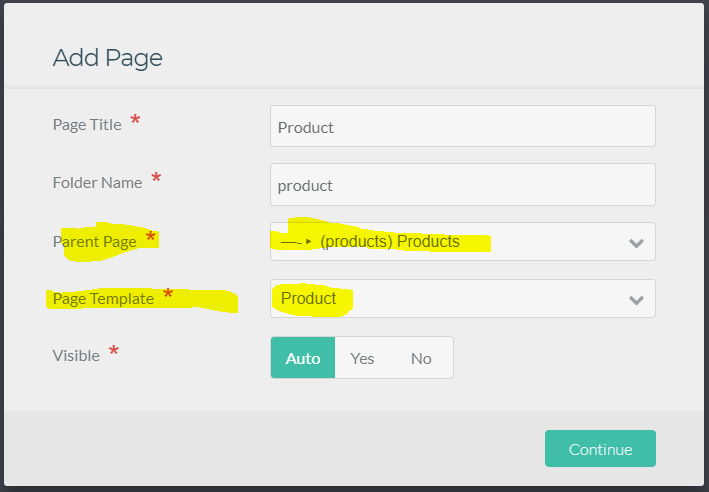

Click on the Add button again:

Make sure to select the correct parent page and template.

Once the page is generated, you’ll get a form, which uses the fields we defined in our blueprint earlier in the post.

You can set the product attributes there and hit the Save button. Make sure you are in Normal mode, not Expert.

Now that we have some content, we can open this URL in the browser: http://localhost:8000/products?return-as=json.

The page-as-data plugin will catch this request and return a JSON object containing our data. This is exactly what we're going to use as the data source in our Gatsby project.
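Before wiring this into Gatsby, it can help to flatten the payload into plain product objects. The sketch below is only an illustration: it assumes the JSON exposes the child pages under a children array, each with a route and a header holding the blueprint fields. That shape, the field names (sku, name, price) and the sample data are assumptions, so compare them with what your own Grav instance returns.

```javascript
// Hypothetical payload shape (verify against your own endpoint):
// { children: [ { route: '/products/hat', header: { sku, name, price } } ] }
function flattenProducts(payload) {
  const children = (payload && payload.children) || [];
  // Merge each child's frontmatter with its route so every product is one flat object.
  return children.map(child =>
    Object.assign({}, child.header, { path: child.route })
  );
}

// Sample data made up for illustration only.
const sample = {
  title: 'Products',
  children: [
    { route: '/products/hat', header: { sku: 'hat', name: 'Hat', price: 20 } },
    { route: '/products/scarf', header: { sku: 'scarf', name: 'Scarf', price: 15 } },
  ],
};

console.log(flattenProducts(sample));
```

Keeping this step as a small pure function makes it easy to adjust once you have seen the real response.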

Gatsby setup using GraphQL schema

Installing Gatsby

Our first move is to install Gatsby. Their documentation is well done and you should refer to it if you want more information.

Make sure you have the classic Node.js stack installed and have access to npm, then open a command line.

We’ll install the gatsby-cli package, which exposes a couple of commands related to Gatsby.

npm install --global gatsby-cli

Then, we’ll scaffold our new project:

gatsby new gatsby-site

This will clone the necessary files and install the default starter.

Creating a source plugin to query our API

In order to get access to our data in Gatsby, we’ll need to craft a source plugin. The plugin allows us to “source” data into nodes, a concept built into Gatsby. From these nodes, Gatsby will generate a GraphQL schema that we’ll be able to query in our components.

I have to say, this is a very nice approach. I love the fact that I can use GraphQL in Gatsby in such a fun and simple way.

Start by creating a plugins folder at the root of your workspace. You could also work with a standalone npm package if you’d prefer, but I decided to use the simplest way for this example.

My tree looks like this (note that I ran npm init in the folder to create the package.json file):

You can consider gatsby-node.js as the index.js of the package. This is where the plugin code will start.

Our plugin will basically make an HTTP call to the /products endpoint we’ve built with Grav. Then, it will use the response payload to construct the nodes that Gatsby will use to generate our GraphQL schema.

We’re going to use two packages for this. The first one is axios, a very well-known HTTP client in the Node community.

The second is gatsby-node-helpers, which will be very useful for generating nodes. A node in Gatsby contains, amongst other things, some internal data that must be hashed. Using the helpers will save us a lot of code.

npm install --save axios gatsby-node-helpers

Our Gatsby plugin must export a function named sourceNodes. This function will call our API and create nodes.

Add a file named gatsby-node.js in your project if you haven’t already, and add this code to it:
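A minimal sketch of what such a sourceNodes function might look like follows. To stay dependency-free it builds the nodes by hand with Node’s crypto module instead of gatsby-node-helpers, and it takes the HTTP call as an injected function instead of calling axios directly. The type name GravProduct, the id prefix and the sku field are made up for illustration.

```javascript
const crypto = require('crypto');

// Build a Gatsby v1-style node from a plain product object.
// 'GravProduct' and the 'grav-product-' prefix are hypothetical names.
function toProductNode(product) {
  const content = JSON.stringify(product);
  return Object.assign({}, product, {
    id: `grav-product-${product.sku}`,
    parent: null,
    children: [],
    internal: {
      type: 'GravProduct',
      content,
      // Gatsby compares this digest to detect whether a node has changed.
      contentDigest: crypto.createHash('md5').update(content).digest('hex'),
    },
  });
}

// fetchProducts is injected so the HTTP client can be swapped or stubbed.
// It is expected to resolve to an array of plain product objects.
function makeSourceNodes(fetchProducts) {
  return async ({ boundActionCreators }) => {
    const { createNode } = boundActionCreators;
    const products = await fetchProducts();
    products.forEach(product => createNode(toProductNode(product)));
  };
}

// In the plugin's gatsby-node.js you would then export something like:
//   exports.sourceNodes = makeSourceNodes(loadProductsFromGrav);
// where loadProductsFromGrav (hypothetical) requests /products?return-as=json.
```

Injecting the fetch function is a design choice for this sketch only; it lets you try the node creation logic with fake data before Grav is involved.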

I think the code is quite straightforward and simple so I won’t explain it step by step.

Once it’s done, we’ll have to let Gatsby know about our plugin.

Open the gatsby-config.js file. This file should be in your workspace root. In the plugins array, add 'grav-headless-plugin'.

My config file looks like this:

You can then launch your Gatsby development server:

gatsby develop --port 8001

Gatsby comes with a pre-configured GraphiQL.

It’s a very clean GraphQL client that enables you to execute queries directly in your browser. Navigate to http://localhost:8001/___graphql in your browser.

You can then run this query:

This will return a JSON object:

This data comes from Grav and is now available on your Gatsby site!

Awesome, isn’t it?

Generating the site

The first thing we’ll do is to add Snipcart dependencies.

To do so you’ll need a Snipcart account (forever free in test mode).

Open the layouts/index.js file and add these lines in the <Helmet> component:

This will add the required files to your site, and Snipcart will work when we need it. :)

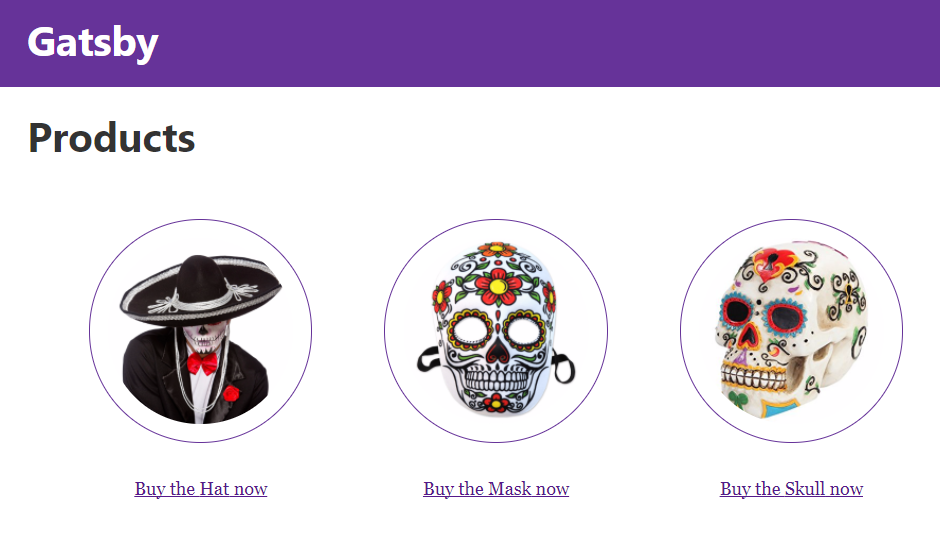

As we want to create a static site, we’ll need to make a page per product. Luckily, Gatsby has it covered and exposes some methods we can use to do so.

Basically, we’ll query all of our products via GraphQL, then we’ll generate a page dynamically for each product using a generic component.

To do so, open the gatsby-node.js file at the root of your workspace (not the one from the plugin we made). This is where we’ll generate the pages.

We want to run this GraphQL query and create a page for each result:

In the gatsby-node.js file, we can export a function that will create these dynamic pages. The function is named createPages.

exports.createPages = async ({graphql, boundActionCreators}) => {
}

Copy the two lines above. Notice that we inject graphql, which will be used to query our datastore, and boundActionCreators, which will be used to build the pages.

We’ll first get our products:

With these results, we’ll create the pages automatically. For this, we’ll need to require two dependencies: path, which comes with Node, and slash, which deals with Windows file paths correctly.

You’ll have to install this one via npm.

npm install slash --save-dev

Include these two dependencies in the top of your file:

const slash = require('slash')
const path = require('path')

Now we’ll use the createPage method available in boundActionCreators to generate the pages:

As you can see, we get all the products, then for each of them we create a page. We pass the product ID to the context, which will be available in the Product component data.
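Put together, the steps above could be sketched as follows. This is an illustration under assumptions: the query field allGravProduct and the node fields id and path are hypothetical and depend on how your source plugin names its nodes. To keep the sketch dependency-free, a one-line replacement stands in for the slash package. Note that boundActionCreators is the Gatsby v1 name; later Gatsby versions call it actions.

```javascript
const path = require('path');

// Stand-in for the `slash` package: turns Windows backslashes into forward slashes.
const toPosix = p => p.replace(/\\/g, '/');

// `allGravProduct`, `id` and `path` are assumed names; match them to your schema.
const PRODUCTS_QUERY = `
  {
    allGravProduct {
      edges {
        node {
          id
          path
        }
      }
    }
  }
`;

const createPages = async ({ graphql, boundActionCreators }) => {
  const { createPage } = boundActionCreators;
  const result = await graphql(PRODUCTS_QUERY);
  if (result.errors) {
    throw new Error('Product query failed: ' + JSON.stringify(result.errors));
  }
  // Every product page is rendered by the same generic component.
  const component = toPosix(path.resolve('src/components/product.js'));
  result.data.allGravProduct.edges.forEach(({ node }) => {
    createPage({
      path: node.path,
      component,
      context: { id: node.id }, // exposed to the component's page query as $id
    });
  });
};

exports.createPages = createPages;
```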

My whole gatsby-node.js file looks like this:

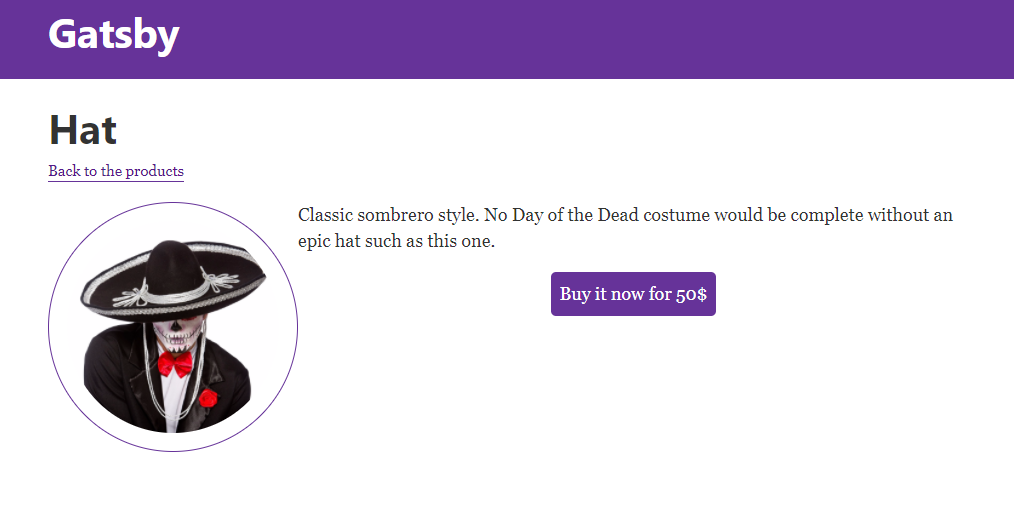

Speaking of the Product component, this is what we’ll do next. This component will be used to render each product page.

Create a file named product.js in your src/components folder.

This file will contain the Snipcart buy button and general information about the product. I won’t dig too much into React and styling things, but here’s my component code:

Note that we use GraphQL there too to fetch the product by its ID. The id value comes from the value we set in the context while we were in the gatsby-node.js file.
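As for the buy button itself, Snipcart reads the product definition from data-item-* attributes on an element with the snipcart-add-item class. A small helper along these lines could map a product to those attributes; the product field names (sku, name, price, path, description) are assumptions about how the data was sourced, so adapt them to your own nodes.

```javascript
// Map a product object to the attributes Snipcart reads off a buy button.
// The product field names used here are hypothetical.
function buyButtonProps(product) {
  return {
    className: 'snipcart-add-item',
    'data-item-id': product.sku,
    'data-item-name': product.name,
    'data-item-price': product.price,
    'data-item-url': product.path, // the page where Snipcart can find this product
    'data-item-description': product.description,
  };
}

// In a React component, the result could be spread onto a button:
//   <button {...buyButtonProps(product)}>Buy now</button>
```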

I used CSS modules to manage my components’ CSS. I suggest you read this excellent tutorial on the matter.

We also imported the Link component. You’ll want to use it to add links between pages generated by Gatsby.

Once your site is loaded, React router will take care of the routing and it will create a very fluid experience. No full reloads, only speed!

If you hit this page (make sure that you have your Gatsby dev server running with gatsby develop): http://localhost:8001/products/hat-hat you should see an awesome product page.

Notice the Back to products link? It points to our products page, which does not exist yet.

Let’s craft a page for it. In the pages folder, create a new file named products.js:

As we did earlier, we again use GraphQL to query our products. Products will then be available in the component’s data object and we’ll be able to render each of them.
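One way to keep the listing component thin is to turn the query result into simple link descriptors first. The helper below is a sketch only: allGravProduct and the node fields id, path and name are assumed names that depend on your source plugin.

```javascript
// Turn the GraphQL result into sorted link descriptors for the listing page.
// `allGravProduct`, `id`, `path` and `name` are assumed names.
function toProductLinks(data) {
  return data.allGravProduct.edges
    .map(({ node }) => ({ key: node.id, to: node.path, label: node.name }))
    .sort((a, b) => a.label.localeCompare(b.label));
}

// In the page component, each entry could then become a Gatsby Link:
//   links.map(l => <li key={l.key}><Link to={l.to}>{l.label}</Link></li>)
```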

If you go to http://localhost:8001/products you should now have an awesome product listing page. :)

Live demo & GitHub repo

See the open source repo on GitHub. See live demo here!

Closing thoughts

I had a blast doing this tutorial!

I enjoyed the simplicity of Grav; it took me only a couple of minutes to get it running. The hardest part was finding the plugins I needed to use it as a headless CMS and, to be honest, the plugin I used in the demo is kind of limited.

I think Grav might be a good solution for a very basic scenario, but is not as advanced as other options we looked at in previous posts such as Directus or GraphCMS.

That being said, I’m well aware that it is not the way most people use Grav. ;)

For the frontend part with Gatsby, it’s been a real delight to use once again. This really is an awesome piece of software. The documentation is on point and it’s very well executed.

The GraphQL implementation makes the whole experience even more special.

I haven’t worked with React a lot, only through a few tutorials a while ago. Still, Gatsby was easy to learn and made me want to dig deeper into React.

If you’ve enjoyed this post, please take a second to share it on Twitter. Got comments, questions? Hit the section below!

I originally published this on the Snipcart Blog and shared it in our newsletter.

Build a Gatsby & GraphQL App on Top of a Headless CMS was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
