
I think all of you have used Google Image Search and asked yourself, “How does it work?” Today I will answer that question, and we will build a simple script for running an image search right inside your console.

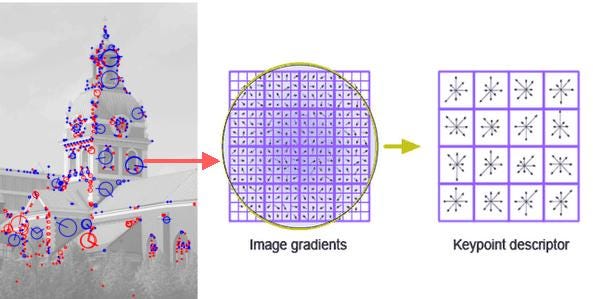

Image features

For this task, we first need to understand what an image feature is and how we can use it. An image feature is a simple image pattern that helps us describe what we see in the image. For example, a cat’s eye would be a feature in an image of a cat. The main role of features in computer vision (and beyond) is to transform visual information into a vector space. This lets us perform mathematical operations on them, for example finding similar vectors (which leads us to similar images, or to similar objects within an image).

OK, but how do we get these features from an image? There are two ways. The first is image descriptors (white-box algorithms), and the second is neural networks (black-box algorithms). Today we will work with the first one.

There are many algorithms for feature extraction. The most popular are SURF, ORB, SIFT and BRIEF, and most of them are based on the image gradient. Today we will use the KAZE descriptor because it ships with the base OpenCV library. Some of the others (SURF and BRIEF, for example) live in the separate contrib modules, so choosing KAZE keeps installation simple.

So let’s write our feature extractor.

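Most feature extraction algorithms in OpenCV share the same interface. So if you want to use SIFT, for example, just replace KAZE_create with SIFT_create (available in the main cv2 module since OpenCV 4.4; older versions ship SIFT only in the contrib package). Keep in mind that the descriptor length depends on the algorithm. SIFT produces 128 values per keypoint instead of KAZE’s 64, so the fixed size of the feature vector has to change as well.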

So extract_features first detects keypoints on the image (the center points of our local patterns). Their number varies from image to image, so we add a clause that keeps our feature vector the same size every time. This is needed for the calculation, because you can’t compare vectors of different dimensions. Then we build descriptors from our keypoints: each descriptor has 64 values and we keep 32 of them, so our feature vector has 32 × 64 = 2048 dimensions.

batch_extractor just runs our feature extractor over all our images in a batch and saves the feature vectors to a pickled file for later use.
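A standard-library sketch of the batch step follows. To keep it self-contained, the extractor is passed in as the `extract` argument; in the article’s pipeline this would be `extract_features`. That parameter and the default file name `features.pck` are assumptions made for this sketch. The function maps each lowercased file name to its feature vector and pickles the resulting dictionary.

```python
import os
import pickle


def batch_extractor(images_path, extract, pickled_db_path="features.pck"):
    """Extract a feature vector for every file in images_path and pickle them.

    `extract` is any function mapping an image path to a feature vector
    (hypothetical parameter; the default output file name is only an example).
    """
    result = {}
    for file_name in sorted(os.listdir(images_path)):
        path = os.path.join(images_path, file_name)
        if not os.path.isfile(path):
            continue  # skip sub-directories and other non-files
        # Key by lowercased file name so later lookups are case-insensitive.
        result[file_name.lower()] = extract(path)
    # pickle needs a binary-mode file on Python 3.
    with open(pickled_db_path, "wb") as fp:
        pickle.dump(result, fp)
    return result
```

Loading the database later is just `pickle.load` on the same file opened in `"rb"` mode.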

Now it’s time to build our Matcher class, which will match our search image against the images in our database.

Here we load the feature vectors from the previous step and stack them into one big matrix. Then we compute the cosine distance between the feature vector of our search image and every vector in the database, and output the top N results. Of course, this is just a demo. For production use, it’s better to use an algorithm built for fast (approximate) nearest-neighbor search over millions of images. I would recommend Annoy Index, which is simple to use and pretty fast (a search over 1M images takes about 2 ms).
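A minimal NumPy sketch of such a matcher follows. It assumes the pickled file holds a `{name: vector}` dictionary like the one described for batch_extractor. To stay self-contained, `match` takes an already-extracted query vector rather than an image path; that design choice is an assumption of this sketch. The cosine distance, 1 - (a · b) / (|a| |b|), is computed for all database rows in one matrix-vector product.

```python
import pickle

import numpy as np


class Matcher:
    """Brute-force cosine-distance search over a pickled {name: vector} dict."""

    def __init__(self, pickled_db_path="features.pck"):
        with open(pickled_db_path, "rb") as fp:
            data = pickle.load(fp)
        names = list(data)
        # Stack all database vectors into one (n_images, dim) matrix;
        # row i belongs to the image self.names[i].
        self.names = np.array(names)
        self.matrix = np.array([data[n] for n in names], dtype=np.float64)

    def cos_distances(self, vector):
        """Cosine distance between `vector` and every row of the matrix."""
        v = np.asarray(vector, dtype=np.float64)
        norms = np.linalg.norm(self.matrix, axis=1) * np.linalg.norm(v)
        # Guard against division by zero for all-zero vectors.
        sims = (self.matrix @ v) / np.maximum(norms, 1e-12)
        return 1.0 - sims

    def match(self, vector, topn=5):
        """Return the names and distances of the topn closest images."""
        dists = self.cos_distances(vector)
        nearest = np.argsort(dists)[:topn]
        return self.names[nearest].tolist(), dists[nearest].tolist()
```

This is exact, brute-force search: its cost grows linearly with the size of the database. That is why an approximate index such as Annoy is the better fit once you reach millions of images.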

Now we just put it all together and run it.

You can download this code from my GitHub, or run it right away in Google Colab (a free service for online computation, even with GPU support): https://colab.research.google.com/drive/1BwdSConGugBlGzPLLkXHTz2ahkdzEhQ9

Conclusion

When you run this code, you will see that the “similar” images are not always similar in the way we understand it. That’s because these algorithms are context-unaware: they are better at finding the same image, even a modified one, than at finding similar images. If we want to find contextually similar images, we should use convolutional neural networks. The next article will be about them, so don’t forget to follow me :)


Feature extraction and similar image search with OpenCV for newbies was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
