An exploration of human-machine trust (image by Daryl Campbell, CCO, Sensum)

In the ever-accelerating march of big data and AI, we are sharing increasingly detailed and personal data with our technology. Many of us already freely publish our thoughts, our current location, our app-usage stats… a raft of personal information, handed to companies in the hope that they will give us something useful or entertaining in return.

Increasingly we are sharing our physiological data too, whether we’re aware of it directly or not, such as heart rate through fitness trackers, or facial expression through a tsunami of ‘puppy-dog’ filtered selfies. The promise in return is that our apps, software, vehicles, robots and so on will know how we feel, and they will use that empathy to provide us with better services. But as technology starts to get really personal, and autonomy gives it a ‘life’ of its own, we have to take a fresh look at what trust and ethics mean.

OK, I admit, this topic is way too big for a brief overview. Interviewing experts for this story melted my head. Their ideas were simultaneously amazing and terrifying. My aim here is simply to highlight a few things you may wish to consider in earning the trust of your customers while also trying not to fuck up society and end the human race.

Also, I’m a hopeless, reckless optimist. If I weren’t, I would have left the startup world years ago. So I will leave the doomsaying to other people. There is plenty of it about and plenty more to come, because bad news sells. Scary is sharey. And robots can be scary.

Whenever an organisation convinces its users to share intimate information, such as their physiological state, their location, or imagery from their surrounding environment, there is an inherent contract of trust between the two parties. That contract is a fragile and valuable thing that is easy to lose or abuse, and very difficult to rebuild once it’s broken. In most cases, we probably don’t even think about it when we sign up for new services because we’re more concerned about what they can do for us, than what they might do to us. But our relationship with technology deserves serious thought as AI and connected devices become increasingly ubiquitous.

It only takes one dickhead to ruin the party.

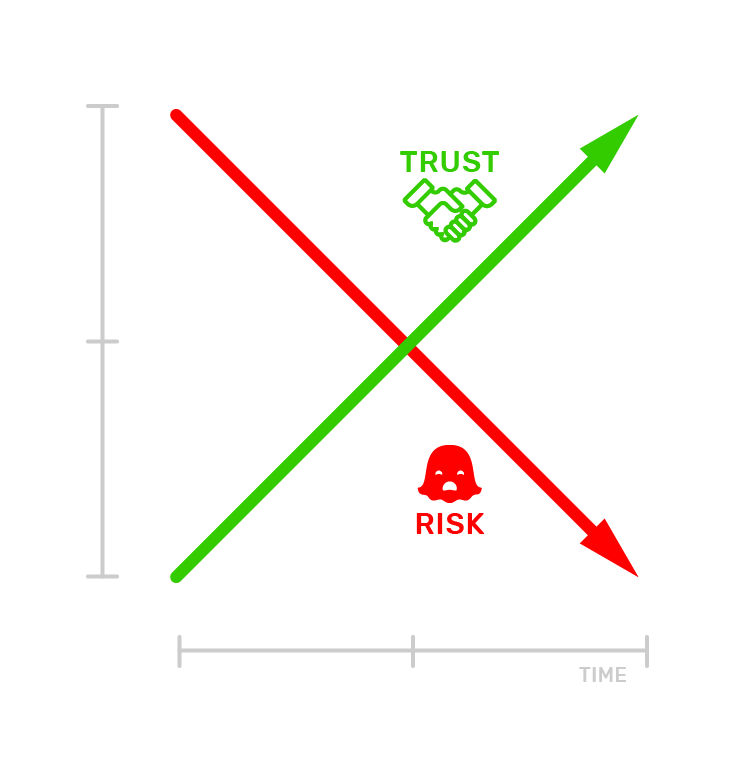

The Trust-Risk Relationship

One of the first things to consider when calculating how much trust you will need to establish with your users is risk. You could say there is an inverse relationship between the size of the risk and the level of trust you start out with.

Take the example of how much you would trust an autonomous car. In this case it is life-and-death. If the machine crashes, you won’t just be able to press ctrl-alt-delete and get on with your day. So we can say that the initial trust gap is huge.
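To make that inverse relationship a little more tangible, here is a toy Python sketch. Everything in it is an assumption for illustration: the function names, the 0-to-1 risk scale and the `steepness` constant are invented, and nothing here is a measured model of how people actually trust.

```python
def initial_trust(risk: float, steepness: float = 9.0) -> float:
    """Toy model: starting trust falls as perceived risk rises.

    `risk` is a hypothetical 0-to-1 score (0 = trivial, 1 = life-and-death).
    `steepness` is an arbitrary illustrative constant, not an empirical value.
    """
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    return 1.0 / (1.0 + steepness * risk)


def trust_gap(risk: float) -> float:
    """How much trust still has to be earned before the user is fully on board."""
    return 1.0 - initial_trust(risk)


# Hypothetical risk scores for two very different products.
playlist_app_gap = trust_gap(0.05)
autonomous_car_gap = trust_gap(1.0)
```

With these made-up numbers the autonomous car starts with a far bigger gap to close than the playlist app, which is the whole point: the higher the stakes, the more trust-building work you have ahead of you.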

That said, what if it was an aeroplane rather than a car? Currently, people tend to trust driving more than flying, even though the statistics say flying is safer. Cars have sat for a long time in a sweet spot of high desire and trust. We feel more familiar with cars. This might help autonomous vehicle developers gain customers, but we can’t guarantee that the public mindset won’t change as we go autonomous. If one autonomous vehicle in a million causes a fatal accident it will be global news, while more than a million people a year are already killed on the world’s roads with little mention in the press.

So…

- Educate your users. Be transparent about what your technology is taking from them and what it does with that information. Be open about the inherent risks to them.

- Prioritise user-experience design from Day One, to understand and mitigate where the trust issues appear in the journey of interaction with your product or service.

Origins of the Trust Bias

In unfamiliar or high-risk scenarios, we are probably biased towards distrust in the first place. But in familiar social relationships we lean towards trust. We give the other actor the benefit of the doubt on our first interaction. This might make evolutionary sense: while we need to be cautious to avoid a fatal mistake, we also benefit from efficiencies in developing relationships by going with the flow. But if you push your luck and betray that trust, you will have a lot of work to do to win it back.

So…

- Design your service to appear amenable from the initial user interaction. Hold their hand through the first steps in the process.

- The more novel your service, the more work you need to do to get your users comfortable with it.

- Err towards open. Don’t push your luck with your users’ trust; you could balls it up for the rest of us.

Trust as an Emergent Property of Empathy

We humans are consummate mind-readers. We are always trying to imagine the other person’s worldview, and considering how to respond to it. This empathic processing is what companies like ours (Sensum) are modelling in software and devices, to improve the way people interact with them.

In trying to guess another person’s perspective, we make snap judgements about the extent to which we can trust them. Our trust assumptions in any given situation or relationship are based on factors such as:

- Historical performance: how has the other actor behaved in the past?

- Tribalism: are they part of my group? Are they a familiar type of character?

- Current activity: what are they doing right now?

If we know the other actor well, or know that they know us well, and understand the context of our situation, we are more likely to assume greater trust in the interaction. Seen this way, it can be argued that you need empathy before you can have trust.
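As a rough sketch of how a system might fold those three factors into a single number, here is a toy weighted score in Python. The class name, field names, weights and example values are all hypothetical, chosen only to illustrate the idea; real trust judgements are far fuzzier than a weighted average.

```python
from dataclasses import dataclass


@dataclass
class TrustSignals:
    """Hypothetical 0-to-1 scores for the three factors listed above."""
    historical_performance: float  # how has the other actor behaved in the past?
    familiarity: float             # are they part of my group, or a familiar type?
    current_activity: float        # how benign is what they are doing right now?


# Arbitrary illustrative weights; they sum to 1 so the score stays in 0-to-1.
WEIGHTS = {
    "historical_performance": 0.5,
    "familiarity": 0.2,
    "current_activity": 0.3,
}


def trust_score(signals: TrustSignals) -> float:
    """Weighted average of the three signals."""
    return sum(getattr(signals, name) * weight for name, weight in WEIGHTS.items())


# Two made-up actors doing the same thing right now.
old_friend = TrustSignals(historical_performance=0.9, familiarity=0.9, current_activity=0.8)
stranger = TrustSignals(historical_performance=0.0, familiarity=0.3, current_activity=0.8)
```

Even though both actors are doing exactly the same thing in the moment, the old friend scores much higher because of history and familiarity. That is the sense in which knowing the other actor comes before trusting them.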

The ability to judge another entity and adapt that judgement to the context of the situation on the fly is a kind of emotional intelligence that has many advantages. Machines at this stage have only rudimentary capabilities in this space. They can appear uncaring by being too distant, or annoy us by ‘acting’ too empathic and involved without having the requisite understanding of the trust relationship (think: Microsoft’s Clippy).

This presents a paradoxical design challenge. On the one hand, trust can be nurtured by presenting the user with an interface that behaves consistently so they always know what to expect of it. However, a highly empathic system should be able to adjust its behaviour to suit the user’s situation, mood, character, etc., choosing when to be more or less intrusive. Machines could provide an option to set our preferences for how empathic or human we want them to be from one interaction to the next. But this is inefficient. They should already know how to behave — assessing our state-of-mind and the surrounding context, and acting appropriately. That’s what a good friend would do without a thought.

As suggested by theories such as ‘thin-slicing’, the snap-judgement we make on first encountering another entity can set the tone for the lifetime of our relationship with it. After that, efforts to adjust that image may only have a minor effect. I attempted to do my own mind-reading by including a swear-word near the start of this story, judging Medium readers to be comfortable with that kind of thing. These are the kinds of tricks we employ all the time to win each other over.

No matter how empathic we are able to make human-machine interaction, we have to consider how ‘human’ we want our machines to appear to us. Too closely imitating human behaviour could unveil a whole new Uncanny Valley for human-machine interaction.

So…

- Manage expectations from the start. If you can’t provide an incredible service, don’t brand it in a way that implies you can (think: the Fail Whale from Twitter’s clunky early days, or Google’s perpetual beta strategy).

- Grow familiarity and confidence between the user and your system by designing it to behave consistently.

- Then, gradually introduce more varied, dynamic levels of empathic response.

- Add context information into the mix, not just human feelings or behaviours, when training systems to be empathic: for example, location, weather conditions and time of day. More on that in our recent story on sensor fusion.
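One way to picture the "consistent first, empathic later" idea from the list above is as a dial that opens up over time. The Python sketch below is purely illustrative: the function names, the 20-interaction ramp, the 0.8 ceiling and the "driving" context flag are invented assumptions, not a description of any real product.

```python
def adaptivity(interactions: int, ramp: int = 20, ceiling: float = 0.8) -> float:
    """Share of behaviour allowed to adapt to the user (0 = fully consistent).

    Grows linearly over the first `ramp` interactions, then holds at `ceiling`.
    Both numbers are arbitrary illustrative choices.
    """
    if interactions < 0:
        raise ValueError("interactions cannot be negative")
    return ceiling * min(interactions, ramp) / ramp


def empathic_level(stress: float, context: dict) -> float:
    """Hypothetical rule: be less intrusive when the user is stressed or driving."""
    level = 1.0 - stress
    if context.get("driving"):
        level *= 0.5
    return level


def intrusiveness(default_level: float, stress: float, context: dict, interactions: int) -> float:
    """Blend the fixed default with the empathic suggestion as familiarity grows."""
    a = adaptivity(interactions)
    return (1.0 - a) * default_level + a * empathic_level(stress, context)


# A stressed user who is driving: first meeting versus a long relationship.
first_meeting = intrusiveness(0.5, stress=0.8, context={"driving": True}, interactions=0)
long_term = intrusiveness(0.5, stress=0.8, context={"driving": True}, interactions=50)
```

On the first meeting the system sticks to its predictable default. After many interactions it leans on mood and context, and backs off for the stressed driver.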

How human-like should our tech be for us to trust it? (Image courtesy: Flickr user, smoothgroover22)

Teaching Machines to be More Human

Consider the different relationships that we can apply empathy, trust, morality and other human social heuristics to:

- H2H: human-to-human

- H2M: human-to-machine

- H2X: human-to-(everything)

- M2M: machine-to-machine

- M2I: machine-to-infrastructure (e.g. street signs, buildings, etc.)

- M2X: machine-to-(everything)

Each member of the network in a given situation must consider not only their own psychological metrics, but also those of the rest of the group. And this could apply just as much to human interaction with machines as to the machines’ relationships with each other. Thus the application of traditionally ‘human’ heuristics, such as empathy, trust and morality, will extend to M2M interactions, in which we may have little involvement.

When the interactions are solely machine-to-machine, attributes like trust and empathy may be very different to what we’re used to. Electronic social interactions might be cold, algorithmic, inhuman processes. But then again, we have to ask ourselves how different that is to our own actions, when you view them from an evolutionary perspective. We feel ‘emotional’ responses to stimuli around us. Those emotions evolved to drive us to act in an appropriate manner (run from it, attack it, have sex with it, etc.). We say that we avoided a dark alley because we felt scared, or pursued a relationship because we fell in love. Our bodies tell us, ‘if this happens then do that’.

If x then y.

Our emotions are Nature’s algorithms.
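Taken literally, that idea fits in a few lines of Python. The rule table below is a deliberately crude caricature built from the examples in this story; the names and the fallback reaction are my own inventions, and real emotions are obviously not a two-row lookup.

```python
from typing import Dict, Tuple

# stimulus -> (emotion, action), using the examples from the text above.
EMOTION_RULES: Dict[str, Tuple[str, str]] = {
    "dark alley": ("fear", "avoid it"),
    "potential partner": ("love", "pursue a relationship"),
}

# Hypothetical fallback for anything the rules do not cover.
DEFAULT_REACTION: Tuple[str, str] = ("indifference", "carry on")


def react(stimulus: str) -> Tuple[str, str]:
    """If x then y: look up the emotion and the action it drives."""
    return EMOTION_RULES.get(stimulus, DEFAULT_REACTION)


emotion, action = react("dark alley")
```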

So…

- Explore what rules and interactions you can design from the start to make your systems behave socially.

- Apply your rules not just to human-machine interaction but to all possible relationships in the network.

Getting Along in our Empathic Future

Building heuristics for social behaviour isn’t new. It has already been done in systems from communication networks to blockchains. And those algorithmic heuristics have evolved over time, adapting to new opportunities or increased complexity. Autonomous systems are learning by themselves, from their environment and experience, just like we learned over millions of years, but at blinding speed. Soon we may not even be able to understand the languages and actions of the machines that we originally created.

Machines will get better at understanding and serving us as they spend more time learning about us. Therefore we can expect a transition period as we gradually hand over more control to autonomous systems such as vehicles and virtual assistants.

Before our technological creations leave us behind in a runaway evolutionary blur, we must consider how to teach them empathy and morality. But machines have a fundamentally different baseline for concepts such as morality. The costs and benefits of actions such as killing another machine aren’t the same as they are between animals in nature. Social interactions are fuzzy, not only in their logic but also their application, so they may be very difficult to engineer. Perhaps we could start by applying a ‘do unto machines as you would have them do unto you’ rule.

The age of empathic autonomy is commencing, and my guess is that we would all benefit from the same precept my colleagues and I wrote down as Rule Number 1 at Sensum: don’t be a dick.

Special thanks to Dr Andrew Bolster (Lead Data Scientist at Sensum) and Dr Gary McKeown (School of Psychology, Queen’s University Belfast) not only for providing much of the thinking behind this story but also blowing my tiny little mind in the process.

How to Engineer Trust in the Age of Autonomy was originally published in Hacker Noon on Medium.
