
How I’m Learning Deep Learning — Part II

Learning Python on the fly

Part of the How I’m Learning Deep Learning Series:

- Part I: A new beginning.

- Part II: Learning Python on the fly. (You’re currently reading this)

- Part III: Too much breadth, not enough depth.

- Part IV: AI(ntuition) versus AI(ntelligence).

- Extra: My Self-Created AI Master’s Degree

Rather than watch the AI takeover unfold from the sidelines, I decided to become a part of it.

This series is mainly about sharing what I learn along my journey.

TL;DR

- Submitted my first Deep Learning project and passed!

- Started learning about Convolutional Neural Networks

- Learned about TensorFlow and Sentiment Analysis

- Went back to basics and started studying Python on Treehouse and basic calculus on Khan Academy

- Next month: Project 2 and Recurrent Neural Networks

Part 1 started off with me beginning the Udacity Deep Learning Foundations Nanodegree, as well as beginning to learn Python through Udacity’s Intro to Python Programming.

That article ended at week 3. I’m now at week 6.

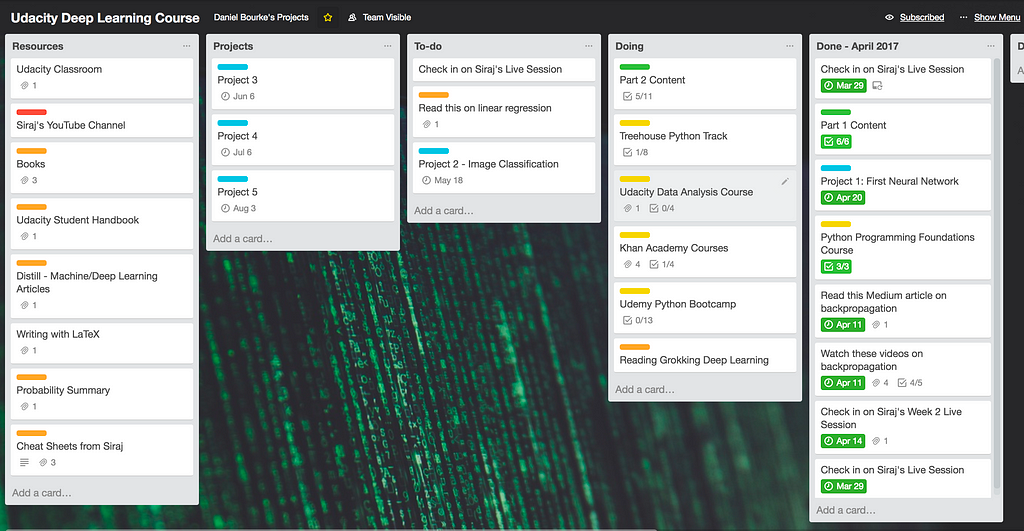

How I’m tracking

How I’m using Trello to stay on top of things.


So far, it has been challenging, but the support outlets have been incredible.

Most of the troubles I’ve run into have been because of my own doing. They are mostly due to my minimal background in programming and statistics.

I’m loving the challenge. Being behind the 8-ball means my learning has been exponential. In six weeks, I’ve gone from knowing almost zero Python and calculus to being able to write partial neural networks.

None of this came easy. I have been spending at least 20–30 hours per week on these skills and I’ve still got a long way to go. This amount of time is due to my lack of prerequisite knowledge before starting the course.

Week 3

With a lot of help from the Udacity Slack Channel, I managed to complete my first project. The project involved training my own Neural Network to predict the number of bikeshare users on a given day.

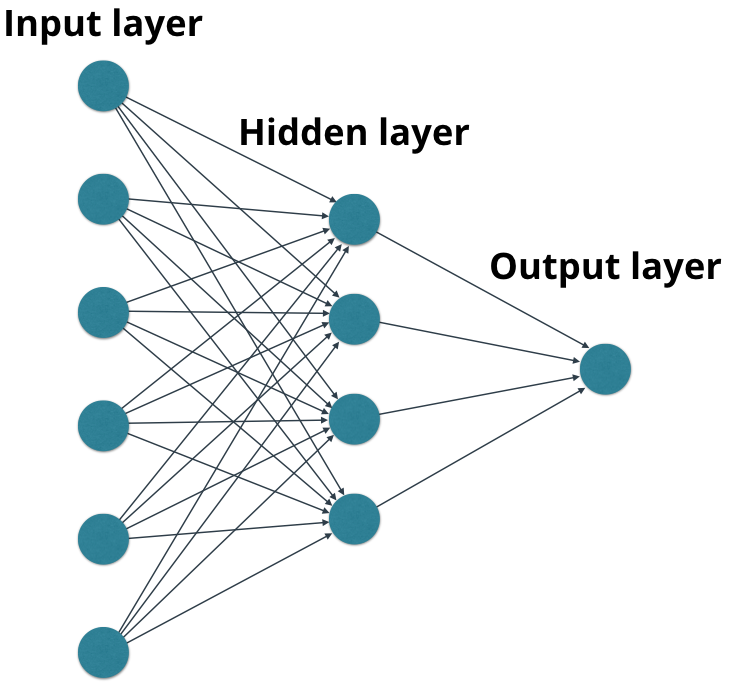

It was really fun to see the model improve when I adjusted parameters such as the number of iterations (the number of times the model tries to map the data), the learning rate (how fast the model learns from the training data) and the number of hidden nodes (the units in the hidden layer that the input data flows through before becoming an output).

Source: Udacity


The above image shows an example of a Neural Network. The hidden layer is made up of hidden nodes. In Deep Learning, there can be many of these hidden layers (sometimes hundreds) between the input and the output, in turn creating a Deep Neural Net (which is where Deep Learning gets its name).
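To make those three parameters concrete, here is a minimal sketch of a network with one hidden layer, written in plain Python. It is not the Udacity project code: the toy data (predicting x squared), the function names and the starting values are all assumptions made up for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(net, x):
    """Forward pass: input -> hidden layer -> single linear output."""
    hidden = [sigmoid(w * x + b) for w, b in zip(net["w_in"], net["b_in"])]
    output = sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"]
    return hidden, output


def mean_squared_error(net, samples):
    return sum((predict(net, x)[1] - t) ** 2 for x, t in samples) / len(samples)


def train(samples, hidden_nodes, learning_rate, iterations, seed=0):
    """Return the trained network plus its error before and after training."""
    rng = random.Random(seed)
    net = {
        "w_in": [rng.uniform(-1, 1) for _ in range(hidden_nodes)],
        "b_in": [0.0] * hidden_nodes,
        "w_out": [rng.uniform(-1, 1) for _ in range(hidden_nodes)],
        "b_out": 0.0,
    }
    error_before = mean_squared_error(net, samples)
    samples = list(samples)
    for _ in range(iterations):
        rng.shuffle(samples)  # visit the data in a new order on every pass
        for x, target in samples:
            hidden, output = predict(net, x)
            error = output - target
            for j in range(hidden_nodes):
                # How much hidden node j contributed to the error.
                grad_hidden = error * net["w_out"][j] * hidden[j] * (1 - hidden[j])
                net["w_out"][j] -= learning_rate * error * hidden[j]
                net["w_in"][j] -= learning_rate * grad_hidden * x
                net["b_in"][j] -= learning_rate * grad_hidden
            net["b_out"] -= learning_rate * error
    return net, error_before, mean_squared_error(net, samples)


# Made-up toy data (not the bikeshare dataset): the target is x squared.
samples = [(x / 10, (x / 10) ** 2) for x in range(-10, 11)]
net, error_before, error_after = train(
    samples, hidden_nodes=4, learning_rate=0.1, iterations=300
)
print(f"error before training: {error_before:.4f}")
print(f"error after training:  {error_after:.4f}")
```

Changing `hidden_nodes`, `learning_rate` or `iterations` in the last call and re-running is a small-scale version of the tuning described above.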

In between waiting for my project to be graded and continuing on with the Deep Learning Foundations Nanodegree, I decided to continue with some other courses that Udacity offers.

I finished the Intro to Python Programming course and started the Intro to Data Analysis course. I haven’t made much progress on the Data Analysis course but this is one of my goals for the coming month.

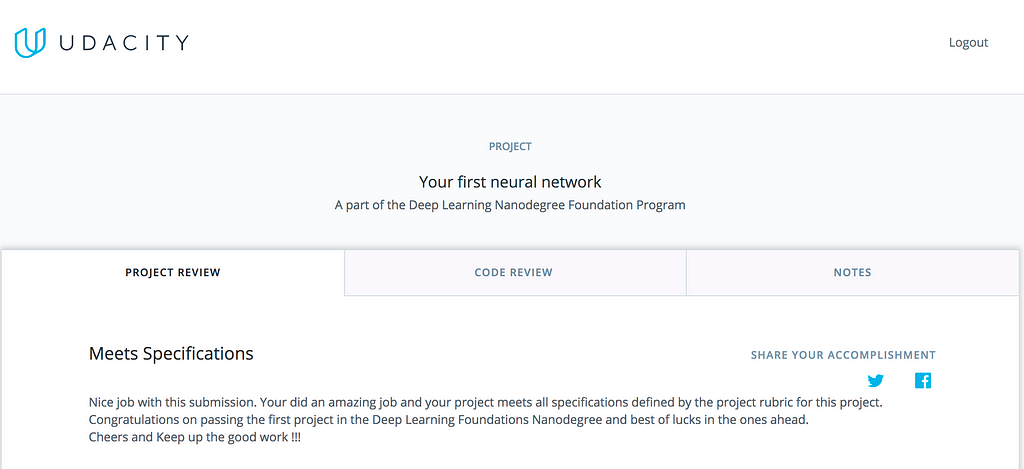

Three days after submitting my project, I received feedback. I passed! I cannot explain to you how satisfying this was.

Passing my first Deep Learning project! :D


Three weeks after asking what the refund process for the course was (see Part 1), I had made it over the first major milestone of the course.

I had made the right choice to stay on.

Shout out to the Slack channel for all their amazing help!

Convolutional Neural Networks

A couple of days after receiving feedback for my first project, I began Part 2 of the Nanodegree, Convolutional Neural Networks.

Convolutional Neural Networks are able to identify objects in images. They’re what power features such as Facebook’s automatic tagging feature for your photos.

For a more in-depth look at Convolutional Neural Networks, I highly recommend you check out Adam Geitgey’s article. In fact, check out his whole series while you’re at it.

Part 2 started with a video from Siraj Raval on how to best prepare data for Neural Networks.

Siraj may be the most fun instructor I’ve ever seen on YouTube. Even if you’re not into DL, ML or AI (you should be), his videos are fun to watch.

Just like humans, machines work best when the signal is deciphered from the noise. Preparing data for a Neural Network involves removing all the fluff around the edges of your data so that the Neural Network can work through it much more efficiently.

Remember, machine learning can only find patterns in the data that humans would eventually be able to find. It’s just much faster at doing it.

A (very) simple example:

You want to find out trends in test scores for a specific grade 9 class in a specific school. Your initial dataset contains the results of every high school in the country. It would be unwise to use this dataset to find the trends for your specific class. So to prepare the data, you remove every single class and year except for the specific grade 9 class you want to test on.
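In code, that kind of filtering could look something like the sketch below. The records, field names and school names are all made up for illustration. A real dataset would be far larger and would probably be loaded from a file.

```python
# Hypothetical records: every field name and value here is made up.
results = [
    {"school": "Northside High", "grade": 9, "class": "9A", "score": 71},
    {"school": "Northside High", "grade": 9, "class": "9B", "score": 64},
    {"school": "Northside High", "grade": 10, "class": "10A", "score": 80},
    {"school": "Riverside High", "grade": 9, "class": "9A", "score": 58},
    {"school": "Northside High", "grade": 9, "class": "9A", "score": 85},
]


def select_class(records, school, grade, class_name):
    """Keep only the rows for one class at one school."""
    return [
        r for r in records
        if r["school"] == school
        and r["grade"] == grade
        and r["class"] == class_name
    ]


target = select_class(results, "Northside High", 9, "9A")
scores = [r["score"] for r in target]
average = sum(scores) / len(scores)
print(f"{len(target)} rows kept, average score {average:.1f}")
# prints "2 rows kept, average score 78.0"
```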

For more on data preparation, I suggest this video by Siraj:

Week 4

I started this week building and learning about Miniflow, my very own version of TensorFlow (I’ll get to TensorFlow later). The goal of this lesson was to learn about differentiable graphs and backpropagation.

Both of these words are jargon and meant nothing to me before the lesson.

I find things easier when I define them in the simplest of terms.

Differentiable graph: A graph of connected functions for which a derivative can be found. Using this derivative makes it easier to calculate how to adjust things later on.

Backpropagation: Moving forward and then backwards through a Neural Network, calculating the error between the output and the desired output each time. The error is then used to adjust the network so that it calculates the desired output more accurately.
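Here is a tiny sketch of both ideas together, using a graph with a single weight and bias. This is not Miniflow itself; the function names and numbers are assumptions chosen to keep the arithmetic easy to follow.

```python
def forward(w, b, x, target):
    """Run the graph left to right, keeping the intermediate values."""
    product = w * x            # node 1: multiply
    prediction = product + b   # node 2: add
    error = prediction - target
    loss = error ** 2          # node 3: squared error
    return prediction, error, loss


def backward(x, error):
    """Walk the graph right to left, applying the chain rule at each node."""
    d_loss_d_prediction = 2 * error        # derivative of error ** 2
    d_loss_d_w = d_loss_d_prediction * x   # prediction depends on w via w * x
    d_loss_d_b = d_loss_d_prediction * 1   # prediction depends on b directly
    return d_loss_d_w, d_loss_d_b


w, b = 0.5, 0.0          # made-up starting values
x, target = 2.0, 3.0     # one made-up training example
learning_rate = 0.05

_, error, loss_before = forward(w, b, x, target)
grad_w, grad_b = backward(x, error)

# Adjust the network a small step against the gradient, then check again.
w -= learning_rate * grad_w
b -= learning_rate * grad_b
_, _, loss_after = forward(w, b, x, target)

print(f"loss before: {loss_before}, loss after: {loss_after}")
```

Because every node in the graph has a known derivative, the backward pass can work out how much `w` and `b` each contributed to the error, and one small adjustment already lowers the loss.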

I was having difficulty with some of the sections in this class. I was getting frustrated. I took a break and decided to swallow my ego. I had to go back to basics.

Back to Basics

If you’re ever having trouble with a concept, go back to basics rather than trying to push through. If you keep allowing the sunk-cost fallacy to win, you may get there in the end but it may be more harmful than good. I’ve been guilty of pushing through towards completing goals for many stressful hours. I didn’t want to let this happen with this course.

The math aspect of the classes was troubling me the most. Don’t take my troubles as a reason to be scared though. It didn’t take me long to be brought up to speed by the lovely people at Khan Academy.

I watched the entire series on partial derivatives, gradient and the chain rule. After this, everything in the Miniflow lessons started to make a lot more sense.

After completing the Khan Academy courses, not only did I gain a better understanding, the learning process was far less frustrating.

In the spirit of returning to basics, I purchased a couple of books on DL. The first was Grokking Deep Learning by Andrew Trask. Andrew is an instructor on the course and a PhD student at Oxford.

I’m about 50% through the first half of the book (the second half hasn’t been released yet) and it’s starting to cement what I’ve already learned. It starts off with a broad overview of DL, before digging a little deeper into integral concepts of Neural Networks.

I’d recommend this for anyone looking to start learning about Deep Learning. Trask states that if you passed high school math and can hack around in Python, you can learn Deep Learning. I barely tick either of those boxes and it’s definitely helping me.

I also recently purchased Make Your Own Neural Network after a recommendation in the Slack channel. I haven’t started this yet but I will be getting to it sometime within the next month.

Python

When the course started it said that beginner to intermediate Python knowledge was a prerequisite. I knew this. The problem was, I had none except for the Python Fundamentals Course on Udacity.

To fix this, I signed up to the Python Track on Treehouse. Apart from not asking for a refund, this was the best decision I’ve made in the course thus far.

All of a sudden, I’ve got another source of knowledge. I had a small foundation thanks to the Fundamentals course but now that knowledge was being compounded.

The community at Treehouse is just like Udacity, incredibly helpful. So helpful in fact, I’ve started to share my own answers to people’s questions about Python on the Treehouse forums.

From student to teacher in 5 weeks??

Learning more about Python on Treehouse allowed me to further understand what was happening in the Deep Learning Foundations Nanodegree.

I’ve found that compounding knowledge is an incredibly powerful thing. In the past, I used to just keep trucking forward consistently learning new things rather than going over and reviewing what I’ve already learned.

If you try something way out of your comfort zone too soon, you’ll reach a point of burn out very quickly.

Going back to basics has not only improved my knowledge but more importantly, given me the confidence to keep learning.

Week 5

Sentiment analysis was next. I watched lessons from Trask and Siraj.

Sentiment analysis involved using a Neural Network to decide whether or not a movie review was positive or negative.

I couldn’t believe that I was writing code to analyse the words in a piece of text and then using that code to decide the meaning of it.
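As a rough idea of how that can work, the sketch below counts the words in each review and feeds the counts into a single output node. It is a much simpler stand-in than the network from the lessons, and the four reviews are made up for illustration.

```python
import math
from collections import Counter

# Made-up reviews for illustration; 1 = positive, 0 = negative.
reviews = [
    ("a great fun film with a great cast", 1),
    ("loved it a wonderful and fun story", 1),
    ("boring plot and terrible acting", 0),
    ("a terrible boring waste of time", 0),
]

vocab = sorted({word for text, _ in reviews for word in text.split()})


def to_counts(text):
    """Turn a review into a vector of word counts (a bag of words)."""
    counts = Counter(text.split())
    return [counts[word] for word in vocab]


def predict(weights, bias, features):
    """One output node: weighted sum of word counts squashed into 0..1."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


weights, bias = [0.0] * len(vocab), 0.0
learning_rate = 0.1
for _ in range(200):
    for text, label in reviews:
        features = to_counts(text)
        error = predict(weights, bias, features) - label
        # Nudge each word's weight against the error it helped cause.
        weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
        bias -= learning_rate * error

positive_score = predict(weights, bias, to_counts("a fun and wonderful cast"))
negative_score = predict(weights, bias, to_counts("terrible boring acting"))
print(f"positive-sounding review: {positive_score:.2f}")
print(f"negative-sounding review: {negative_score:.2f}")
```

A score above 0.5 is read as positive and below 0.5 as negative. Words the model never saw during training are simply ignored by `to_counts`.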

It’s funny thinking about how you’ll never be able to do something until you actually do it.

Siraj has another awesome video about sentiment analysis. I’d watch it just to see the memes.

Next up was TensorFlow. I geeked out hard to TensorFlow. Even the logo looks cool.

TensorFlow is an open source DL library developed by Google Brain. It’s used across practically all of Google’s products. It was originally designed for ML and DL research but has since been used for a variety of other purposes.

What excites me about TensorFlow is the fact that it’s open source. That means anyone with any idea and enough computing power can effectively use the same library as Google to bring their ideas to fruition.

I’m really excited to learn more about TensorFlow in the future.

Week 6

This is where I’m at now!

I’m having a blast. The classes are difficult at times but I wouldn’t have it any other way.

Often, the most difficult tasks are also the most satisfying. Every time I complete a class or a project that I had been working on for hours, I get an immense feeling of gratification.

What’s my plan for the next month?

I want to spend more time in review mode. I’ve learned A LOT.

Because so much of this is new to me, my intuition is telling me I should be going over what I’ve learned.

I’m going to try using flashcards (via Anki) to help to remember Python syntax and various other items.

By the time I write Part 3, I will have completed another project, read a couple of books and finished some more online courses.

You can watch what I’m doing by checking out my Trello board. I’m going to keep that as up-to-date as possible.

Back to learning.

Thank you for reading! If you’d like to see more works like this, hit the clap button and follow me. I appreciate your support.

If you’d like to know more or have any advice for me, feel free to reach out at any time: YouTube | Twitter | Email | GitHub | Patreon

How I’m Learning Deep Learning In 2017 -Part 2 was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
