
DeepDream explained (with code in PyTorch)

Previous posts:

- DL01: Neural Networks Theory
- DL02: Writing a Neural Network from Scratch (Code)
- DL03: Gradient Descent
- DL04: Backpropagation
- DL05: Convolutional Neural Networks

Code can be found here.

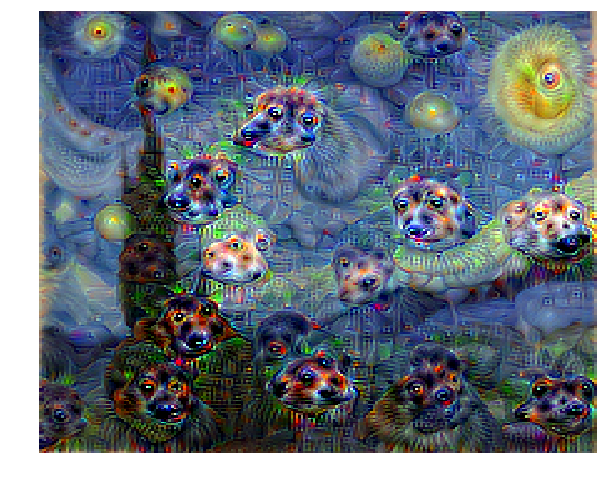

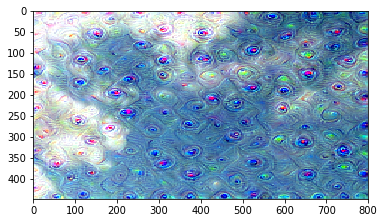

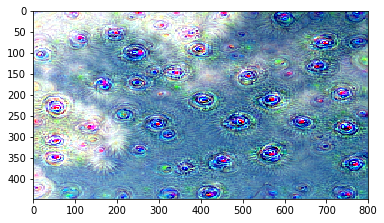

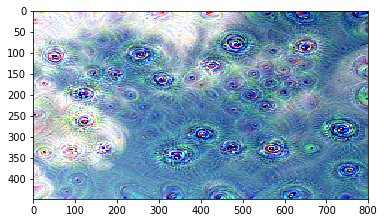

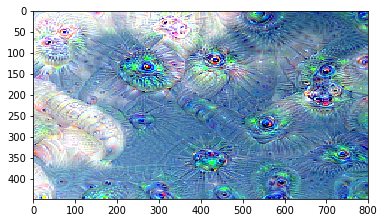

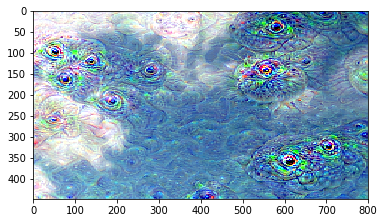

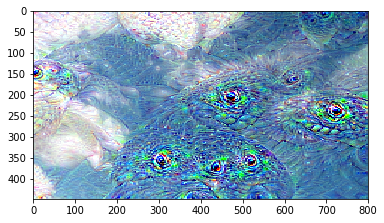

Image generated by my code

What is DeepDream?

DeepDream is a fun algorithm for generating psychedelic-looking images. It also gives a sense of which features particular layers of a CNN have learned, which makes it a way to visualize the layers of a pre-trained CNN.

How does it work?

A base image (which can even be random noise) is fed to a pre-trained CNN, and a forward pass is run up to a particular layer. To get a sense of what that layer has learned, we then modify the image so as to maximize the activations of that layer.
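A minimal sketch of "a forward pass up to a particular layer", assuming the convolutional part of the network is an `nn.Sequential` (torchvision's `vgg16().features` is one, so it can be sliced the same way). The helper name `truncate_at` and the layer index used below are hypothetical, chosen only for illustration.

```python
import torch
import torch.nn as nn


def truncate_at(features: nn.Sequential, layer_index: int) -> nn.Sequential:
    """Keep modules 0..layer_index, so calling the result stops at that layer (hypothetical helper)."""
    truncated = features[: layer_index + 1]  # slicing an nn.Sequential returns an nn.Sequential
    truncated.eval()                         # inference mode for the pre-trained network
    for p in truncated.parameters():
        p.requires_grad_(False)              # weights stay frozen; only the input image will change
    return truncated
```

Freezing the weights is a design choice of this sketch: DeepDream optimizes the input image, not the network, so gradients for the parameters are not needed.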

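During backpropagation, the gradient at that layer is set equal to the layer's own activations, and gradient ascent is then performed on the input image. Since the gradient of half the sum of squared activations with respect to the activations is just the activations themselves, this amounts to maximizing that quantity, so each step makes the image excite the chosen layer more strongly.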
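The update can be sketched as below, assuming `model` has already been cut off at the chosen layer. Passing the activations themselves to `backward` is what "setting the gradient equal to the activations" means in PyTorch. The name `gradient_ascent_step`, the gradient normalization, and the `lr` value are assumptions of this sketch, not necessarily the author's settings.

```python
import torch
import torch.nn as nn


def gradient_ascent_step(model: nn.Module, image: torch.Tensor, lr: float = 0.01) -> torch.Tensor:
    """One DeepDream-style update that nudges `image` to increase `model`'s activations (sketch)."""
    image = image.clone().detach().requires_grad_(True)
    activations = model(image)
    # Using the activations as the upstream gradient equals backpropagating
    # 0.5 * sum(activations ** 2), whose derivative w.r.t. the activations is the activations.
    activations.backward(activations.detach())
    grad = image.grad
    # Assumed normalization: scale by the mean absolute gradient so `lr` sets the step size.
    grad = grad / (grad.abs().mean() + 1e-8)
    return (image + lr * grad).detach()
```

Calling this repeatedly on the same image is the gradient-ascent loop; the returned tensor is detached so each step starts a fresh graph.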

Doing only this, however, does not produce good images, so various techniques are used to improve the result. For example, Gaussian blurring can be applied to make the image smoother.
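One way to do the blurring, sketched in plain PyTorch with a separable Gaussian kernel. The post does not say exactly where the blur is applied; blurring the image between ascent steps is assumed here. The names `gaussian_kernel1d` and `gaussian_blur`, the 3-sigma kernel radius, and the reflect padding are choices made for this sketch.

```python
import torch
import torch.nn.functional as F


def gaussian_kernel1d(sigma: float) -> torch.Tensor:
    """Normalized 1-D Gaussian kernel with radius about 3 * sigma."""
    radius = max(1, int(3 * sigma))
    x = torch.arange(-radius, radius + 1, dtype=torch.float32)
    k = torch.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(image: torch.Tensor, sigma: float = 1.0) -> torch.Tensor:
    """Blur an (N, C, H, W) tensor channel by channel; output has the same shape."""
    k = gaussian_kernel1d(sigma)
    c = image.shape[1]
    r = k.numel() // 2
    kh = k.view(1, 1, 1, -1).repeat(c, 1, 1, 1)  # horizontal kernel, one per channel
    kv = k.view(1, 1, -1, 1).repeat(c, 1, 1, 1)  # vertical kernel, one per channel
    x = F.pad(image, (r, r, 0, 0), mode="reflect")  # pad width only
    x = F.conv2d(x, kh, groups=c)
    x = F.pad(x, (0, 0, r, r), mode="reflect")      # pad height only
    return F.conv2d(x, kv, groups=c)
```

Two 1-D passes give the same result as one 2-D Gaussian convolution but with fewer multiplications; `groups=c` keeps the color channels from mixing.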

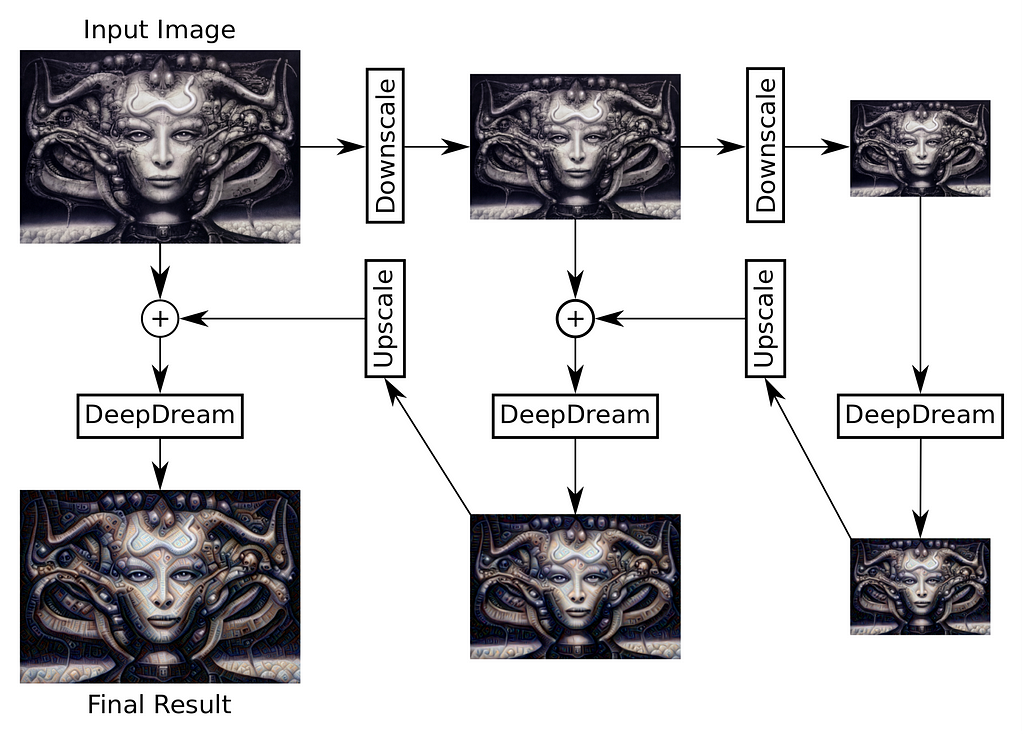

One of the main techniques for improving the images is the use of octaves. The input image is repeatedly downscaled, gradient ascent is applied to each of the scaled images, and the results are merged into a single output image. The following diagram helps illustrate octaves:

Source: https://www.youtube.com/watch?v=ws-ZbiFV1Ms&t=35s

Code?
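As a rough illustration of the octave idea described above, here is a sketch of building the stack of repeatedly downscaled images. The name `make_octaves`, the number of octaves, the scale factor, and bilinear resizing are all assumptions for illustration.

```python
import torch
import torch.nn.functional as F


def make_octaves(image: torch.Tensor, num_octaves: int = 4, scale: float = 1.4) -> list:
    """Return [full size, smaller, ..., smallest] versions of an (N, C, H, W) image (sketch)."""
    octaves = [image]
    for _ in range(num_octaves - 1):
        h, w = octaves[-1].shape[-2:]
        size = (max(1, int(h / scale)), max(1, int(w / scale)))  # never shrink below 1 pixel
        octaves.append(F.interpolate(octaves[-1], size=size, mode="bilinear", align_corners=False))
    return octaves
```

Gradient ascent would then run on each entry, typically from the smallest up, with each result resized back up and merged into the next larger one.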

I used VGG16 to create these DeepDream visualizations.

The code has two main functions:

- dd_helper: This is the actual DeepDream code. It takes an input image, makes a forward pass up to a particular layer, and then updates the input image by gradient ascent.

- deep_dream_vgg: This is a recursive function. It repeatedly downscales the image and then calls dd_helper. It then upscales the result and merges (blends) it with the image one level higher in the recursion tree. The final image is the same size as the input image.
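The recursive structure described in this list can be sketched as follows. This is not the author's code: the signatures, the compact `dd_helper` body, the blend weight of 0.6, and the octave `scale` of 1.4 are assumptions for illustration, and `model` stands in for VGG16 truncated at the chosen layer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def dd_helper(image, model, iterations=5, lr=0.05):
    """Gradient ascent on `image` to increase the activations of a truncated CNN (sketch)."""
    x = image.clone().detach()
    for _ in range(iterations):
        x.requires_grad_(True)
        act = model(x)
        act.backward(act.detach())  # same as maximizing 0.5 * sum(act ** 2)
        g = x.grad
        x = (x + lr * g / (g.abs().mean() + 1e-8)).detach()
    return x


def deep_dream_vgg(image, model, num_octaves=3, scale=1.4, blend=0.6, **kwargs):
    """Recursive octave DeepDream; the output has the same size as `image` (sketch)."""
    if num_octaves > 1:
        h, w = image.shape[-2:]
        small_size = (max(1, int(h / scale)), max(1, int(w / scale)))
        small = F.interpolate(image, size=small_size, mode="bilinear", align_corners=False)
        # Recurse: dream on the downscaled image first.
        dreamed_small = deep_dream_vgg(small, model, num_octaves - 1, scale, blend, **kwargs)
        upscaled = F.interpolate(dreamed_small, size=(h, w), mode="bilinear", align_corners=False)
        # Merge the dreamed lower octave into this level before dreaming at this size.
        image = blend * image + (1 - blend) * upscaled
    return dd_helper(image, model, **kwargs)
```

Extra keyword arguments such as `iterations` and `lr` are forwarded to `dd_helper` at every level, so each octave uses the same ascent settings.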

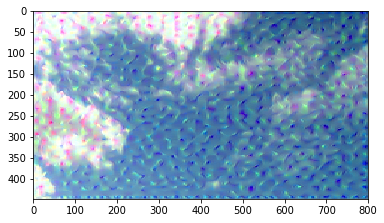

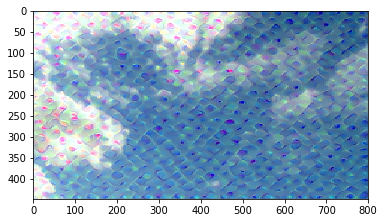

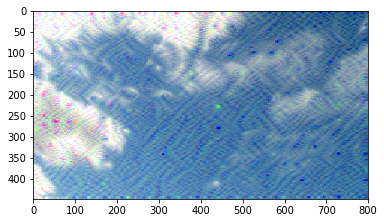

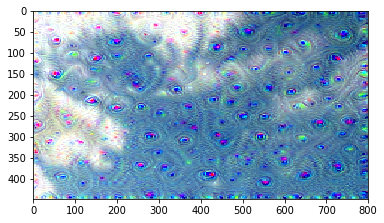

Output at various conv layers (from shallow to deeper layers):

Input image

Notice that the shallow layers learn basic shapes, lines, and edges. The layers after those learn patterns, and the deeper layers learn much more complex features such as eyes and faces.

If you liked this, give me a ⋆ on GitHub!

DL06: DeepDream (with code) was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
