
Create an iOS app — like Prisma — with CoreML, Fast Style Transfer, and TensorFlow.

Table Of Contents:

- Intro & Setup

- Preliminary Steps

- CoreML Conversion

- iOS App

Intro

The basis of this tutorial comes from the Prisma Labs blog and their PyTorch approach. However, we will use TensorFlow for the models, specifically Fast Style Transfer by Logan Engstrom, which is a MyBridge Top 30 (#7).

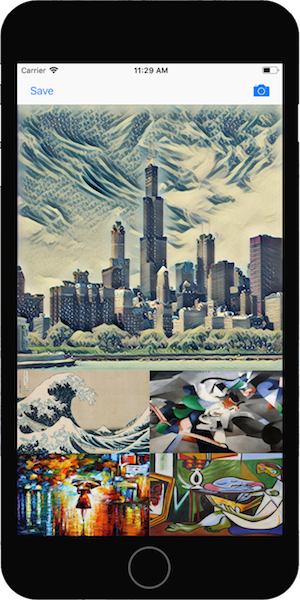

The result of this tutorial will be an iOS app that can run the TensorFlow models with CoreML. Here is the GitHub repo — this also contains all adjustments and additions to fst & tf-coreml.

What made this possible?

- Stanford Research

- Fast Style Transfer

- Apple’s CoreML *Does not support TensorFlow

- Google releases TensorFlow Lite *Does not support CoreML

- Google releases CoreML support *Does not offer full support

- we make some adjustments and hack together a solution

Setup

Models: We’re going to use fst’s pre-trained models — custom models will work as well (you’ll need to make minor adjustments that I’ll note). Download the pre-trained models.

Fast Style Transfer: https://github.com/lengstrom/fast-style-transfer *clone and run setup if needed

TensorFlow: With fst, I’ve had the most success using TensorFlow 1.0.0, and with tf-coreml you’ll need 1.1.0 or greater *does not need to use GPU for this tutorial

TensorFlow CoreML: https://github.com/tf-coreml/tf-coreml *install instructions

iOS: 11

Xcode: 9

Python: 2.7

Preliminary Steps

We need to do some preliminary steps because Fast Style Transfer is more of a research implementation than a codebase built for reuse and production (it has no naming convention and does not write out a graph).

Step 1: The first step is to figure out the name of the output node for our graph; TensorFlow auto-generates this when not explicitly set. We can get it by printing the net in the evaluate.py script.

After that we can run the script to see the printed output. I’m using the pre-trained model wave here. *If you’re using custom models, the checkpoint parameter just needs to be the directory where your meta and input files exist.

$ python evaluate.py --checkpoint wave.ckpt --in-path inputs/ --out-path outputs/

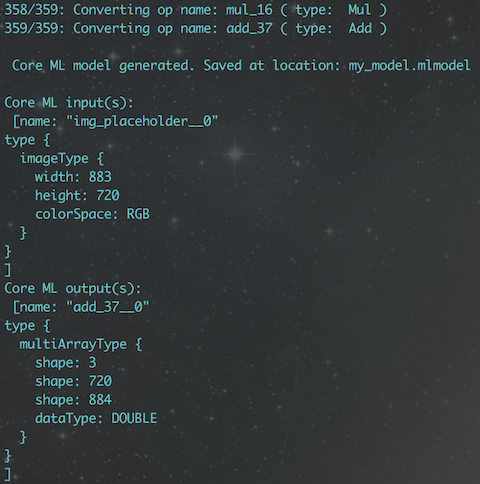

> Tensor("add_37:0", shape=(20, 720, 884, 3), dtype=float32, device=/device:GPU:0)

The only data that matters here is the output node name, which is add_37. This makes sense as the last unnamed operator in the net is addition, see here.
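TensorFlow tensor names take the form `op_name:output_index`, so the node name is whatever comes before the final colon. A minimal sketch of that split, where the helper name `node_name_from_tensor` is hypothetical and not part of fst or TensorFlow:

```python
def node_name_from_tensor(tensor_name):
    """Return the op (node) name for a tensor name such as 'add_37:0'."""
    # Split on the last colon; the part after it is the output index.
    head, sep, tail = tensor_name.rpartition(":")
    if sep and tail.isdigit():
        return head
    # No ':<index>' suffix, so the string is already a node name.
    return tensor_name


print(node_name_from_tensor("add_37:0"))
```

The node name (without `:0`) is what graph-freezing utilities expect, while the full tensor name (with `:0`) is what the printed `Tensor(...)` line shows.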

Step 2: We need to make a few more additions to evaluate.py so that we can save the graph to disk. Note that if you’re using your own models, you’ll need to add the code to the branch that handles a checkpoint directory rather than the one that handles a single checkpoint file.
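One way to save the graph in TensorFlow 1.x is to freeze the variables into constants and serialize the result. The sketch below is an assumption about what such an addition could look like, not necessarily the exact code used in the repo; the helper name `save_frozen_graph` is hypothetical, while `output_graph.pb` and `add_37` come from this tutorial.

```python
def save_frozen_graph(sess, output_node_names, path="output_graph.pb"):
    """Freeze the session's variables into constants and write the graph to disk."""
    # Imported lazily so the helper can be defined without TensorFlow present.
    # Assumes the TensorFlow 1.x API.
    import tensorflow as tf

    # Replace every variable with a constant holding its restored value,
    # keeping only the ops needed to compute the requested output nodes.
    frozen_graph_def = tf.graph_util.convert_variables_to_constants(
        sess, sess.graph_def, output_node_names)

    # A GraphDef is a protobuf message, so it is written as raw bytes.
    with open(path, "wb") as f:
        f.write(frozen_graph_def.SerializeToString())
    return path
```

In evaluate.py this would be called after the checkpoint has been restored into the session, for example `save_frozen_graph(sess, ["add_37"])`.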

Step 3: Now we run evaluate.py on a model, and we will end up with our graph file saved. *When training models we probably used a batch size greater than 1, as well as a GPU; however, CoreML only accepts graphs with a batch size of 1 that are placed on the CPU, so note the adjusted flags in the evaluate command.

$ python evaluate.py --checkpoint wave/wave.ckpt --in-path inputs/ --out-path outputs/ --device "/cpu:0" --batch-size 1

Awesome, this creates output_graph.pb & we can move on to the CoreML conversion.

CoreML Conversion

Thanks to Google, there is now a TensorFlow to CoreML converter: https://github.com/tf-coreml/tf-coreml. This is awesome, but the implementation is new and lacks some core TensorFlow operations, like power.

Step 1: Our model will not convert without support for power, but luckily Apple’s coremltools provides a unary conversion that supports power. We need to add this code to the tf-coreml implementation. Below is a gist for the 3 files you will need to make additions to.

Step 2: Create and run the conversion script.

$ python convert.py
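A conversion script along these lines could call `tfcoreml.convert` with the frozen graph from the previous section. This is a sketch under assumptions: the helper names are hypothetical, the input shape is taken from the printed tensor with the batch dimension set to 1, and treating `img_placeholder` as an image input is my assumption rather than something the converter requires.

```python
def build_convert_kwargs(pb_path, mlmodel_path, output_node, input_node, input_shape):
    """Assemble keyword arguments for tfcoreml.convert."""
    # tf-coreml refers to tensors, so node names get the ':0' output index.
    input_tensor = input_node + ":0"
    return {
        "tf_model_path": pb_path,
        "mlmodel_path": mlmodel_path,
        "output_feature_names": [output_node + ":0"],
        "input_name_shape_dict": {input_tensor: list(input_shape)},
        # Assumption: expose the input as an image rather than a MultiArray.
        "image_input_names": [input_tensor],
    }


def run_conversion(kwargs):
    """Run the converter; requires tf-coreml to be installed."""
    import tfcoreml  # imported lazily so the kwargs can be inspected without it
    return tfcoreml.convert(**kwargs)


kwargs = build_convert_kwargs(
    "output_graph.pb", "my_model.mlmodel",
    output_node="add_37", input_node="img_placeholder",
    input_shape=(1, 720, 884, 3))  # batch 1, height 720, width 884, 3 channels
print(kwargs["output_feature_names"])
```

Calling `run_conversion(kwargs)` would then write `my_model.mlmodel`, provided the power operation has been added to tf-coreml as described in Step 1.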

The CoreML converter itself does not provide the capability to output images from a model. Images are represented by NumPy arrays (multi-dimensional arrays), which are the actual output, and these are compiled to the non-standard MLMultiArray type in Swift. I searched for help online and was able to get some code that loads the model produced by the converter and then traverses it to transform the output types to images.

Step 3: Create and run the output transform script on the model (my_model.mlmodel) which was outputted above.

$ python output.py
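The output transform can be sketched with coremltools by loading the model spec, switching each output's feature type from MultiArray to image, and saving the result. The function names below are hypothetical, and passing the width and height explicitly (rather than reading them from the spec) is an assumption that matches using the evaluation input size.

```python
def retype_output_as_image(output, width, height, color_space):
    """Describe one model output as an image of the given size."""
    # Setting fields on imageType makes it the active feature type
    # for this output in the protobuf spec.
    output.type.imageType.colorSpace = color_space
    output.type.imageType.width = width
    output.type.imageType.height = height


def convert_outputs_to_images(in_path, out_path, width, height):
    """Rewrite every output of a saved .mlmodel as an RGB image."""
    # Imported lazily; requires coremltools to be installed.
    import coremltools
    from coremltools.proto import FeatureTypes_pb2 as ft

    spec = coremltools.utils.load_spec(in_path)
    rgb = ft.ImageFeatureType.ColorSpace.Value("RGB")
    for output in spec.description.output:
        retype_output_as_image(output, width, height, rgb)
    coremltools.utils.save_spec(spec, out_path)
```

For the wave model in this tutorial, a call might look like `convert_outputs_to_images("my_model.mlmodel", "wave.mlmodel", width=884, height=720)`.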

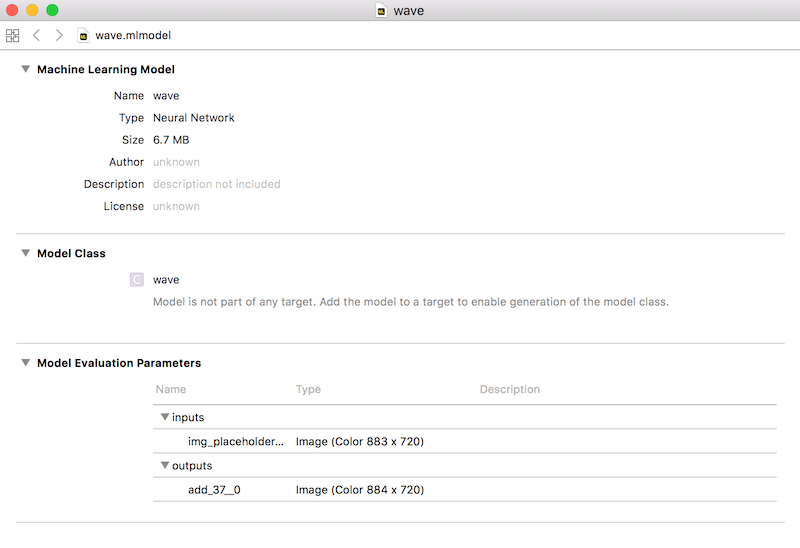

and ….. 🎉💥🤙 ….. we have a working CoreML model. *I changed the name of it to “wave” in the output script, also the image size corresponds to the input for evaluation.

iOS App

This isn’t so much an iOS tutorial, so you’ll want to work with my repo. I’ll only cover the important details here.

Step 1: Import the models into your Xcode project. Make sure you add them to your target.

Step 2: After importing, you’ll be able to instantiate the models like so:

Step 3: Create a class for the model’s input parameter, which is an MLFeatureProvider. *img_placeholder is the input that is defined in the evaluate script

Step 4: Now we can call the model in our code.

Final: The rest of the app is just setup and image processing. Nothing new or directly related to CoreML, so we won’t cover it here. At this point, you’ll have a good understanding of how everything came together and should be able to innovate further. I think there can be improvement on fst’s graph. We should be able to gut out over-engineered operations to make the style-transfer even faster on iOS. But for now everything works pretty well.

✌️🤙

DIY Prisma, Fast Style Transfer app — with CoreML and TensorFlow was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
