It was a dark, grey winter's evening and I found myself, as I had done way too many times in the past, staring at my computer screen endlessly scrolling through useless articles ranging from snowflaky self-help to brain-melting tutorials.

Phew, that was a long sentence.

Anyways, for reasons I can’t remember I found myself reading this article: https://www.digitaltrends.com/cool-tech/japanese-ai-writes-novel-passes-first-round-nationanl-literary-prize/

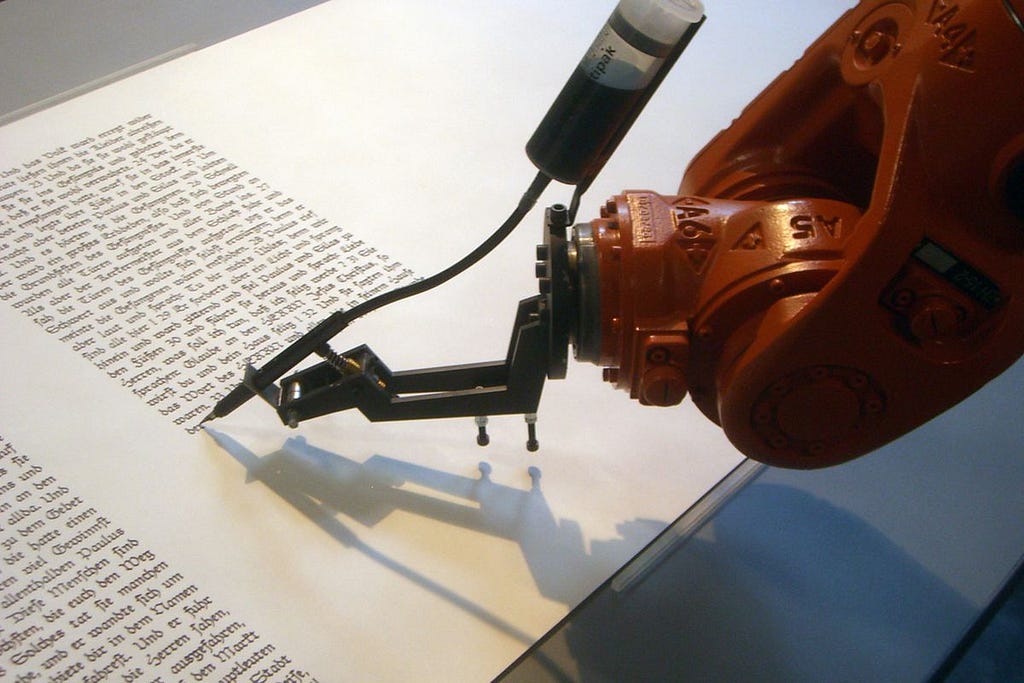

An AI wrote a novel, and what's more, it almost won a literary prize! So, not only was it not garbage, it was good enough to fool the experts! I was intrigued, and started googling away…

I found out that there actually is a National Novel Generation Month where people submit their best attempt at generating a novel that is at least 50,000 words. I looked at some of the code/outputs and was really intrigued. You can find it here: https://github.com/NaNoGenMo

And then, as if I wasn’t frothing at the mouth from excitement already, I read this: https://github.com/zackthoutt/got-book-6

A guy wrote the next Game of Thrones book using deep learning! All sorts of thoughts raced through my head. I could see it now…

I was going to take some data and put it through my amazing algorithm. I was going to publish a novel. It was going to become a best seller. The next big fantasy series. I’d be doing pretty well with book sales. Netflix would then come and secure rights to my book. They would make a trilogy of movies. I’d get to meet Jennifer Lawrence. I’d be doing really well now. They would invite me for an interview on TV. The interviewer would gush at my work and tell me how she’s such a big fan and ask me what’s the secret to my seemingly superhuman ability to produce so many amazing novels. I’d give her a smug grin, look at the camera, and shout:

“Haha, you idiots! I just fed all the Wheel of Time books through an algorithm I stole from some random dude on GitHub! You’re like the people in that Al Pacino movie where he makes that Simone software. You got Simoned!”

Pandemonium would break out. People would start questioning their reality. They would be afraid to pick up another book in case their favorite author turns out to be a machine.

I couldn’t wait. I set about executing my devious plan.

30 minutes later…

I gave up.

Yeah, turns out most algorithms are not that great at churning out good novels. In fact, they produce really incoherent, and kind of funny, outputs:

“i had inherited the company because of the future putting his brother in applause. parents was a stupid success, madam, now indeed, and female form in individual color. now the rant happened and closet, maiden, always won for any present, in six.”

I clearly wasn’t going to be impressing anyone, let alone Netflix and Jennifer Lawrence, with that prose. I was disappointed. I had no experience in deep learning and no resources to consume a ridiculous amount of data, so I could scarcely hope to beat these efforts made by so-called experts.

If only there were a genre where these random words could be interpreted as genius. And then it hit me. Poetry! What is poetry but a bunch of random words thrown together? It’s the equivalent of modern art in literature. Okay, I apologize to anyone who loves and reads poetry. I am sure it can be beautiful and meaningful. But it’s never really appealed to my unsophisticated tastes. I tend to prefer a plainly worded fairy tale with swords. And dragons. And wise old men with long white beards.

But I was curious to try it out and see what kind of output I could get with poetry. So began my little project.

Data

The data I decided to use was Walt Whitman’s Leaves of Grass. Even a plebeian like me can appreciate Walt Whitman! You can find this at Project Gutenberg, which is a resource for works that were never under copyright or are now in the public domain: https://www.gutenberg.org/

I found the text file for Leaves of Grass and saved it down on my computer. Let’s call it data.txt.
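Project Gutenberg text files usually wrap the book in a header and a license footer, and you may not want the model learning to write license text. Here is a minimal sketch for trimming them. It assumes the marker lines start with "*** START OF" and "*** END OF", which is worth checking against your copy; the function name is made up for illustration, and this step is not something the original notebook does.

```python
def strip_gutenberg_boilerplate(text):
    """Return the text between the Gutenberg START and END marker lines.

    If a marker is missing, nothing is trimmed on that side.
    """
    lines = text.splitlines()
    start, end = 0, len(lines)
    for i, line in enumerate(lines):
        if line.startswith("*** START OF"):
            start = i + 1  # the book begins after the START marker
        elif line.startswith("*** END OF"):
            end = i        # the book stops before the END marker
            break
    return "\n".join(lines[start:end]).strip()


# Tiny stand-in for the real file, just to show the behaviour.
sample = (
    "The Project Gutenberg eBook of Leaves of Grass\n"
    "*** START OF THE PROJECT GUTENBERG EBOOK LEAVES OF GRASS ***\n"
    "I celebrate myself, and sing myself,\n"
    "*** END OF THE PROJECT GUTENBERG EBOOK LEAVES OF GRASS ***\n"
    "License text follows.\n"
)
cleaned = strip_gutenberg_boilerplate(sample)
print(cleaned)
```

On the real file you would read data.txt, pass its contents through the function, and write the result back out.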

Algorithm

I decided to use Zack Thoutt’s algorithm, which he had used to generate the next book of Game of Thrones: https://github.com/zackthoutt/got-book-6

Where do I run this?

One thing you need to know about deep learning is that you need a lot of computing power to run the models. Ideally, you need a GPU resource to do the heavy lifting for you. I did not have a GPU in my possession but luckily I had dabbled (emphasis on the dabble) with deep learning before and signed up for Jeremy Howard’s course on it: http://www.fast.ai/

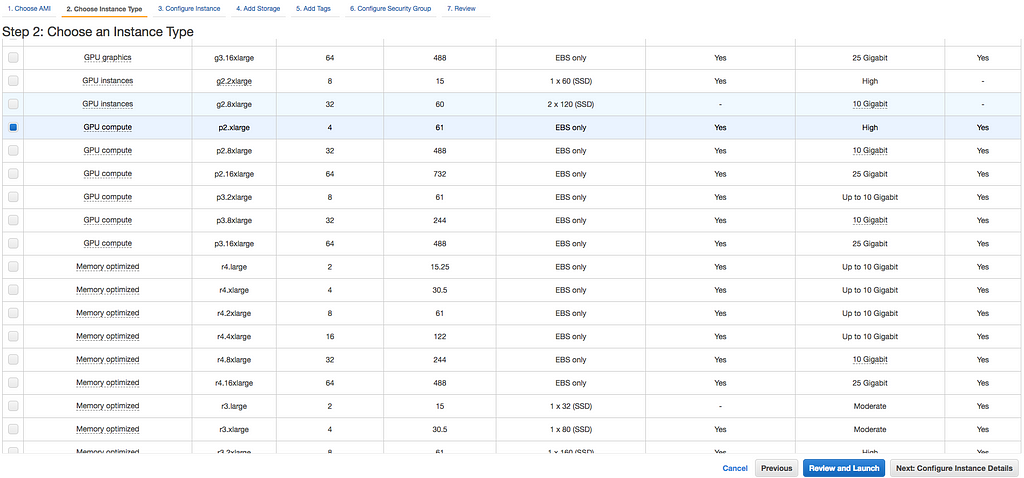

In the fast.ai course you run all your models in the cloud, so you are not constrained by your own machine. We set up an EC2 instance on Amazon Web Services (AWS) with GPU power behind it (this would be the P2 instance). You need to ask Amazon to give you access to this instance type, which you can do if you are a member of his course.

Once you have your instance running, you then run your code in a Jupyter notebook hooked up to that instance. I felt that setting up all this to run your deep learning model was a pain for a layman like me so I am going to do a step-by-step walkthrough on how to do this. Now, there are many ways to do this. But I am just going to show you the way I did it. It might not be the best way. But it works.

Finally, the tutorial

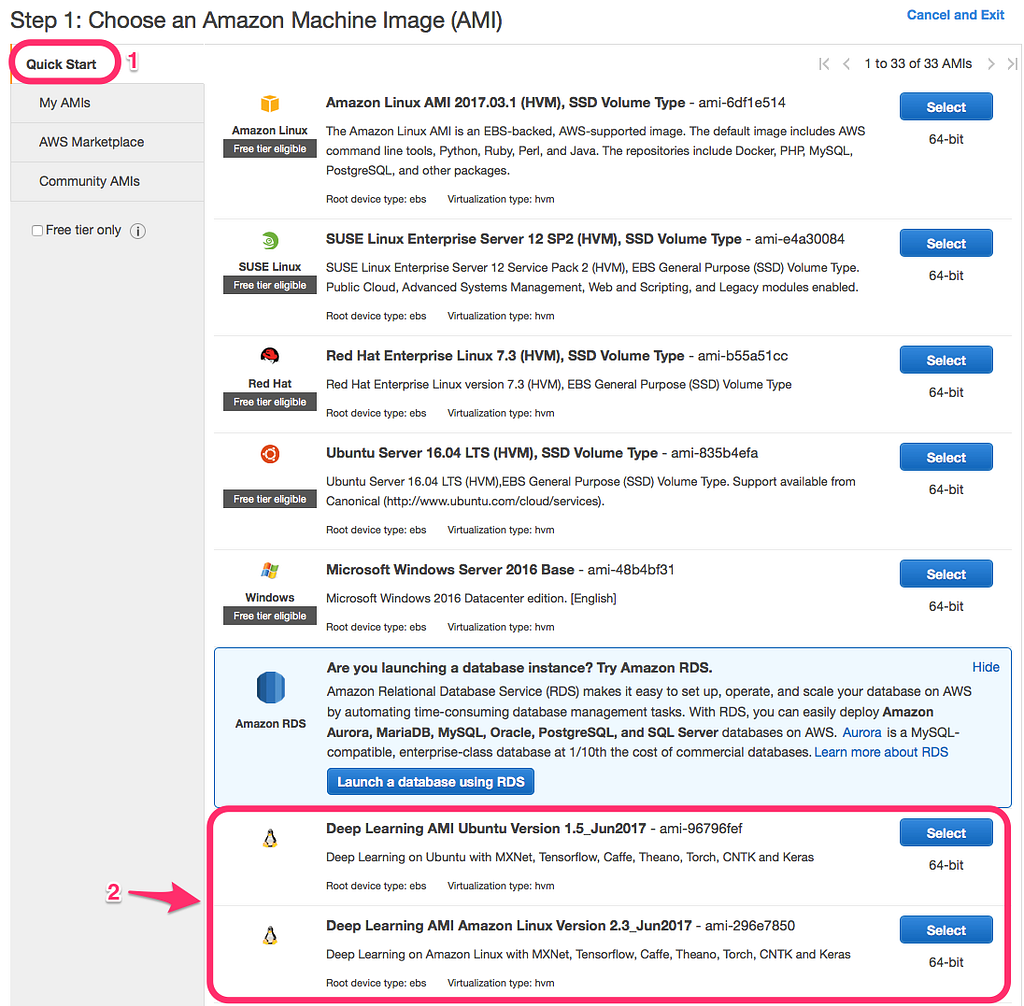

I am assuming you know how to start EC2 instances. For this, you want to use one of the AWS Deep Learning AMIs. This way you do not have to worry about installing any of the deep learning packages. I selected the AWS Deep Learning AMI (Amazon Linux).

Now, select a p2 instance. This would probably be the p2.xlarge instance unless you’re a big shot who got approved for an even bigger instance.

Once you are okay with everything, launch your instance.

Now, open up a terminal and connect to your EC2 instance. You know the drill: use the terminal on a Mac or PuTTY on Windows. You can find out how to SSH into your instance by right-clicking on your EC2 instance and choosing the Connect option. The command will be similar to this:

ssh -i your-ec2-key.pem ec2-user@ec22-212-21-23-21.compute-1.amazonaws.com

You’re in! And everything is already set up because we selected the Deep Learning AMI. Well, everything except for a few tiny things…

One of the libraries used for deep learning is TensorFlow. We want to use TensorFlow version 1.0.0 for this exercise. The reason is that the GitHub code we will be using breaks on later versions. It’s probably a simple enough update to change the code to fix this but I am lazy and just want to run this ****.

We need to change our version by first uninstalling the existing TensorFlow package and then installing 1.0.0.

# Uninstall
pip uninstall tensorflow

# Install version 1.0.0
pip install tensorflow==1.0.0
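If you want to confirm the pin actually took, a quick check from Python can help. This is only a sketch: the helper names are invented for illustration, and the only thing it relies on from TensorFlow is the `__version__` attribute.

```python
PINNED_VERSION = "1.0.0"  # the version pinned with pip above


def tensorflow_version():
    """Return the installed TensorFlow version string, or None if it is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return tf.__version__


def describe(version, pinned=PINNED_VERSION):
    """Turn a version string (or None) into a short status message."""
    if version is None:
        return "tensorflow is not installed"
    if version == pinned:
        return "ok: tensorflow " + version
    return "mismatch: found " + version + ", wanted " + pinned


print(describe(tensorflow_version()))
```

Run it with the same Python that Jupyter will use, otherwise you may be checking a different environment.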

Then we need to optimize our GPU settings because Amazon for some reason does not do this automatically:

# optimize GPU
sudo nvidia-persistenced
sudo nvidia-smi --auto-boost-default=0
sudo nvidia-smi -ac 2505,875

Another thing we need to do to ensure that our deep learning algorithm actually uses the GPU on this instance is to set the library path for CUDA:

export LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64

Done! Now we are ready to start up Jupyter. You need to run this command:

jupyter notebook --no-browser --port=8888

Naturally, there is one more step. Open a new tab or window in your local terminal and run this command (with your own key and EC2 address) so that Jupyter is reachable on localhost:8000:

ssh -i EC2key.pem -L 8000:localhost:8888 ec2-user@ec2-21-21-21-21.compute-1.amazonaws.com
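If the browser later refuses to connect, it helps to know whether the tunnel is up at all. Here is a small sketch that just tries to open a TCP connection to the local end of the tunnel. The function name is made up, and port 8000 is taken from the ssh -L command above.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 8000 is the local end of the tunnel from the ssh -L command above.
    if port_is_open("localhost", 8000):
        print("something is listening on localhost:8000")
    else:
        print("nothing is listening on localhost:8000")
```

A successful connection only tells you the tunnel is listening locally. Jupyter still has to be running on port 8888 on the instance for the page to load.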

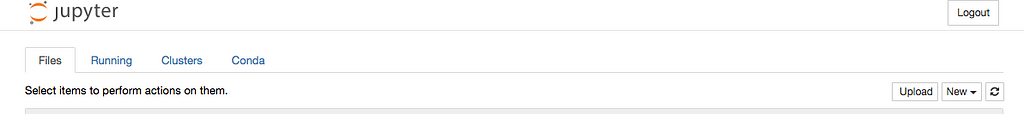

Okay! Now we are really ready to start coding, aka copy-pasting someone else's code. Open up localhost:8000 in your browser and you should see Jupyter starting up. You might need to authenticate, in which case you need to pass a token in the URL. You can find it in the terminal output where you ran the jupyter notebook command.

Now, we need to move the data inside our notebook. I am assuming you’ve been a great follower and have already saved down the data. We called it data.txt. You can easily move this into Jupyter by using the Upload button at the top right of your home page.

On your right there is a button titled Upload.

Now that our data is in Jupyter, all we have to do is use our stolen code on it!

You can grab the notebook which has the code here: https://github.com/zackthoutt/got-book-6/blob/master/got-book-generator.ipynb

Either copy-paste it or upload the whole notebook to your Jupyter server with the Upload button. Then change the reference in the code to point at your data:

book_filenames = sorted(glob.glob("/data/*.txt"))

Becomes:

book_filenames = sorted(glob.glob("data.txt"))

And now you just hit run and go through each of the cells from top to bottom!
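One thing to watch: glob.glob quietly returns an empty list when nothing matches, and a relative pattern like "data.txt" is resolved against the notebook's working directory. A small guard makes a wrong path fail loudly instead of training on nothing. This is a sketch, not part of the original notebook, and find_books is a made-up helper name.

```python
import glob
import os
import tempfile


def find_books(pattern):
    """Return sorted paths matching pattern, or raise if nothing matches."""
    filenames = sorted(glob.glob(pattern))
    if not filenames:
        raise FileNotFoundError("no files match " + repr(pattern))
    return filenames


# Demo in a throwaway directory so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as workdir:
    with open(os.path.join(workdir, "data.txt"), "w") as handle:
        handle.write("I celebrate myself, and sing myself,\n")
    found = find_books(os.path.join(workdir, "data.txt"))
    print([os.path.basename(path) for path in found])
```

In the notebook itself you would call find_books("data.txt") in place of the bare sorted(glob.glob(...)) line.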

Once you train your model (and you can play around with the hyperparameters), you can pick a word and generate a sample of Whitmanesque poetry. It should be saved under an outputs folder on your Jupyter homepage. Here is a sample:

i hear the new river-stripes of the western sky,
the engulfed wanderings with them through
what thou side to the rest, lilacs out at the music, shouting, long, unbereav’d, henceforth in thy task music,
here with seed mere purpose more without drop or century enough o people,

farther with india!
centuries a bunting!

always me pleas’d and her time or land!
corroborating for the sands of talk and pause toward him,
the war wreck’d, the best of hand, then freely alone,
endless along the seas,
endow
outside aside and his face
of america’s forehead,
manhattan in the north as all the golden ground,
down through many a distant armies, standing for your brother?

Look at that. It makes no sense. But maybe it does? It’s genius is what I say. Now you too can become the next James Joyce and they’ll be trying to figure out what you meant for years to come.

Here’s another one:

rocks and foam of victory, time and crossing night,
however rock the voice and
general quickly lovers,
we stand no more playing out plan from your aims, libertad,
all walk,
in the ruins of the two the heroes slowly
busy with her — the odds of the rest prepared,, to its know puts here
around — touch,
my comrade at mechanics with
woe,
after i at lanes and space beautiful out so well — done

now the sun with all their turn there are in last,
bright out of the interminable convulsive, journeying, this
their spheres,
for my hands back over the white hand in a swamp,
i know as feudal voice, inseparably coarse and round hand is so,
till i hear the carol wash’d cubic instruments, and bear close its hoarse steps in the frozen sentry,
the builder, corner’d and desperate, an creatures,
the shy and odour to, the sailors the arrogant small, and ringing the splashing rolls whirl sat,
out and the real cover years overhead rising or tumbling city.

Doesn’t it bring a tear to your eye?
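If you are wondering what "pick a word and generate" means mechanically, here is a toy illustration. To be clear, this is not the notebook's model: the notebook trains a neural network, while this sketch swaps in a plain bigram table (each word maps to the words seen right after it) purely to show the seed-then-sample loop. The corpus line and function names are invented for the example.

```python
import random
from collections import defaultdict


def build_bigrams(text):
    """Map each lowercase word to the list of words that follow it in text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, following in zip(words, words[1:]):
        followers[current].append(following)
    return followers


def generate(followers, seed_word, length, rng):
    """Start from seed_word and keep sampling a follower, up to length words."""
    output = [seed_word]
    # Stop early if the last word was never followed by anything.
    while len(output) < length and followers.get(output[-1]):
        output.append(rng.choice(followers[output[-1]]))
    return output


corpus = "i sing the body electric i sing the song of myself"
model = build_bigrams(corpus)
poem = generate(model, "i", 6, random.Random(0))
print(" ".join(poem))
```

The real model conditions on much more than the previous word, which is why its output at least sounds like Whitman, but the loop has the same shape: start from a seed, predict, append, repeat.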

Also, if your model is taking a long time to run please make sure you are running it on your GPU. You can check this by running:

nvidia-smi

If it shows the message No running processes found even though your model is being trained, the GPU has not been hooked up properly. Again, make sure you ran this line:

export LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64
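If you would rather check this from a notebook cell than from the terminal, here is a rough sketch. It assumes nvidia-smi prints the phrase "No running processes found" when the GPU is idle, and the helper names are made up. Treat the result as a hint, since any output without that phrase counts as busy.

```python
import shutil
import subprocess


def gpu_is_busy(smi_output):
    """Return True unless nvidia-smi reported an empty process table."""
    return "No running processes found" not in smi_output


def read_nvidia_smi():
    """Return nvidia-smi's stdout, or None if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi"], stdout=subprocess.PIPE, universal_newlines=True
    )
    return result.stdout if result.returncode == 0 else None


output = read_nvidia_smi()
if output is None:
    print("nvidia-smi is not available on this machine")
elif gpu_is_busy(output):
    print("GPU is in use")
else:
    print("GPU is idle, so training is probably running on the CPU")
```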

Hope this was helpful!

Please do share your results. I’d be curious to see what you got. :)

Bilal

How to write some Walt Whitman style poetry using Deep Learning was originally published in Hacker Noon on Medium.
