Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

So this weekend, I revisited a project that I had sitting on the back burner. For this techsploration, I tried to procedurally generate music using a rudimentary implementation of a Markov chain. This project was actually inspired by the music of the Korean pianist Yiruma, and I decided to use this as a learning experience to read up on Markov chains.

In a nutshell, Markov chains are mathematical systems that track the probabilities of state transitions. They’re often used to model complex systems and predict behavior. They’re used in a lot commercial applications, from text autocomplete to Google’s PageRank algorithm. My first encounter with a Markov chain was actually in my high school software development class when a classmate built a chat bot using this concept. He took the log from our class Slack chat and fed it into a Markov chain. Using each word as a “state”, he mapped the probability of word transitions and generated text using those probabilities.

As an example of how this works, the word “I” tends to be followed by the words “am” or “are” more often than it is followed by the word “suppose”. Using our class Slack chat as training data, he generated a mapping of word transition probabilities and used that to string together words that were likely to be found after each other. It worked quite well, so I am going to apply this concept to Yiruma’s music. If you’re interested, you can read more about Markov chains here.

Picture from http://yiruma4ever.blogspot.com/

Picture from http://yiruma4ever.blogspot.com/

Due to the simplicity in their structure, I decided to use MIDI files for this project. MIDI files are simple files consisting of chunks which contain information about the music encoded in the file. An important distinction to note is that MIDI files are not audio files like MP3 files. Instead, they contain instructions on how to play tunes, which can be played by a variety of different computer generated instruments. Because of this, they are limited in that they can only store a certain range of notes and cannot store complex audio like vocal lyrics.

Usually, MIDI files contain one header chunk containing information about the “instruments” playing the sounds, followed by track chunks containing the notes played and their durations, velocities (volume), etc.

The first thing I did was try to find a Python library to dissect MIDI files. I settled on mido after doing a bit of research. When I first attempted to do this project a few months ago, mido was hilariously poorly documented, and there were syntax and logic errors in their example code, but it seems they’ve fixed that now. The library is pretty simple to use.

from mido import MidiFile

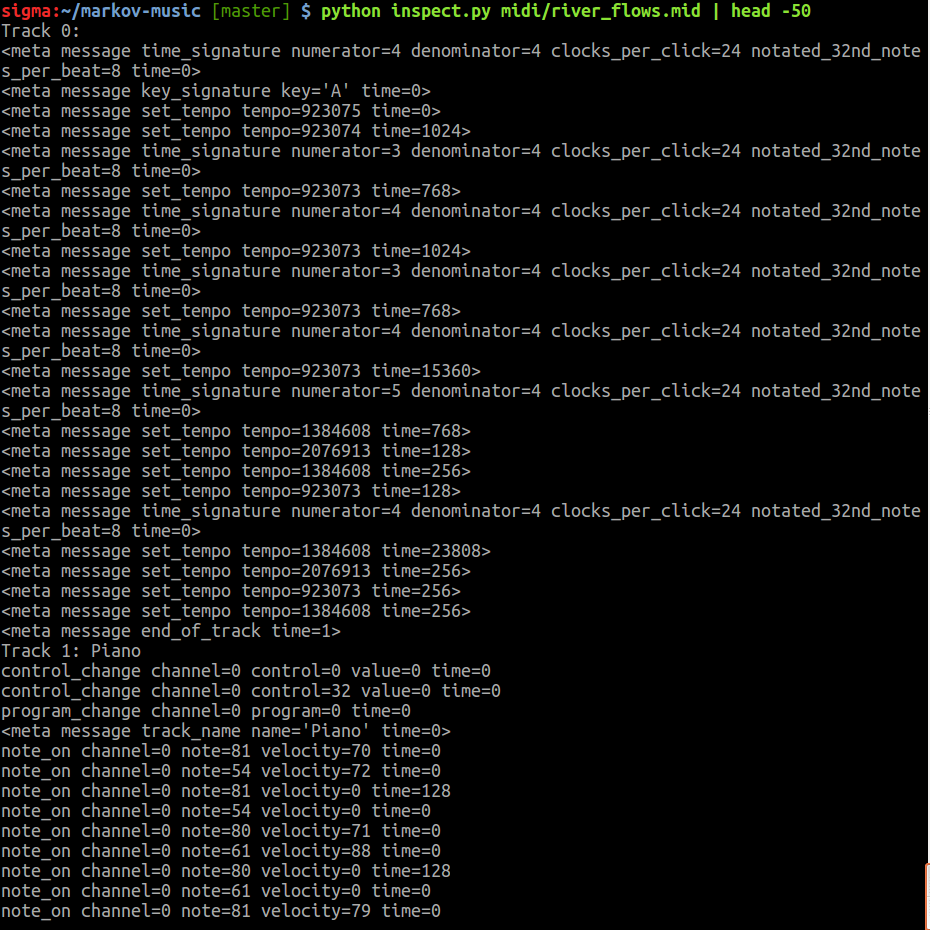

mid = MidiFile('song.mid')for i, track in enumerate(mid.tracks):print('Track {}: {}'.format(i, track.name))for message in track:print(message)That’s literally all the code you need to start reading the information (mido uses the term “messages”) in a MIDI file. The first thing I did was use this to write a basic script to just print out all the messages in a MIDI file. I downloaded a MIDI of one of Yiruma’s most famous compositions, River Flows In You, from here. Here’s what the MIDI contains.

River Flows in You by Yiruma dissected into MIDI messages

River Flows in You by Yiruma dissected into MIDI messages

I’ve omitted the rest of the MIDI messages for brevity, but you can understand the gist of how it works. Track zero contains a lot of metadata about the midi, including its time signature, tempo, etc. I’m still not 100% certain why there are multiple messages for setting the tempo and time signature, but since we don’t really care about the metadata too much, we’ll ignore it for now.

Track one is where the data for how to play the song is stored. “note_on” messages contain information on the pitch, volume, and time that a note is played, which is what we need. (Side note: the ‘time’ field actually represents the time AFTER the previous message that the current one should be played, so a time of 0 means that the note should be played at the same time as the previous message).

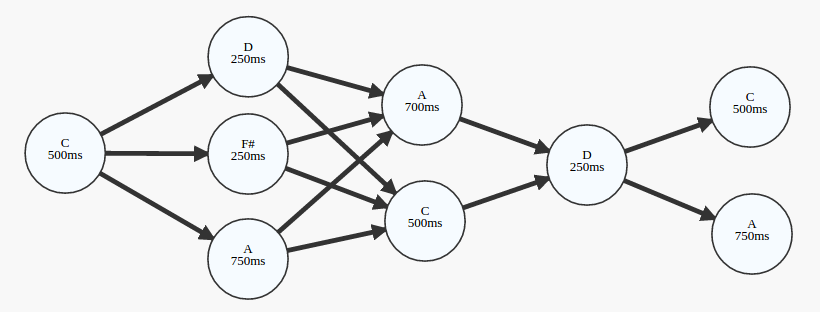

The next thing I did was write a script to isolate all the “note_on” messages in the tracks and group them into a graph of progressions. For each note, I rounded its duration to the nearest 250 milliseconds and grouped it with every note that was played at the same time that it was. Here’s a representation of that as a directed graph.

Song and notes converted to a directed graph

Song and notes converted to a directed graph

Below is a representation of the above graph as an adjacency matrix. We can represent it as such because we only care about the edges between the nodes and how often a particular note transitions to another note. For simplicity, we will only take into account the duration of the note being transitioned to and the number of times that note was transitioned to.

C (500 ms) | D (250 ms) | F# (250 ms) | A (750 ms)

C 0 2 1 1D 2 0 0 2F# 1 0 0 1A 1 1 0 1

Internally, I represented the graph in the Python code as a dictionary of dictionaries. The Python collections module was very helpful here since I used defaultdicts and Counters to keep track of the notes and their transitions.

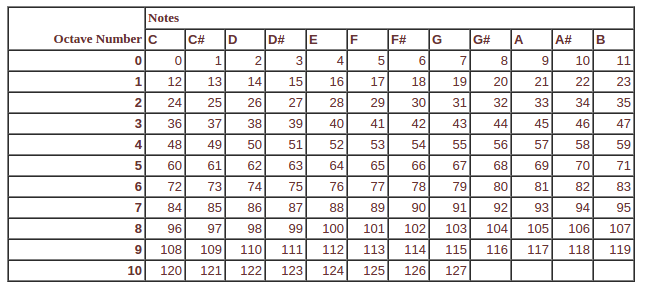

Unlike the above graphs though, we stored the notes as numbers instead since MIDI files store each note’s pitch as a number. The graph below shows the number that each note corresponds to.

http://www.midimountain.com/midi/midi_note_numbers.html

http://www.midimountain.com/midi/midi_note_numbers.html

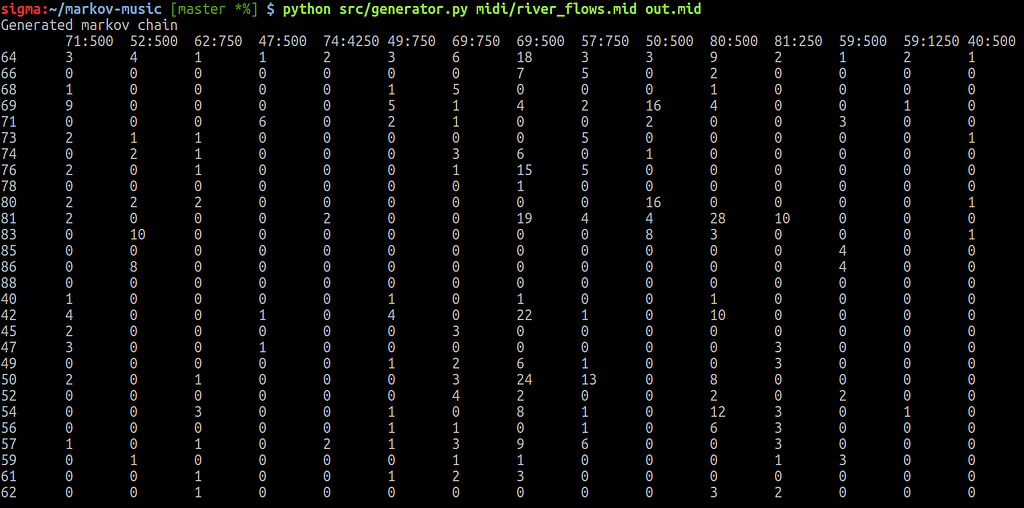

The image below shows what a small part of the adjacency matrix for River Flows In You looks like. The rest of the matrix is omitted for brevity. The numbers in the top row represent the note being transitioned to and its duration (formatted as note:duration). The numbers in the leftmost column represent the transitioned from. Each entry in the matrix represents the number of times a particular note transitioned to another specific note/duration.

A small portion of the adjacency matrix for River Flows In You by Yiruma

A small portion of the adjacency matrix for River Flows In You by Yiruma

Since River Flows in You is written in A major, it makes sense that there are a lot of transitions from note 81 (A6). It transitions to note 80, duration 500ms (G# in 6th octave) a total of 28 times. If you’re familiar with the composition, this is pretty much expected since this note transition forms a majority of the melody (here’s a recording by Yiruma himself if you haven’t heard the piece).

Coolios. Now to generate music, I’m going to start with a random note, look it up in the matrix, and select a random note that it transitions to, weighted toward the more frequent transitions. For simplicity, I’m only going to generate a single-note melody. Here’s a short 1–minute segment of a melody I generated from River Flows In You.

Here’s a short 30-second segment of a melody I generated using Chopin’s Concerto №1 in E Minor (Op. 11).

I’ve dabbled in music composition before, so just out of curiosity, let’s run one of my original compositions through this. Here’s a short 1-minute segment of that.

Well, it certainly doesn’t SOUND very good :/

This was an interesting project nonetheless. The next thing I’m going to try is coding in better heuristics for music generation that take into account music theory/harmonics. I’ll write a techsploration about that if I get to it, so stay tuned for that.

That’s all I have for now though. If you’re interested, here’s a link to the project repository. Thanks for reading!

Follow me on Twitter: @omgimanerd

Generating Music Using Markov Chains was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.