All images are from the Lecture slides.

Self Driving Cars

(or Driverless Cars or Autonomous cars or Robocars)

Utopian View:

- How SDCs have a Chance to transform our Lives:

- About 1.3M people die worldwide every year in car crashes.

- 35,000–40,000 in the US alone.

- Opportunity: To design AI systems that save lives.

- Autonomous Vehicles have the power to take away Drunk, Drugged, Distracted, Drowsy Driving.

- Eliminating Ownership:

- Increasing shared mobility.

- Saves money.

- Reduced costs make vehicles more accessible; travel costs could drop by an order of magnitude.

- Software in vehicles makes the experience more personalised.

Dystopian View:

- Eliminate Jobs: Newer technology is always feared as it might take away the jobs that rely on prior technology. ex: Professionals in Transportation industry.

- The possibility of an Intelligent system failing is one we have to struggle with at a Philosophical, Technical, Ethical level.

- AI in popular culture (not amongst engineers) may be seen as biased or lacking ethical grounding. What is the ethical grounding of the ‘black box’? Does it follow the rules of our society?

- Security: A vehicle that runs on code can be ‘hacked’.

An Engineer’s view: We want to find the best possible ways to transform our society and improve lives.

Skepticism

- Our intuition about what is difficult and what is easy for deep learning and AI is flawed.

- Humans are great at driving. Our intuition should be grounded in the source of the data, the annotations and the algorithms.

- We have to be careful when Making predictions and promises whether towards the Utopian or Dystopian View.

- A promise about future vehicles 2+ years out is doubtful.

- A promise about future vehicles within 1 year should be met with skepticism.

- A good test is testing the vehicles on public roads at scale.

- Another good challenge would be making them available for Purchase.

- A(n) (agreeable) prediction by Rodney Brooks:

- >2032: Driverless taxi service in a major US city with arbitrary pickup and drop-off, even if only within a geo-restricted area.

- >2045: The majority of US cities will mandate these.

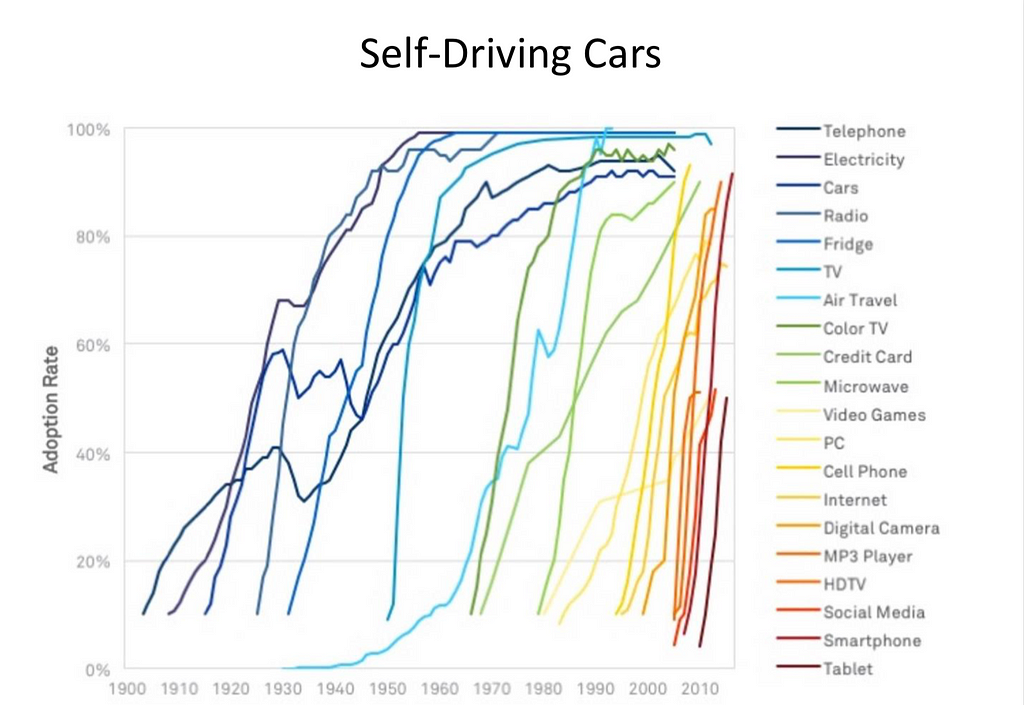

Plot of Technology adoption rate Vs the number of years:

- As a society we’re better at accepting newer technologies.

- Everything could change overnight.

Overview:

Different Approaches to Automation

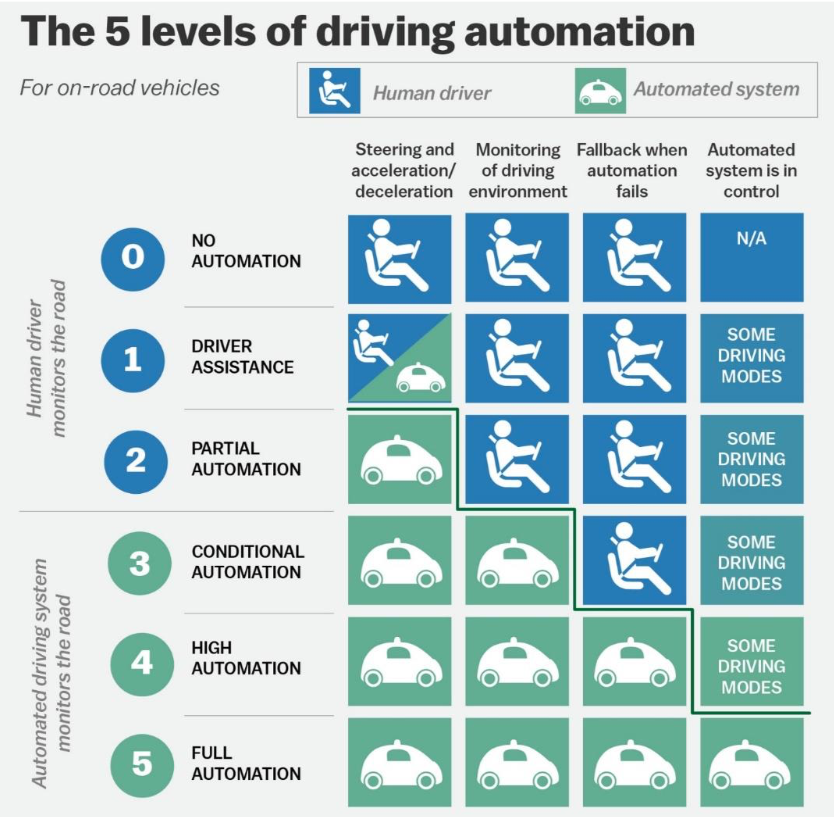

Levels of Autonomy (SAE J3016):

- A useful taxonomization for initial discussions, policy making and media posts.

- Arguably not useful for designing the underlying system or for assessing holistic system performance.

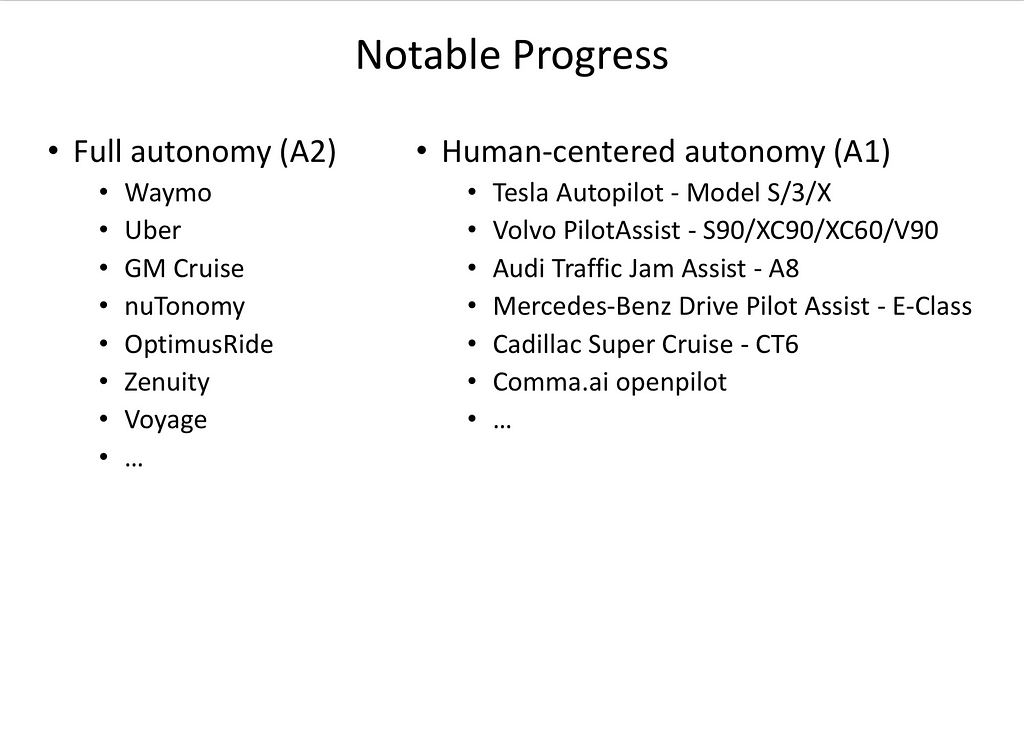

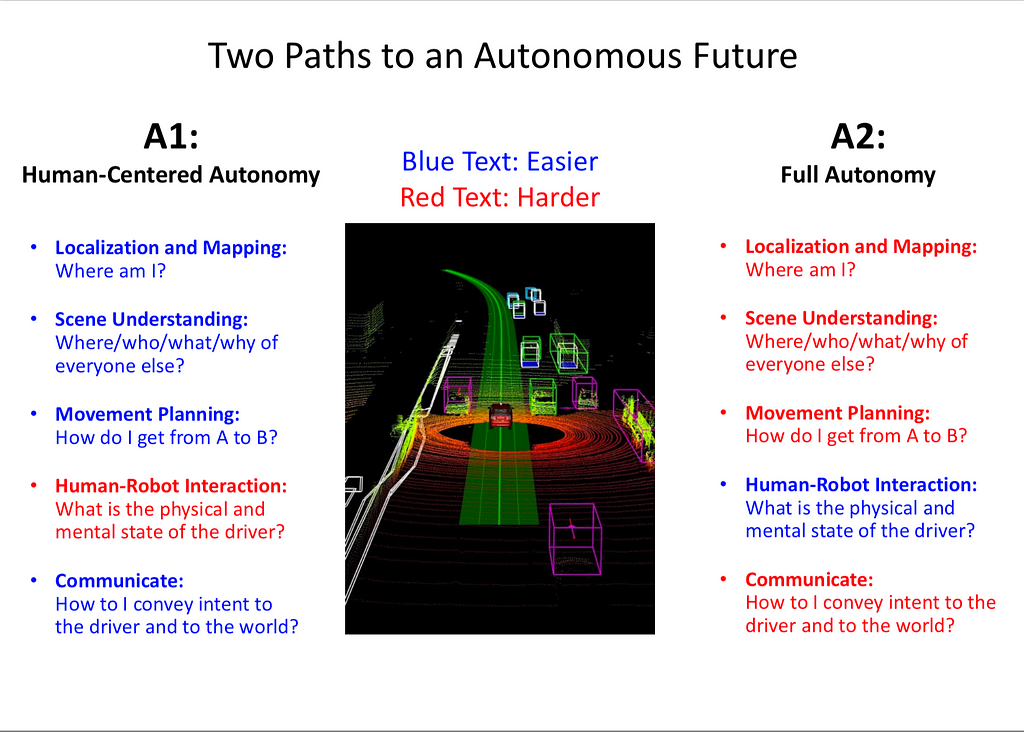

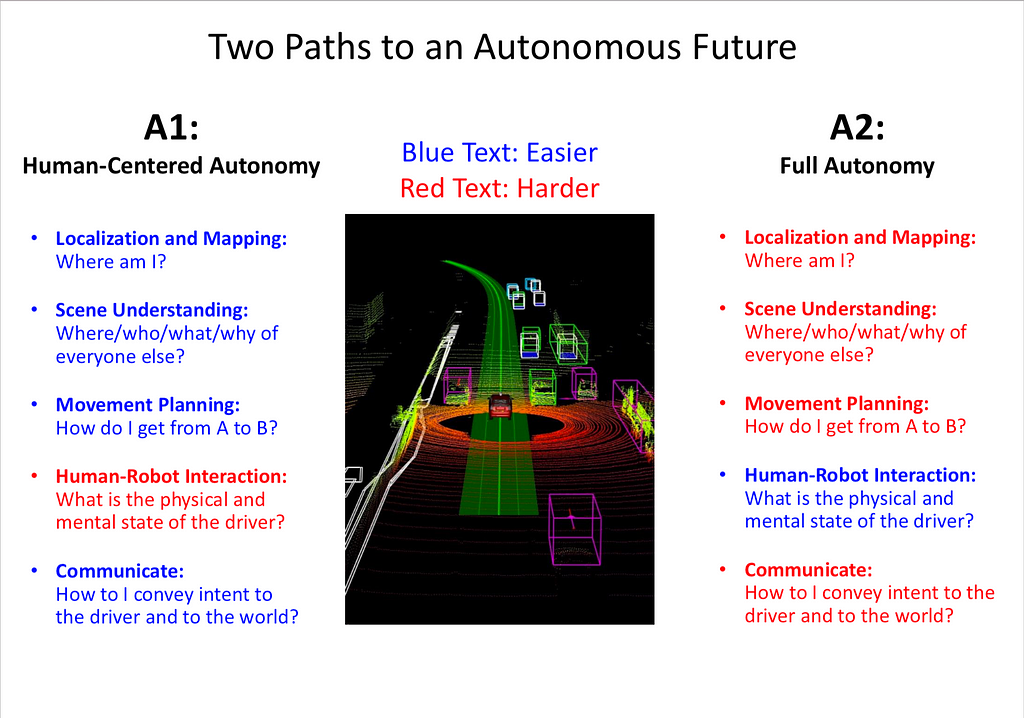

Beyond Traditional Levels: Two Types of AI Systems.

- Starting point: Every system to some degree requires a human. Human controls the vehicle and gets to engage the system.

- A1: Human-Centred Autonomy: Definition: The AI is not fully responsible. The human is responsible for the vehicle's behaviour.

- How often is it available?

- Is it sensor based?

- Number of seconds given to the driver to take over (time to wake up and take control); currently it's almost 0 seconds.

- Taking over control remotely: support from a human who is not inside the car. For human-centred autonomy, the human is responsible. The assumption is that the system will fail and a human will be needed to take over control.

- A2: AI is fully responsible. Legally speaking, the car designer is responsible for the behaviour.

- No teleoperation is involved.

- There should be no 10-second rule. That’s not good enough.

- Must find Safe Harbor.

- Allow the human to take over by choice. The AI only forces an override when danger (e.g., a crash) is close.

L0: Starting Point. L1, L2, L3: A1: Human-Centred. L4, L5: A2: Full Autonomy.
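The mapping above is small enough to write down directly. The sketch below is only an illustration: the `Responsibility` enum and the `classify_sae_level` function are hypothetical names, not part of SAE J3016 or the lecture.

```python
from enum import Enum


class Responsibility(Enum):
    """Who is responsible for the vehicle's behaviour (names are illustrative)."""
    STARTING_POINT = "L0: human drives, may engage the system"
    HUMAN_CENTRED = "A1: human is responsible"
    FULL_AUTONOMY = "A2: AI is fully responsible"


def classify_sae_level(level: int) -> Responsibility:
    """Map an SAE level (0-5) to the two-type taxonomy used in these notes."""
    if not 0 <= level <= 5:
        raise ValueError(f"SAE levels run from 0 to 5, got {level}")
    if level == 0:
        return Responsibility.STARTING_POINT
    if level <= 3:
        return Responsibility.HUMAN_CENTRED
    return Responsibility.FULL_AUTONOMY


if __name__ == "__main__":
    for lvl in range(6):
        print(f"L{lvl} -> {classify_sae_level(lvl).value}")
```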

Human-Centred Approach: Criticism: When humans are given the system, they will ‘overtrust’ it. The better the system becomes, the less attention humans will pay.

Public Perception of What happens inside an Autonomous vehicle:

Engineer’s perception:

- A large number of vehicles on road are equipped with Autopilots which equals data, a lot of data.

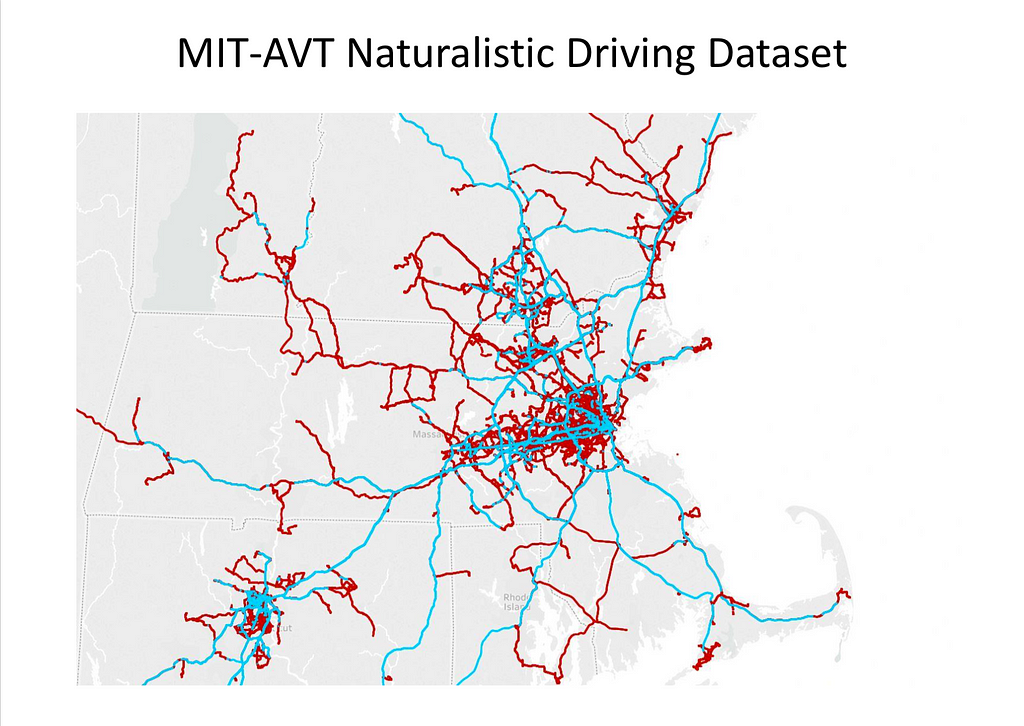

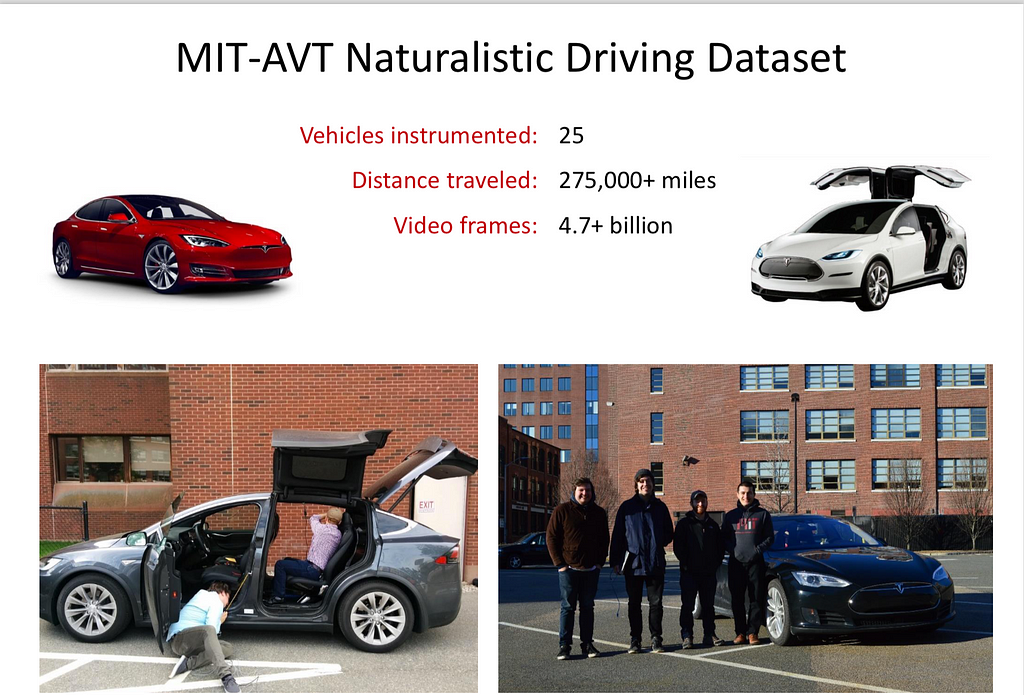

MIT-AVT Naturalistic Driving Dataset

- The dataset includes Video from 3 HD Cameras

- All data from GPS, IMU

- Data from CAN Bus

- Data from inside the vehicle: conversations, level of attention, drowsiness, emotional state, body pose, activity, etc.

- 5B+ frames, actively annotated.

- Data from Highway trips:

- Utilize the data to understand human behaviour inside.

- Utilize the data to train perception and control.

- GPS Map: Red-Human driven miles. Blue-Autonomous miles.

- 33% miles driven autonomously! (Using Autopilot)

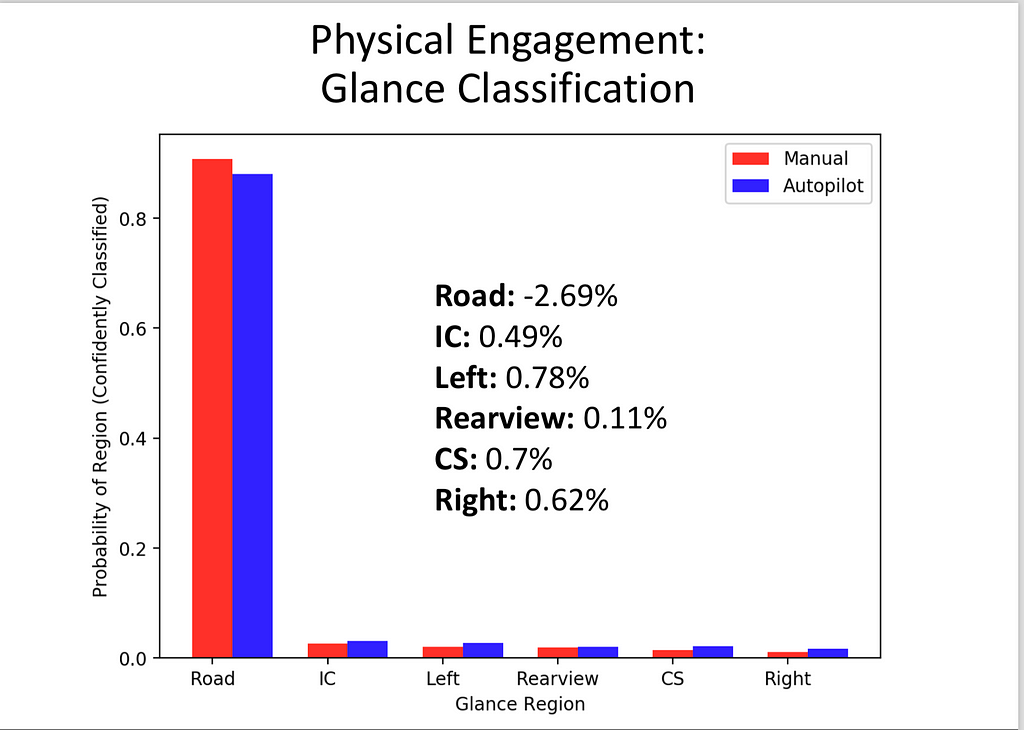

- Glance classification: Division of human attention when the AutoPilot is engaged Vs Manual Driving. Promise: Human Centred approach, the system is not overtrusted.

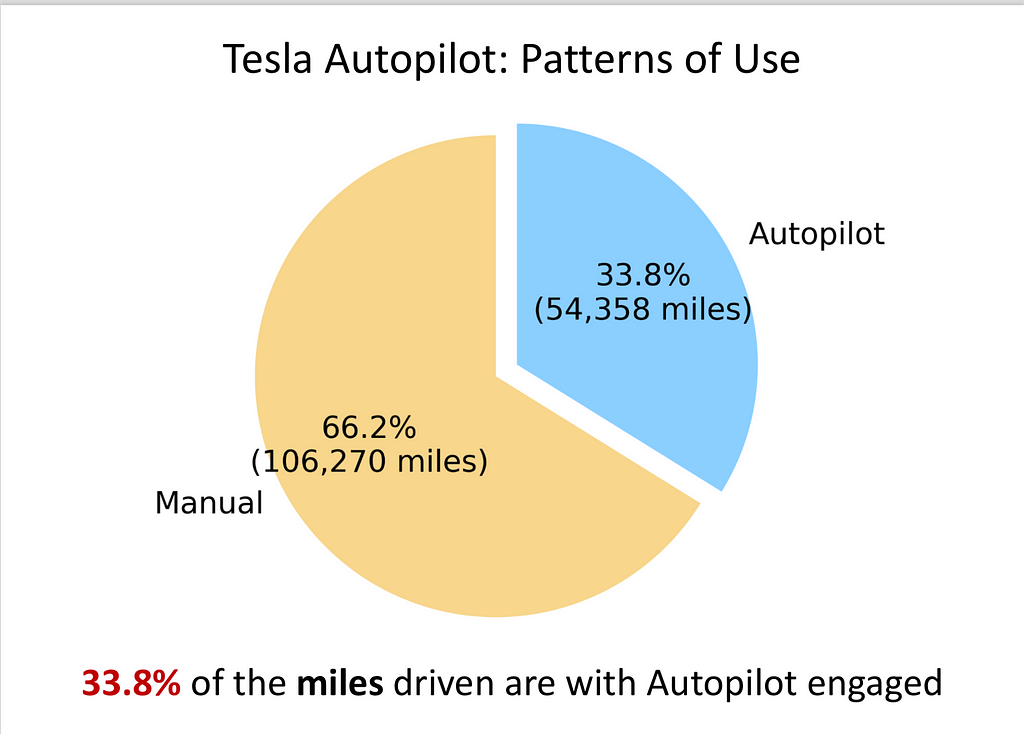

Tesla Auto-Pilot:

- Usage: Intensive (A high % of miles/hours)

- Road Type: Mostly Highways.

- Mental Engagement: 8,000 transfers of control when the Human was not comfortable. Takeaway: There is a risk.

- Physical Engagement: Attention in both cases remains the same.

- Takeaway:

- To trust the system, let the system reveal its flaws. Know where it works and where it doesn’t.

- Test the system to its limits in challenging environments.

Self Driving Cars: Robotics View.

- Wide Reaching: Number of Vehicles is huge.

- Profound: Transfer of Control to AI.

- Personal: Building a relationship, where the Human-Robot interaction is the key problem.

- The system should be more of a ‘Personal Robot’ Vs a ‘Perception-Control’ System.

- Flaws of Humans and machines must be transparent and communicated between the two.

- Takeaway: Enable the system in 90% of cases. By revealing the flaws, we allow room for humans to take control when needed.

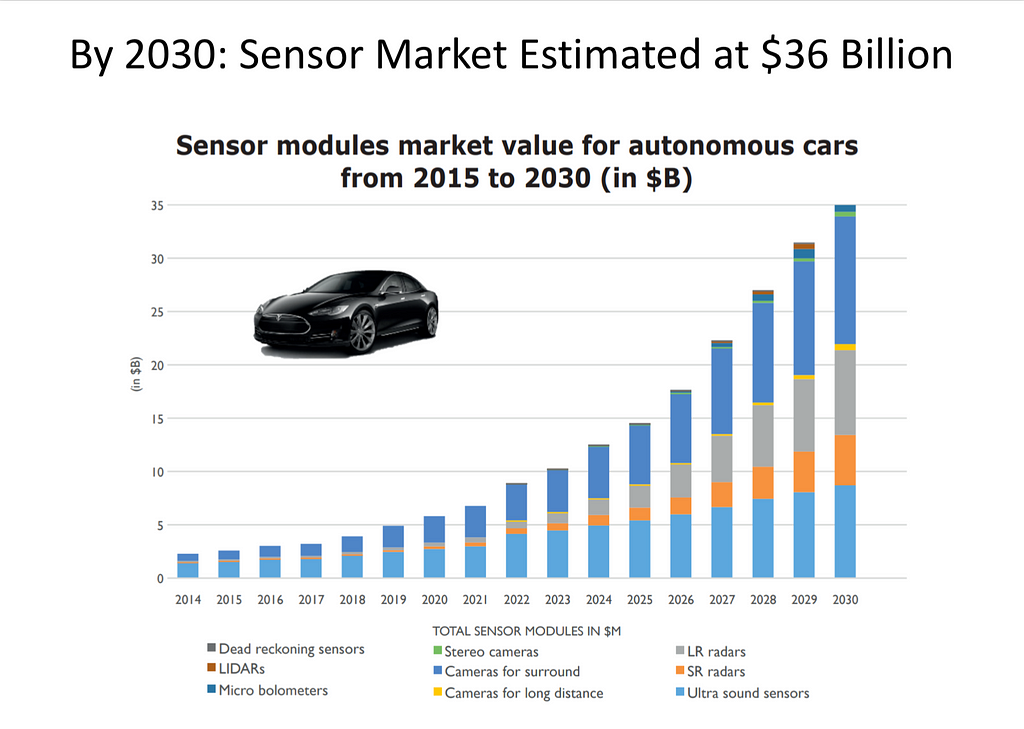

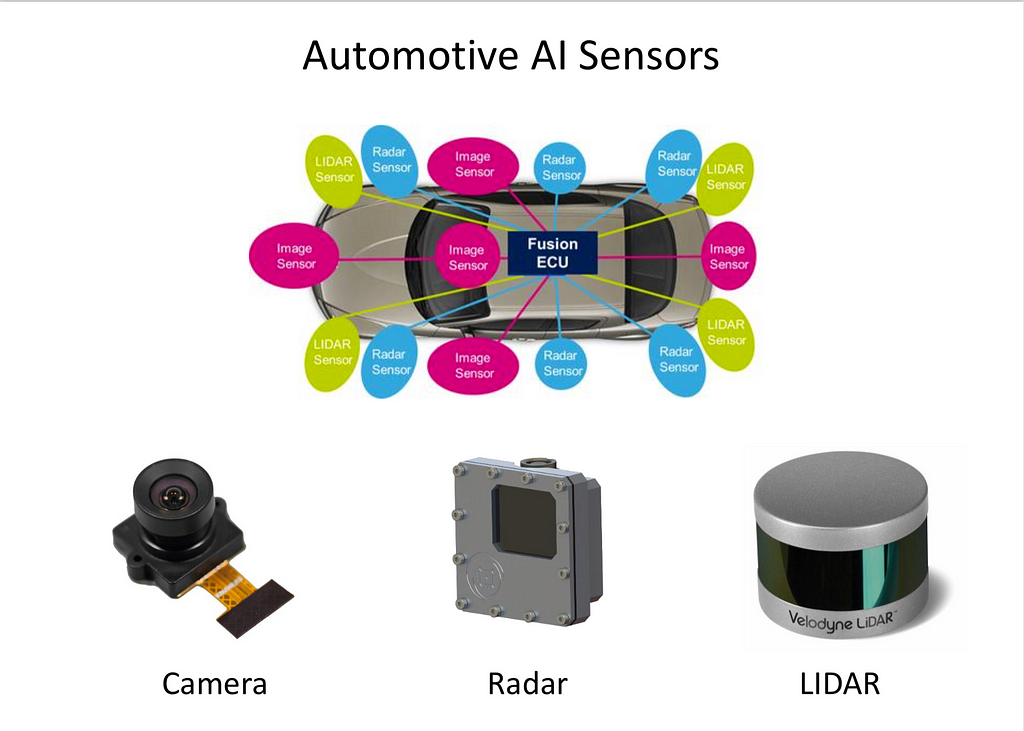

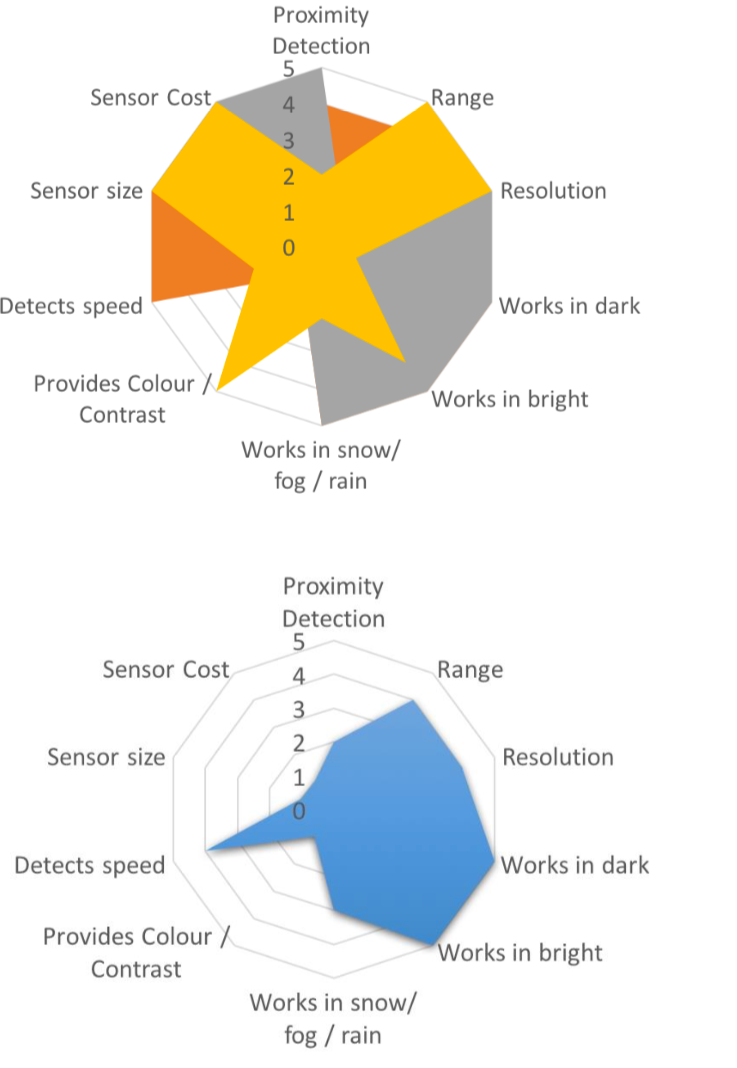

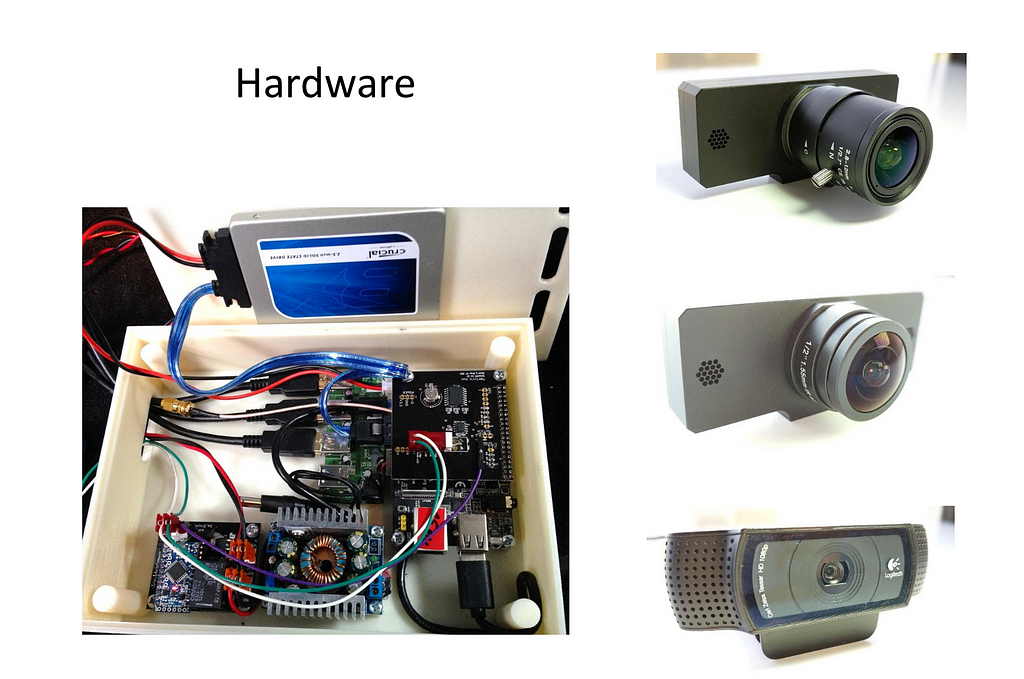

Sensors

Source of Raw Data that can be processed.

- Camera: Visual Systems-RGB, Infra-red.

- RADAR and Ultrasonic.

- LIDAR

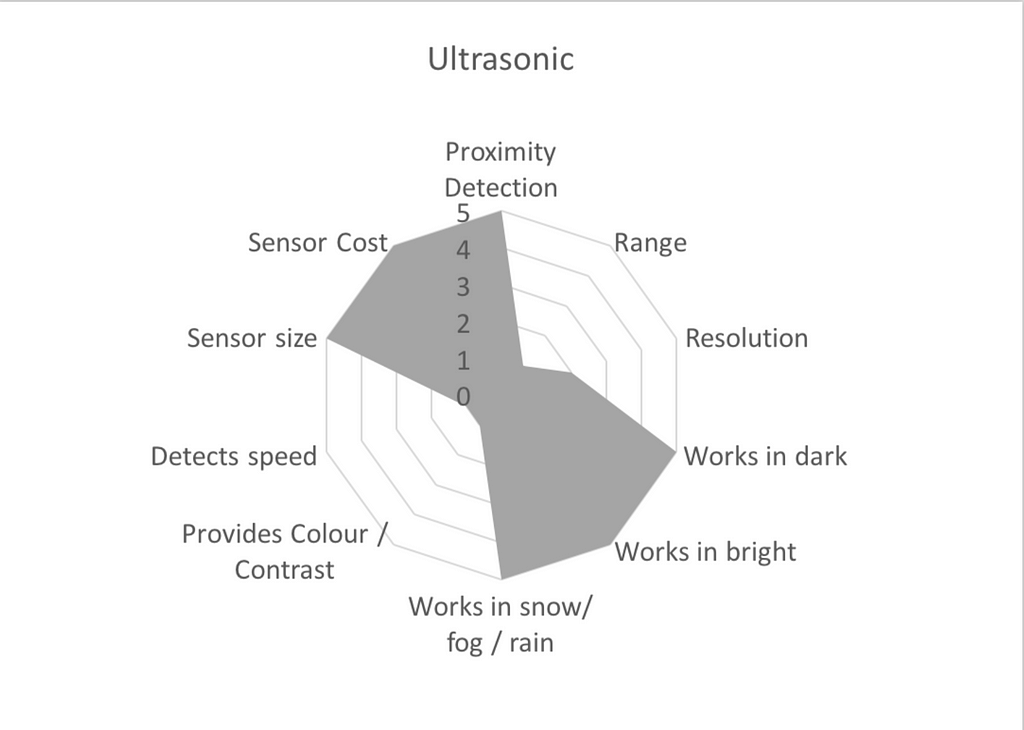

Ultrasonic:

- Works well in proximity.

- Cheap.

- Sensor size can be tiny.

- Works in bad weather, visibility.

- Range is very short.

- Terrible resolution.

- Cannot Detect speed.

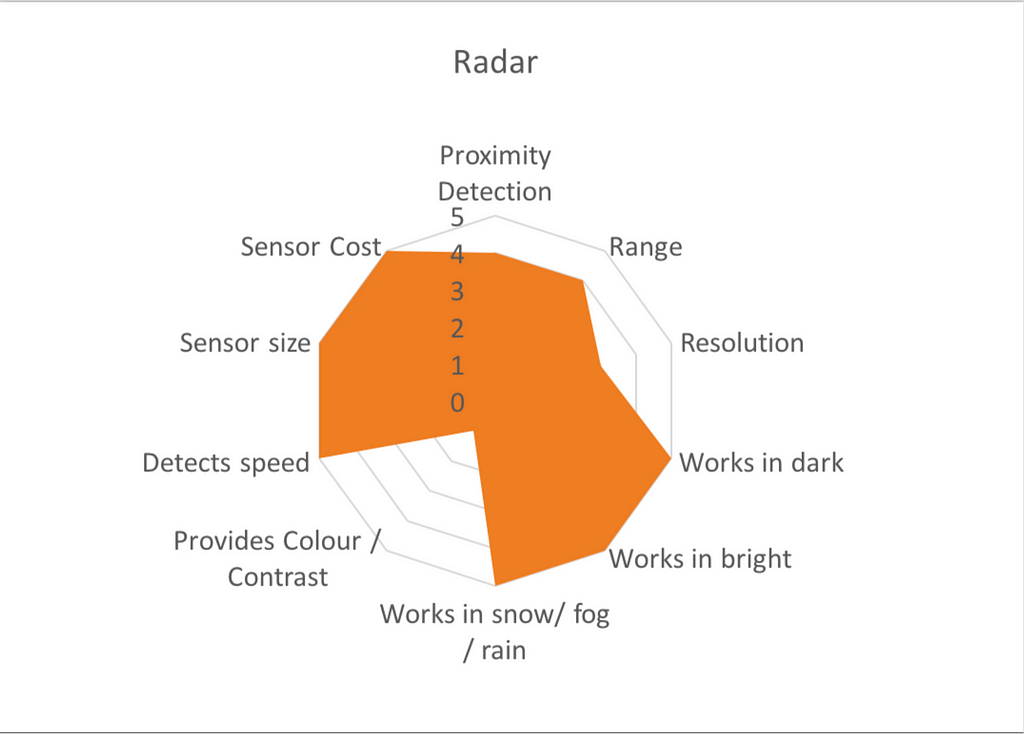

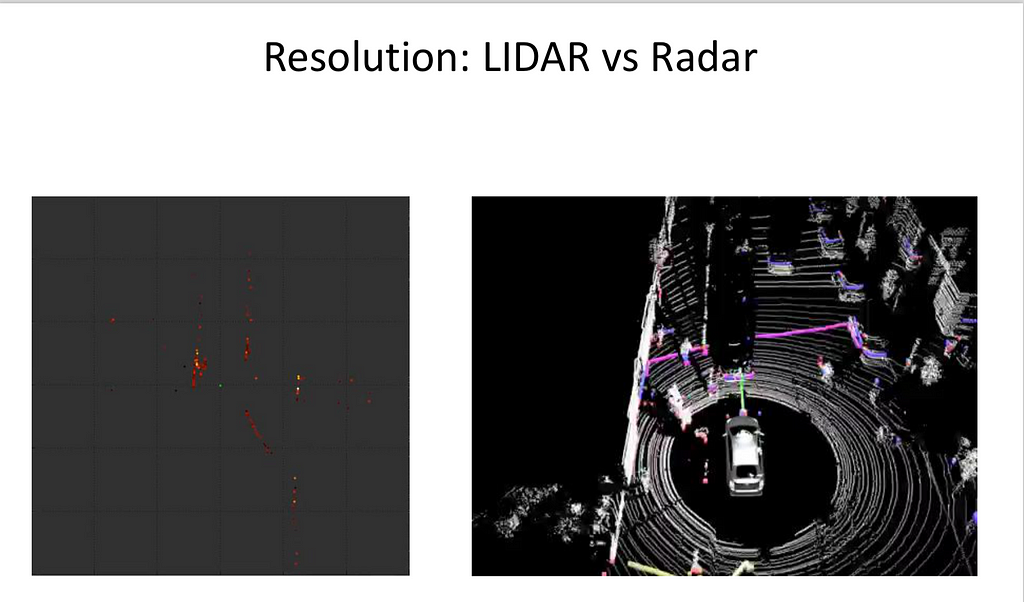

RADAR:

- Commonly available in vehicles with some degree of autonomy.

- Cheap-Both Electronic and Ultrasonic variants are cheap.

- Performs well in challenging weather.

- Low resolution.

- Most reliable today and Widely used.

- RADAR:

- All the plus points of ultrasonics along with ability to detect speeds.

- Low Resolution.

- No Texture, color Resolution.

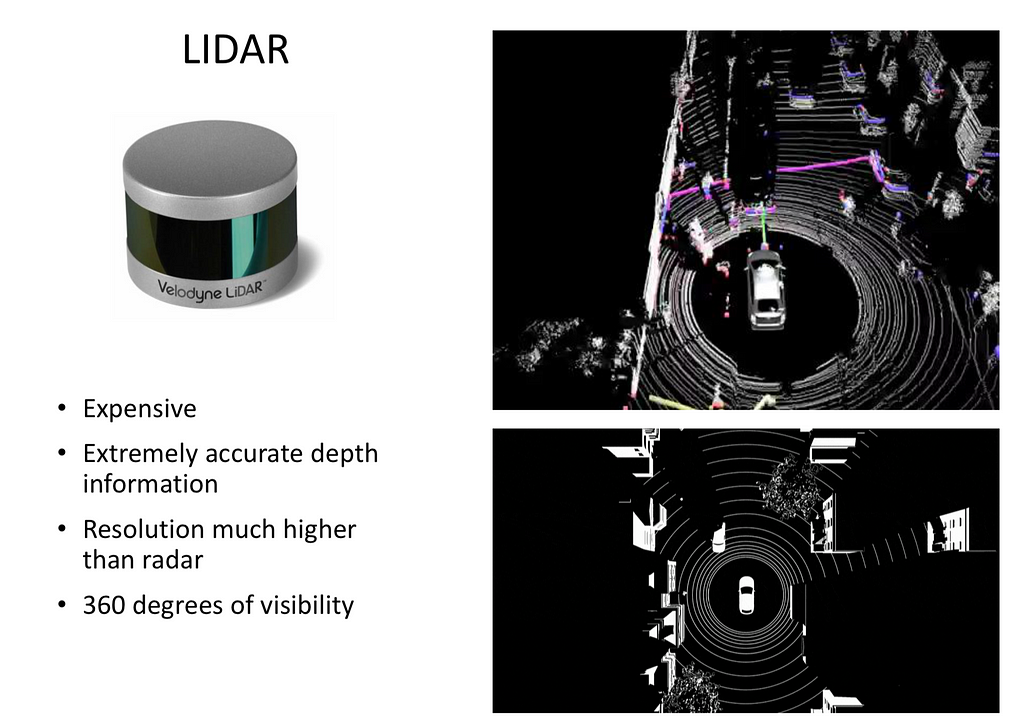

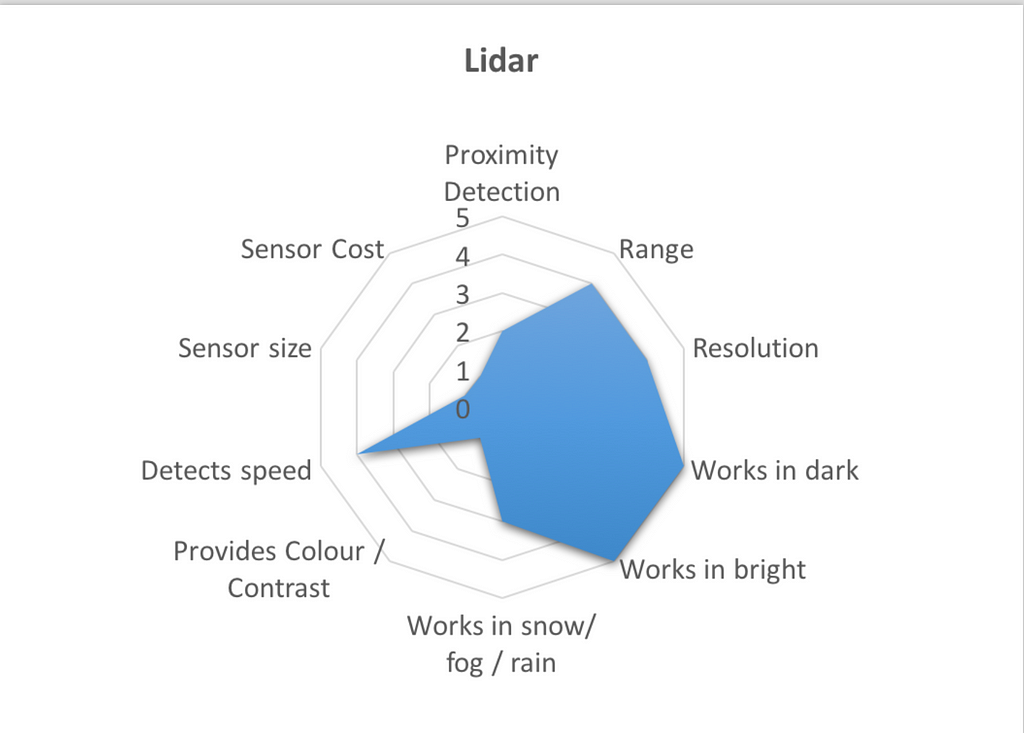

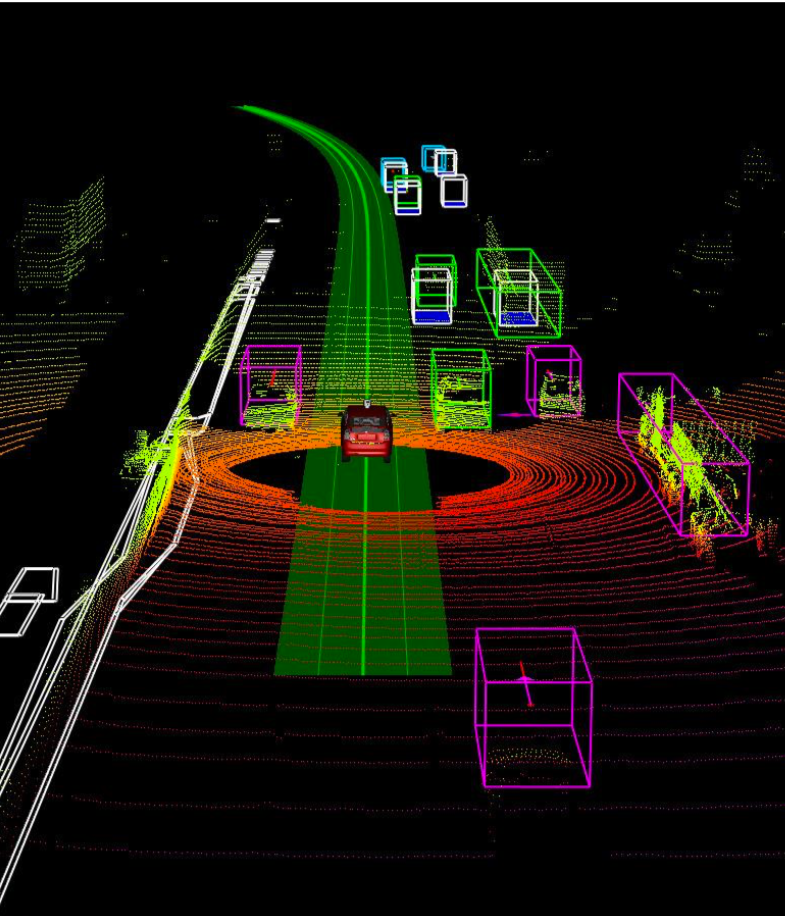

LIDAR:

- Expensive.

- Extremely accurate depth information, with very high resolution.

- 360 Degrees of visibility.

- Reliable with much higher density of data.

- LIDAR Plot: The greater the radius of the blue region, the better the performance.

- Range is good, not great.

- Works in dark and bright lighting conditions.

- Not effective in Bad weather conditions.

- No information about color, texture.

- Able to detect speed.

- Huge Sensor Size.

- Expensive.

- Not effective in Ultrasonics.

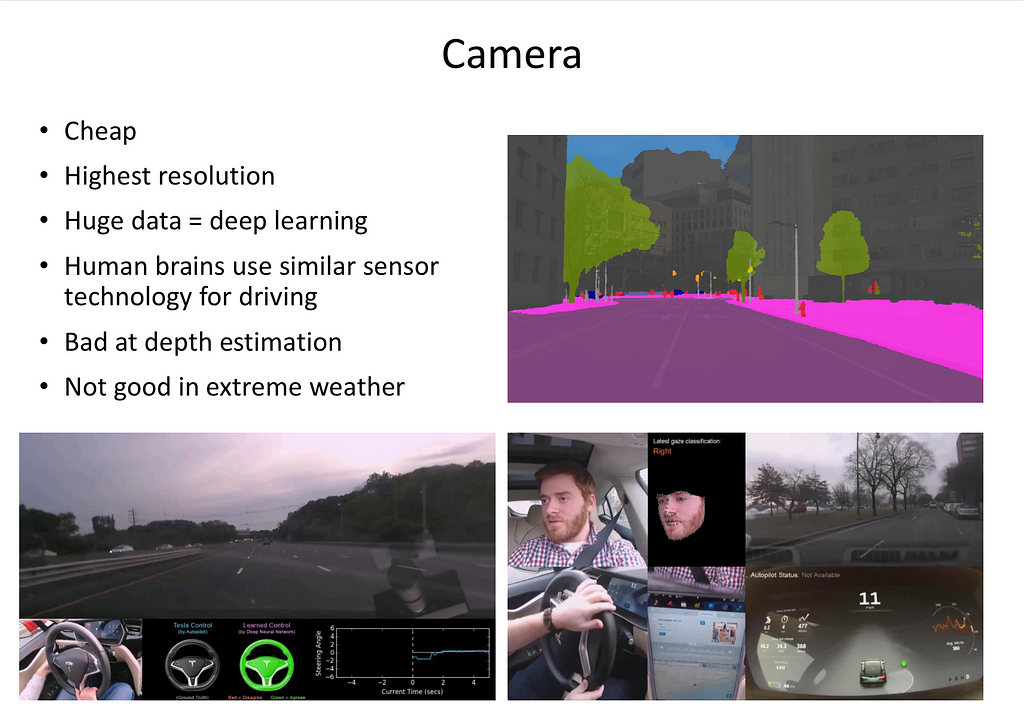

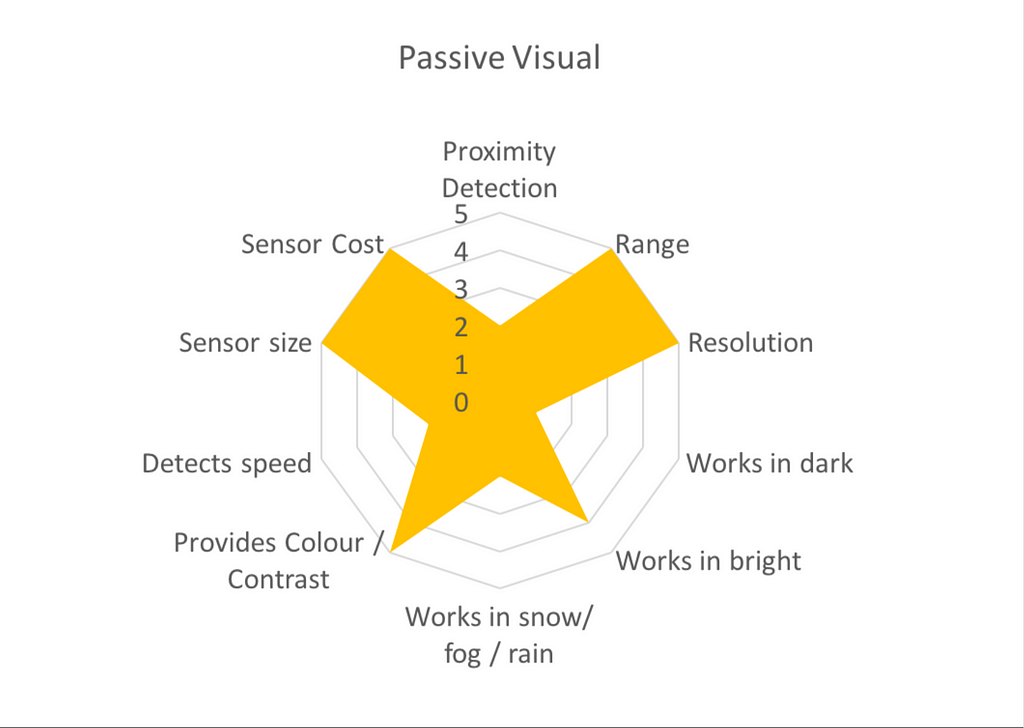

Camera:

- Cheap.

- Highest Resolution: Highest Density of Information- Information can be learned and inferred.

- Many orders of magnitude more data is available compared to other sensors.

- Humans work crudely in a similar manner.

- Downside: Bad at Depth estimation, Not Reliable in extreme weather.

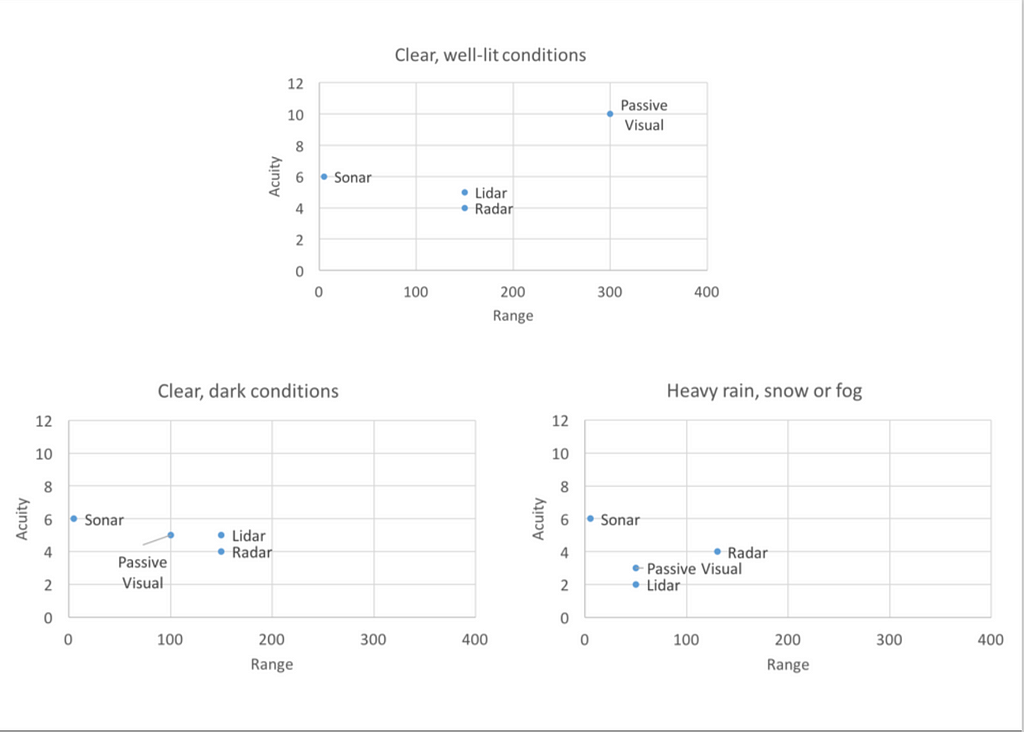

- Plot of Range Vs Acuity. Condition: Clear, well-lit conditions.

- Cameras have the highest Range.

- Ultrasonics have high resolution, but have very small range.

- Condition 2: Clear, Dark Condition Vs Condition 3: Heavy Rain or Fog or Snow:

- Visual not reliable in both.

- RADAR remains unchanged.

- LIDAR works well at night, but not in bad weather conditions.

- Camera:

- Cheap.

- Small sensor size.

- Bad Performance in proximity.

- Range is highest.

- Works well in bright lighting, sensitive to lighting conditions (not always).

- Does not work in dark conditions.

- Not effective in bad visibility (Bad Weather).

- Provides Rich Textural Information (Required for Deep learning).

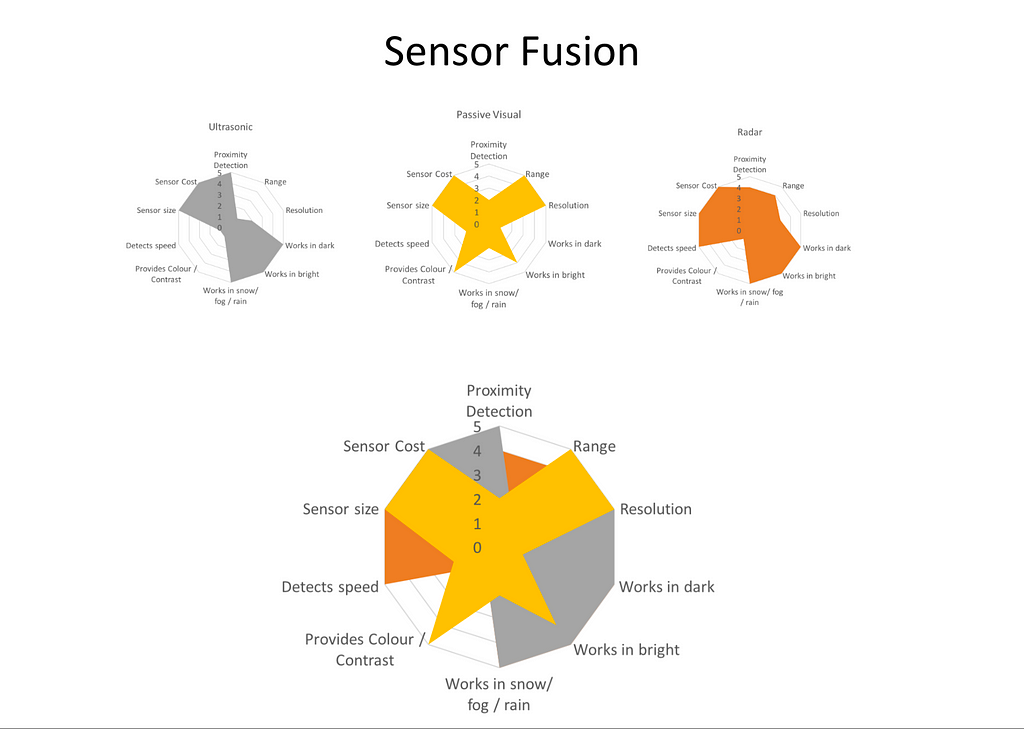

Sensor Fusion:

Cheap Sensors: Ultrasonics + Cameras + RADAR.
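The notes do not say how the cheap sensors are fused. One common textbook option, assumed here purely for illustration, is inverse-variance weighting: each sensor's distance estimate is weighted by how much it is trusted. The function name, the readings and the variances below are made up.

```python
def fuse_estimates(readings):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns (fused_value, fused_variance). Sensors with a smaller variance
    (more trusted) pull the fused value closer to their own reading.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(variance <= 0 for _, variance in readings):
        raise ValueError("variances must be positive")

    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


if __name__ == "__main__":
    # Hypothetical distance-to-obstacle readings in metres: (value, variance).
    readings = [
        (10.0, 4.0),   # ultrasonic: assumed noisy at this distance
        (12.0, 1.0),   # camera: assumed moderate depth accuracy
        (11.0, 0.25),  # radar: assumed most reliable here
    ]
    value, variance = fuse_estimates(readings)
    print(f"fused distance: {value:.2f} m, variance: {variance:.3f}")
```

The fused variance is never larger than the smallest input variance, which is the usual argument for combining several cheap sensors instead of relying on one.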

Future of Sensors

Cameras Vs LIDAR

- LIDAR.

- Fused Cheap Sensors: Annotated data is growing. DL algorithms are improving.

- Both Perform equally well. Challenge for LIDAR: Cost, Range, Size.

Companies

Waymo

- April 2017: Ended testing, Allowed First public rider.

- November 2017: 4M+ Miles driven ‘autonomously’. (Note: Definition of autonomy is debatable)

- December 2017: No Safety Driver.

Uber

- December 2017: 2M+ Miles Driven ‘autonomously’

Tesla

- September 2014: Released Autopilot.

- October 2016: Started Autopilot 2 from scratch.

- Jan 2018: ~1B Miles driven in Autopilot.

- Jan 2018: ~300k Vehicles equipped with Autopilot.

Audi A8 System (To be released end of 2018):

- Promises ‘L3’.

- Thorsten Leonhardt defines L3 as: Functions as intended in traffic jams, at speeds under 60 km/h.

- Car will be liable legally in case of crashes (under Autonomous mode).

Notable Mentions.

Opportunities for AI and Deep Learning.

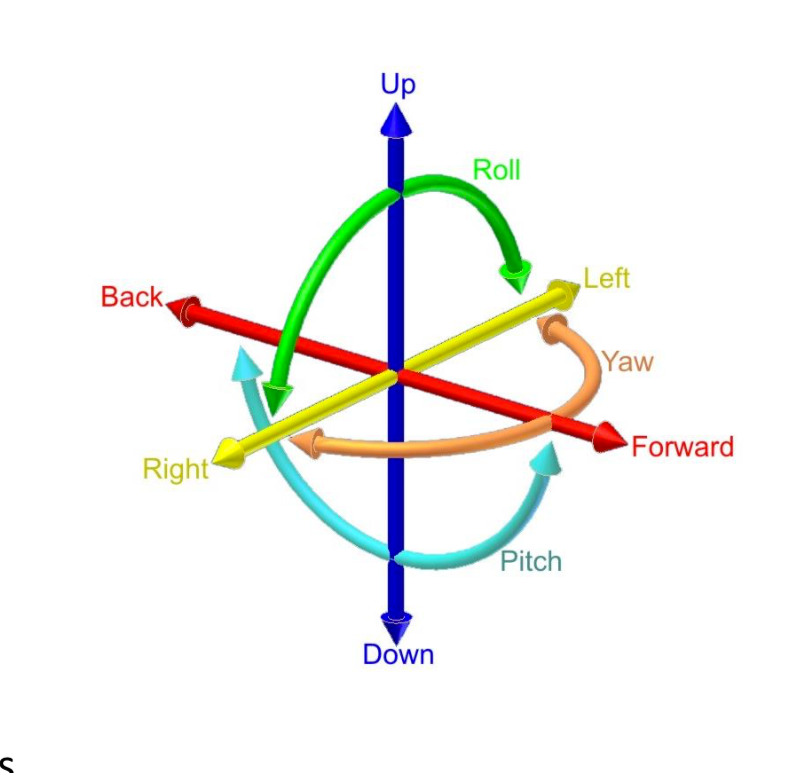

Localisation and Mapping:

Being able to localise itself in space.

- Uses camera sensors to understand the environment and localise the vehicle.

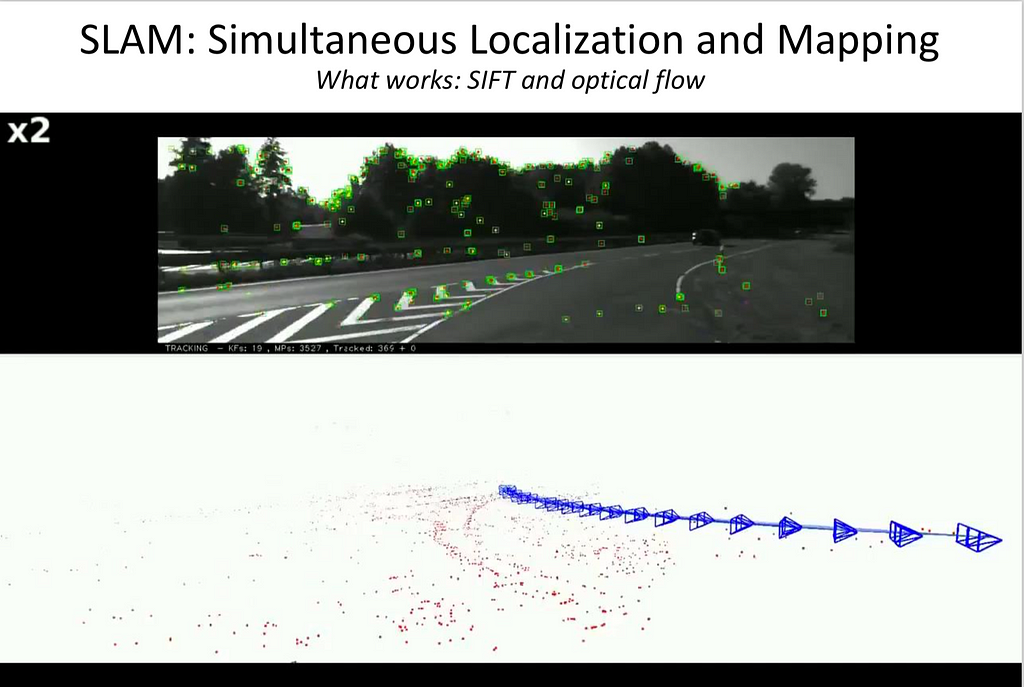

- SLAM: Simultaneous Localization and Mapping.

- Detect features in the scene and track them over time (frame to frame) to estimate the location and orientation of the camera.

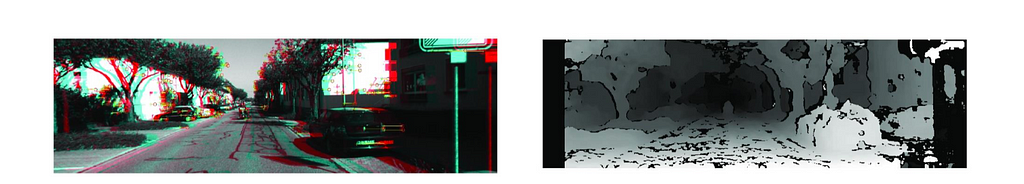

Traditional Approach:

- Getting the Sensor Feed

- (Stereo) Undistortion, Rectification

- (Stereo) Disparity Map Computation

- Feature Detection (e.g., SIFT, FAST)

- Feature Tracking (e.g., KLT: Kanade-Lucas-Tomasi)

- Trajectory Estimation

- Use rigid parts of the scene (requires outlier/inlier detection)

- For mono, more information is needed, such as camera orientation and height off the ground.
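One step of this pipeline that reduces to a single formula is turning a stereo disparity into depth. For a rectified stereo pair, depth is focal length times baseline divided by disparity. The following is a minimal sketch assuming a pinhole camera model; the function name and the camera numbers are illustrative and not taken from the lecture.

```python
import math


def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair.

    disparity_px    : horizontal pixel shift of the point between left and right image
    focal_length_px : focal length expressed in pixels
    baseline_m      : distance between the two camera centres in metres
    """
    if disparity_px < 0:
        raise ValueError("disparity must be non-negative for a rectified pair")
    if disparity_px == 0:
        # Zero disparity corresponds to a point infinitely far away.
        return math.inf
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed camera: 700 px focal length, 0.5 m baseline.
    for disparity in (70.0, 35.0, 7.0):
        depth = depth_from_disparity(disparity, focal_length_px=700.0, baseline_m=0.5)
        print(f"disparity {disparity:5.1f} px -> depth {depth:6.1f} m")
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant points than for near ones, which is consistent with the notes listing cameras as weak at depth estimation.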

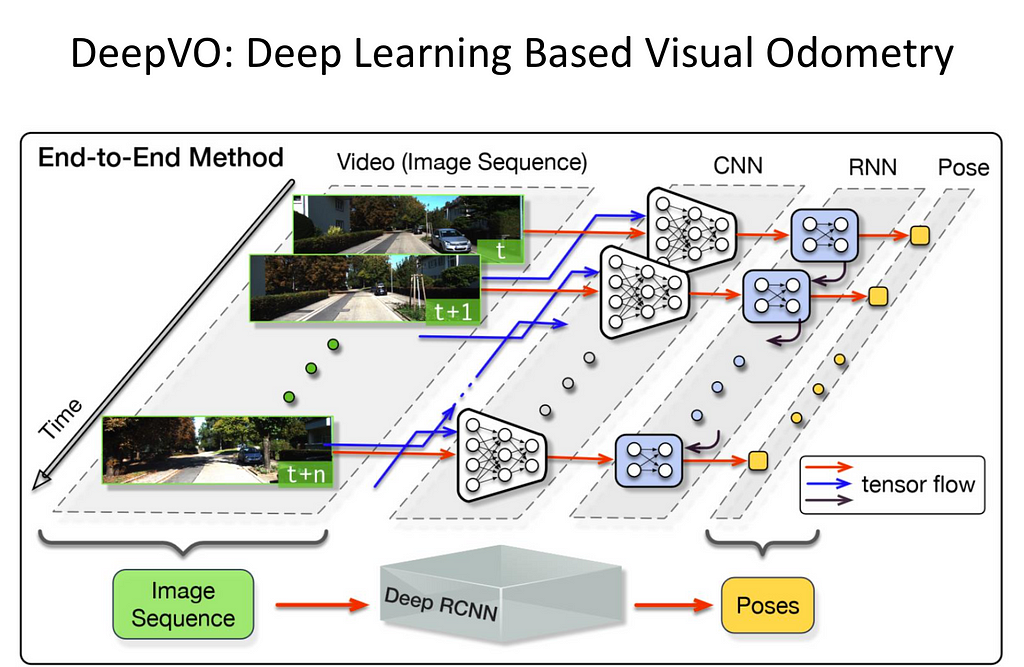

Deep learning approach — End to End Method:

- Extract features from Video, using CNNs.

- Use RNNs to track trajectory over time.

- Estimate Pose.

- Pro: It gets better with Data.

- Pro: It is ‘trainable’.
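Whatever model produces the per-frame motion estimate, the relative poses still have to be chained into a trajectory. The sketch below shows only that last step in 2D, assuming each estimate is a `(dx, dy, dtheta)` motion expressed in the vehicle's own frame. It does not implement the CNN or the RNN, and all names are hypothetical.

```python
import math


def compose(pose, delta):
    """Apply a relative motion (vehicle frame) to a global 2D pose (x, y, heading)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    # Rotate the local displacement into the global frame, then translate.
    new_x = x + dx * math.cos(theta) - dy * math.sin(theta)
    new_y = y + dx * math.sin(theta) + dy * math.cos(theta)
    # Keep the heading wrapped to [-pi, pi).
    new_theta = (theta + dtheta + math.pi) % (2 * math.pi) - math.pi
    return (new_x, new_y, new_theta)


def integrate_trajectory(deltas, start=(0.0, 0.0, 0.0)):
    """Chain per-frame relative motions into a list of global poses."""
    poses = [start]
    for delta in deltas:
        poses.append(compose(poses[-1], delta))
    return poses


if __name__ == "__main__":
    # Drive 1 m forward and turn left 90 degrees, four times: a closed square.
    square = [(1.0, 0.0, math.pi / 2)] * 4
    for x, y, theta in integrate_trajectory(square):
        print(f"x={x:5.2f}  y={y:5.2f}  heading={math.degrees(theta):7.1f} deg")
```

Because every pose is built on the previous one, small per-frame errors accumulate as drift, which is one reason a learned estimator that improves with data is attractive.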

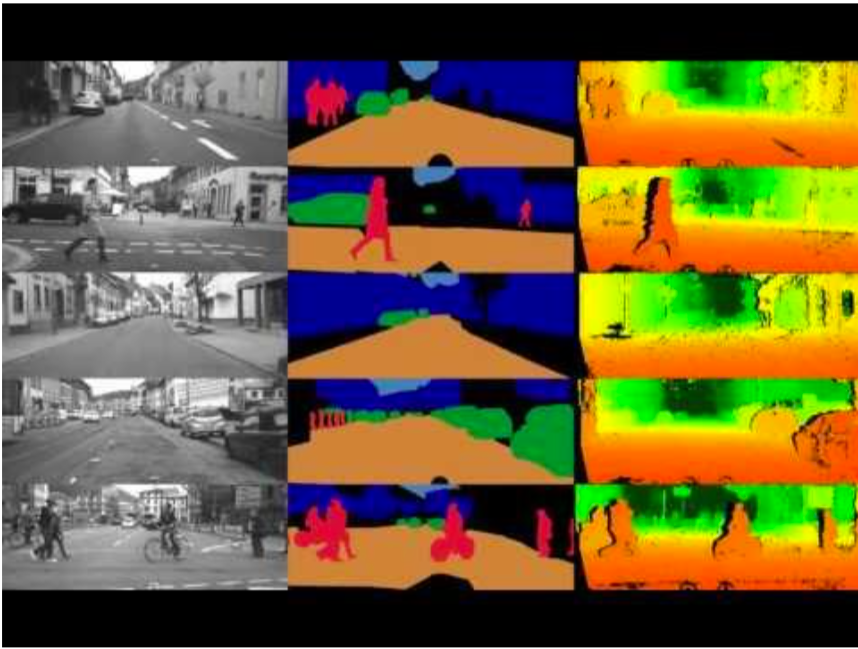

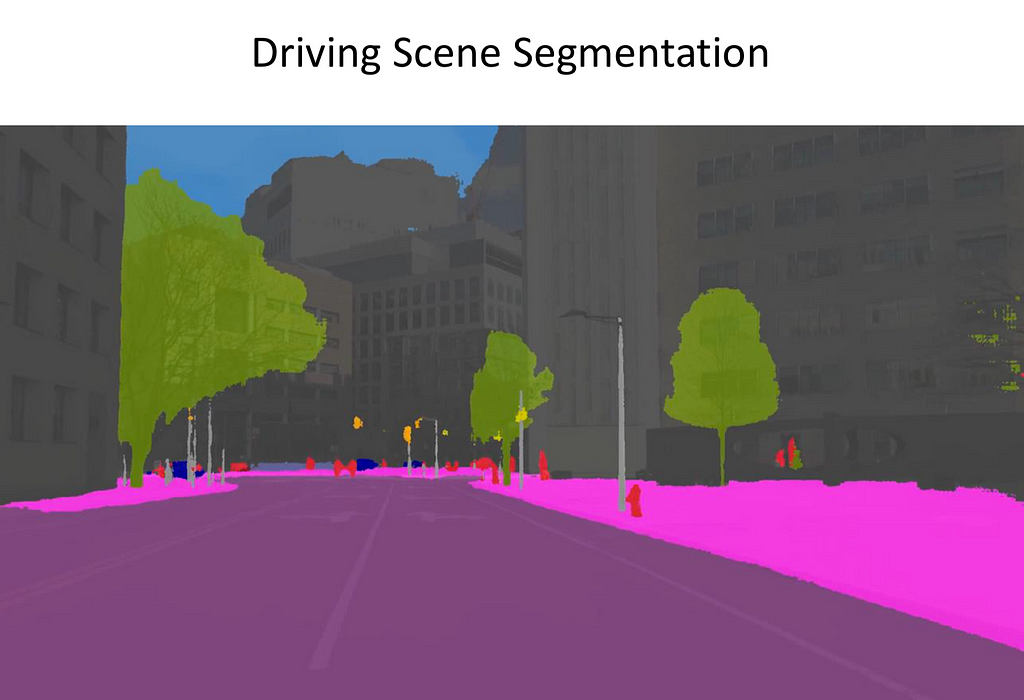

Scene Understanding

- Understanding the environment using Cameras.

- Using cameras as the primary sensors to drive.

- Object Detection: Traditional- HAAR Cascades.

- DL dominates the field with more accuracy for recognition, classification and detection.

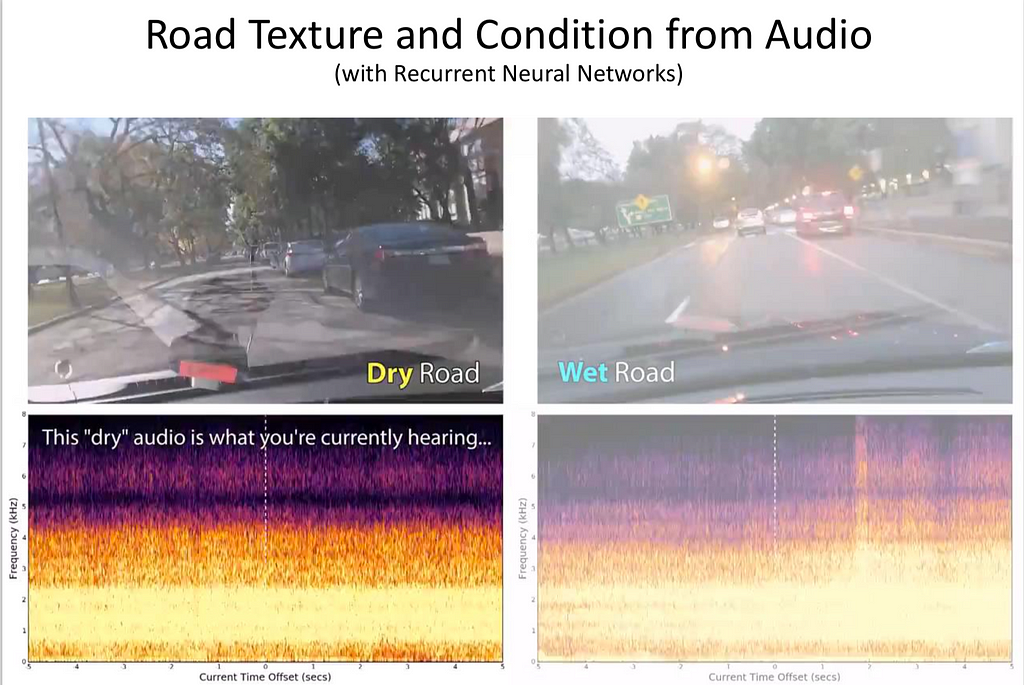

- Road Texture and Condition from Audio: Using RNNs. Pro: Improve traction controls in vehicles.

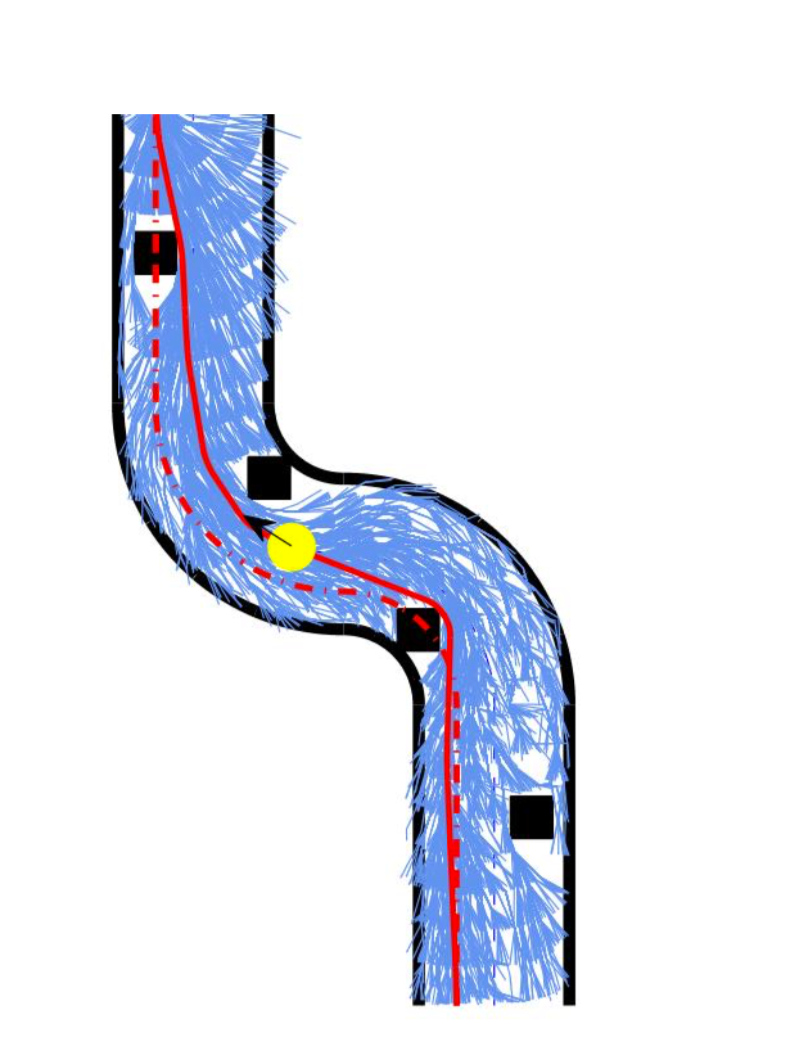

Movement Planning

After understanding the scene, How to get from A to B?

- Traditional: Optimization-based control: Determine the optimal control by formalising the problem in a form amenable to optimization-based approaches.

- Deep RL approach.
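As a toy illustration of formalising "A to B" as an optimisation problem, the sketch below computes the minimum number of steps to a goal on a small grid by repeated Bellman updates (value iteration) and then follows the decreasing cost. This is a stand-in chosen for brevity, not the controller or the deep RL agent from the lecture; the grid and all names are invented.

```python
import math

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

GRID = [
    "A..#",
    ".#..",
    ".#.#",
    "...B",
]  # 'A' start, 'B' goal, '#' obstacle, '.' free road


def parse_grid(grid):
    """Return (set of free cells, start cell, goal cell)."""
    free, start, goal = set(), None, None
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == "#":
                continue
            free.add((r, c))
            if ch == "A":
                start = (r, c)
            elif ch == "B":
                goal = (r, c)
    return free, start, goal


def neighbours(cell, free):
    """Free cells reachable from `cell` in one move."""
    r, c = cell
    return [(r + dr, c + dc) for dr, dc in MOVES if (r + dr, c + dc) in free]


def cost_to_go(free, goal):
    """Minimum number of steps from every free cell to the goal."""
    cost = {cell: math.inf for cell in free}
    cost[goal] = 0
    changed = True
    while changed:  # sweep until no cell can be improved
        changed = False
        for cell in free:
            if cell == goal:
                continue
            best = min((cost[n] for n in neighbours(cell, free)), default=math.inf) + 1
            if best < cost[cell]:
                cost[cell] = best
                changed = True
    return cost


def extract_path(cost, start, goal):
    """Follow the steepest decrease in cost-to-go from start to goal."""
    if math.isinf(cost[start]):
        return None  # goal unreachable
    path = [start]
    while path[-1] != goal:
        path.append(min(neighbours(path[-1], cost), key=cost.get))
    return path


if __name__ == "__main__":
    free, start, goal = parse_grid(GRID)
    cost = cost_to_go(free, goal)
    print("steps needed:", cost[start])
    print("path:", extract_path(cost, start, goal))
```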

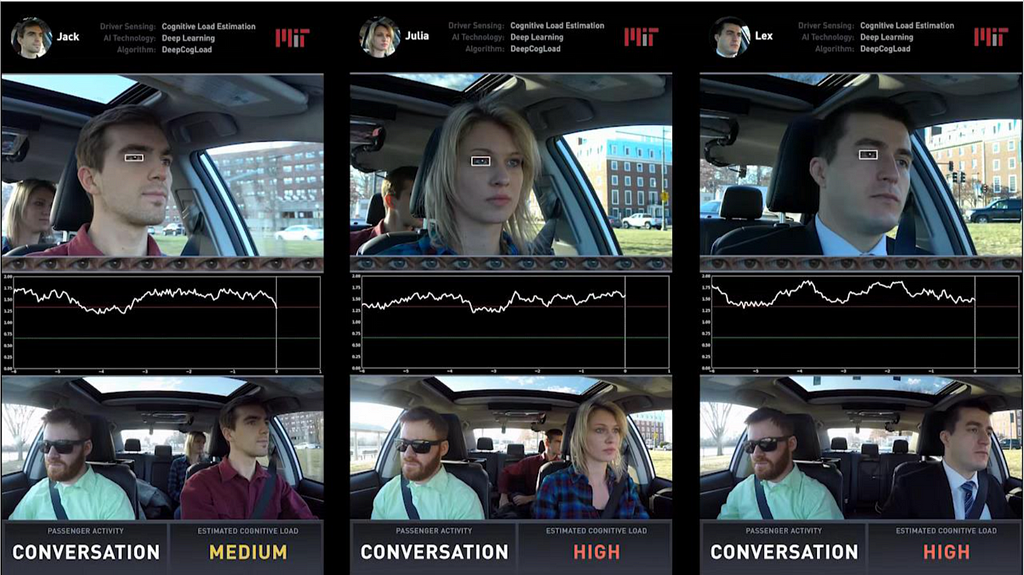

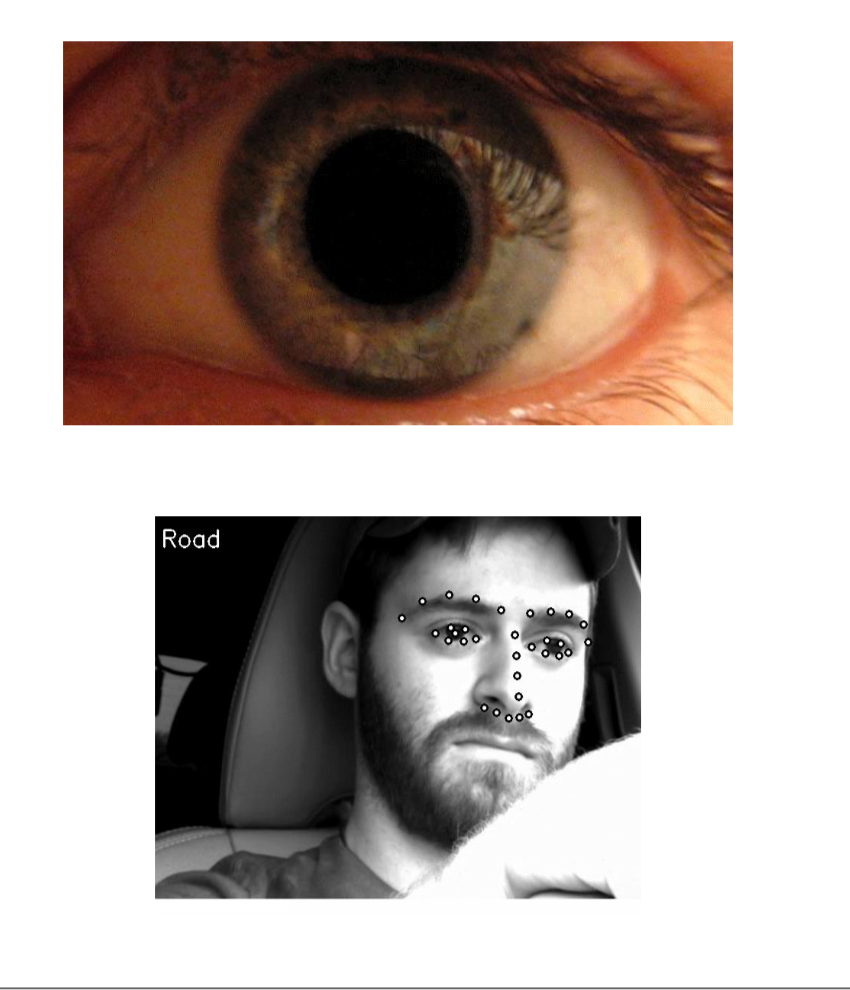

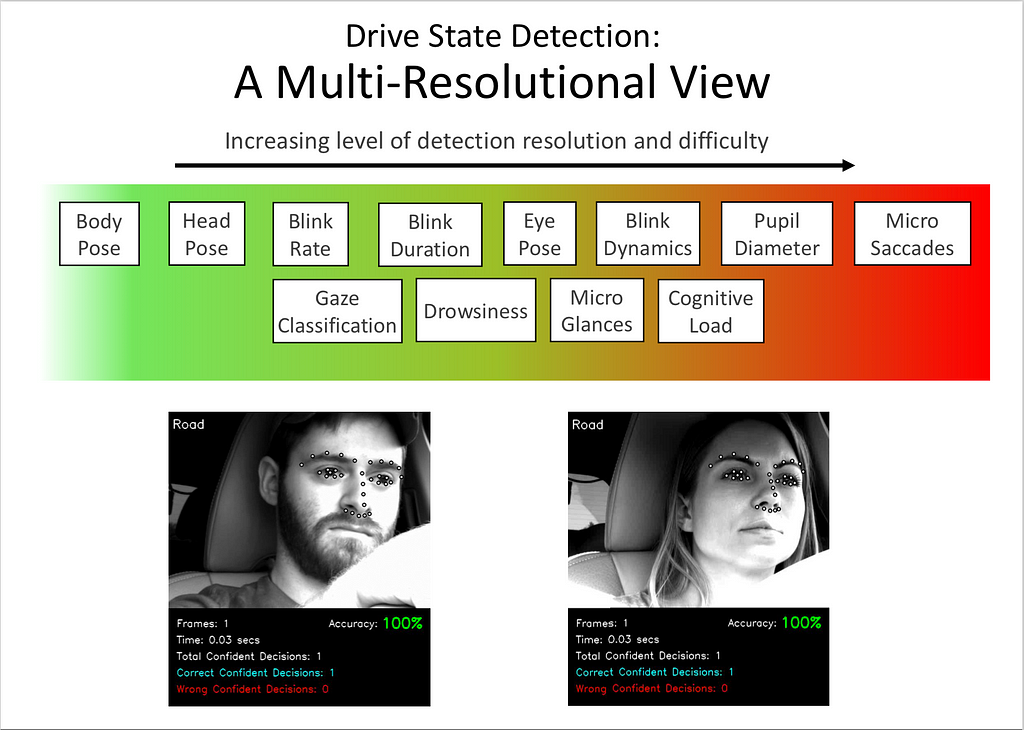

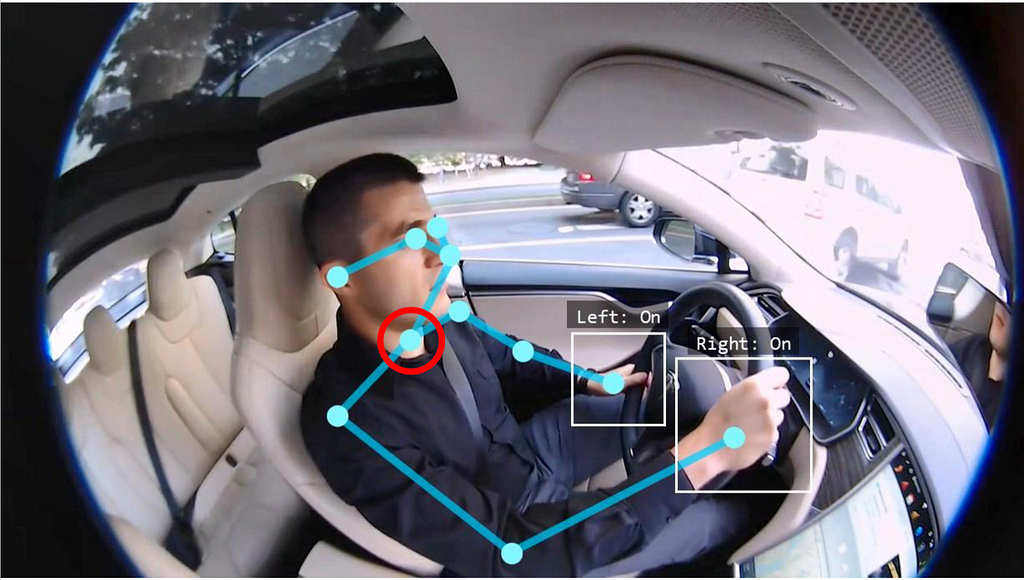

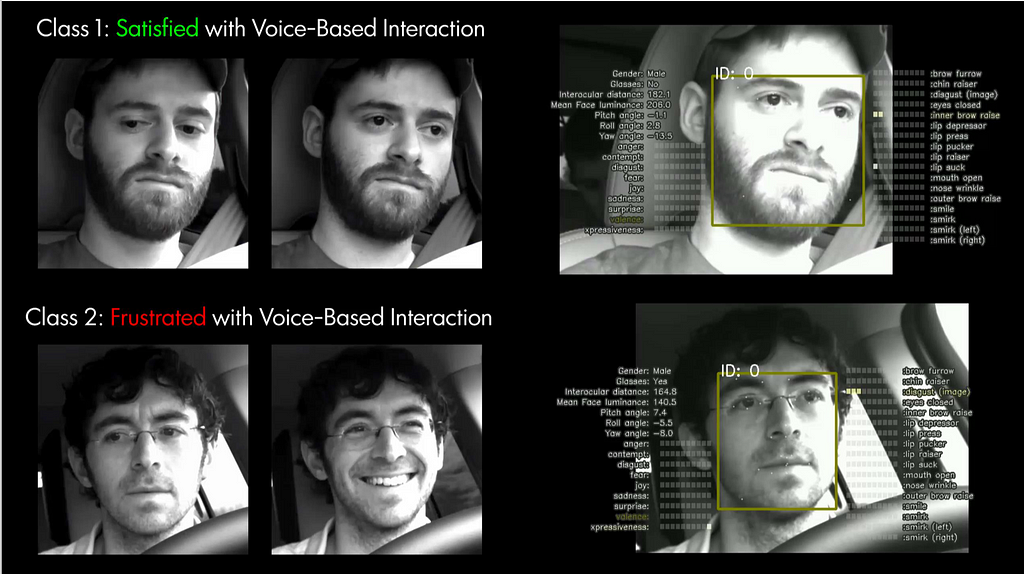

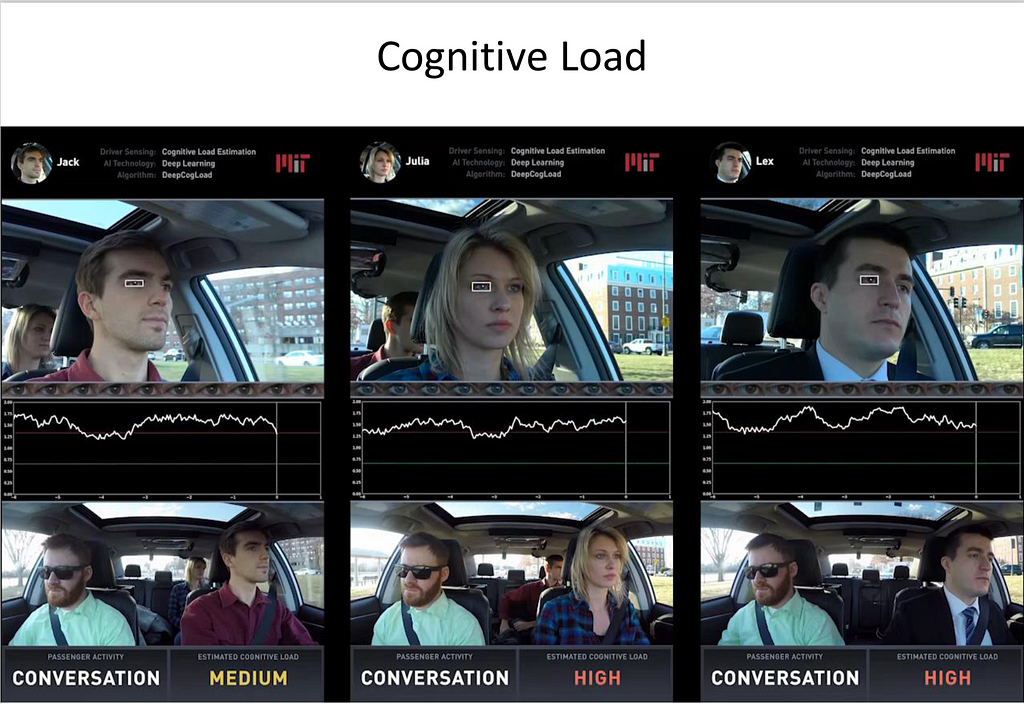

Driver State

- Detecting the Driver State.

- Interacting with the Driver.

- These metrics are important to detect Drowsiness, Emotional State, Detecting Glance (Determining where the attention of the driver is).

- ‘Lizard-Owl Effect’: ‘Lizards’ (the majority) move their eyes more than their heads, whereas ‘Owls’ (a smaller fraction) move their heads more than their eyes.

- Estimating Cognitive Load of the Driver.

- Body Pose Estimation.

- Driver Emotion based on Voice based interactions.

- Cognitive Load: Estimating from the Eye region, blink dynamics to estimate how deep in thought a person is, on a scale of 0–2.

Re: Two Paths to an Autonomous Future:

Argument: A1 systems are more favourable in the current years.

Challenges for A2 Systems:

- Complicated Situations.

- Asserting itself in ‘Busy zones’.

You can find me on Twitter @bhutanisanyam1, connect with me on Linkedin here.

Here and Here are two articles on my Learning Path to Self Driving Cars.

Subscribe to my Newsletter for a Weekly curated list of Deep learning, Computer Vision Articles.

MIT 6.S094: Deep Learning for Self-Driving Cars 2018 Lecture 2 Notes was originally published in Hacker Noon on Medium.
