They made this awesome logo using rice grains and flowers.

This is the story of how I handled the live video stream for IIT Guwahati's 19th convocation, held on 23rd June 2017.

The good folks at the Computer and Communication (CC) Centre asked me to set up a live video streaming server for the convocation, since I had a little experience with live streaming from my work at Campus Broadcasting System IITG.

At Campus Broadcasting System IITG (we call it CBS IITG), we run a campus radio station that streams 24x7 through a website on the local intranet. Since the content is audio only and there are never more than 200 listeners on a normal day, handling the traffic was not a big deal.

Normal Set-up:

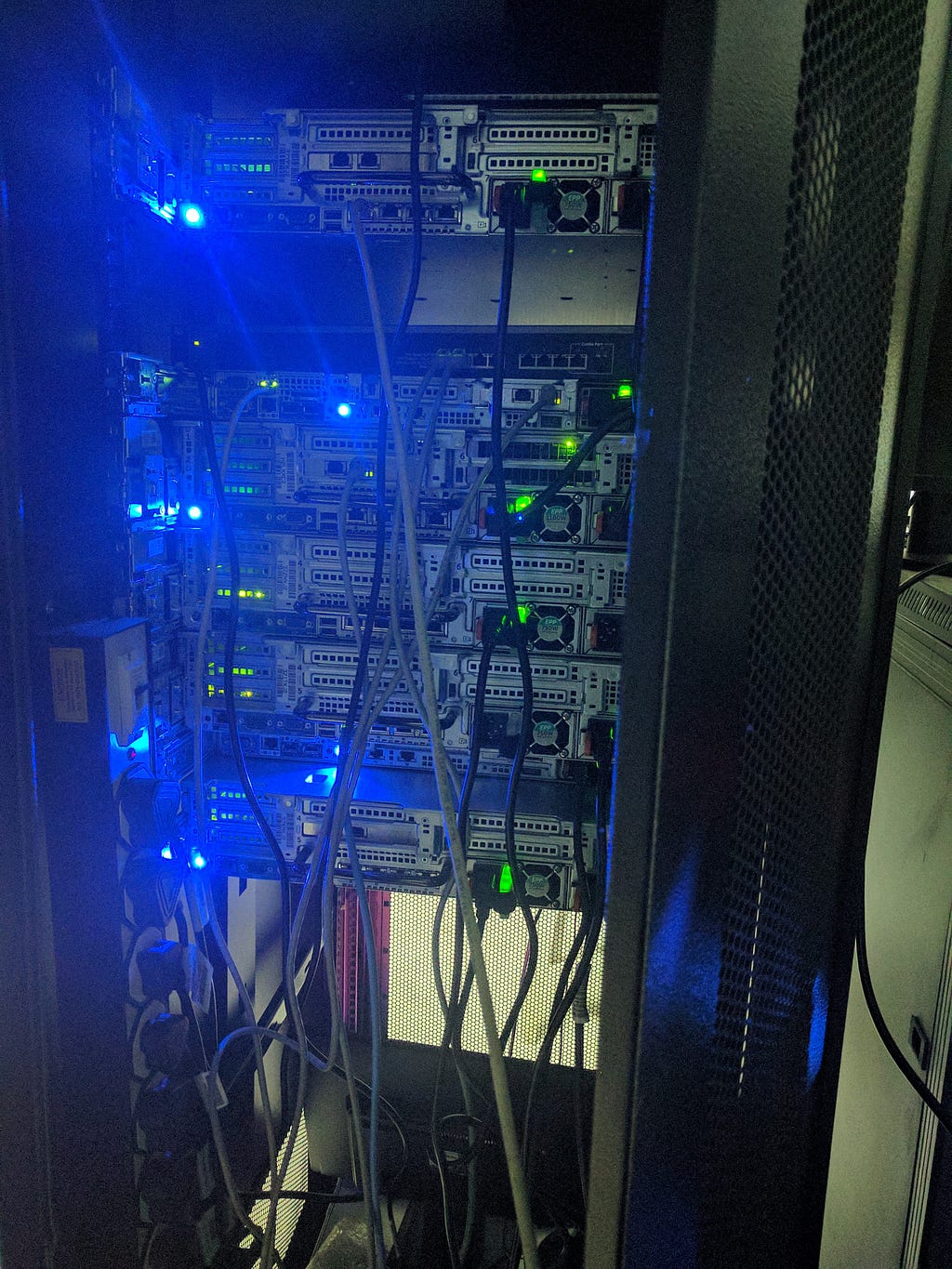

We have a retired server from CC: an octa-core Intel Xeon machine with 32 GB RAM.

model name : Intel(R) Xeon(R) CPU E5410 @ 2.33GHz

I researched live streaming a bit and ended up learning about HTTP Live Streaming (HLS) and MPEG-DASH (DASH). I will do one more post on these later. I used Nginx with the RTMP module written by Roman Arutyunyan, which supports both HLS and DASH for live streaming. I chose HLS at that time.

Now, this is a typical setup that works for a small number of users. Since it is just audio, each connection between a user and the server takes approximately 260 kbps of bandwidth. It is easy for the server to manage the load, and the network does not become the bottleneck. The server has a gigabit Ethernet card, so theoretically it should be able to handle traffic up to 1 Gbps (125 MB/s).

260 * 200 = 52000 kbps

52000 / 1000 = 52 Mbps

52 / 8 = 6.5 MB/s

So even with 200 listeners, it uses only about 5 percent of the available bandwidth.
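This kind of estimate is easy to script. The sketch below uses the figures from the text (260 kbps per listener, 200 listeners, a 1 Gbps link); the function name is only illustrative.

```python
def aggregate_bandwidth(per_user_kbps, users, link_mbps):
    """Return (total Mbps, total MB/s, fraction of the link used)."""
    total_kbps = per_user_kbps * users
    total_mbps = total_kbps / 1000   # kilobits -> megabits
    total_mbytes = total_mbps / 8    # megabits -> megabytes
    utilisation = total_mbps / link_mbps
    return total_mbps, total_mbytes, utilisation


mbps, mbytes, util = aggregate_bandwidth(260, 200, 1000)
print(f"{mbps:.1f} Mbps = {mbytes:.2f} MB/s, {util:.1%} of the link")
```

Changing the per-user rate from audio to a video bitrate shows quickly why the same server setup stops being comfortable.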

I never bothered with optimising it since it was working fine and doing its job.

After thinking about how to implement video streaming for a while, it hit me how difficult scaling would be. Somewhere along the way, while optimising the server for live streaming, I gained a lot of respect for the engineers at YouTube.

Finally, the rehearsal day came (one day before the convocation) and I was ready with my streaming servers: two of them, one for internet users and one for intranet users. I set up the internet server to stream adaptive multi-bitrate video, which means that, just like the auto quality setting on YouTube, the player switches video resolution based on the available bandwidth.

This is helpful because clients don't request the 1080p source all the time, which saves server bandwidth. Thinking about the bottlenecks, I could come up with a few at that time.
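For readers unfamiliar with how adaptive bitrate works in HLS: the player first fetches a master playlist that lists every rendition with its bandwidth, then picks one. The sketch below only illustrates the shape of that file. The four renditions, their bitrates, and the file names are hypothetical (the text only says four bitrates were encoded), and in practice the streaming server writes this playlist itself.

```python
# Hypothetical rendition ladder: (suffix, width, height, bandwidth in bits/s).
VARIANTS = [
    ("low", 640, 360, 800_000),
    ("mid", 854, 480, 1_400_000),
    ("high", 1280, 720, 2_800_000),
    ("src", 1920, 1080, 5_000_000),
]


def master_playlist(stream_name, variants):
    """Build an HLS master playlist that points at one media playlist per rendition."""
    lines = ["#EXTM3U", "#EXT-X-VERSION:3"]
    for suffix, width, height, bandwidth in variants:
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={width}x{height}"
        )
        lines.append(f"{stream_name}_{suffix}.m3u8")
    return "\n".join(lines) + "\n"


print(master_playlist("convocation", VARIANTS))
```

A player on a slow connection would settle on one of the lower entries, which is where the bandwidth saving comes from.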

Bottlenecks:

- Processing power (since the server is encoding at four different bitrates)

- Hard disk read/write speed (in the HLS implementation, the encoded .ts files are written to the hard disk, and when 100 users request different files at once, the disk has to read them all at the same time)

- Bandwidth of the streaming server and the uplink network (since the available network card can only take 1 Gbps)

So this is how I solved these issues:

- Got a new server with a 32-core Intel Xeon processor (powerful enough) and 64 GB RAM. More threads are good since FFmpeg can use them all for encoding.

- Tried configuring the server with RAID 10 to improve read speeds. But on the day of the rehearsal there was a problem and the stream was not smooth at all. After a lot of head-banging, I was on the phone with a close friend of mine (Midhul Varma) discussing the issue, and he spotted something I had completely missed: the GitHub page of the Nginx-RTMP module mentions tmpfs. I quickly read up on it and learned that if I mount my /tmp/ directory on tmpfs, whatever is written to /tmp/* is stored directly in primary memory (RAM). If RAM fills up, it spills over to swap, which was another 64 GB. It sounded awesome. But I didn't get much time to test it, and the convocation was the next day.

- I was using CAT6 Ethernet cables to connect to the switch, which was itself only a gigabit switch, so I requested the CC folks to give me a direct connection to a 10 Gbps switch. I also configured 2 network interfaces. One had an internal IP, used to transfer the RTMP stream from the encoder (OBS Studio) to the server, because I wanted to reduce the load on the other NIC. The other had a public IP. I had planned on using more network interfaces but didn't have enough time to set them up at that point.

- On top of all this, I used gzip compression on the .ts files, which reduced the file size drastically. (Nginx is awesome!!)
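Since the tmpfs change went in with little testing, one cheap sanity check is to confirm that the HLS directory really sits on tmpfs. The sketch below parses text in the format of Linux's /proc/mounts; the sample mount table and the /tmp/hls path are assumptions for illustration, not the actual configuration.

```python
def filesystem_type(mounts_text, path):
    """Return the filesystem type of the most specific mount point containing path."""
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        mount_point, fs_type = fields[1], fields[2]
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            # Longest mount point wins; on a tie, the later (topmost) mount wins.
            if len(mount_point) >= len(best_mount):
                best_mount, best_type = mount_point, fs_type
    return best_type


# Hypothetical mount table in /proc/mounts format.
SAMPLE = """\
/dev/sda1 / ext4 rw,relatime 0 0
tmpfs /tmp tmpfs rw,nosuid,nodev 0 0
"""

print(filesystem_type(SAMPLE, "/tmp/hls"))
```

On a real Linux machine, the first argument would be the contents of /proc/mounts instead of the sample string.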

So, everything was ready, but I was still not confident. I was not able to accurately calculate how many users the server could handle. I was hoping for something around 100–150 users at any given instant, with the others going to YouTube (we were streaming to both YouTube and our own server). I also had a backup server set up just in case this one went down.
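A back-of-the-envelope capacity estimate can at least bound the number of concurrent viewers by the network link. The sketch below assumes the link is the only bottleneck, and the average per-viewer bitrates and the 90% headroom figure are hypothetical, since the real mix of renditions viewers would pick was not known in advance.

```python
def max_viewers(link_kbps, avg_viewer_kbps, headroom_percent=90):
    """Viewers a link can serve if each pulls avg_viewer_kbps, keeping some headroom."""
    usable_kbps = link_kbps * headroom_percent // 100
    return usable_kbps // avg_viewer_kbps


# 1 Gbps link, three hypothetical average per-viewer bitrates.
for bitrate_kbps in (1500, 3000, 5000):
    viewers = max_viewers(1_000_000, bitrate_kbps)
    print(f"{bitrate_kbps} kbps per viewer -> about {viewers} viewers")
```

The spread between the cases shows how strongly the answer depends on which rendition most viewers end up on.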

Middle server being used.

On the day of the event:

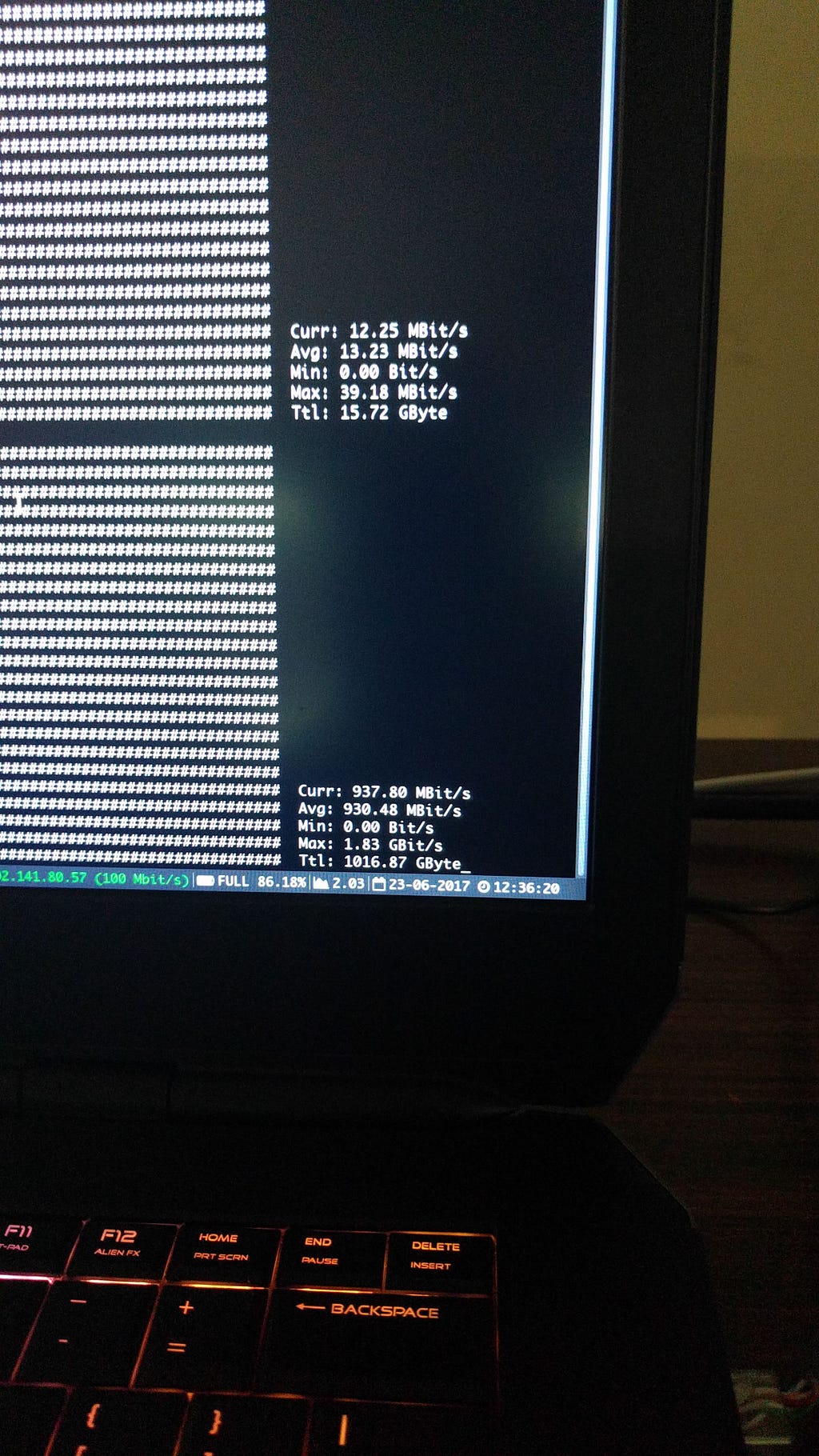

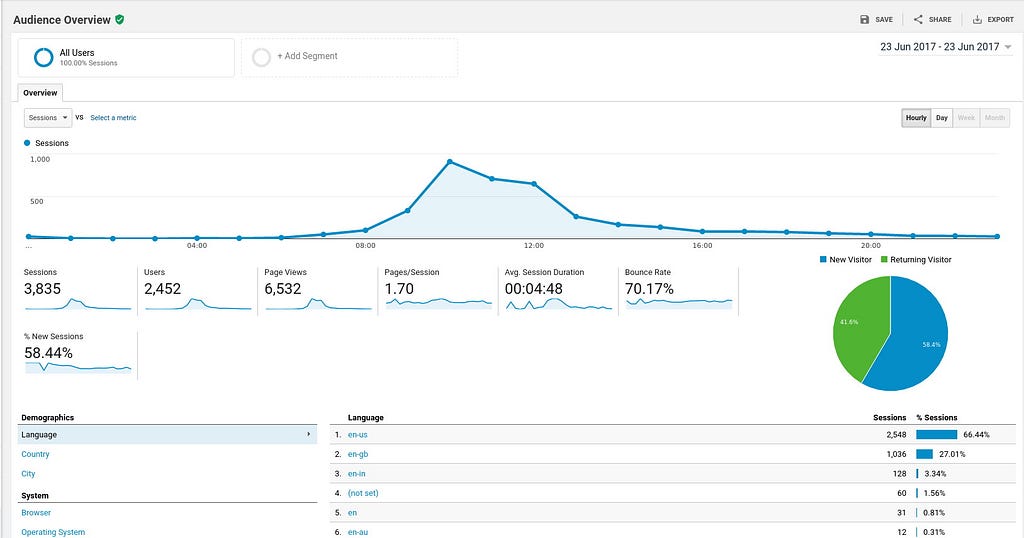

I woke up at 5 in the morning after sleeping in the auditorium and started double-checking everything (encoders and servers). I had backup plans, and backup plans for those backup plans. I was prepared for everything. I restarted the servers, closed all unnecessary applications, and installed Netdata to monitor the server statistics. The CBS team was also ready with its awesome equipment to provide me with the 1080p Full HD video feed.

The live stream started at around 10:30 AM. I was baffled by the traffic I got as soon as it started. I was not sure about the number of users at that time, but the bandwidth usage was around 600 Mbps and I had already started panicking, because I had never seen such high bandwidth usage on a server I managed, and it only kept rising. After 30 minutes I called the webmaster to ask for the Google Analytics real-time user count and was shocked when he told me it was nearly 200.

By then the server CPU usage was around 25% (thanks to those 32 cores) and the network was being used at almost full capacity (averaging around 960 Mbps). Disk read/write was essentially negligible since everything was being served directly from memory. I was worried about when the memory would fill up, since I hadn't calculated it beforehand. Netdata was really useful here: 10 minutes into the stream it notified me that, at this rate, the RAM would be full in 7 hours. Thankfully, the program was only 3–4 hours long.
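The memory question could also have been estimated by hand with a simple linear extrapolation from two memory readings. The text does not describe how Netdata arrives at its prediction, so the sketch below is only the plain arithmetic, and the sample readings (2.0 GB and 3.5 GB, ten minutes apart) are hypothetical.

```python
def hours_until_full(capacity_gb, used_start_gb, used_end_gb, interval_minutes):
    """Linearly extrapolate memory growth; return hours left, or None if not growing."""
    growth_per_hour = (used_end_gb - used_start_gb) / (interval_minutes / 60)
    if growth_per_hour <= 0:
        return None
    return (capacity_gb - used_end_gb) / growth_per_hour


# Hypothetical readings on a 64 GB machine, taken 10 minutes apart.
remaining = hours_until_full(64, 2.0, 3.5, 10)
print(f"RAM full in about {remaining:.1f} hours")
```

If the estimate comes out shorter than the event, old segments need to be cleaned up sooner or the segment directory needs more room.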

I kept staring at the multiple monitors with my eyes peeled for anomalies, and only when the program ended at around 1:00 PM did I realise that the stream had been a success.

From the feedback I received, the stream was really smooth, without much buffering or any other problems.

I really loved working on this project because I had to use my computer science knowledge to optimise the server, and I got to explore new things at the same time, which was really exciting. It was also challenging to work under so much pressure from the entire campus administration and CC. Since this is a really prestigious event for the Institute, we couldn't afford any screw-ups. Because it was the first time we used our own streaming server, I proposed that we also stream to YouTube as a backup. That turned out to be a good call, because around 170 people were watching live on YouTube too, and my server's network interface was already running at full capacity. By the end of the day the server had transferred more than 1.5 TB of data to clients.

Now that I think about it, I criticise myself: why didn't I use the 2 other network interfaces that were sitting idle? I could have used DNS to share the load across the 3 network interfaces, or used SSDs instead of hard disks so that the RAM doesn't fill up, along with a few more improvements. Still, it was fun. Maybe next year some junior will take this up and use a load balancer or implement something more innovative. This will always remain one of the most awesome experiences of my college life. BTW, I partied with the rest of the CBS team that night.

Here is the YouTube video of the convocation.

Throwaway: this cat GIF, which Google created from a video shot the same day.

Thank you for reading. Suggestions and feedback are welcome. If you know any more cool techniques for handling these kinds of scenarios, please do comment.

Adios !!

Handling Live video stream for IIT Guwahati 19th Convocation was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
