This is the second part of a series of posts about our journey with WebAssembly. If you are starting with this article, you might want to read the first part before continuing.

In the last article, we explained our motivation and the PoC we built to measure WebAssembly, along with our implementation in vanilla JS. To continue this journey and understand why WebAssembly is, in theory, faster than JavaScript, we first need to understand a little of JavaScript's history and what makes it so fast nowadays.

A little bit of history

JavaScript was created in 1995 by Brendan Eich as a language that designers could use to build dynamic interfaces easily. In other words, it wasn't built to be fast; it was created to add a behavior layer to HTML pages comfortably and straightforwardly.

When JavaScript was introduced, this was how the internet looked.

Initially, JavaScript was an interpreted language, which kept the startup phase fast: the interpreter only needs to read the first instruction, translate it into bytecode, and run it right away. For the needs of the 1990s internet, JavaScript did its job very well. The problems began when applications started to become more complex.

In the 2000s, technologies like Ajax made web applications more dynamic; Gmail in 2004 and Google Maps in 2005 were landmark uses of Ajax. This new way of building web applications put more logic on the client side. At that point JavaScript needed a jump in performance, which came in 2008 with the release of Google Chrome and its V8 engine, which compiled JavaScript to native machine code just in time (JIT) instead of interpreting it. But how do JIT compilers work?

How does the JavaScript JIT work?

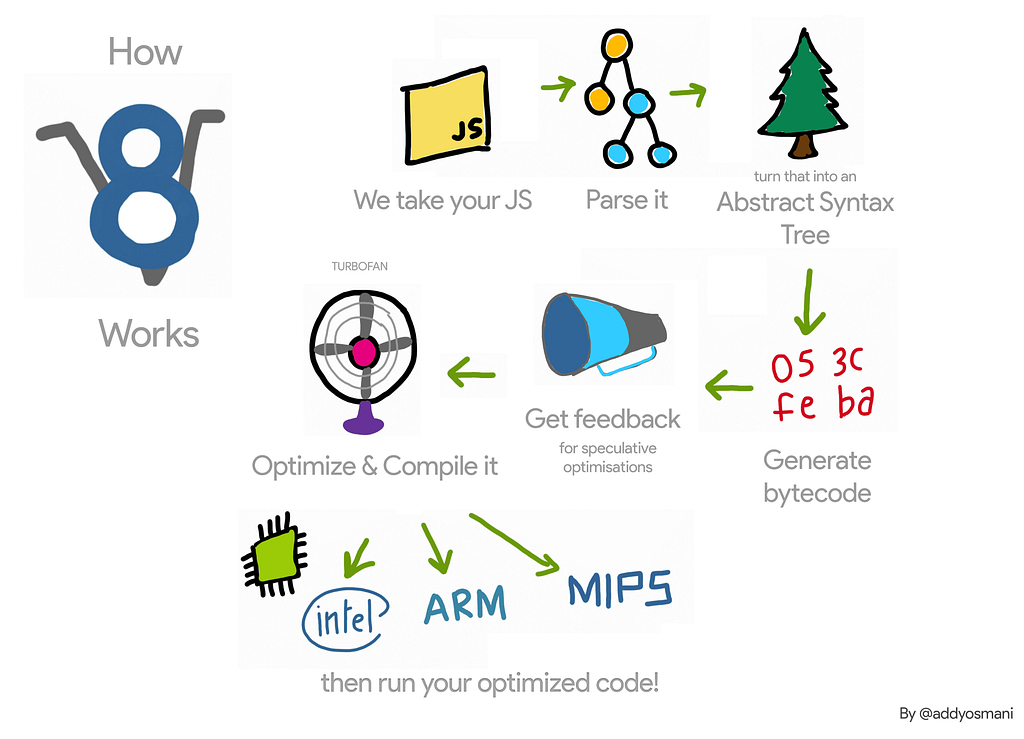

In summary, after the JavaScript code is loaded, the source is transformed into a tree representation called an Abstract Syntax Tree, or AST. Then, depending on the engine, operating system, and platform, either a baseline version of the code is compiled or bytecode is generated to be interpreted.
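To make the idea of an AST more concrete, here is a minimal sketch of what the tree for the expression x + y could look like, together with a toy interpreter that walks it. The node names follow the ESTree convention used by many JavaScript parsers; the evaluate function and the scope object are illustrative assumptions, and real engines use their own internal formats.

```javascript
// Hand-written AST for the expression `x + y`, using ESTree-style node names.
// Real engines keep their own internal node formats; this shape is illustrative.
const ast = {
  type: "BinaryExpression",
  operator: "+",
  left: { type: "Identifier", name: "x" },
  right: { type: "Identifier", name: "y" },
};

// Toy tree-walking interpreter (hypothetical): visits each node and computes a value.
function evaluate(node, scope) {
  switch (node.type) {
    case "Identifier":
      // Look the variable up in the scope object supplied by the caller.
      return scope[node.name];
    case "BinaryExpression": {
      const left = evaluate(node.left, scope);
      const right = evaluate(node.right, scope);
      if (node.operator === "+") return left + right;
      throw new Error(`Unsupported operator: ${node.operator}`);
    }
    default:
      throw new Error(`Unsupported node type: ${node.type}`);
  }
}

console.log(evaluate(ast, { x: 1, y: 2 })); // prints 3
```

Every call to evaluate repeats the same node-by-node work, which is why interpreting the same code many times gets expensive.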

Another component to watch is the profiler, which monitors code execution and collects data about it. I'll summarize how it works, keeping in mind that there are differences among browser engines.

At first, everything passes through the interpreter, which lets the code start running quickly once the AST is generated. When a piece of code is executed many times, like our getNextState() function, the interpreter loses performance because it has to interpret the same code over and over again. When this happens, the profiler marks the code as warm and the baseline compiler comes into action.
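The "warm" marking can be pictured as a simple call counter. The sketch below is only an illustration: the profile wrapper, the WARM_THRESHOLD value, and the body given to getNextState are assumptions made for the example, and real engines rely on more elaborate heuristics.

```javascript
// Hypothetical threshold: real engines use their own, more elaborate heuristics.
const WARM_THRESHOLD = 3;

// Wraps a function and counts its calls, roughly the way a profiler might.
function profile(fn) {
  const stats = { calls: 0, warm: false };
  const wrapped = (...args) => {
    stats.calls += 1;
    // Once the threshold is reached, the code becomes a candidate
    // for baseline compilation.
    if (stats.calls >= WARM_THRESHOLD) stats.warm = true;
    return fn(...args);
  };
  wrapped.stats = stats;
  return wrapped;
}

// Stand-in body for getNextState(); the real PoC function is not shown here.
const getNextState = profile((state) => state + 1);

let state = 0;
for (let i = 0; i < 5; i++) state = getNextState(state);

console.log(getNextState.stats); // { calls: 5, warm: true }
```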

Baseline Compiler

To better illustrate how JIT works, from now on we are going to use the following snippet as an example.

    function sum (x, y) {
      return x + y;
    }

    [1, 2, 3, 4, 5, '6', 7, 8, 9, 10].reduce(
      (prev, curr) => sum(prev, curr),
      0
    );

When the profiler marks a piece of code as warm, the JIT sends it to the baseline compiler, which creates a stub for that part of the code while the profiler keeps collecting data on how often the section runs and which types it uses (among other data). The next time this section is executed (return x + y; in our hypothetical example), the JIT only needs to reuse the compiled stub. When warm code is called many times in the same way (for example, with the same types), it is marked as hot.
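As a rough illustration of the type data a profiler might collect, the sketch below runs the example snippet through a wrapper that records the operand types of every sum call. The profiledSum wrapper and the feedback table are hypothetical names introduced for this example; they are not part of any engine's API.

```javascript
function sum(x, y) {
  return x + y;
}

// Hypothetical type-feedback table: maps "typeof x,typeof y" to a call count.
const feedback = new Map();

function profiledSum(x, y) {
  const key = `${typeof x},${typeof y}`;
  feedback.set(key, (feedback.get(key) || 0) + 1);
  return sum(x, y);
}

const result = [1, 2, 3, 4, 5, '6', 7, 8, 9, 10].reduce(
  (prev, curr) => profiledSum(prev, curr),
  0
);

// The first five calls add numbers (0+1 ... 10+5 = 15). The sixth call is
// sum(15, '6'), which concatenates, and every later call starts from a string.
console.log(result);                         // prints 15678910 (a string)
console.log(feedback.get("number,number"));  // prints 5
console.log(feedback.get("number,string"));  // prints 1
console.log(feedback.get("string,number"));  // prints 4
```

The run of identical number,number calls is the kind of pattern that would lead a JIT to treat the function as hot.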

Optimizing Compiler

When a piece of code is marked as hot, the optimizing compiler generates an even faster version of it. This is only possible because of assumptions the optimizing compiler makes, such as the types of the variables or the shapes of the objects used in the code. In our example, the hot version of return x + y; will assume that both x and y are numbers.

The problem comes when this code is hit with something the optimizing compiler did not expect, in our case the sum(15, '6') call, since y is a string. When this happens, the JIT concludes that its assumptions were wrong, throws the optimized code away, and returns to the baseline-compiled (or interpreted) version. This phase is called deoptimization. Sometimes it happens so often that the optimized version ends up slower than the baseline-compiled code.
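One way to picture speculation and deoptimization is a fast path protected by a type guard. The sketch below is a simplified model written in plain JavaScript, not how an engine is actually implemented; sumGeneric, sumOptimized, and the deopts counter are names assumed for the example.

```javascript
// Generic version: handles any operand types, like baseline code would.
function sumGeneric(x, y) {
  return x + y;
}

let deopts = 0;
// The "engine" currently dispatches calls to the optimized version.
let sum = sumOptimized;

// Optimized version: assumes both operands are numbers and guards that assumption.
function sumOptimized(x, y) {
  if (typeof x !== "number" || typeof y !== "number") {
    // Guard failed: discard the optimized code and fall back (deoptimization).
    deopts += 1;
    sum = sumGeneric;
    return sumGeneric(x, y);
  }
  // Fast path: a real engine would emit plain numeric addition here.
  return x + y;
}

const result = [1, 2, 3, 4, 5, '6', 7, 8, 9, 10].reduce(
  (prev, curr) => sum(prev, curr),
  0
);

console.log(result);             // prints 15678910 (a string)
console.log(deopts);             // prints 1, triggered by sum(15, '6')
console.log(sum === sumGeneric); // prints true
```

The guard itself costs a little on every call, which is part of why repeated deoptimization can make optimized code slower than the baseline version.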

Some JavaScript engines limit the number of optimization attempts and stop trying to optimize the code once that limit is reached. Others, like V8, have heuristics that prevent code from being optimized when it will most probably be deoptimized. This process is called bailing out.
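The attempt limit described above can be sketched as a small piece of bookkeeping. Everything here is an assumption made for illustration: the MAX_OPTIMIZATION_ATTEMPTS value, the state fields, and the function names do not come from any particular engine.

```javascript
// Hypothetical limit; actual engines choose their own values and heuristics.
const MAX_OPTIMIZATION_ATTEMPTS = 2;

function createJitState() {
  return { attempts: 0, optimized: false, gaveUp: false };
}

// Called when a function becomes hot: optimize unless the limit was reached.
function tryOptimize(state) {
  if (state.gaveUp) return false;
  if (state.attempts >= MAX_OPTIMIZATION_ATTEMPTS) {
    state.gaveUp = true; // stop trying to optimize this function
    return false;
  }
  state.attempts += 1;
  state.optimized = true;
  return true;
}

// Called when a guard in the optimized code fails.
function deoptimize(state) {
  state.optimized = false;
}

const state = createJitState();
for (let round = 0; round < 4; round++) {
  // Simulate a function whose optimized code always hits an unexpected type.
  if (tryOptimize(state)) deoptimize(state);
}

console.log(state); // { attempts: 2, optimized: false, gaveUp: true }
```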

So, in summary, the phases of a JIT compiler can be described as:

- Parse

- Compile

- Optimize/deoptimize

- Execution

- Garbage collection

Example of JIT phases on V8 by Addy Osmani

All these advancements brought by JIT compilers have made JavaScript much faster than it was before 2008, when the JIT arrived in Google Chrome. Today's applications are more robust and sophisticated thanks to the speed of JavaScript engines. But what will give us a performance jump like the one we had when JIT was introduced? We will discuss that in the next article, where we look at WebAssembly and what makes it potentially faster than JavaScript.

Links

- Portuguese version of this article: https://medium.com/p/eef572899b5c

- A crash course in JIT Compilers (in English): https://hacks.mozilla.org/2017/02/a-crash-course-in-just-in-time-jit-compilers/

- JavaScript Start-up Performance (in English): https://medium.com/reloading/javascript-start-up-performance-69200f43b201

- A re-introduction to JavaScript (JS tutorial): https://developer.mozilla.org/en-US/docs/Web/JavaScript/A_re-introduction_to_JavaScript

WebAssembly, the journey — JIT Compilers was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
